Managing table data

This document describes how to manage table data in BigQuery. You can work with BigQuery table data in the following ways:

- Load data into a table

- Append to or overwrite table data

- Browse (or preview) table data

- Query table data

- Modify table data using data manipulation language (DML)

- Copy table data

- Export table data

For information on managing table schemas, see Modifying table schemas.

Before you begin

Grant roles that give the necessary permissions to users who need to perform each task in this document. Permissions required (if any) to perform a task are listed in the "Required permissions" section of the task.

Loading data into a table

You can load data when you create a table, or you can create an empty table and load the data later. When you load data, you can use schema auto-detect for supported data formats, or you can specify the schema.

For more information on loading data, see the documentation for your source data's format and location:

For more information on loading data from Cloud Storage, see:

For more information on loading data from a local source, see Loading data from local files.

Appending to and overwriting table data

You can overwrite table data using a load or query operation. You can append additional data to an existing table by performing a load-append operation or by appending query results to the table.

For more information on appending to or overwriting a table when loading data, see the documentation for your source data format:

- Appending to or overwriting a table with Avro data

- Appending to or overwriting a table with CSV data

- Appending to or overwriting a table with JSON data

- Appending to or overwriting a table with Parquet data

- Appending to or overwriting a table with ORC data

- Appending to or overwriting a table with Datastore data

To append to or overwrite a table using query results, specify a destination table and set the write disposition to either:

- Append to table — Appends the query results to an existing table.

- Overwrite table — Overwrites an existing table with the same name using the query results.

You can use the following query to append records from one table to another:

INSERT INTO <projectID>.<datasetID>.<table1> ( <column2>, <column3>) (SELECT * FROM <projectID>.<datasetID>.<table2>)

For more information on using query results to append to or overwrite data, see Writing query results.

Browsing table data

You can browse or read table data by:

- Using the Google Cloud console

- Using the bq command-line tool's

bq headcommand - Calling the

tabledata.listAPI method - Using the client libraries

Required permissions

To read table and partition data, you need the bigquery.tables.getData Identity and Access Management (IAM) permission.

Each of the following predefined IAM roles includes the permissions that you need in order to browse table and partition data:

roles/bigquery.dataViewerroles/bigquery.dataEditorroles/bigquery.dataOwnerroles/bigquery.admin

If you have the bigquery.datasets.create permission, you can browse data in the tables and partitions of the datasets you create.

For more information on IAM roles and permissions in BigQuery, see Predefined roles and permissions.

Browsing table data

To browse table data:

Console

In the Google Cloud console, open the BigQuery page.

In the Explorer panel, expand your project and select a dataset.

Click a table in the list.

Click Details and note the value in Number of rows. You may need this value to control the starting point for your results using the bq command-line tool or API.

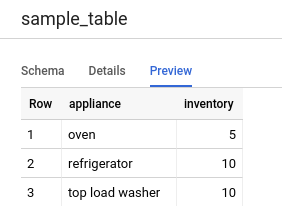

Click Preview. A sample set of data is displayed.

Command-line

Issue the bq head command with the --max_rows flag to list all columns in

a particular number of table rows. If --max_rows is not specified, the default

is 100.

To browse a subset of columns in the table (including nested and repeated

columns), use the --selected_fields flag and enter the columns as a comma-

separated list.

To specify the number of rows to skip before displaying table data, use the

--start_row=integer flag (or the -s shortcut). The

default value is 0. You can retrieve the number of rows in a table by using

the bq show command to retrieve table information.

If the table you're browsing is in a project other than your default project,

add the project ID to the command in the following format:

project_id:dataset.table.

bq head \ --max_rows integer1 \ --start_row integer2 \ --selected_fields "columns" \ project_id:dataset.table

Where:

- integer1 is the number of rows to display.

- integer2 is the number of rows to skip before displaying data.

- columns is a comma-separated list of columns.

- project_id is your project ID.

- dataset is the name of the dataset containing the table.

- table is the name of the table to browse.

Examples:

Enter the following command to list all columns in the first 10 rows in

mydataset.mytable. mydataset is in your default project.

bq head --max_rows=10 mydataset.mytable

Enter the following command to list all columns in the first 100 rows in

mydataset.mytable. mydataset is in myotherproject, not your default

project.

bq head myotherproject:mydataset.mytable

Enter the following command to display only field1 and field2 in

mydataset.mytable. The command uses the --start_row flag to skip to row 100.

mydataset.mytable is in your default project.

bq head --start_row 100 --selected_fields "field1,field2" mydataset.mytable

Because the bq head command does not create a query job, bq head commands do

not appear in your query history, and you are not charged for them.

API

Browse through a table's data by calling tabledata.list.

Specify the name of the table in the tableId parameter.

Configure these optional parameters to control the output:

maxResults— Maximum number of results to returnselectedFields— Comma-separated list of columns to return; If unspecified, all columns are returnedstartIndex— Zero-based index of the starting row to read

Values are returned wrapped in a JSON object that you must parse, as described

in the tabledata.list

reference documentation.

C#

Before trying this sample, follow the C# setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery C# API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Go

Before trying this sample, follow the Go setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Go API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

The Cloud Client Libraries for Go automatically paginates by default, so you do not need to implement pagination yourself, for example:

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Node.js

Before trying this sample, follow the Node.js setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Node.js API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

The Cloud Client Libraries for Node.js automatically paginates by default, so you do not need to implement pagination yourself, for example:

PHP

Before trying this sample, follow the PHP setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery PHP API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Pagination happens automatically in the Cloud Client Libraries for PHP

using the generator function rows, which fetches the next page of

results during iteration.

Python

Before trying this sample, follow the Python setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Python API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Ruby

Before trying this sample, follow the Ruby setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Ruby API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Pagination happens automatically in the Cloud Client Libraries for Ruby

using Table#data and Data#next.

Querying table data

You can query BigQuery data by using one of the following query job types:

Interactive query jobs. By default, BigQuery runs queries as interactive query jobs, which are intended to start executing as quickly as possible.

Batch query jobs. Batch queries have lower priority than interactive queries. When a project or reservation is using all of its available compute resources, batch queries are more likely to be queued and remain in the queue. After a batch query starts running, the batch query runs the same as an interactive query. For more information, see query queues.

Continuous query jobs. With these jobs, the query runs continuously, letting you analyze incoming data in BigQuery in real time and then write the results to a BigQuery table, or export the results to Bigtable or Pub/Sub. You can use this capability to perform time sensitive tasks, such as creating and immediately acting on insights, applying real time machine learning (ML) inference, and building event-driven data pipelines.

You can run query jobs by using the following methods:

- Compose and run a query in the Google Cloud console.

- Run the

bq querycommand in the bq command-line tool. - Programmatically call the

jobs.queryorjobs.insertmethod in the BigQuery REST API. - Use the BigQuery client libraries.

For more information on querying BigQuery tables, see Introduction to querying BigQuery data.

In addition to querying data stored in BigQuery tables, you can query data stored externally. For more information, see Introduction to external data sources.

Modifying table data

You can modify data in a table using data manipulation language (DML) statements in SQL. DML statements let you update, merge, insert, and delete rows in tables. For syntax reference and examples of each type of DML statement, see Data manipulation language statements in GoogleSQL.

The legacy SQL dialect does not support DML statements. To update or delete data using legacy SQL, you must delete the table and then recreate it with new data. Alternatively, you can write a query that modifies the data and write the query results to a new, destination table.

Copying table data

You can copy a table by:

- Using the Google Cloud console

- Using the bq command-line tool's

bq cpcommand - Calling the

jobs.insertAPI method and configuring a copy job - Using the client libraries

For more information on copying tables, see Copying a table.

Exporting table data

You can export table data to a Cloud Storage bucket in CSV, JSON, Avro, or Parquet (Preview) format. Exporting to your local machine is not supported; however, you can download and save query results using the Google Cloud console.

For more information, see Exporting table data.

Table security

To control access to tables in BigQuery, see Control access to resources with IAM.

What's next

- For more information on loading data, see Introduction to loading data.

- For more information on querying data, see Introduction to querying BigQuery data.

- For more information on modifying table schemas, see Modifying table schemas.

- For more information on creating and using tables, see Creating and using tables.

- For more information on managing tables, see Managing tables.