Run a query

This document shows you how to run a query in BigQuery and understand how much data the query will process before execution by performing a dry run.

Types of queries

You can query BigQuery data by using one of the following query job types:

Interactive query jobs. By default, BigQuery runs queries as interactive query jobs, which are intended to start executing as quickly as possible.

Batch query jobs. Batch queries have lower priority than interactive queries. When a project or reservation is using all of its available compute resources, batch queries are more likely to be queued and remain in the queue. After a batch query starts running, the batch query runs the same as an interactive query. For more information, see query queues.

Continuous query jobs. With these jobs, the query runs continuously, letting you analyze incoming data in BigQuery in real time and then write the results to a BigQuery table, or export the results to Bigtable or Pub/Sub. You can use this capability to perform time sensitive tasks, such as creating and immediately acting on insights, applying real time machine learning (ML) inference, and building event-driven data pipelines.

You can run query jobs by using the following methods:

- Compose and run a query in the Google Cloud console.

- Run the

bq querycommand in the bq command-line tool. - Programmatically call the

jobs.queryorjobs.insertmethod in the BigQuery REST API. - Use the BigQuery client libraries.

BigQuery saves query results to either a temporary table (default) or permanent table. When you specify a permanent table as the destination table for the results, you can choose whether to append or overwrite an existing table, or create a new table with a unique name.

Required roles

To get the permissions that you need to run a query job, ask your administrator to grant you the following IAM roles:

-

BigQuery Job User (

roles/bigquery.jobUser) on the project. -

BigQuery Data Viewer (

roles/bigquery.dataViewer) on all tables and views that your query references. To query views, you also need this role on all underlying tables and views. If you're using authorized views or authorized datasets, you don't need access to the underlying source data.

For more information about granting roles, see Manage access to projects, folders, and organizations.

These predefined roles contain the permissions required to run a query job. To see the exact permissions that are required, expand the Required permissions section:

Required permissions

The following permissions are required to run a query job:

-

bigquery.jobs.createon the project from which the query is being run, regardless of where the data is stored. -

bigquery.tables.getDataon all tables and views that your query references. To query views, you also need this permission on all underlying tables and views. If you're using authorized views or authorized datasets, you don't need access to the underlying source data.

You might also be able to get these permissions with custom roles or other predefined roles.

Troubleshooting

Access Denied: Project [project_id]: User does not have bigquery.jobs.create

permission in project [project_id].

This error occurs when a principal lacks permission to create a query jobs in the project.

Resolution: An administrator must grant you the bigquery.jobs.create

permission on the project you are querying. This permission is required in

addition to any permission required to access the queried data.

For more information about BigQuery permissions, see Access control with IAM.

Run an interactive query

To run an interactive query, select one of the following options:

Console

Go to the BigQuery page.

Click SQL query.

In the query editor, enter a valid GoogleSQL query.

For example, query the BigQuery public dataset

usa_namesto determine the most common names in the United States between the years 1910 and 2013:SELECT name, gender, SUM(number) AS total FROM `bigquery-public-data.usa_names.usa_1910_2013` GROUP BY name, gender ORDER BY total DESC LIMIT 10;Alternatively, you can use the Reference panel to construct new queries.

Optional: To automatically display code suggestions when you type a query, click More, and then select SQL autocomplete. If you don't need autocomplete suggestions, deselect SQL autocomplete. This also turns off the project name autofill suggestions.

Optional: To select additional query settings, click More, and then click Query settings.

Click Run.

If you don't specify a destination table, the query job writes the output to a temporary (cache) table.

You can now explore the query results in the Results tab of the Query results pane.

Optional: To sort the query results by column, click Open sort menu next to the column name and select a sort order. If the estimated bytes processed for the sort is more than zero, then the number of bytes is displayed at the top of the menu.

Optional: To see visualization of your query results, go to the Visualization tab. You can zoom in or zoom out of the chart, download the chart as a PNG file, or toggle the legend visibility.

In the Visualization configuration pane, you can change the visualization type and configure the measures and dimensions of the visualization. Fields in this pane are prefilled with the initial configuration inferred from the destination table schema of the query. The configuration is preserved between following query runs in the same query editor.

For Line, Bar, or Scatter visualizations, the supported dimensions are

INT64,FLOAT64,NUMERIC,BIGNUMERIC,TIMESTAMP,DATE,DATETIME,TIME, andSTRINGdata types, while the supported measures areINT64,FLOAT64,NUMERIC, andBIGNUMERICdata types.If your query results include the

GEOGRAPHYtype, then Map is the default visualization type, which lets you visualize your results on an interactive map.Optional: In the JSON tab, you can explore the query results in the JSON format, where the key is the column name and the value is the result for that column.

bq

-

In the Google Cloud console, activate Cloud Shell.

At the bottom of the Google Cloud console, a Cloud Shell session starts and displays a command-line prompt. Cloud Shell is a shell environment with the Google Cloud CLI already installed and with values already set for your current project. It can take a few seconds for the session to initialize.

Use the

bq querycommand. In the following example, the--use_legacy_sql=falseflag lets you use GoogleSQL syntax.bq query \ --use_legacy_sql=false \ 'QUERY'

Replace QUERY with a valid GoogleSQL query. For example, query the BigQuery public dataset

usa_namesto determine the most common names in the United States between the years 1910 and 2013:bq query \ --use_legacy_sql=false \ 'SELECT name, gender, SUM(number) AS total FROM `bigquery-public-data.usa_names.usa_1910_2013` GROUP BY name, gender ORDER BY total DESC LIMIT 10;'The query job writes the output to a temporary (cache) table.

Optionally, you can specify the destination table and location for the query results. To write the results to an existing table, include the appropriate flag to append (

--append_table=true) or overwrite (--replace=true) the table.bq query \ --location=LOCATION \ --destination_table=TABLE \ --use_legacy_sql=false \ 'QUERY'

Replace the following:

LOCATION: the region or multi-region for the destination table—for example,

USIn this example, the

usa_namesdataset is stored in the US multi-region location. If you specify a destination table for this query, the dataset that contains the destination table must also be in the US multi-region. You cannot query a dataset in one location and write the results to a table in another location.You can set a default value for the location using the .bigqueryrc file.

TABLE: a name for the destination table—for example,

myDataset.myTableIf the destination table is a new table, then BigQuery creates the table when you run your query. However, you must specify an existing dataset.

If the table isn't in your current project, then add the Google Cloud project ID using the format

PROJECT_ID:DATASET.TABLE—for example,myProject:myDataset.myTable. If--destination_tableis unspecified, a query job is generated that writes the output to a temporary table.

API

To run a query using the API, insert a new job

and populate the query job configuration property. Optionally specify your

location in the location property in the jobReference section of the

job resource.

Poll for results by calling

getQueryResults.

Poll until jobComplete equals true. Check for errors and warnings in the

errors list.

C#

Before trying this sample, follow the C# setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery C# API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Go

Before trying this sample, follow the Go setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Go API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

To run a query with a proxy, see Configuring a proxy.

Node.js

Before trying this sample, follow the Node.js setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Node.js API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

PHP

Before trying this sample, follow the PHP setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery PHP API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Python

Before trying this sample, follow the Python setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Python API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Ruby

Before trying this sample, follow the Ruby setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Ruby API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Run a batch query

To run a batch query, select one of the following options:

Console

Go to the BigQuery page.

Click SQL query.

In the query editor, enter a valid GoogleSQL query.

For example, query the BigQuery public dataset

usa_namesto determine the most common names in the United States between the years 1910 and 2013:SELECT name, gender, SUM(number) AS total FROM `bigquery-public-data.usa_names.usa_1910_2013` GROUP BY name, gender ORDER BY total DESC LIMIT 10;Click More, and then click Query settings.

In the Resource management section, select Batch.

Optional: Adjust your query settings.

Click Save.

Click Run.

If you don't specify a destination table, the query job writes the output to a temporary (cache) table.

bq

-

In the Google Cloud console, activate Cloud Shell.

At the bottom of the Google Cloud console, a Cloud Shell session starts and displays a command-line prompt. Cloud Shell is a shell environment with the Google Cloud CLI already installed and with values already set for your current project. It can take a few seconds for the session to initialize.

Use the

bq querycommand and specify the--batchflag. In the following example, the--use_legacy_sql=falseflag lets you use GoogleSQL syntax.bq query \ --batch \ --use_legacy_sql=false \ 'QUERY'

Replace QUERY with a valid GoogleSQL query. For example, query the BigQuery public dataset

usa_namesto determine the most common names in the United States between the years 1910 and 2013:bq query \ --batch \ --use_legacy_sql=false \ 'SELECT name, gender, SUM(number) AS total FROM `bigquery-public-data.usa_names.usa_1910_2013` GROUP BY name, gender ORDER BY total DESC LIMIT 10;'The query job writes the output to a temporary (cache) table.

Optionally, you can specify the destination table and location for the query results. To write the results to an existing table, include the appropriate flag to append (

--append_table=true) or overwrite (--replace=true) the table.bq query \ --batch \ --location=LOCATION \ --destination_table=TABLE \ --use_legacy_sql=false \ 'QUERY'

Replace the following:

LOCATION: the region or multi-region for the destination table—for example,

USIn this example, the

usa_namesdataset is stored in the US multi-region location. If you specify a destination table for this query, the dataset that contains the destination table must also be in the US multi-region. You cannot query a dataset in one location and write the results to a table in another location.You can set a default value for the location using the .bigqueryrc file.

TABLE: a name for the destination table—for example,

myDataset.myTableIf the destination table is a new table, then BigQuery creates the table when you run your query. However, you must specify an existing dataset.

If the table isn't in your current project, then add the Google Cloud project ID using the format

PROJECT_ID:DATASET.TABLE—for example,myProject:myDataset.myTable. If--destination_tableis unspecified, a query job is generated that writes the output to a temporary table.

API

To run a query using the API, insert a new job

and populate the query job configuration property. Optionally specify your

location in the location property in the jobReference section of the

job resource.

When you populate the query job properties, include the

configuration.query.priority property and set the value to BATCH.

Poll for results by calling

getQueryResults.

Poll until jobComplete equals true. Check for errors and warnings in the

errors list.

Go

Before trying this sample, follow the Go setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Go API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Java

To run a batch query, set the query priority to QueryJobConfiguration.Priority.BATCH when creating a QueryJobConfiguration.

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Node.js

Before trying this sample, follow the Node.js setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Node.js API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Python

Before trying this sample, follow the Python setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Python API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Run a continuous query

Running a continuous query job requires additional configuration. For more information, see Create continuous queries.

Use the Reference panel

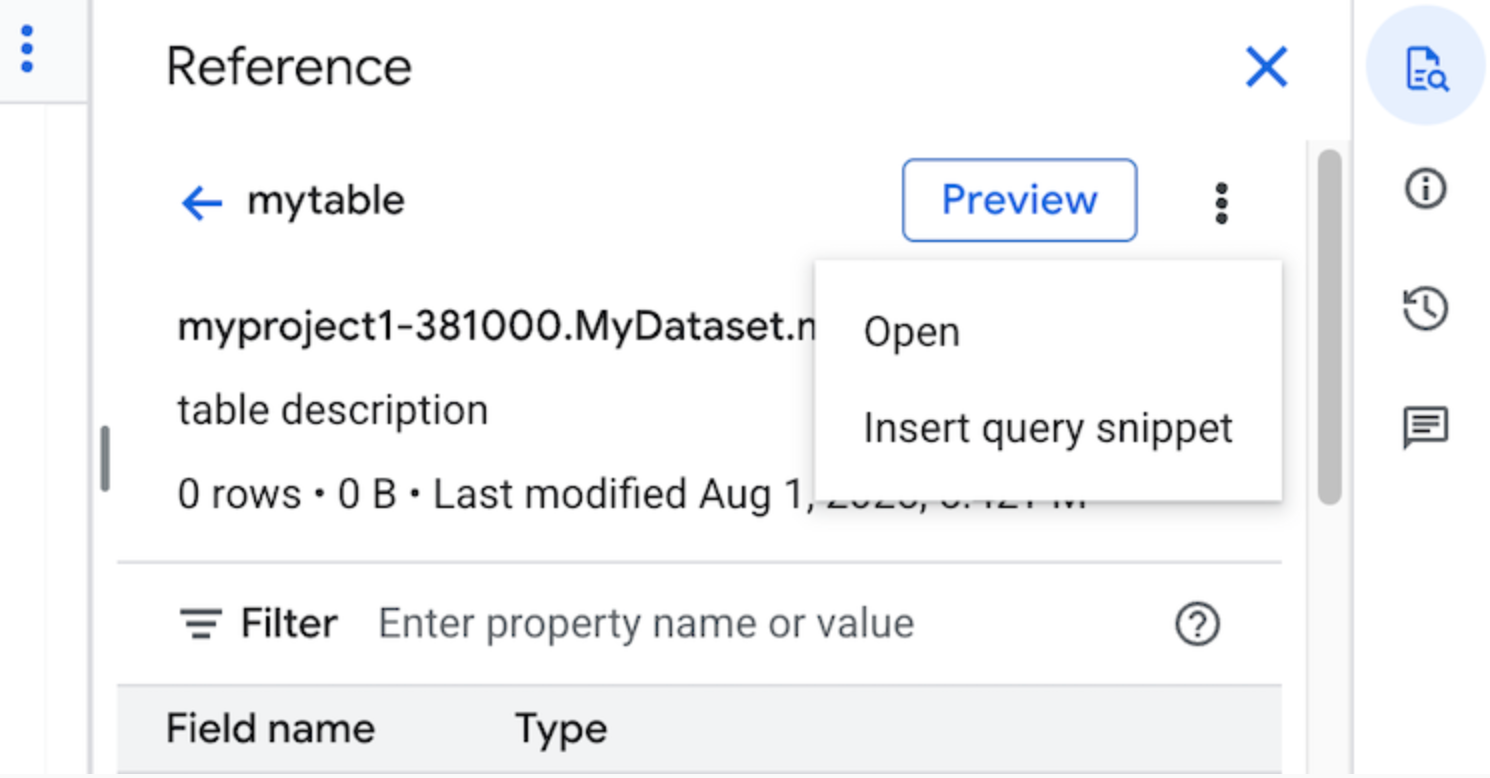

In the query editor, the Reference panel dynamically displays context-aware information about tables, snapshots, views, and materialized views. The panel lets you preview the schema details of these resources, or open them in a new tab. You can also use the Reference panel to construct new queries or edit existing queries by inserting query snippets or field names.

To construct a new query using the Reference panel, follow these steps:

In the Google Cloud console, go to the BigQuery page.

Click SQL query.

Click quick_reference_all Reference.

Click a recent or starred table or view. You can also use the search bar to find tables and views.

Click View actions, and then click Insert query snippet.

Optional: You can preview the schema details of the table or view, or open them in a new tab.

You can now either edit the query manually or insert field names directly into your query. To insert a field name, point to and click a place in the query editor where you want to insert the field name, and then click the field name in the Reference panel.

Query settings

When you run a query, you can specify the following settings:

A destination table for the query results.

The priority of the job.

Whether to use cached query results.

The job timeout in milliseconds.

Whether to use session mode.

The type of encryption to use.

The maximum number of bytes billed for the query.

The dialect of SQL to use.

The location in which to run the query. The query must run in the same location as any tables referenced in the query.

The reservation to run your query in.

Optional job creation mode

Optional job creation mode can improve the overall latency of queries that run

for a short duration, such as those from dashboards or data exploration

workloads. This mode executes the query and returns the results inline for

SELECT statements without requiring the use of

jobs.getQueryResults

to fetch the results. Queries using optional job creation mode don't create a

job when executed unless BigQuery determines that a job creation

is necessary to complete the query.

To enable optional job creation mode, set the jobCreationMode field of the

QueryRequest

instance to JOB_CREATION_OPTIONAL in the

jobs.query request body.

When the value of this field is set to JOB_CREATION_OPTIONAL,

BigQuery determines if the query can use the optional job

creation mode. If so, BigQuery executes the query and returns

all results in the rows field of the response. Since a job isn't created for

this query, BigQuery doesn't return a jobReference in the

response body. Instead, it returns a queryId field, which you can use to get

insights about the query using the INFORMATION_SCHEMA.JOBS

view. Since no

job is created, there is no jobReference that can be passed to

jobs.get and

jobs.getQueryResults

APIs to lookup these queries.

If BigQuery determines that a job is required to complete the

query, a jobReference is returned. You can inspect the job_creation_reason

field in INFORMATION_SCHEMA.JOBS

view to determine

the reason that a job was created for the query. In this case, you should use

jobs.getQueryResults

to fetch the results when the query is complete.

When you use the JOB_CREATION_OPTIONAL value, the jobReference field might

not be present in the response. Check if the field exists before accessing it.

When JOB_CREATION_OPTIONAL is specified for multi-statement queries (scripts),

BigQuery might optimize the execution process. As part of this

optimization, BigQuery might determine that it can complete the

script by creating fewer job resources than the number of individual statements,

potentially even executing the entire script without creating any job at all.

This optimization depends on BigQuery's assessment of the script, and the

optimization might not be applied in every case. The optimization is fully

automated by the system. No user controls or actions are required.

To run a query using optional job creation mode, select one of the following options:

Console

Go to the BigQuery page.

Click SQL query.

In the query editor, enter a valid GoogleSQL query.

For example, query the BigQuery public dataset

usa_namesto determine the most common names in the United States between the years 1910 and 2013:SELECT name, gender, SUM(number) AS total FROM `bigquery-public-data.usa_names.usa_1910_2013` GROUP BY name, gender ORDER BY total DESC LIMIT 10;Click More, and then choose the Optional job creation query mode. To confirm this choice, click Confirm.

Click Run.

bq

-

In the Google Cloud console, activate Cloud Shell.

At the bottom of the Google Cloud console, a Cloud Shell session starts and displays a command-line prompt. Cloud Shell is a shell environment with the Google Cloud CLI already installed and with values already set for your current project. It can take a few seconds for the session to initialize.

Use the

bq querycommand and specify the--job_creation_mode=JOB_CREATION_OPTIONALflag. In the following example, the--use_legacy_sql=falseflag lets you use GoogleSQL syntax.bq query \ --rpc=true \ --use_legacy_sql=false \ --job_creation_mode=JOB_CREATION_OPTIONAL \ --location=LOCATION \ 'QUERY'

Replace QUERY with a valid GoogleSQL query, and replace LOCATION with a valid region where the dataset is located. For example, query the BigQuery public dataset

usa_namesto determine the most common names in the United States between the years 1910 and 2013:bq query \ --rpc=true \ --use_legacy_sql=false \ --job_creation_mode=JOB_CREATION_OPTIONAL \ --location=us \ 'SELECT name, gender, SUM(number) AS total FROM `bigquery-public-data.usa_names.usa_1910_2013` GROUP BY name, gender ORDER BY total DESC LIMIT 10;'The query job returns the output inline in the response.

API

To run a query in optional job creation mode using the API, run a query synchronously

and populate the QueryRequest property. Include the jobCreationMode property and set its value to JOB_CREATION_OPTIONAL.

Check the response. If jobComplete equals true and jobReference is empty, read the results from the rows field. You can also get the queryId from the response.

If jobReference is present, you can check jobCreationReason for why a job was created by BigQuery. Poll for results by calling

getQueryResults.

Poll until jobComplete equals true. Check for errors and warnings in the

errors list.

Java

Available version: 2.51.0 and up

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

To run a query with a proxy, see Configuring a proxy.

Python

Available version: 3.34.0 and up

Before trying this sample, follow the Python setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Python API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Node

Available version: 8.1.0 and up

Before trying this sample, follow the Node.js setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Node.js API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Go

Available version: 1.69.0 and up

Before trying this sample, follow the Go setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Go API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

JDBC Driver

Available version: JDBC v1.6.1 and up

Requires setting JobCreationMode=2 in the connection string.

jdbc:bigquery://https://www.googleapis.com/bigquery/v2:443;JobCreationMode=2;Location=US;

ODBC Driver

Available version: ODBC v3.0.7.1016 and up

Requires setting JobCreationMode=2 in the .ini file.

[ODBC Data Sources] Sample DSN=Simba Google BigQuery ODBC Connector 64-bit [Sample DSN] JobCreationMode=2

Quotas

For information about quotas regarding interactive and batch queries, see Query jobs.

Monitor queries

You can get information about queries as they are executing by using the

jobs explorer or by querying the

INFORMATION_SCHEMA.JOBS_BY_PROJECT view.

Dry run

A dry run in BigQuery provides the following information:

- estimate of charges in on-demand mode

- validation of your query

- approximate bytes processed by your query in capacity mode

Dry runs don't use query slots, and you are not charged for performing a dry run. You can use the estimate returned by a dry run to calculate query costs in the pricing calculator.

Perform a dry run

To perform a dry run, do the following:

Console

Go to the BigQuery page.

Enter your query in the query editor.

If the query is valid, then a check mark automatically appears along with the amount of data that the query will process. If the query is invalid, then an exclamation point appears along with an error message.

bq

Enter a query like the following using the --dry_run flag.

bq query \ --use_legacy_sql=false \ --dry_run \ 'SELECT COUNTRY, AIRPORT, IATA FROM `project_id`.dataset.airports LIMIT 1000'

For a valid query, the command produces the following response:

Query successfully validated. Assuming the tables are not modified, running this query will process 10918 bytes of data.

API

To perform a dry run by using the API, submit a query job with

dryRun set to true in the

JobConfiguration

type.

Go

Before trying this sample, follow the Go setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Go API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Node.js

Before trying this sample, follow the Node.js setup instructions in the

BigQuery quickstart using

client libraries.

For more information, see the

BigQuery Node.js API

reference documentation.

To authenticate to BigQuery, set up Application Default Credentials.

For more information, see

Set up authentication for client libraries.

PHP

Before trying this sample, follow the PHP setup instructions in the

BigQuery quickstart using

client libraries.

For more information, see the

BigQuery PHP API

reference documentation.

To authenticate to BigQuery, set up Application Default Credentials.

For more information, see

Set up authentication for client libraries.

Python

Set the

QueryJobConfig.dry_run

property to True.

Client.query()

always returns a completed

QueryJob

when provided a dry run query configuration.

Before trying this sample, follow the Python setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Python API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

What's next

- Learn how to manage query jobs.

- Learn how to view query history.

- Learn how to save and share queries.

- Learn about query queues.

- Learn how to write query results.