Event-Driven Transfers

You can use the BigQuery Data Transfer Service to create event-driven transfers that automatically load data based on event notifications. We recommend event-driven transfers if you require incremental data ingestion that optimizes cost efficiency.

When you set up event-driven transfers, there can be a delay of a few minutes between data transfers. If you require immediate data availability, we recommend using the Storage Write API, which streams data directly into BigQuery with the lowest possible latency. The Storage Write API provides real-time updates for the most demanding use cases.

When choosing between the two, consider whether you need to prioritize cost-effective incremental batch ingestion with event-driven transfers, or whether you prefer the flexibility of the Storage Write API.

Data sources with event-driven transfer support

BigQuery Data Transfer Service can use event-driven transfers with the following data sources:

Limitations

Event-driven transfers to BigQuery are subject to the following limitations:

- After an event-driven transfer is triggered, the BigQuery Data Transfer Service waits up to 10 minutes before it triggers the next transfer run, regardless of whether an event arrives within that time.

- Event-driven transfers don't support runtime parameters for source URI or data path.

- The same Pub/Sub subscription cannot be reused by multiple event-driven transfer configurations.
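Because a Pub/Sub subscription can back only one event-driven transfer configuration, it can help to check your own inventory for reuse before you create a new configuration. The following is a minimal sketch, not part of the BigQuery Data Transfer Service: the function name `find_reused_subscriptions` and the two-column input format (configuration name, then subscription name) are assumptions made for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a gcloud or BigQuery Data Transfer Service command).
# Reads one "CONFIG_NAME SUBSCRIPTION_NAME" pair per line on stdin and prints
# every subscription name that is assigned to more than one configuration.
find_reused_subscriptions() {
  awk 'NF >= 2 { count[$2]++ }
       END { for (s in count) if (count[s] > 1) print s }' | sort
}
```

An empty result means that no subscription in the inventory is shared between configurations.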

Set up a Cloud Storage event-driven transfer

Event-driven transfers from Cloud Storage use Pub/Sub notifications to know when objects in the source bucket have been modified or added. When using incremental transfer mode, deleting an object at the source bucket does not delete the associated data in the destination BigQuery table.

Before you begin

Before configuring a Cloud Storage event-driven transfer, you must perform the following steps:

Enable the Pub/Sub API for the project that receives notifications.

If you have both the Cloud Storage Admin (roles/storage.admin) and Pub/Sub Admin (roles/pubsub.admin) roles, you can proceed to Create an event-driven transfer configuration.

If you don't have both roles, either ask your administrator to grant you the roles/storage.admin and roles/pubsub.admin roles, or ask your administrator to complete the steps in the Configure Pub/Sub notifications in Cloud Storage and Configure Service Agent permissions sections that follow, and then use the preconfigured Pub/Sub subscription to create an event-driven transfer configuration.

Detailed permissions required to set up event-driven transfer config notifications:

- If you plan to create topics and subscriptions for publishing notifications, you must have the pubsub.topics.create and pubsub.subscriptions.create permissions.

- Whether you plan to use new or existing topics and subscriptions, you must have the following permissions. If you have already created topics and subscriptions in Pub/Sub, then you likely already have these permissions.

- You must have the following permissions on the Cloud Storage bucket for which you want to configure Pub/Sub notifications:

  - storage.buckets.get

  - storage.buckets.update

Together, the pubsub.admin and storage.admin predefined IAM roles have all the required permissions to configure a Cloud Storage event-driven transfer. For more information, see Pub/Sub access control.
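The first prerequisite above, enabling the Pub/Sub API, can also be done from the gcloud CLI. The following is a minimal sketch: `gcloud services enable` is the standard command for enabling an API, while the `run` wrapper, the `DRY_RUN` variable, and the `enable_pubsub_api` function name are conventions of this example only and are not gcloud features.

```shell
#!/usr/bin/env bash
# When DRY_RUN is set, print the command instead of executing it.
# DRY_RUN is a convention of this sketch, not a gcloud option.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Enable the Pub/Sub API for the project that receives notifications.
# $1: the ID of that project (placeholder supplied by the caller).
enable_pubsub_api() {
  run gcloud services enable pubsub.googleapis.com --project="$1"
}
```

Calling `DRY_RUN=1 enable_pubsub_api my-project` prints the command for review without changing the project. Calling the function without `DRY_RUN` runs it.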

Configure Pub/Sub notifications in Cloud Storage

Ensure that you've satisfied the Prerequisites for using Pub/Sub with Cloud Storage.

Apply a notification configuration to your Cloud Storage bucket:

gcloud storage buckets notifications create gs://BUCKET_NAME --topic=TOPIC_NAME --event-types=OBJECT_FINALIZE

Replace the following:

- BUCKET_NAME: The name of the Cloud Storage bucket that you want to trigger file notification events

- TOPIC_NAME: The name of the Pub/Sub topic that you want to receive the file notification events

You can also add a notification configuration using other methods besides the gcloud CLI. For more information, see Apply a notification configuration.

Verify that the Pub/Sub notification is correctly configured for Cloud Storage. Use the gcloud storage buckets notifications list command:

gcloud storage buckets notifications list gs://BUCKET_NAME

If successful, the response looks similar to the following:

    etag: '132'
    id: '132'
    kind: storage#notification
    payload_format: JSON_API_V1
    selfLink: https://www.googleapis.com/storage/v1/b/my-bucket/notificationConfigs/132
    topic: //pubsub.googleapis.com/projects/my-project/topics/my-bucket

Create a pull subscription for the topic:

gcloud pubsub subscriptions create SUBSCRIPTION_ID --topic=TOPIC_NAME

Replace SUBSCRIPTION_ID with the name or ID of your new Pub/Sub pull subscription.

You can also create a pull subscription using other methods.
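The verification step above can be scripted by checking the listed notification configurations for the expected topic. The following is a minimal sketch that assumes the list output contains a `topic:` line in the form shown in the sample response. The function name `notification_targets_topic` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads the output of
#   gcloud storage buckets notifications list gs://BUCKET_NAME
# on stdin and succeeds only if some notification configuration publishes
# to the given topic.
# $1: project ID that owns the topic, $2: topic name.
notification_targets_topic() {
  awk -v want="topic: //pubsub.googleapis.com/projects/$1/topics/$2" '
    {
      line = $0
      gsub(/^[ \t]+|[ \t]+$/, "", line)   # ignore surrounding whitespace
      if (line == want) found = 1
    }
    END { exit !found }'
}
```

For example, `gcloud storage buckets notifications list gs://BUCKET_NAME | notification_targets_topic PROJECT_ID TOPIC_NAME` returns a zero exit status when the bucket notifies the expected topic.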

Configure Service Agent permissions

Find the name of the BigQuery Data Transfer Service agent for your project:

Go to the IAM & Admin page.

Select the Include Google-provided role grants checkbox.

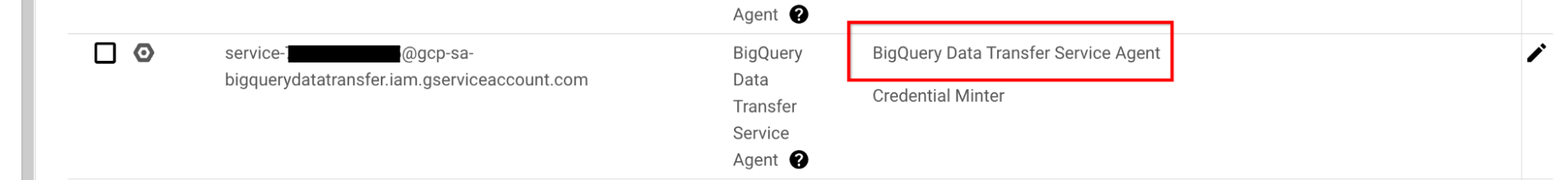

The BigQuery Data Transfer Service agent is listed with the name service-<project_number>@gcp-sa-bigquerydatatransfer.iam.gserviceaccount.com and is granted the BigQuery Data Transfer Service Agent role.

For more information about service agents, see Service agents.

Grant the pubsub.subscriber role to the BigQuery Data Transfer Service agent.

Cloud console

Follow the instructions in Controlling access through the Google Cloud console to grant the Pub/Sub Subscriber role to the BigQuery Data Transfer Service agent. The role can be granted at the topic, subscription, or project level.

gcloud CLI

Follow the instructions in Setting a policy to add the following binding:

    {
      "role": "roles/pubsub.subscriber",
      "members": [
        "serviceAccount:service-PROJECT_NUMBER@gcp-sa-bigquerydatatransfer.iam.gserviceaccount.com"
      ]
    }

Replace PROJECT_NUMBER with the project number of the project in which the transfer resources are created and billed.

Quota usage attribution: when the BigQuery Data Transfer Service agent accesses the Pub/Sub subscription, the quota usage is charged against the user project.

Verify that the BigQuery Data Transfer Service agent is granted the pubsub.subscriber role:

In the Google Cloud console, go to the Pub/Sub page.

Select the Pub/Sub subscription that you used in the event-driven transfer.

If the info panel is hidden, click Show info panel in the upper right corner.

In the Permissions tab, verify that the BigQuery Data Transfer Service agent has the pubsub.subscriber role.
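The service agent address is built from the project number, not the project ID, so a small guard can catch the mix-up before you write an IAM binding. The following is a minimal sketch. The function name `dts_service_agent_member` is hypothetical, and the address format follows the service-<project_number>@gcp-sa-bigquerydatatransfer.iam.gserviceaccount.com name shown earlier in this section.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the IAM member string for the BigQuery Data
# Transfer Service agent of a project.
# $1: the numeric project number (not the project ID).
dts_service_agent_member() {
  case "$1" in
    '' | *[!0-9]*)
      echo "expected a numeric project number, got: '$1'" >&2
      return 1
      ;;
  esac
  printf 'serviceAccount:service-%s@gcp-sa-bigquerydatatransfer.iam.gserviceaccount.com\n' "$1"
}
```

You can obtain the project number with `gcloud projects describe PROJECT_ID --format='value(projectNumber)'` and pass the result to the function.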

Summarized commands to configure notification and permissions

The following Google Cloud CLI commands include everything needed to set up notifications and permissions, as detailed in the previous sections.


    PROJECT_ID=project_id
    CONFIG_NAME=config_name
    RESOURCE_NAME="bqdts-event-driven-${CONFIG_NAME}"

    # Create a Pub/Sub topic.
    gcloud pubsub topics create "${RESOURCE_NAME}" --project="${PROJECT_ID}"

    # Create a Pub/Sub subscription.
    gcloud pubsub subscriptions create "${RESOURCE_NAME}" \
      --project="${PROJECT_ID}" \
      --topic="projects/${PROJECT_ID}/topics/${RESOURCE_NAME}"

    # Create a Pub/Sub notification.
    gcloud storage buckets notifications create gs://"${RESOURCE_NAME}" \
      --topic="projects/${PROJECT_ID}/topics/${RESOURCE_NAME}" \
      --event-types=OBJECT_FINALIZE

    # Grant roles/pubsub.subscriber permission to the DTS service agent.
    PROJECT_NUMBER=$(gcloud projects describe "${PROJECT_ID}" --format='value(projectNumber)')
    gcloud pubsub subscriptions add-iam-policy-binding "${RESOURCE_NAME}" \
      --project="${PROJECT_ID}" \
      --member=serviceAccount:service-"${PROJECT_NUMBER}"@gcp-sa-bigquerydatatransfer.iam.gserviceaccount.com \
      --role=roles/pubsub.subscriber

Replace the following:

- PROJECT_ID: The ID of your project.

- CONFIG_NAME: A name to identify this transfer configuration.

Create a transfer configuration

You can create an event-driven Cloud Storage transfer by creating a Cloud Storage transfer and selecting Event-driven as the Schedule Type. If you have both the Cloud Storage Admin (roles/storage.admin) and Pub/Sub Admin (roles/pubsub.admin) roles, you have sufficient permissions for the BigQuery Data Transfer Service to automatically configure Cloud Storage to send notifications.

If you don't have both the Cloud Storage Admin (roles/storage.admin) and Pub/Sub Admin (roles/pubsub.admin) roles, ask your administrator either to grant you the roles or to complete the steps in Configure Pub/Sub notifications in Cloud Storage and Configure Service Agent permissions before you create the event-driven transfer.