This guide shows you how to deploy and configure a performance-optimized SUSE Linux Enterprise Server (SLES) high-availability (HA) cluster for an SAP HANA scale-out system on Google Cloud.

This guide includes the steps for:

- Configuring an internal passthrough Network Load Balancer to reroute traffic in the event of a failure

- Configuring a Pacemaker cluster on SLES to manage the SAP systems and other resources during a failover

This guide also includes steps for configuring SAP HANA system replication, but refer to the SAP documentation for the definitive instructions.

To deploy an SAP HANA system without a Linux high-availability cluster or a standby node host, use the SAP HANA deployment guide.

This guide is intended for advanced SAP HANA users who are familiar with Linux high-availability configurations for SAP HANA.

The system that this guide deploys

By following this guide, you deploy a multi-node SAP HANA HA system configured for full zone redundancy, with an additional instance acting as a majority maker, also known as a tie-breaker node, which ensures that the cluster quorum is maintained if one zone is lost.

The final deployment comprises the following resources:

- A primary and secondary site where each instance has a zonal counterpart.

- Two sites configured for synchronous replication.

- A single compute instance to act as a majority maker.

- A Pacemaker high-availability cluster resource manager with a fencing mechanism.

- Persistent disk(s) for SAP HANA data and log volumes attached to each SAP HANA instance.

This guide has you use the Cloud Deployment Manager templates that are provided by Google Cloud to deploy the Compute Engine virtual machines (VMs) and the SAP HANA instances, which ensures that the VMs and the base SAP HANA systems meet SAP supportability requirements and conform to current best practices.

SAP HANA Studio is used in this guide to test SAP HANA system replication. You can use SAP HANA Cockpit instead, if you prefer. For information about installing SAP HANA Studio, see:

- Installing SAP HANA Studio on a Compute Engine Windows VM

- SAP HANA Studio Installation and Update Guide

Prerequisites

Before you create the SAP HANA high availability cluster, make sure that the following prerequisites are met:

- You have read the SAP HANA planning guide and the SAP HANA high-availability planning guide.

- You or your organization has a Google Cloud account and you have created a project for the SAP HANA deployment. For information about creating Google Cloud accounts and projects, see Setting up your Google account in the SAP HANA Deployment Guide.

- If you require your SAP workload to run in compliance with data residency, access control, support personnel, or regulatory requirements, then you must create the required Assured Workloads folder. For more information, see Compliance and sovereign controls for SAP on Google Cloud.

- The SAP HANA installation media is stored in a Cloud Storage bucket that is available in your deployment project and region. For information about how to upload SAP HANA installation media to a Cloud Storage bucket, see Downloading SAP HANA in the SAP HANA Deployment Guide.

- If OS login is enabled in your project metadata, you need to disable OS login temporarily until your deployment is complete. For deployment purposes, this procedure configures SSH keys in instance metadata. When OS login is enabled, metadata-based SSH key configurations are disabled, and this deployment fails. After deployment is complete, you can enable OS login again.

- If you are using VPC internal DNS, the value of the vmDnsSetting variable in your project metadata must be either GlobalOnly or ZonalPreferred to enable the resolution of the node names across zones. The default setting of vmDnsSetting is ZonalOnly.

- You have an NFS solution, such as the managed Filestore solution, for sharing the SAP HANA /hana/shared and /hanabackup volumes among the hosts in the scale-out SAP HANA system. To deploy Filestore NFS servers, see Creating instances.

- Note that the primary and secondary sites must have access to their own dedicated NFS paths to avoid overwriting data. To use a single Filestore instance, you must configure the deployment to use distinct sub-directories as the mount path.
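The DNS prerequisite can be checked with a small script before you deploy. The following is a minimal sketch rather than part of the official tooling: the function name dns_setting_ok is hypothetical, the accepted values are taken from the prerequisite text, and how you read the current value from your project metadata is left to you.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds when a vmDnsSetting value allows node names
# to resolve across zones, according to the prerequisite in this guide.
dns_setting_ok() {
  case "$1" in
    GlobalOnly|ZonalPreferred) return 0 ;;
    *) return 1 ;;   # includes the default (ZonalOnly) and an unset value
  esac
}

# Example: pass the value read from your project metadata as the first
# argument. ZonalOnly is assumed here when no argument is given.
current_setting="${1:-ZonalOnly}"
if dns_setting_ok "$current_setting"; then
  echo "vmDnsSetting=$current_setting: cross-zone name resolution is enabled"
else
  echo "vmDnsSetting=$current_setting: change it to GlobalOnly or ZonalPreferred"
fi
```

Running the script without an argument reports the default setting as unsuitable, which matches the behavior that the prerequisite describes.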

Creating a network

For security purposes, create a new network. You can control who has access by adding firewall rules or by using another access control method.

If your project has a default VPC network, then don't use it. Instead, create your own VPC network so that the only firewall rules in effect are those that you create explicitly.

During deployment, Compute Engine instances typically require access to the internet to download Google Cloud's Agent for SAP. If you are using one of the SAP-certified Linux images that are available from Google Cloud, then the compute instance also requires access to the internet in order to register the license and to access OS vendor repositories. A configuration with a NAT gateway and with VM network tags supports this access, even if the target compute instances don't have external IPs.

To set up networking:

Console

- In the Google Cloud console, go to the VPC networks page.

- Click Create VPC network.

- Enter a Name for the network.

The name must adhere to the naming convention. VPC networks use the Compute Engine naming convention.

- For Subnet creation mode, choose Custom.

- In the New subnet section, specify the following configuration parameters for a subnet:

- Enter a Name for the subnet.

- For Region, select the Compute Engine region where you want to create the subnet.

- For IP stack type, select IPv4 (single-stack) and then enter an IP address range in the CIDR format, such as 10.1.0.0/24.

This is the primary IPv4 range for the subnet. If you plan to add more than one subnet, then assign non-overlapping CIDR IP ranges for each subnetwork in the network. Note that each subnetwork and its internal IP ranges are mapped to a single region.

- Click Done.

- To add more subnets, click Add subnet and repeat the preceding steps. You can add more subnets to the network after you have created the network.

- Click Create.

gcloud

- Go to Cloud Shell.

- To create a new network in the custom subnetworks mode, run:

gcloud compute networks create NETWORK_NAME --subnet-mode custom

Replace NETWORK_NAME with the name of the new network. The name must adhere to the naming convention. VPC networks use the Compute Engine naming convention.

Specify --subnet-mode custom to avoid using the default auto mode, which automatically creates a subnet in each Compute Engine region. For more information, see Subnet creation mode.

- Create a subnetwork, and specify the region and IP range:

gcloud compute networks subnets create SUBNETWORK_NAME \
    --network NETWORK_NAME --region REGION --range RANGE

Replace the following:

- SUBNETWORK_NAME: the name of the new subnetwork

- NETWORK_NAME: the name of the network you created in the previous step

- REGION: the region where you want the subnetwork

- RANGE: the IP address range, specified in CIDR format, such as 10.1.0.0/24

If you plan to add more than one subnetwork, assign non-overlapping CIDR IP ranges for each subnetwork in the network. Note that each subnetwork and its internal IP ranges are mapped to a single region.

- Optionally, repeat the previous step and add additional subnetworks.
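Because each subnetwork needs a non-overlapping CIDR range, it can help to check candidate ranges before you create them. The following sketch is an illustration in plain bash and not a Google Cloud tool: the function names are hypothetical, and it assumes well-formed IPv4 CIDR input without leading zeros in the octets.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo "$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))"
}

# Print the first and last address of a CIDR block as two integers.
cidr_bounds() {
  local ip=${1%/*} len=${1#*/}
  local base mask
  base=$(ip_to_int "$ip")
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  echo "$(( base & mask )) $(( (base & mask) | (~mask & 0xFFFFFFFF) ))"
}

# Succeed (exit status 0) if the two CIDR ranges overlap.
cidr_overlap() {
  local a_first a_last b_first b_last
  read -r a_first a_last <<< "$(cidr_bounds "$1")"
  read -r b_first b_last <<< "$(cidr_bounds "$2")"
  (( a_first <= b_last && b_first <= a_last ))
}

# Example: compare two candidate subnet ranges.
if cidr_overlap 10.1.0.0/24 10.1.1.0/24; then
  echo "ranges overlap"
else
  echo "ranges do not overlap"
fi
```

Two ranges overlap exactly when each one starts at or before the point where the other ends, which is the comparison that cidr_overlap performs.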

Setting up a NAT gateway

If you need to create one or more VMs without public IP addresses, then you need to use network address translation (NAT) to enable the VMs to access the internet. Use Cloud NAT, a Google Cloud distributed, software-defined managed service that lets VMs send outbound packets to the internet and receive any corresponding established inbound response packets. Alternatively, you can set up a separate VM as a NAT gateway.

To create a Cloud NAT instance for your project, see Using Cloud NAT.

After you configure Cloud NAT for your project, your VM instances can securely access the internet without a public IP address.

Adding firewall rules

By default, an implied firewall rule blocks incoming connections from outside your Virtual Private Cloud (VPC) network. To allow incoming connections, set up a firewall rule for your VM. After an incoming connection is established with a VM, traffic is permitted in both directions over that connection.

You can also create a firewall rule to allow external access to specified ports, or to restrict access between VMs on the same network. If the default VPC network type is used, some additional default rules also apply, such as the default-allow-internal rule, which allows connectivity between VMs on the same network on all ports.

Depending on the IT policy that is applicable to your environment, you might need to isolate or otherwise restrict connectivity to your database host, which you can do by creating firewall rules.

Depending on your scenario, you can create firewall rules to allow access for:

- The default SAP ports that are listed in TCP/IP of All SAP Products.

- Connections from your computer or your corporate network environment to your Compute Engine VM instance. If you are unsure of what IP address to use, talk to your company's network administrator.

To create a firewall rule:

Console

In the Google Cloud console, go to the Compute Engine Firewall page.

At the top of the page, click Create firewall rule.

- In the Network field, select the network where your VM is located.

- In the Targets field, specify the resources on Google Cloud that this rule applies to. For example, specify All instances in the network. Or, to limit the rule to specific instances on Google Cloud, enter tags in Specified target tags.

- In the Source filter field, select one of the following:

- IP ranges to allow incoming traffic from specific IP addresses. Specify the range of IP addresses in the Source IP ranges field.

- Subnets to allow incoming traffic from a particular subnetwork. Specify the subnetwork name in the following Subnets field. You can use this option to allow access between the VMs in a 3-tier or scale-out configuration.

- In the Protocols and ports section, select Specified protocols and ports and enter tcp:PORT_NUMBER.

Click Create to create your firewall rule.

gcloud

Create a firewall rule by using the following command:

$ gcloud compute firewall-rules create firewall-name \
    --direction=INGRESS --priority=1000 \
    --network=network-name --action=ALLOW --rules=protocol:port \
    --source-ranges ip-range --target-tags=network-tags

Deploying the VMs and SAP HANA

To deploy a multi-node SAP HANA HA system configured for full zone redundancy, you use the Cloud Deployment Manager template for SAP HANA as the base for the configuration, as well as an additional template to deploy a majority maker instance.

The deployment consists of the following:

- Two matching SAP HANA systems, each with two or more worker nodes.

- A single majority maker instance, also known as a tie-breaker node, which ensures that the cluster quorum is maintained if one zone is lost.

You add the definitions for all systems to the same YAML file so that Deployment Manager deploys all resources under one deployment. After the SAP HANA systems and the majority maker instance are deployed successfully, you define and configure the HA cluster.

The following instructions use the Cloud Shell, but are generally applicable to the Google Cloud CLI.

Confirm that your current quotas for resources such as persistent disks and CPUs are sufficient for the SAP HANA systems you are about to install. If your quotas are insufficient, deployment fails. For the SAP HANA quota requirements, see Pricing and quota considerations for SAP HANA.

Open the Cloud Shell or, if you installed the gcloud CLI on your local workstation, open a terminal.

Download the template.yaml configuration file template for the SAP HANA high-availability cluster to your working directory by entering the following command in the Cloud Shell or gcloud CLI:

wget https://storage.googleapis.com/cloudsapdeploy/deploymentmanager/latest/dm-templates/sap_hana/template.yaml

Optionally, rename the template.yaml file to identify the configuration it defines.

Open the template.yaml file in the Cloud Shell code editor or, if you are using the gcloud CLI, the text editor of your choice.

To open the Cloud Shell code editor, click the pencil icon in the upper right corner of the Cloud Shell terminal window.

In the template.yaml file, complete the definition of the primary SAP HANA system. Specify the property values by replacing the brackets and their contents with the values for your installation. The properties are described in the following list, which gives the name, data type, and description of each property.

To create the VM instances without installing SAP HANA, delete or comment out all of the lines that begin with sap_hana_.

- type (String): Specifies the location, type, and version of the Deployment Manager template to use during deployment.

  The YAML file includes two type specifications, one of which is commented out. The type specification that is active by default specifies the template version as latest. The type specification that is commented out specifies a specific template version with a timestamp.

  If you need all of your deployments to use the same template version, use the type specification that includes the timestamp.

- instanceName (String): The name of the VM instance currently being defined. Specify different names in the primary and secondary VM definitions. Names must be specified in lowercase letters, numbers, or hyphens.

  If you're intending to use the SAPHanaSR-angi HA/DR package provided by SUSE, then make sure that the instance names in your scale-out system don't include hyphens. This is because the SAPHanaSR-angi package is known to not work as expected when the hostnames include hyphens.

- instanceType (String): The type of Compute Engine virtual machine that you need to run SAP HANA on. If you need a custom VM type, specify a predefined VM type with a number of vCPUs that is closest to the number you need while still being larger. After deployment is complete, modify the number of vCPUs and the amount of memory.

  The minimum recommended instanceType for the majority maker instance is n1-standard-2 or the equivalent of at least 2 CPU cores and 2 GB memory.

- zone (String): The Google Cloud zone in which to deploy the VM instance that you are defining. Specify different zones in the same region for the primary HANA, secondary HANA, and majority maker instance definitions. The zones must be in the same region that you selected for your subnet.

- subnetwork (String): The name of the subnetwork you created in a previous step. If you are deploying to a shared VPC, specify this value as [SHAREDVPC_PROJECT]/[SUBNETWORK]. For example, myproject/network1.

- linuxImage (String): The name of the Linux operating-system image or image family that you are using with SAP HANA. To specify an image family, add the prefix family/ to the family name. For example, family/sles-15-sp1-sap. To specify a specific image, specify only the image name. For the list of available images and families, see the Images page in Google Cloud console.

- linuxImageProject (String): The Google Cloud project that contains the image you are going to use. This project might be your own project or a Google Cloud image project, such as suse-sap-cloud. For more information about Google Cloud image projects, see the Images page in the Compute Engine documentation.

- sap_hana_deployment_bucket (String): The name of the Cloud Storage bucket in your project that contains the SAP HANA installation and revision files that you uploaded in a previous step. Any upgrade revision files in the bucket are applied to SAP HANA during the deployment process.

- sap_hana_sid (String): The SAP HANA system ID (SID). The ID must consist of three alphanumeric characters and begin with a letter. All letters must be uppercase.

- sap_hana_instance_number (Integer): The instance number, 0 to 99, of the SAP HANA system. The default is 0.

- sap_hana_sidadm_password (String): The password for the operating system (OS) administrator. Passwords must be at least eight characters and include at least one uppercase letter, one lowercase letter, and one number.

- sap_hana_system_password (String): The password for the database superuser. Passwords must be at least eight characters and include at least one uppercase letter, one lowercase letter, and one number.

- sap_hana_sidadm_uid (Integer): The default value for the SID_LCadm user ID is 900 to avoid user-created groups conflicting with SAP HANA. You can change this to a different value if you need to.

- sap_hana_sapsys_gid (Integer): The default group ID for sapsys is 79. You can override this default by specifying a different value.

- sap_hana_scaleout_nodes (Integer): Specify 1 or greater.

- sap_hana_shared_nfs (String): The NFS mount point for the /hana/shared volume. For example, 10.151.91.122:/hana_shared_nfs.

- sap_hana_backup_nfs (String): The NFS mount point for the /hanabackup volume. For example, 10.216.41.122:/hana_backup_nfs.

- networkTag (String): A network tag that represents your VM instance for firewall or routing purposes. If you specify publicIP: No and do not specify a network tag, be sure to provide another means of access to the internet.

- nic_type (String): Optional but recommended if available for the target machine and OS version. Specifies the network interface to use with the VM instance. You can specify the value GVNIC or VIRTIO_NET. To use a Google Virtual NIC (gVNIC), you need to specify an OS image that supports gVNIC as the value for the linuxImage property. For the OS image list, see Operating system details.

  If you do not specify a value for this property, then the network interface is automatically selected based on the machine type that you specify for the instanceType property. This argument is available in Deployment Manager template versions 202302060649 or later.

- publicIP (Boolean): Optional. Determines whether a public IP address is added to your VM instance. The default is Yes.

- serviceAccount (String): Optional. Specifies a service account to be used by the host VMs and by the programs that run on the host VMs. Specify the email address of the service account. For example, svc-acct-name@project-id.iam.gserviceaccount.com. By default, the Compute Engine default service account is used. For more information, see Identity and access management for SAP programs on Google Cloud.

Create the definition of the secondary SAP HANA system by copying the definition of the primary SAP HANA system and pasting the copy after the primary SAP HANA system definition. See the example following these steps.

In the definition of the secondary SAP HANA system, specify different values for the following properties than you specified in the primary SAP HANA system definition:

- name

- instanceName

- zone

Download the sap_majoritymaker.yaml majority maker instance configuration file:

wget https://storage.googleapis.com/cloudsapdeploy/deploymentmanager/latest/dm-templates/sap_majoritymaker/template.yaml -O sap_majoritymaker.yaml

Copy and paste the YAML specification from the sap_majoritymaker.yaml file, starting from line #6 and below, to the bottom of the SAP HANA template.yaml file.

Complete the definition for the majority maker instance:

- Specify a zone that is different from the two SAP HANA systems.

- The minimum recommended instanceType is n1-standard-2 or the equivalent of at least 2 CPU cores and 2 GB memory.

You should now have three resources listed in your YAML file, two SAP HANA clusters and one majority maker instance, along with their configurable properties.

Create the instances:

gcloud deployment-manager deployments create DEPLOYMENT_NAME --config TEMPLATE_NAME.yaml

The above command invokes the Deployment Manager, which deploys the VMs, downloads the SAP HANA software from your storage bucket, and installs SAP HANA, all according to the specifications in your template.yaml file.

Deployment processing consists of two stages. In the first stage, Deployment Manager writes its status to the console. In the second stage, the deployment scripts write their status to Cloud Logging.
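Because a failed deployment has to be deleted and rerun, it can be worth checking a few property values against the rules in the property descriptions before you create the instances. The following bash functions are a minimal sketch of such checks. The function names are hypothetical, and the rules are only those stated in this guide for sap_hana_sid, sap_hana_instance_number, the two password properties, and instanceName.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that mirror the property rules described in this guide.

# SID: three alphanumeric characters, starting with a letter, all uppercase.
valid_sid() {
  local re='^[[:upper:]][[:upper:][:digit:]]{2}$'
  [[ $1 =~ $re ]]
}

# Instance number: an integer from 0 to 99.
valid_instance_number() {
  local re='^[0-9]{1,2}$'
  [[ $1 =~ $re ]]
}

# Password: at least 8 characters, with at least one uppercase letter,
# one lowercase letter, and one number.
valid_password() {
  [[ ${#1} -ge 8 && $1 == *[[:upper:]]* && $1 == *[[:lower:]]* && $1 == *[[:digit:]]* ]]
}

# Instance name: lowercase letters, numbers, or hyphens only.
valid_instance_name() {
  local re='^[[:lower:][:digit:]-]+$'
  [[ $1 =~ $re ]]
}

# Example with sample values; replace them with your own.
valid_sid "HA1" && valid_instance_number "22" && valid_instance_name "hana-ha-vm-1" \
  && echo "sample values look valid"
```

If you plan to use the SAPHanaSR-angi package, you would additionally want to reject instance names that contain hyphens, as noted in the instanceName description.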

Example of a complete template.yaml configuration file

The following example shows a completed template.yaml configuration file that deploys two scale-out clusters with an SAP HANA system installed, and a single VM instance acting as the majority maker.

The file contains the definitions of three resources to deploy: sap_hana_primary, sap_hana_secondary, and sap_majoritymaker. Each of the two SAP HANA resource definitions contains the definitions for a VM and an SAP HANA instance.

The sap_hana_secondary resource definition was created by copying and pasting the first definition, and then modifying the values of the name, instanceName, and zone properties. In this example, the NFS mount points and the passwords also differ between the two definitions. All other property values in the two resource definitions are the same.

The properties networkTag, serviceAccount, sap_hana_sidadm_uid, and sap_hana_sapsys_gid are from the Advanced Options section of the configuration file template. The properties sap_hana_sidadm_uid and sap_hana_sapsys_gid are shown commented out, so their default values are used.

resources:
- name: sap_hana_primary
  type: https://storage.googleapis.com/cloudsapdeploy/deploymentmanager/latest/dm-templates/sap_hana/sap_hana.py
  #
  # By default, this configuration file uses the latest release of the deployment
  # scripts for SAP on Google Cloud. To fix your deployments to a specific release
  # of the scripts, comment out the type property above and uncomment the type property below.
  #
  # type: https://storage.googleapis.com/cloudsapdeploy/deploymentmanager/yyyymmddhhmm/dm-templates/sap_hana/sap_hana.py
  #
  properties:
    instanceName: hana-ha-vm-1
    instanceType: n2-highmem-32
    zone: us-central1-a
    subnetwork: example-subnet-us-central1
    linuxImage: family/sles-15-sp1-sap
    linuxImageProject: suse-sap-cloud
    sap_hana_deployment_bucket: hana2-sp4-rev46
    sap_hana_sid: HA1
    sap_hana_instance_number: 22
    sap_hana_sidadm_password: Tempa55word
    sap_hana_system_password: Tempa55word
    sap_hana_scaleout_nodes: 2
    sap_hana_shared_nfs: 10.151.91.123:/hana_shared_nfs
    sap_hana_backup_nfs: 10.216.41.123:/hana_backup_nfs
    networkTag: cluster-ntwk-tag
    serviceAccount: limited-roles@example-project-123456.iam.gserviceaccount.com
    # sap_hana_sidadm_uid: 900
    # sap_hana_sapsys_gid: 79

- name: sap_hana_secondary
  type: https://storage.googleapis.com/cloudsapdeploy/deploymentmanager/latest/dm-templates/sap_hana/sap_hana.py
  #
  # By default, this configuration file uses the latest release of the deployment
  # scripts for SAP on Google Cloud. To fix your deployments to a specific release
  # of the scripts, comment out the type property above and uncomment the type property below.
  #
  # type: https://storage.googleapis.com/cloudsapdeploy/deploymentmanager/yyyymmddhhmm/dm-templates/sap_hana/sap_hana.py
  #
  properties:
    instanceName: hana-ha-vm-2
    instanceType: n2-highmem-32
    zone: us-central1-c
    subnetwork: example-subnet-us-central1
    linuxImage: family/sles-15-sp1-sap
    linuxImageProject: suse-sap-cloud
    sap_hana_deployment_bucket: hana2-sp4-rev46
    sap_hana_sid: HA1
    sap_hana_instance_number: 22
    sap_hana_sidadm_password: Google123
    sap_hana_system_password: Google123
    sap_hana_scaleout_nodes: 2
    sap_hana_shared_nfs: 10.141.91.124:/hana_shared_nfs
    sap_hana_backup_nfs: 10.106.41.124:/hana_backup_nfs
    networkTag: cluster-ntwk-tag
    serviceAccount: limited-roles@example-project-123456.iam.gserviceaccount.com
    # sap_hana_sidadm_uid: 900
    # sap_hana_sapsys_gid: 79

- name: sap_majoritymaker
  type: https://storage.googleapis.com/cloudsapdeploy/deploymentmanager/latest/dm-templates/sap_majoritymaker/sap_majoritymaker.py
  #
  # By default, this configuration file uses the latest release of the deployment
  # scripts for SAP on Google Cloud. To fix your deployments to a specific release
  # of the scripts, comment out the type property above and uncomment the type property below.
  #
  # type: https://storage.googleapis.com/cloudsapdeploy/deploymentmanager/202208181245/dm-templates/sap_majoritymaker/sap_majoritymaker.py
  properties:
    instanceName: sap-majoritymaker
    instanceType: n1-standard-2
    zone: us-central1-b
    subnetwork: example-subnet-us-central1
    linuxImage: family/sles-15-sp1-sap
    linuxImageProject: suse-sap-cloud
    publicIP: No

Create firewall rules that allow access to the host VMs

If you haven't done so already, create firewall rules that allow access to each host VM from the following sources:

- For configuration purposes, your local workstation, a bastion host, or a jump server

- For access between the cluster nodes, the other host VMs in the HA cluster

When you create VPC firewall rules, you specify the network tags that you defined in the template.yaml configuration file to designate your host VMs as the target for the rule.

To verify deployment, define a rule to allow SSH connections on port 22 from a bastion host or your local workstation.

For access between the cluster nodes, add a firewall rule that allows all connection types on any port from other VMs in the same subnetwork.

Make sure that the firewall rules for verifying deployment and for intra-cluster communication are created before proceeding to the next section. For instructions, see Adding firewall rules.
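The two rules described in this section can be sketched as follows. This is an illustration only: the network name, rule names, and IP ranges are placeholder assumptions, and the functions print the gcloud commands instead of running them so that you can review them first. The intra-cluster rule lists tcp, udp, and icmp as one way to approximate "all connection types"; adjust it to your security policy.

```shell
#!/usr/bin/env bash
# All values below are placeholders (assumptions); replace them with your own.
NETWORK="example-network"
TAG="cluster-ntwk-tag"            # network tag from template.yaml
ADMIN_RANGE="203.0.113.10/32"     # bastion host or workstation address
SUBNET_RANGE="10.1.0.0/24"        # primary IPv4 range of the cluster subnetwork

# Print the command for a rule that allows SSH on port 22 from the admin host.
print_ssh_rule() {
  echo gcloud compute firewall-rules create "${NETWORK}-allow-ssh" \
    --network="$NETWORK" --direction=INGRESS --priority=1000 \
    --action=ALLOW --rules=tcp:22 \
    --source-ranges="$ADMIN_RANGE" --target-tags="$TAG"
}

# Print the command for a rule that allows traffic between the cluster nodes
# in the same subnetwork.
print_cluster_rule() {
  echo gcloud compute firewall-rules create "${NETWORK}-allow-cluster" \
    --network="$NETWORK" --direction=INGRESS --priority=1000 \
    --action=ALLOW --rules=tcp,udp,icmp \
    --source-ranges="$SUBNET_RANGE" --target-tags="$TAG"
}

print_ssh_rule
print_cluster_rule
```

After you have reviewed the printed commands, you can run them in Cloud Shell or pipe the output of each function to bash.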

Verifying the deployment of the VMs and SAP HANA

To verify deployment, you check the deployment logs in Cloud Logging and check the disks and services on the VMs of primary and secondary hosts.

In the Google Cloud console, open Cloud Logging to monitor installation progress and check for errors.

Filter the logs:

Logs Explorer

In the Logs Explorer page, go to the Query pane.

From the Resource drop-down menu, select Global, and then click Add.

If you don't see the Global option, then in the query editor, enter the following query:

resource.type="global" "Deployment"

Click Run query.

Legacy Logs Viewer

- In the Legacy Logs Viewer page, from the basic selector menu, select Global as your logging resource.

Analyze the filtered logs:

- If "--- Finished" is displayed, then the deployment processing is complete and you can proceed to the next step.

- If you see a quota error:

- On the IAM & Admin Quotas page, increase any of your quotas that do not meet the SAP HANA requirements that are listed in the SAP HANA planning guide.

- On the Deployment Manager Deployments page, delete the deployment to clean up the VMs and persistent disks from the failed installation.

- Rerun your deployment.

Check the deployment status of the majority maker

You can check the deployment status of the majority maker using the following command.

gcloud compute instances describe MAJORITY_MAKER_HOSTNAME --zone MAJORITY_MAKER_ZONE --format="table[box,title='Deployment Status'](name:label=Instance_Name,metadata.items.status:label=Status)"

If Complete status is displayed, then the deployment processing

is successful for the majority maker instance.

For an ongoing deployment, <blank> status is displayed.

Check the configuration of the VMs and SAP HANA

After the SAP HANA system deploys without errors, connect to each VM by using SSH. From the Compute Engine VM instances page, you can click the SSH button for each VM instance, or you can use your preferred SSH method.

Change to the root user.

$ sudo su -

At the command prompt, enter df -h. On each VM, ensure that you see the /hana directories, such as /hana/data.

Filesystem                        Size  Used Avail Use% Mounted on
/dev/sda2                          30G  4.0G   26G  14% /
devtmpfs                          126G     0  126G   0% /dev
tmpfs                             126G     0  126G   0% /dev/shm
tmpfs                             126G   17M  126G   1% /run
tmpfs                             126G     0  126G   0% /sys/fs/cgroup
/dev/sda1                         200M  9.7M  191M   5% /boot/efi
/dev/mapper/vg_hana-shared        251G   49G  203G  20% /hana/shared
/dev/mapper/vg_hana-sap            32G  240M   32G   1% /usr/sap
/dev/mapper/vg_hana-data          426G  7.0G  419G   2% /hana/data
/dev/mapper/vg_hana-log           125G  4.2G  121G   4% /hana/log
/dev/mapper/vg_hanabackup-backup  512G   33M  512G   1% /hanabackup
tmpfs                              26G     0   26G   0% /run/user/900
tmpfs                              26G     0   26G   0% /run/user/899
tmpfs                              26G     0   26G   0% /run/user/1000

Change to the SAP admin user by replacing SID_LC in the following command with the system ID that you specified in the configuration file template. Use lowercase for any letters.

# su - SID_LCadm

Ensure that the SAP HANA services, such as hdbnameserver, hdbindexserver, and others, are running on the instance by entering the following command:

> HDB info

If you are using RHEL for SAP 9.0 or later, then make sure that the packages chkconfig and compat-openssl11 are installed on your VM instance.

For more information from SAP, see SAP Note 3108316 - Red Hat Enterprise Linux 9.x: Installation and Configuration.
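The df -h check in the preceding steps can be scripted when you have many hosts to verify. The following is a minimal sketch: the function name check_hana_mounts is hypothetical, and the list of expected mount points is an assumption taken from the sample output in this guide, so adjust it to your own disk layout.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `df -h` output on stdin and reports each expected
# SAP HANA mount point that is missing. Returns non-zero if any is missing.
check_hana_mounts() {
  local df_output missing=0 mount
  df_output=$(cat)
  for mount in /hana/shared /hana/data /hana/log /hanabackup /usr/sap; do
    # The mount point is the last field of each df output line.
    if ! awk -v m="$mount" '$NF == m { found = 1 } END { exit !found }' <<< "$df_output"; then
      echo "missing mount point: $mount"
      missing=1
    fi
  done
  return "$missing"
}

# Usage on a host VM:
#   df -h | check_hana_mounts && echo "all expected mount points are present"
```

The function only confirms that the mount points exist. It does not check sizes or whether the SAP HANA services are running, which the HDB info step covers.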

Validate your installation of Google Cloud's Agent for SAP

After you have deployed a VM and installed your SAP system, validate that Google Cloud's Agent for SAP is functioning properly.

Verify that Google Cloud's Agent for SAP is running

To verify that the agent is running, follow these steps:

Establish an SSH connection with your Compute Engine instance.

Run the following command:

systemctl status google-cloud-sap-agent

If the agent is functioning properly, then the output contains active (running). For example:

google-cloud-sap-agent.service - Google Cloud Agent for SAP
   Loaded: loaded (/usr/lib/systemd/system/google-cloud-sap-agent.service; enabled; vendor preset: disabled)
   Active: active (running) since Fri 2022-12-02 07:21:42 UTC; 4 days ago
 Main PID: 1337673 (google-cloud-sa)
    Tasks: 9 (limit: 100427)
   Memory: 22.4 M (max: 1.0G limit: 1.0G)
   CGroup: /system.slice/google-cloud-sap-agent.service
           └─1337673 /usr/bin/google-cloud-sap-agent

If the agent isn't running, then restart the agent.

Verify that SAP Host Agent is receiving metrics

To verify that the infrastructure metrics are collected by Google Cloud's Agent for SAP and sent correctly to the SAP Host Agent, follow these steps:

- In your SAP system, enter transaction ST06.

- In the overview pane, check the availability and content of the following fields for the correct end-to-end setup of the SAP and Google monitoring infrastructure:

- Cloud Provider: Google Cloud Platform

- Enhanced Monitoring Access: TRUE

- Enhanced Monitoring Details: ACTIVE

Set up monitoring for SAP HANA

Optionally, you can monitor your SAP HANA instances using Google Cloud's Agent for SAP. From version 2.0, you can configure the agent to collect the SAP HANA monitoring metrics and send them to Cloud Monitoring. Cloud Monitoring lets you create dashboards to visualize these metrics, set up alerts based on metric thresholds, and more.

For more information about the collection of SAP HANA monitoring metrics using Google Cloud's Agent for SAP, see SAP HANA monitoring metrics collection.

(Optional) Create a list of instances for script automation

To partially automate some of the repetitive tasks during the configuration of the SAP HANA system and the Pacemaker cluster, you can use bash scripts. This guide uses such scripts throughout to speed up the configuration. The scripts require a list of all deployed VM instances and their corresponding zones as input.

To enable this automation, create a file named nodes.txt and include the details of all the deployed VM instances in the following format: zone name, whitespace, and then the VM instance name. The following sample file is used throughout this guide:

# cat nodes.txt
us-west1-a hana-ha-vm-1
us-west1-a hana-ha-vm-1w1
us-west1-a hana-ha-vm-1w2
us-west1-b hana-majoritymaker
us-west1-c hana-ha-vm-2
us-west1-c hana-ha-vm-2w1
us-west1-c hana-ha-vm-2w2
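The scripts in this guide read nodes.txt with a while read loop on a separate file descriptor. The following sketch shows that pattern in isolation. The function name hosts_in_zone is hypothetical and is not used elsewhere in this guide; it only prints the instance names for one zone, which can be handy for checking the file before you run the real scripts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the instance names from a nodes file that are in
# the given zone. The file format is "ZONE HOST", one instance per line.
hosts_in_zone() {
  local nodes_file=$1 wanted_zone=$2 zone host
  # File descriptor 10 keeps the loop's input separate from stdin, so that
  # commands inside the loop (such as ssh) can't consume the node list.
  while read -r -u10 zone host; do
    [[ -z $zone || $zone == \#* ]] && continue   # skip blank and comment lines
    [[ $zone == "$wanted_zone" ]] && echo "$host"
  done 10< "$nodes_file"
  return 0
}

# Usage:
#   hosts_in_zone nodes.txt us-west1-a
```

Reading from file descriptor 10 instead of standard input is the same design choice that the automated steps later in this guide make, because gcloud compute ssh would otherwise read the remaining lines of the file.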

Set up passwordless SSH access

To configure the Pacemaker cluster and to synchronize the SAP HANA secure store (SSFS) keys, passwordless SSH access is required between all nodes, including the majority maker instance. For passwordless SSH access, you need to add the SSH public keys to the instance metadata of all deployed instances.

The format of the metadata is USERNAME:PUBLIC-KEY-VALUE.

For more information about adding SSH keys to VMs, see Add SSH keys to VMs that use metadata-based SSH keys.

Manual steps

For each instance in the primary and secondary systems, as well as the majority maker instance, collect the public key for the user root:

gcloud compute ssh --quiet --zone ZONE_ID INSTANCE_NAME -- sudo cat /root/.ssh/id_rsa.pub

Prepend the key with the string root: and write the key as a new line into the file called public-ssh-keys.txt, for example:

root:ssh-rsa AAAAB3NzaC1JfuYnOI1vutCs= root@INSTANCE_NAME

After collecting all SSH public keys, upload the keys as metadata to all instances:

gcloud compute instances add-metadata --metadata-from-file ssh-keys=public-ssh-keys.txt --zone ZONE_ID INSTANCE_NAME
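As an illustration of the metadata line format used in the manual steps, the following sketch prepends a username to a public key read from standard input. The function name ssh_metadata_entry is hypothetical, and the sketch assumes the key text arrives as a single line, as it does in id_rsa.pub.

```shell
# Hypothetical helper: turn a public key read from stdin into one
# ssh-keys metadata line. $1 = username (for this guide, root).
# Example use on an instance, as an assumption about your workflow:
#   sudo cat /root/.ssh/id_rsa.pub | ssh_metadata_entry root
ssh_metadata_entry() {
  # Command substitution strips the trailing newline from the key,
  # so the output is exactly one line per key.
  printf '%s:%s\n' "$1" "$(cat)"
}
```

Each line produced this way can be appended to public-ssh-keys.txt before you upload the file as metadata.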

Automated steps

Alternatively, to automate the process of setting up passwordless SSH access for all instances listed in nodes.txt, perform the following steps from the Google Cloud console:

Create a list of public keys from all deployed instances:

while read -u10 ZONE HOST ; do echo "Collecting public-key from $HOST"; { echo 'root:'; gcloud compute ssh --quiet --zone $ZONE $HOST --tunnel-through-iap -- sudo cat /root/.ssh/id_rsa.pub; } | tr -ds '\n' " " >> public-ssh-keys.txt; done 10< nodes.txt

Assign the SSH public keys as metadata entries to all instances:

while read -u10 ZONE HOST ; do echo "Adding public keys to $HOST"; gcloud compute instances add-metadata --metadata-from-file ssh-keys=public-ssh-keys.txt --zone $ZONE $HOST; done 10< nodes.txt

Disable SAP HANA autostart

Manual steps

For each SAP HANA instance in the cluster, make sure that SAP HANA autostart is disabled. For failovers, Pacemaker manages the starting and stopping of the SAP HANA instances in a cluster.

On each host as SID_LCadm, stop SAP HANA:

> HDB stop

On each host, open the SAP HANA profile by using an editor, such as vi:

vi /usr/sap/SID/SYS/profile/SID_HDBINST_NUM_HOST_NAME

Set the Autostart property to 0:

Autostart=0

Save the profile.

On each host as SID_LCadm, start SAP HANA:

> HDB start

Automated steps

Alternatively, to disable SAP HANA autostart for all instances listed in nodes.txt, run the following script from the Google Cloud console:

while read -u10 ZONE HOST ; do gcloud compute ssh --verbosity=none --zone $ZONE $HOST -- "echo Setting Autostart=0 on \$HOSTNAME; sudo sed -i 's/Autostart=1/Autostart=0/g' /usr/sap/SID/SYS/profile/SID_HDBINST_NUM_\$HOSTNAME"; done 10< nodes.txt

Enable SAP HANA Fast Restart

Google Cloud strongly recommends enabling SAP HANA Fast Restart for each instance of SAP HANA, especially for larger instances. SAP HANA Fast Restart reduces restart time in the event that SAP HANA terminates, but the operating system remains running.

As configured by the automation scripts that Google Cloud provides, the operating system and kernel settings already support SAP HANA Fast Restart. You need to define the tmpfs file system and configure SAP HANA.

To define the tmpfs file system and configure SAP HANA, you can follow the manual steps or use the automation script that Google Cloud provides to enable SAP HANA Fast Restart. Both options are described in the Manual steps and Automated steps sections that follow.

For the complete authoritative instructions for SAP HANA Fast Restart, see the SAP HANA Fast Restart Option documentation.

Manual steps

Configure the tmpfs file system

After the host VMs and the base SAP HANA systems are successfully deployed, you need to create and mount directories for the NUMA nodes in the tmpfs file system.

Display the NUMA topology of your VM

Before you can map the required tmpfs file system, you need to know how many NUMA nodes your VM has. To display the available NUMA nodes on a Compute Engine VM, enter the following command:

lscpu | grep NUMA

For example, an m2-ultramem-208 VM type has four NUMA nodes, numbered 0-3, as shown in the following example:

NUMA node(s): 4
NUMA node0 CPU(s): 0-25,104-129
NUMA node1 CPU(s): 26-51,130-155
NUMA node2 CPU(s): 52-77,156-181
NUMA node3 CPU(s): 78-103,182-207
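If you want to use the NUMA node count in a script, you can extract it from the lscpu output. The following is a minimal sketch; the function name numa_node_count is hypothetical, and it assumes the "NUMA node(s):" line format shown in the preceding example.

```shell
# Hypothetical helper: read lscpu-style text on stdin and print the
# number that follows the "NUMA node(s):" label.
# Example use: lscpu | numa_node_count
numa_node_count() {
  awk -F: '/^NUMA node\(s\)/ {
             # Remove the padding around the value before printing it.
             gsub(/[ \t]/, "", $2)
             print $2
           }'
}
```

The per-node lines, such as "NUMA node0 CPU(s)", do not match the pattern, so only the total is printed.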

Create the NUMA node directories

Create a directory for each NUMA node in your VM and set the permissions.

For example, for four NUMA nodes that are numbered 0-3:

mkdir -pv /hana/tmpfs{0..3}/SID
chown -R SID_LCadm:sapsys /hana/tmpfs*/SID
chmod 777 -R /hana/tmpfs*/SID

Mount the NUMA node directories to tmpfs

Mount the tmpfs file system directories and specify a NUMA node preference for each with mpol=prefer. Replace SID with your SID in uppercase letters:

mount tmpfsSID0 -t tmpfs -o mpol=prefer:0 /hana/tmpfs0/SID
mount tmpfsSID1 -t tmpfs -o mpol=prefer:1 /hana/tmpfs1/SID
mount tmpfsSID2 -t tmpfs -o mpol=prefer:2 /hana/tmpfs2/SID
mount tmpfsSID3 -t tmpfs -o mpol=prefer:3 /hana/tmpfs3/SID

Update /etc/fstab

To ensure that the mount points are available after an operating system reboot, add entries into the file system table, /etc/fstab:

tmpfsSID0 /hana/tmpfs0/SID tmpfs rw,nofail,relatime,mpol=prefer:0
tmpfsSID1 /hana/tmpfs1/SID tmpfs rw,nofail,relatime,mpol=prefer:1
tmpfsSID2 /hana/tmpfs2/SID tmpfs rw,nofail,relatime,mpol=prefer:2
tmpfsSID3 /hana/tmpfs3/SID tmpfs rw,nofail,relatime,mpol=prefer:3
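For VMs with a different number of NUMA nodes, the /etc/fstab entries follow the same pattern with one line per node. The following sketch prints such lines for review before you add them to /etc/fstab. The function name tmpfs_fstab_entries is hypothetical, and the sketch only prints text; it does not modify any file.

```shell
# Hypothetical helper: print one /etc/fstab line per NUMA node.
# $1 = SID in uppercase letters, $2 = number of NUMA nodes.
tmpfs_fstab_entries() {
  local sid="$1"
  local count="$2"
  local i=0
  while [ "$i" -lt "$count" ]; do
    # Device label, mount point, type, and options with the node preference.
    printf 'tmpfs%s%d /hana/tmpfs%d/%s tmpfs rw,nofail,relatime,mpol=prefer:%d\n' \
      "$sid" "$i" "$i" "$sid" "$i"
    i=$((i + 1))
  done
}
```

For example, tmpfs_fstab_entries AHA 8 prints eight lines, for nodes 0 through 7.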

Optional: set limits on memory usage

The tmpfs file system can grow and shrink dynamically.

To limit the memory used by the tmpfs file system, you can set a size limit for a NUMA node volume with the size option. For example:

mount tmpfsSID0 -t tmpfs -o mpol=prefer:0,size=250G /hana/tmpfs0/SID

You can also limit overall tmpfs memory usage for all NUMA nodes for a given SAP HANA instance and a given server node by setting the persistent_memory_global_allocation_limit parameter in the [memorymanager] section of the global.ini file.

SAP HANA configuration for Fast Restart

To configure SAP HANA for Fast Restart, update the global.ini file and specify the tables to store in persistent memory.

Update the [persistence] section in the global.ini file

Configure the [persistence] section in the SAP HANA global.ini file to reference the tmpfs locations. Separate each tmpfs location with a semicolon:

[persistence]
basepath_datavolumes = /hana/data
basepath_logvolumes = /hana/log
basepath_persistent_memory_volumes = /hana/tmpfs0/SID;/hana/tmpfs1/SID;/hana/tmpfs2/SID;/hana/tmpfs3/SID

The preceding example specifies four memory volumes for four NUMA nodes, which corresponds to the m2-ultramem-208. If you were running on the m2-ultramem-416, you would need to configure eight memory volumes (0..7).

Restart SAP HANA after modifying the global.ini file.

SAP HANA can now use the tmpfs location as persistent memory space.
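Because the number of memory volumes depends on the machine type, it can help to generate the value of basepath_persistent_memory_volumes instead of typing it. The following is a minimal sketch; the function name persistent_memory_volumes is hypothetical, and the sketch assumes the /hana/tmpfsN/SID directory layout used in this guide.

```shell
# Hypothetical helper: print the semicolon-separated list of tmpfs paths
# for the basepath_persistent_memory_volumes parameter.
# $1 = SID in uppercase letters, $2 = number of NUMA nodes.
persistent_memory_volumes() {
  local sid="$1"
  local count="$2"
  local i=0
  local paths=""
  while [ "$i" -lt "$count" ]; do
    # Add a semicolon separator only between entries, not before the first.
    paths="${paths:+${paths};}/hana/tmpfs${i}/${sid}"
    i=$((i + 1))
  done
  printf '%s\n' "$paths"
}
```

For example, persistent_memory_volumes AHA 8 produces the eight-volume value that an m2-ultramem-416 VM needs.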

Specify the tables to store in persistent memory

Specify the column tables or partitions to store in persistent memory.

For example, to turn on persistent memory for an existing table, execute the SQL query:

ALTER TABLE exampletable persistent memory ON immediate CASCADE

To change the default for new tables, add the parameter table_default in the indexserver.ini file. For example:

[persistent_memory]
table_default = ON

For more information on how to control columns and tables, and on which monitoring views provide detailed information, see SAP HANA Persistent Memory.

Automated steps

The automation script that Google Cloud provides to enable SAP HANA Fast Restart changes the /hana/tmpfs* directories, the /etc/fstab file, and the SAP HANA configuration. When you run the script, you might need to perform additional steps depending on whether this is the initial deployment of your SAP HANA system or you are resizing your machine to a different NUMA size.

For the initial deployment of your SAP HANA system, or when you resize the machine to increase the number of NUMA nodes, make sure that SAP HANA is running while the automation script runs.

When you resize your machine to decrease the number of NUMA nodes, make sure that SAP HANA is stopped while the automation script runs. After the script finishes, you need to manually update the SAP HANA configuration to complete the SAP HANA Fast Restart setup. For more information, see SAP HANA configuration for Fast Restart.

To enable SAP HANA Fast Restart, follow these steps:

Establish an SSH connection with your host VM.

Switch to root:

sudo su -

Download the sap_lib_hdbfr.sh script:

wget https://storage.googleapis.com/cloudsapdeploy/terraform/latest/terraform/lib/sap_lib_hdbfr.sh

Make the file executable:

chmod +x sap_lib_hdbfr.sh

Verify that the script has no errors:

vi sap_lib_hdbfr.sh
./sap_lib_hdbfr.sh -help

If the command returns an error, contact Cloud Customer Care. For more information about contacting Customer Care, see Getting support for SAP on Google Cloud.

Run the script after replacing the SAP HANA system ID (SID) and the password for the SYSTEM user of the SAP HANA database. To securely provide the password, we recommend that you use a secret in Secret Manager.

Run the script by using the name of a secret in Secret Manager. This secret must exist in the Google Cloud project that contains your host VM instance.

sudo ./sap_lib_hdbfr.sh -h 'SID' -s SECRET_NAME

Replace the following:

- SID: specify the SID with uppercase letters. For example, AHA.

- SECRET_NAME: specify the name of the secret that corresponds to the password for the SYSTEM user of the SAP HANA database. This secret must exist in the Google Cloud project that contains your host VM instance.

Alternatively, you can run the script using a plain text password. After SAP HANA Fast Restart is enabled, make sure to change your password. Using a plain text password is not recommended because the password is recorded in the command-line history of your VM.

sudo ./sap_lib_hdbfr.sh -h 'SID' -p 'PASSWORD'

Replace the following:

- SID: specify the SID with uppercase letters. For example, AHA.

- PASSWORD: specify the password for the SYSTEM user of the SAP HANA database.

For a successful initial run, you should see an output similar to the following:

INFO - Script is running in standalone mode

ls: cannot access '/hana/tmpfs*': No such file or directory

INFO - Setting up HANA Fast Restart for system 'TST/00'.

INFO - Number of NUMA nodes is 2

INFO - Number of directories /hana/tmpfs* is 0

INFO - HANA version 2.57

INFO - No directories /hana/tmpfs* exist. Assuming initial setup.

INFO - Creating 2 directories /hana/tmpfs* and mounting them

INFO - Adding /hana/tmpfs* entries to /etc/fstab. Copy is in /etc/fstab.20220625_030839

INFO - Updating the HANA configuration.

INFO - Running command: select * from dummy

DUMMY

"X"

1 row selected (overall time 4124 usec; server time 130 usec)

INFO - Running command: ALTER SYSTEM ALTER CONFIGURATION ('global.ini', 'SYSTEM') SET ('persistence', 'basepath_persistent_memory_volumes') = '/hana/tmpfs0/TST;/hana/tmpfs1/TST;'

0 rows affected (overall time 3570 usec; server time 2239 usec)

INFO - Running command: ALTER SYSTEM ALTER CONFIGURATION ('global.ini', 'SYSTEM') SET ('persistent_memory', 'table_unload_action') = 'retain';

0 rows affected (overall time 4308 usec; server time 2441 usec)

INFO - Running command: ALTER SYSTEM ALTER CONFIGURATION ('indexserver.ini', 'SYSTEM') SET ('persistent_memory', 'table_default') = 'ON';

0 rows affected (overall time 3422 usec; server time 2152 usec)

Download SUSE packages

Uninstall the resource agents used for scale-up deployments and replace them with the resource agents used for scale-out.

For SLES for SAP 15 SP6 or later, using the SAPHanaSR-angi package is recommended. For SLES for SAP 15 SP5 or earlier, you can use the SAPHanaSR-ScaleOut and SAPHanaSR-ScaleOut-doc packages.

Manual steps

Perform the following steps on all hosts, including the majority maker instance:

Uninstall the SAP HANA scale-up resource agents:

zypper remove SAPHanaSR SAPHanaSR-doc

Install the SAP HANA scale-out resource agents:

SLES for SAP 15 SP5 or earlier

zypper in SAPHanaSR-ScaleOut SAPHanaSR-ScaleOut-doc

SLES for SAP 15 SP6 or later

zypper install SAPHanaSR-angi

Install socat:

zypper install socat

Install the latest operating system patches:

zypper patch

Automated steps

Alternatively, to automate this process for all instances listed in nodes.txt, run the following script from the Google Cloud console:

SLES for SAP 15 SP5 or earlier

while read -u10 ZONE HOST ; do gcloud compute ssh --zone $ZONE $HOST -- "sudo zypper remove -y SAPHanaSR SAPHanaSR-doc; sudo zypper in -y SAPHanaSR-ScaleOut SAPHanaSR-ScaleOut-doc socat; sudo zypper patch -y"; done 10< nodes.txt

SLES for SAP 15 SP6 or later

while read -u10 ZONE HOST ; do gcloud compute ssh --zone $ZONE $HOST -- "sudo zypper remove -y SAPHanaSR SAPHanaSR-doc; sudo zypper in -y SAPHanaSR-angi socat; sudo zypper patch -y"; done 10< nodes.txt

Back up the databases

Create backups of your databases to initiate database logging for SAP HANA system replication and create a recovery point.

If you have multiple tenant databases in an MDC configuration, back up each tenant database.

The Deployment Manager template uses /hanabackup/data/SID as the default backup directory.

To create backups of new SAP HANA databases:

On the primary host, switch to SID_LCadm. Depending on your OS image, the command might be different.

sudo -i -u SID_LCadm

Create database backups:

For a SAP HANA single-database-container system:

> hdbsql -t -u system -p SYSTEM_PASSWORD -i INST_NUM \
  "backup data using file ('full')"

The following example shows a successful response from a new SAP HANA system:

0 rows affected (overall time 18.416058 sec; server time 18.414209 sec)

For a SAP HANA multi-database-container system (MDC), create a backup of the system database as well as any tenant databases:

> hdbsql -t -d SYSTEMDB -u system -p SYSTEM_PASSWORD -i INST_NUM \
  "backup data using file ('full')"
> hdbsql -t -d SID -u system -p SYSTEM_PASSWORD -i INST_NUM \
  "backup data using file ('full')"

The following example shows a successful response from a new SAP HANA system:

0 rows affected (overall time 16.590498 sec; server time 16.588806 sec)

Confirm that the logging mode is set to normal:

> hdbsql -u system -p SYSTEM_PASSWORD -i INST_NUM \
  "select value from "SYS"."M_INIFILE_CONTENTS" where key='log_mode'"

You should see:

VALUE
"normal"

Enable SAP HANA system replication

As a part of enabling SAP HANA system replication, you need to copy the data and key files for the SAP HANA secure stores on the file system (SSFS) from the primary host to the secondary host. The method that this procedure uses to copy the files is just one possible method that you can use.

On the primary host as SID_LCadm, enable system replication:

> hdbnsutil -sr_enable --name=PRIMARY_HOST_NAME

On the secondary host:

As SID_LCadm, stop SAP HANA:

> sapcontrol -nr INST_NUM -function StopSystem

As root, archive the existing SSFS data and key files:

# cd /usr/sap/SID/SYS/global/security/rsecssfs/
# mv data/SSFS_SID.DAT data/SSFS_SID.DAT-ARC
# mv key/SSFS_SID.KEY key/SSFS_SID.KEY-ARC

Copy the data file from the primary host:

# scp -o StrictHostKeyChecking=no \
  PRIMARY_HOST_NAME:/usr/sap/SID/SYS/global/security/rsecssfs/data/SSFS_SID.DAT \
  /usr/sap/SID/SYS/global/security/rsecssfs/data/SSFS_SID.DAT

Copy the key file from the primary host:

# scp -o StrictHostKeyChecking=no \
  PRIMARY_HOST_NAME:/usr/sap/SID/SYS/global/security/rsecssfs/key/SSFS_SID.KEY \
  /usr/sap/SID/SYS/global/security/rsecssfs/key/SSFS_SID.KEY

Update ownership of the files:

# chown SID_LCadm:sapsys /usr/sap/SID/SYS/global/security/rsecssfs/data/SSFS_SID.DAT
# chown SID_LCadm:sapsys /usr/sap/SID/SYS/global/security/rsecssfs/key/SSFS_SID.KEY

Update permissions for the files:

# chmod 644 /usr/sap/SID/SYS/global/security/rsecssfs/data/SSFS_SID.DAT
# chmod 640 /usr/sap/SID/SYS/global/security/rsecssfs/key/SSFS_SID.KEY

As SID_LCadm, register the secondary SAP HANA system with SAP HANA system replication:

> hdbnsutil -sr_register --remoteHost=PRIMARY_HOST_NAME --remoteInstance=INST_NUM \
  --replicationMode=syncmem --operationMode=logreplay --name=SECONDARY_HOST_NAME

As SID_LCadm, start SAP HANA:

> sapcontrol -nr INST_NUM -function StartSystem

Validating system replication

On the primary host as SID_LCadm, confirm that SAP HANA system replication is active by running the following Python script:

$ python $DIR_INSTANCE/exe/python_support/systemReplicationStatus.py

If replication is set up properly, among other indicators, the following values are displayed for the xsengine, nameserver, and indexserver services:

- The Secondary Active Status is YES

- The Replication Status is ACTIVE

Also, the overall system replication status shows ACTIVE.

Enable the SAP HANA HA/DR provider hooks

SUSE recommends that you enable the SAP HANA HA/DR provider hooks, which let SAP HANA send out notifications for certain events and improve failure detection.

The SAP HANA HA/DR provider hooks require SAP HANA 2.0 SPS 03 or a later version for the SAPHanaSR hook, and SAP HANA 2.0 SPS 05 or a later version for the SAPHanaSR-angi hook.

On both the primary and secondary site, complete the following steps:

As SID_LCadm, stop SAP HANA:

> sapcontrol -nr 00 -function StopSystem

As root or SID_LCadm, open the global.ini file for editing:

> vi /hana/shared/SID/global/hdb/custom/config/global.ini

Add the following definitions to the global.ini file:

SLES for SAP 15 SP5 or earlier

[ha_dr_provider_saphanasrmultitarget]
provider = SAPHanaSrMultiTarget
path = /usr/share/SAPHanaSR-ScaleOut/
execution_order = 1

[ha_dr_provider_sustkover]
provider = susTkOver
path = /usr/share/SAPHanaSR-ScaleOut/
execution_order = 2
sustkover_timeout = 30

[ha_dr_provider_suschksrv]
provider = susChkSrv
path = /usr/share/SAPHanaSR-ScaleOut/
execution_order = 3
action_on_lost = stop

[trace]
ha_dr_saphanasrmultitarget = info
ha_dr_sustkover = info

SLES for SAP 15 SP6 or later

[ha_dr_provider_susHanaSR]
provider = susHanaSR
path = /usr/share/SAPHanaSR-angi
execution_order = 1

[ha_dr_provider_suschksrv]
provider = susChkSrv
path = /usr/share/SAPHanaSR-angi
execution_order = 3
action_on_lost = stop

[ha_dr_provider_susTkOver]
provider = susTkOver
path = /usr/share/SAPHanaSR-angi
execution_order = 1
sustkover_timeout = 30

[trace]
ha_dr_sushanasr = info
ha_dr_suschksrv = info
ha_dr_sustkover = info

As root, create a custom configuration file in the /etc/sudoers.d directory by running the following command. This new configuration file allows the SID_LCadm user to access the cluster node attributes when the srConnectionChanged() hook method is called.

> visudo -f /etc/sudoers.d/SAPHanaSR

In the /etc/sudoers.d/SAPHanaSR file, add the following text:

SLES for SAP 15 SP5 or earlier

Replace SID_LC with the SID in lowercase letters.

SID_LCadm ALL=(ALL) NOPASSWD: /usr/sbin/crm_attribute -n hana_SID_LC_site_srHook_*
SID_LCadm ALL=(ALL) NOPASSWD: /usr/sbin/crm_attribute -n hana_SID_LC_gsh *
SID_LCadm ALL=(ALL) NOPASSWD: /usr/sbin/SAPHanaSR-hookHelper --sid=SID_LC *

SLES for SAP 15 SP6 or later

Replace the following:

- SITE_A: the site name of the primary SAP HANA server

- SITE_B: the site name of the secondary SAP HANA server

- SID_LC: the SID, specified using lowercase letters

To find the site names, run the crm_mon -A1 | grep site command as the root user, on either the SAP HANA primary server or the secondary server.

Cmnd_Alias SOK_SITEA = /usr/sbin/crm_attribute -n hana_SID_LC_site_srHook_SITE_A -v SOK -t crm_config -s SAPHanaSR
Cmnd_Alias SFAIL_SITEA = /usr/sbin/crm_attribute -n hana_SID_LC_site_srHook_SITE_A -v SFAIL -t crm_config -s SAPHanaSR
Cmnd_Alias SOK_SITEB = /usr/sbin/crm_attribute -n hana_SID_LC_site_srHook_SITE_B -v SOK -t crm_config -s SAPHanaSR
Cmnd_Alias SFAIL_SITEB = /usr/sbin/crm_attribute -n hana_SID_LC_site_srHook_SITE_B -v SFAIL -t crm_config -s SAPHanaSR
SID_LCadm ALL=(ALL) NOPASSWD: /usr/bin/SAPHanaSR-hookHelper --sid=SID --case=*
SID_LCadm ALL=(ALL) NOPASSWD: SOK_SITEA, SFAIL_SITEA, SOK_SITEB, SFAIL_SITEB

In your /etc/sudoers file, make sure that the following text is included:

For SLES for SAP 15 SP3 or later:

@includedir /etc/sudoers.d

For versions up to SLES for SAP 15 SP2:

#includedir /etc/sudoers.d

Note that the # in this text is part of the syntax and does not mean that the line is a comment.

As SID_LCadm, start SAP HANA:

> sapcontrol -nr 00 -function StartSystem

After you complete the cluster configuration for SAP HANA, you can verify that the hook functions correctly during a failover test as described in Troubleshooting the SAPHanaSR python hook and HA cluster takeover takes too long on HANA indexserver failure.

Configure the Cloud Load Balancing failover support

The internal passthrough Network Load Balancer service with failover support routes traffic to the active host in an SAP HANA cluster based on a health check service.

Reserve an IP address for the virtual IP

The virtual IP (VIP) address, which is sometimes referred to as a floating IP address, follows the active SAP HANA system. The load balancer routes traffic that is sent to the VIP to the VM that is currently hosting the active SAP HANA system.

Open Cloud Shell:

Reserve an IP address for the virtual IP. This is the IP address that applications use to access SAP HANA. If you omit the --addresses flag, an IP address in the specified subnet is chosen for you:

$ gcloud compute addresses create VIP_NAME \
  --region CLUSTER_REGION --subnet CLUSTER_SUBNET \
  --addresses VIP_ADDRESS

For more information about reserving a static IP, see Reserving a static internal IP address.

Confirm IP address reservation:

$ gcloud compute addresses describe VIP_NAME \
  --region CLUSTER_REGION

You should see output similar to the following example:

address: 10.0.0.19
addressType: INTERNAL
creationTimestamp: '2020-05-20T14:19:03.109-07:00'
description: ''
id: '8961491304398200872'
kind: compute#address
name: vip-for-hana-ha
networkTier: PREMIUM
purpose: GCE_ENDPOINT
region: https://www.googleapis.com/compute/v1/projects/example-project-123456/regions/us-central1
selfLink: https://www.googleapis.com/compute/v1/projects/example-project-123456/regions/us-central1/addresses/vip-for-hana-ha
status: RESERVED
subnetwork: https://www.googleapis.com/compute/v1/projects/example-project-123456/regions/us-central1/subnetworks/example-subnet-us-central1

Create instance groups for your host VMs

In Cloud Shell, create two unmanaged instance groups and assign the primary master host VM to one and the secondary master host VM to the other:

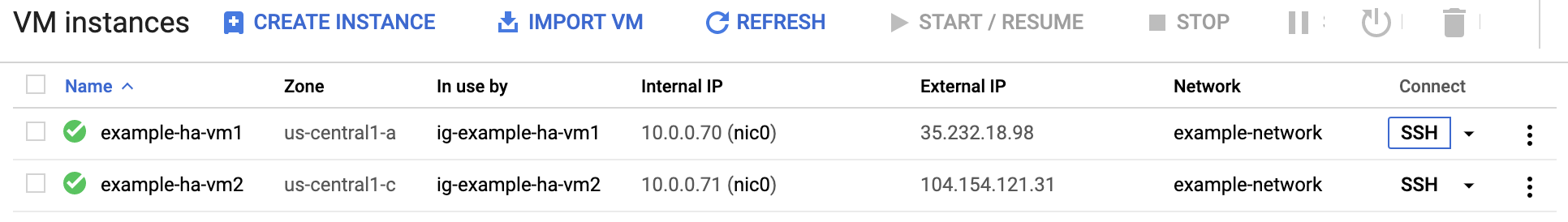

$ gcloud compute instance-groups unmanaged create PRIMARY_IG_NAME \
  --zone=PRIMARY_ZONE
$ gcloud compute instance-groups unmanaged add-instances PRIMARY_IG_NAME \
  --zone=PRIMARY_ZONE \
  --instances=PRIMARY_HOST_NAME
$ gcloud compute instance-groups unmanaged create SECONDARY_IG_NAME \
  --zone=SECONDARY_ZONE
$ gcloud compute instance-groups unmanaged add-instances SECONDARY_IG_NAME \
  --zone=SECONDARY_ZONE \
  --instances=SECONDARY_HOST_NAME

Confirm the creation of the instance groups:

$ gcloud compute instance-groups unmanaged list

You should see output similar to the following example:

NAME          ZONE           NETWORK          NETWORK_PROJECT         MANAGED  INSTANCES
hana-ha-ig-1  us-central1-a  example-network  example-project-123456  No       1
hana-ha-ig-2  us-central1-c  example-network  example-project-123456  No       1

Create a Compute Engine health check

In Cloud Shell, create the health check. For the port used by the health check, choose a port that is in the private range, 49152-65535, to avoid clashing with other services. The check-interval and timeout values are slightly longer than the defaults so as to increase failover tolerance during Compute Engine live migration events. You can adjust the values, if necessary:

$ gcloud compute health-checks create tcp HEALTH_CHECK_NAME --port=HLTH_CHK_PORT_NUM \
  --proxy-header=NONE --check-interval=10 --timeout=10 --unhealthy-threshold=2 \
  --healthy-threshold=2

Confirm the creation of the health check:

$ gcloud compute health-checks describe HEALTH_CHECK_NAME

You should see output similar to the following example:

checkIntervalSec: 10
creationTimestamp: '2020-05-20T21:03:06.924-07:00'
healthyThreshold: 2
id: '4963070308818371477'
kind: compute#healthCheck
name: hana-health-check
selfLink: https://www.googleapis.com/compute/v1/projects/example-project-123456/global/healthChecks/hana-health-check
tcpHealthCheck:
  port: 60000
  portSpecification: USE_FIXED_PORT
  proxyHeader: NONE
timeoutSec: 10
type: TCP
unhealthyThreshold: 2
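Before you create the health check, you might want to confirm that the port you picked falls inside the private range, 49152-65535, that this section recommends. The following is a minimal sketch of such a check; the function name is_private_port is hypothetical and is not part of the gcloud CLI.

```shell
# Hypothetical helper: succeed only if $1 is a whole number in the
# private port range 49152-65535.
is_private_port() {
  case "$1" in
    # Reject empty input and anything that contains a non-digit.
    ''|*[!0-9]*) return 1 ;;
  esac
  [ "$1" -ge 49152 ] && [ "$1" -le 65535 ]
}
```

For example, is_private_port 60000 succeeds, while is_private_port 22 fails because port 22 is outside the private range.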

Create a firewall rule for the health checks

Define a firewall rule for a port in the private range that allows access to your host VMs from the IP ranges that are used by Compute Engine health checks, 35.191.0.0/16 and 130.211.0.0/22. For more information, see Creating firewall rules for health checks.

If you don't already have one, add a network tag to your host VMs. This network tag is used by the firewall rule for health checks.

$ gcloud compute instances add-tags PRIMARY_HOST_NAME \
  --tags NETWORK_TAGS \
  --zone PRIMARY_ZONE
$ gcloud compute instances add-tags SECONDARY_HOST_NAME \
  --tags NETWORK_TAGS \
  --zone SECONDARY_ZONE

If you don't already have one, create a firewall rule to allow the health checks:

$ gcloud compute firewall-rules create RULE_NAME \
  --network NETWORK_NAME \
  --action ALLOW \
  --direction INGRESS \
  --source-ranges 35.191.0.0/16,130.211.0.0/22 \
  --target-tags NETWORK_TAGS \
  --rules tcp:HLTH_CHK_PORT_NUM

For example:

gcloud compute firewall-rules create fw-allow-health-checks \
  --network example-network \
  --action ALLOW \
  --direction INGRESS \
  --source-ranges 35.191.0.0/16,130.211.0.0/22 \
  --target-tags cluster-ntwk-tag \
  --rules tcp:60000

Configure the load balancer and failover group

Create the load balancer backend service:

$ gcloud compute backend-services create BACKEND_SERVICE_NAME \
  --load-balancing-scheme internal \
  --health-checks HEALTH_CHECK_NAME \
  --no-connection-drain-on-failover \
  --drop-traffic-if-unhealthy \
  --failover-ratio 1.0 \
  --region CLUSTER_REGION \
  --global-health-checks

Add the primary instance group to the backend service:

$ gcloud compute backend-services add-backend BACKEND_SERVICE_NAME \
  --instance-group PRIMARY_IG_NAME \
  --instance-group-zone PRIMARY_ZONE \
  --region CLUSTER_REGION

Add the secondary, failover instance group to the backend service:

$ gcloud compute backend-services add-backend BACKEND_SERVICE_NAME \
  --instance-group SECONDARY_IG_NAME \
  --instance-group-zone SECONDARY_ZONE \
  --failover \
  --region CLUSTER_REGION

Create a forwarding rule. For the IP address, specify the IP address that you reserved for the VIP. If you need to access the SAP HANA system from outside of the region that is specified below, include the flag --allow-global-access in the definition:

$ gcloud compute forwarding-rules create RULE_NAME \
  --load-balancing-scheme internal \
  --address VIP_ADDRESS \
  --subnet CLUSTER_SUBNET \
  --region CLUSTER_REGION \
  --backend-service BACKEND_SERVICE_NAME \
  --ports ALL

For more information about cross-region access to your SAP HANA high-availability system, see Internal TCP/UDP Load Balancing.

Test the load balancer configuration

Even though your backend instance groups won't register as healthy until later, you can test the load balancer configuration by setting up a listener to respond to the health checks. After setting up a listener, if the load balancer is configured correctly, the status of the backend instance groups changes to healthy.

The following sections present different methods that you can use to test the configuration.

Testing the load balancer with the socat utility

You can use the socat utility to temporarily listen on the health check port. You need to install the socat utility anyway, because you use it later when you configure cluster resources.

On both primary and secondary master host VMs as root, install the socat utility:

# zypper install -y socat

Start a socat process to listen for 60 seconds on the health check port:

# timeout 60s socat - TCP-LISTEN:HLTH_CHK_PORT_NUM,fork

In Cloud Shell, after waiting a few seconds for the health check to detect the listener, check the health of your backend instance groups:

$ gcloud compute backend-services get-health BACKEND_SERVICE_NAME \
  --region CLUSTER_REGION

You should see output similar to the following:

---
backend: https://www.googleapis.com/compute/v1/projects/example-project-123456/zones/us-central1-a/instanceGroups/hana-ha-ig-1
status:
  healthStatus:
  - healthState: HEALTHY
    instance: https://www.googleapis.com/compute/v1/projects/example-project-123456/zones/us-central1-a/instances/hana-ha-vm-1
    ipAddress: 10.0.0.35
    port: 80
  kind: compute#backendServiceGroupHealth
---
backend: https://www.googleapis.com/compute/v1/projects/example-project-123456/zones/us-central1-c/instanceGroups/hana-ha-ig-2
status:
  healthStatus:
  - healthState: HEALTHY
    instance: https://www.googleapis.com/compute/v1/projects/example-project-123456/zones/us-central1-c/instances/hana-ha-vm-2
    ipAddress: 10.0.0.34
    port: 80
  kind: compute#backendServiceGroupHealth

Testing the load balancer using port 22

If port 22 is open for SSH connections on your host VMs, you can temporarily edit the health checker to use port 22, which has a listener that can respond to the health checker.

To temporarily use port 22, follow these steps:

Click your health check in the console:

Click Edit.

In the Port field, change the port number to 22.

Click Save and wait a minute or two.

In Cloud Shell, check the health of your backend instance groups:

$ gcloud compute backend-services get-health BACKEND_SERVICE_NAME \
  --region CLUSTER_REGION

You should see output similar to the following:

---
backend: https://www.googleapis.com/compute/v1/projects/example-project-123456/zones/us-central1-a/instanceGroups/hana-ha-ig-1
status:
  healthStatus:
  - healthState: HEALTHY
    instance: https://www.googleapis.com/compute/v1/projects/example-project-123456/zones/us-central1-a/instances/hana-ha-vm-1
    ipAddress: 10.0.0.35
    port: 80
  kind: compute#backendServiceGroupHealth
---
backend: https://www.googleapis.com/compute/v1/projects/example-project-123456/zones/us-central1-c/instanceGroups/hana-ha-ig-2
status:
  healthStatus:
  - healthState: HEALTHY
    instance: https://www.googleapis.com/compute/v1/projects/example-project-123456/zones/us-central1-c/instances/hana-ha-vm-2
    ipAddress: 10.0.0.34
    port: 80
  kind: compute#backendServiceGroupHealth

When you are done, change the health check port number back to the original port number.

Set up Pacemaker

The following procedure configures the SUSE implementation of a Pacemaker cluster on Compute Engine VMs for SAP HANA.

For more information about configuring high-availability clusters on SLES, see the SUSE Linux Enterprise High Availability Extension documentation for your version of SLES.

Initialize the cluster

On the primary host as root, initialize the cluster:

SLES 15

crm cluster init -y

SLES 12

ha-cluster-init -y

Ignore the warnings related to SBD and default password. SBD and default password are not used in this deployment.

Configure the cluster

Perform the following steps on the primary host as root.

Enable maintenance mode

Put the Pacemaker cluster in maintenance mode:

crm configure property maintenance-mode="true"

Configure the general cluster properties

Configure the following general cluster properties:

crm configure property stonith-timeout="300s"
crm configure property stonith-action="reboot"
crm configure property stonith-enabled="true"
crm configure property cluster-infrastructure="corosync"
crm configure property cluster-name="hacluster"
crm configure property placement-strategy="balanced"
crm configure property no-quorum-policy="freeze"
crm configure property concurrent-fencing="true"
crm configure rsc_defaults migration-threshold="50"
crm configure rsc_defaults resource-stickiness="1000"
crm configure op_defaults timeout="600"

Edit the corosync.conf default settings

Open the

/etc/corosync/corosync.conffile using an editor of your choice.Remove the

consensusparameter.Modify the remaining parameters according to Google Cloud's recommendations.

The following table shows the totem parameters for which Google Cloud recommends values, along with the impact of changing the values. For the default values of these parameters, which can differ between Linux distributions, see the documentation for your Linux distribution.

| Parameter | Recommended value | Impact of changing the value |
| --- | --- | --- |
| secauth | off | Disables authentication and encryption of all totem messages. |
| join | 60 (ms) | Increases how long the node waits for join messages in the membership protocol. |
| max_messages | 20 | Increases the maximum number of messages that might be sent by the node after receiving the token. |
| token | 20000 (ms) | Increases how long the node waits for a totem protocol token before the node declares a token loss, assumes a node failure, and starts taking action. Increasing the value of the token parameter makes the cluster more tolerant of momentary infrastructure events, such as a live migration. However, it can also make the cluster take longer to detect and recover from a node failure. The value of the token parameter also determines the default value of the consensus parameter, which controls how long a node waits for consensus to be achieved before it attempts to re-establish configuration membership. |
| consensus | N/A | Specifies, in milliseconds, how long to wait for consensus to be achieved before starting a new round of membership configuration. We recommend that you omit this parameter. When the consensus parameter is not specified, Corosync sets its value to 1.2 times the value of the token parameter. If you use the token parameter's recommended value of 20000, then the consensus parameter is set with the value 24000. If you explicitly specify a value for consensus, then make sure that the value is 24000 or 1.2*token, whichever is greater. |
| token_retransmits_before_loss_const | 10 | Increases the number of token retransmits that the node attempts before it concludes that the recipient node has failed and takes action. |
| transport | For SLES: udpu. For RHEL 8 or later: knet. For RHEL 7: udpu. | Specifies the transport mechanism used by corosync. |
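After editing the file, one way to double-check it is a small shell helper such as the sketch below. It is not part of the Google Cloud or SUSE tooling: the function name check_totem is hypothetical, the expected values are the SLES recommendations from the table above, and the sketch assumes that each setting sits on its own "key: value" line.

```shell
#!/bin/sh
# Hypothetical helper: compare the totem settings in a corosync.conf-style
# file against the values recommended in the table above (SLES transport).
# Assumes one "key: value" pair per line.
check_totem() {
  conf="$1"
  status=0
  for pair in secauth=off join=60 max_messages=20 token=20000 \
              token_retransmits_before_loss_const=10 transport=udpu; do
    key="${pair%%=*}"
    want="${pair#*=}"
    # Use the last uncommented "key: value" line found for this key.
    got="$(sed -n "s/^[[:space:]]*${key}[[:space:]]*:[[:space:]]*\([^[:space:]#]*\).*/\1/p" "$conf" | tail -n 1)"
    if [ "$got" != "$want" ]; then
      echo "MISMATCH ${key}: found '${got:-unset}', recommended '${want}'"
      status=1
    fi
  done
  # The guide recommends removing the consensus parameter entirely.
  if grep -Eq '^[[:space:]]*consensus[[:space:]]*:' "$conf"; then
    echo "MISMATCH consensus: parameter is set; the guide recommends removing it"
    status=1
  fi
  return "$status"
}

# Example (as root on a cluster node):
#   check_totem /etc/corosync/corosync.conf && echo "totem settings match"
```

The function only reads the file and reports differences, so any correction is still made by hand in the editor.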

Join all hosts to Pacemaker cluster

Join all other hosts, including the majority maker, to the Pacemaker cluster on the primary host:

Manual steps

SLES 15

crm cluster join -y -c PRIMARY_HOST_NAME

SLES 12

ha-cluster-join -y -c PRIMARY_HOST_NAME

Automated steps

Alternatively, to automate this process for all instances listed in nodes.txt, run the following script from Google Cloud console:

while read -u10 HOST; do
  echo "Joining $HOST to Pacemaker cluster"
  gcloud compute ssh --tunnel-through-iap --quiet --zone $HOST -- sudo ha-cluster-join -y -c PRIMARY_HOST_NAME
done 10< nodes.txt

Ignore the error message ERROR: cluster.join: Abort: Cluster is currently active that is triggered when joining the primary node to itself.

From any host as root, confirm that the cluster shows all nodes:

# crm_mon -s

You should see output similar to the following:

CLUSTER OK: 5 nodes online, 0 resources configured
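If you want to script this check, the following sketch parses a status line in the format shown above. The function names nodes_online and cluster_has_nodes are hypothetical, and the expected count of 5 comes from the example output; substitute the number of nodes in your own deployment. The sketch assumes the line starts with "CLUSTER OK:"; any other status yields no count and the check fails.

```shell
#!/bin/sh
# Hypothetical helper: extract the online-node count from one line of
# `crm_mon -s` output in the format shown in the example above.
nodes_online() {
  printf '%s\n' "$1" |
    sed -n 's/^CLUSTER OK: \([0-9][0-9]*\) nodes\{0,1\} online.*/\1/p'
}

# Succeeds only if the status line reports exactly the expected node count.
cluster_has_nodes() {
  expected="$1"
  status_line="$2"
  [ "$(nodes_online "$status_line")" = "$expected" ]
}

# Example (as root on a cluster node):
#   cluster_has_nodes 5 "$(crm_mon -s)" && echo "all nodes joined"
```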

Set up fencing

You set up fencing by defining a cluster resource with a fence agent for each host VM.

To ensure the correct sequence of events after a fencing action, you also configure the operating system to delay the restart of Corosync after a VM is fenced. You also adjust the Pacemaker timeout for reboots to account for the delay.

Create the fencing device resources

Manual steps

On the primary host, as root, create the fencing resources for all nodes in the primary and secondary cluster:

Run the following command after replacing PRIMARY_HOST_NAME with the hostname of a node in the primary cluster:

# crm configure primitive STONITH-"PRIMARY_HOST_NAME" stonith:fence_gce \
  op monitor interval="300s" timeout="120s" \
  op start interval="0" timeout="60s" \
  params port="PRIMARY_HOST_NAME" zone="PRIMARY_ZONE" project="PROJECT_ID" \
  pcmk_reboot_timeout=300 pcmk_monitor_retries=4 pcmk_delay_max=30

Repeat the previous step for all other nodes in the primary cluster.

Run the following command after replacing SECONDARY_HOST_NAME with the hostname of a node in the secondary cluster:

# crm configure primitive STONITH-"SECONDARY_HOST_NAME" stonith:fence_gce \
  op monitor interval="300s" timeout="120s" \
  op start interval="0" timeout="60s" \
  params port="SECONDARY_HOST_NAME" zone="SECONDARY_ZONE" project="PROJECT_ID" \
  pcmk_reboot_timeout=300 pcmk_monitor_retries=4

Repeat the previous step for all other nodes in the secondary cluster.

Run the following command after replacing MAJORITY_MAKER_HOSTNAME with the hostname of the majority maker instance:

# crm configure primitive STONITH-"MAJORITY_MAKER_HOSTNAME" stonith:fence_gce \
  op monitor interval="300s" timeout="120s" \
  op start interval="0" timeout="60s" \
  params port="MAJORITY_MAKER_HOSTNAME" zone="MAJORITY_MAKER_ZONE" project="PROJECT_ID" \
  pcmk_reboot_timeout=300 pcmk_monitor_retries=4

Set the location of the fencing device:

# crm configure location LOC_STONITH_"PRIMARY_HOST_NAME" \
  STONITH-"PRIMARY_HOST_NAME" -inf: "PRIMARY_HOST_NAME"

Repeat the previous step for all other hosts on the primary and secondary clusters including the majority maker host.
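Because the same two commands are repeated for every host, it can help to generate them rather than retype them. The following sketch prints the commands for one host without running them, so that you can review the text first. The function name fence_cmds and its argument order are hypothetical; the optional fourth argument carries extra parameters such as pcmk_delay_max=30, which the commands above set only for nodes in the primary cluster.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) the crm commands that create a
# fence_gce resource and its location constraint for one host.
# Arguments: HOST ZONE PROJECT [EXTRA_PARAMS]
fence_cmds() {
  host="$1"; zone="$2"; project="$3"; extra="${4:-}"
  cat <<EOF
crm configure primitive STONITH-"${host}" stonith:fence_gce \\
  op monitor interval="300s" timeout="120s" \\
  op start interval="0" timeout="60s" \\
  params port="${host}" zone="${zone}" project="${project}" \\
  pcmk_reboot_timeout=300 pcmk_monitor_retries=4${extra:+ $extra}
crm configure location LOC_STONITH_"${host}" \\
  STONITH-"${host}" -inf: "${host}"
EOF
}

# Example, using the instance names from the health-check output earlier
# in this guide:
#   fence_cmds hana-ha-vm-1 us-central1-a example-project-123456 pcmk_delay_max=30
#   fence_cmds hana-ha-vm-2 us-central1-c example-project-123456
```

After reviewing the printed commands, run them on the primary host as root.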

Set a delay for the restart of Corosync

Manual steps

On all hosts as root, create a systemd drop-in file that delays the startup of Corosync to ensure the proper sequence of events after a fenced VM is rebooted:

systemctl edit corosync.service

Add the following lines to the file:

[Service]
ExecStartPre=/bin/sleep 60

Save the file and exit the editor.

Reload the systemd manager configuration.

systemctl daemon-reload

Confirm the drop-in file was created:

service corosync status

You should see a line for the drop-in file, as shown in the following example:

● corosync.service - Corosync Cluster Engine
   Loaded: loaded (/usr/lib/systemd/system/corosync.service; disabled; vendor preset: disabled)
  Drop-In: /etc/systemd/system/corosync.service.d
           └─override.conf
   Active: active (running) since Tue 2021-07-20 23:45:52 UTC; 2 days ago

Automated steps

Alternatively, to automate this process for all instances listed in nodes.txt, run the following script from Google Cloud console:

while read -u10 HOST; do
  gcloud compute ssh --tunnel-through-iap --quiet --zone $HOST -- "sudo mkdir -p /etc/systemd/system/corosync.service.d/; sudo echo -e '[Service]\nExecStartPre=/bin/sleep 60' | sudo tee -a /etc/systemd/system/corosync.service.d/override.conf; sudo systemctl daemon-reload"
done 10< nodes.txt
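Note that the automated script appends to override.conf with tee -a, so running it more than once adds the same lines again. The following sketch shows one way to make the step repeatable on a single host. The function name ensure_corosync_delay is hypothetical, and the optional directory argument exists only so that the function can be tried against a scratch directory before it is used on a real host.

```shell
#!/bin/sh
# Hypothetical helper: write the Corosync start-delay drop-in only if it is
# not already present, so that re-running the step does not append duplicates.
# The directory argument defaults to the drop-in path used in this guide.
ensure_corosync_delay() {
  dir="${1:-/etc/systemd/system/corosync.service.d}"
  file="${dir}/override.conf"
  if [ -f "$file" ] && grep -qx 'ExecStartPre=/bin/sleep 60' "$file"; then
    echo "delay already configured in ${file}"
    return 0
  fi
  mkdir -p "$dir" || return 1
  printf '[Service]\nExecStartPre=/bin/sleep 60\n' >> "$file" || return 1
  echo "wrote ${file}"
}

# Example (as root on each host):
#   ensure_corosync_delay && systemctl daemon-reload
```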

Create a local cluster IP resource for the VIP address

To configure the VIP address in the operating system, create a local cluster IP resource for the VIP address that you reserved earlier:

# crm configure primitive rsc_vip_int-primary IPaddr2 \
  params ip=VIP_ADDRESS cidr_netmask=32 nic="eth0" op monitor interval=3600s timeout=60s

Set up the helper health-check service

The load balancer uses a listener on the health-check port of each host to determine where the primary instance of the SAP HANA cluster is running.

To manage the listeners in the cluster, you create a resource for the listener.

These instructions use the socat utility as the listener.

On both hosts as root, install the socat utility:

# zypper in -y socat

On the primary host, create a resource for the helper health-check service:

crm configure primitive rsc_healthcheck-primary anything \
  params binfile="/usr/bin/socat" \
  cmdline_options="-U TCP-LISTEN:HEALTHCHECK_PORT_NUM,backlog=10,fork,reuseaddr /dev/null" \
  op monitor timeout=20s interval=10s \
  op_params depth=0

Group the VIP and helper health-check service resources:

# crm configure group g-primary rsc_vip_int-primary rsc_healthcheck-primary meta resource-stickiness="0"

Create the SAPHanaTopology primitive resource

You define the SAPHanaTopology primitive resource in a temporary configuration file, which you then upload to Corosync.

On the primary host as root:

Create a temporary configuration file for the SAPHanaTopology configuration parameters:

# vi /tmp/cluster.tmp

Copy and paste the SAPHanaTopology resource definitions into the /tmp/cluster.tmp file:

SLES for SAP 15 SP5 or earlier

primitive rsc_SAPHanaTopology_SID_HDBINST_NUM ocf:suse:SAPHanaTopology \
  operations \$id="rsc_sap2_SID_HDBINST_NUM-operations" \
  op monitor interval="10" timeout="600" \
  op start interval="0" timeout="600" \
  op stop interval="0" timeout="300" \
  params SID="SID" InstanceNumber="INST_NUM"

clone cln_SAPHanaTopology_SID_HDBINST_NUM rsc_SAPHanaTopology_SID_HDBINST_NUM \
  meta clone-node-max="1" target-role="Started" interleave="true"
location SAPHanaTop_not_on_majority_maker cln_SAPHanaTopology_SID_HDBINST_NUM -inf: MAJORITY_MAKER_HOSTNAME

SLES for SAP 15 SP6 or later

primitive rsc_SAPHanaTopology_SID_HDBINST_NUM ocf:suse:SAPHanaTopology \
  operations \$id="rsc_sap2_SID_HDBINST_NUM-operations" \
  op monitor interval="10" timeout="600" \
  op start interval="0" timeout="600" \
  op stop interval="0" timeout="300" \
  params SID="SID" InstanceNumber="INST_NUM"

clone cln_SAPHanaTopology_SID_HDBINST_NUM rsc_SAPHanaTopology_SID_HDBINST_NUM \
  meta clone-node-max="1" interleave="true"
location SAPHanaTop_not_on_majority_maker cln_SAPHanaTopology_SID_HDBINST_NUM -inf: MAJORITY_MAKER_HOSTNAME

Edit the /tmp/cluster.tmp file to replace the variable text with the SID and instance number for your SAP HANA system.

On the primary host as root, load the contents of the /tmp/cluster.tmp file into Corosync:

crm configure load update /tmp/cluster.tmp
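The placeholder replacement in /tmp/cluster.tmp can also be scripted, but a plain search-and-replace of SID would also rewrite the parameter name in SID="SID". The following sketch avoids that by replacing only the placeholder positions. The function name fill_placeholders and the example values HA1, 00, and hana-ha-mm are hypothetical, and the sketch assumes that a name such as rsc_SAPHanaTopology_SID_HDBINST_NUM is meant to become rsc_SAPHanaTopology_HA1_HDB00.

```shell
#!/bin/sh
# Hypothetical helper: fill the SID, INST_NUM and MAJORITY_MAKER_HOSTNAME
# placeholders in a resource definition read from stdin.
# Arguments: SID INSTANCE_NUMBER MAJORITY_MAKER_HOSTNAME
fill_placeholders() {
  sid="$1"; inst="$2"; mm_host="$3"
  # 1. SID inside resource names (..._SID_HDB...)
  # 2. SID as the value of the SID parameter; the parameter name is kept
  # 3. INST_NUM in resource names and in InstanceNumber
  # 4. The majority maker hostname in location constraints
  sed -e "s/_SID_HDB/_${sid}_HDB/g" \
      -e "s/SID=\"SID\"/SID=\"${sid}\"/g" \
      -e "s/INST_NUM/${inst}/g" \
      -e "s/MAJORITY_MAKER_HOSTNAME/${mm_host}/g"
}

# Example: write a filled-in copy and review it before loading it.
#   fill_placeholders HA1 00 hana-ha-mm < /tmp/cluster.tmp > /tmp/cluster.filled
```

Review the filled-in file before you load it with crm configure load update.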

Create the SAPHana primitive resource

You define the SAPHana primitive resource by using the same method that you used for the SAPHanaTopology resource: in a temporary configuration file, which you then upload to Corosync.

Replace the temporary configuration file:

# rm /tmp/cluster.tmp
# vi /tmp/cluster.tmp

Copy and paste the SAPHana resource definitions into the /tmp/cluster.tmp file:

SLES for SAP 15 SP5 or earlier

primitive rsc_SAPHana_SID_HDBINST_NUM ocf:suse:SAPHanaController \
  operations \$id="rsc_sap_SID_HDBINST_NUM-operations" \
  op start interval="0" timeout="3600" \
  op stop interval="0" timeout="3600" \
  op promote interval="0" timeout="3600" \
  op demote interval="0" timeout="3600" \
  op monitor interval="60" role="Master" timeout="700" \
  op monitor interval="61" role="Slave" timeout="700" \
  params SID="SID" InstanceNumber="INST_NUM" PREFER_SITE_TAKEOVER="true" \
  DUPLICATE_PRIMARY_TIMEOUT="7200" AUTOMATED_REGISTER="true"

ms msl_SAPHana_SID_HDBINST_NUM rsc_SAPHana_SID_HDBINST_NUM \
  meta master-node-max="1" master-max="1" clone-node-max="1" \
  target-role="Started" interleave="true"

colocation col_saphana_ip_SID_HDBINST_NUM 4000: g-primary:Started \
  msl_SAPHana_SID_HDBINST_NUM:Master
order ord_SAPHana_SID_HDBINST_NUM Optional: cln_SAPHanaTopology_SID_HDBINST_NUM \
  msl_SAPHana_SID_HDBINST_NUM
location SAPHanaCon_not_on_majority_maker msl_SAPHana_SID_HDBINST_NUM -inf: MAJORITY_MAKER_HOSTNAME

SLES for SAP 15 SP6 or later

primitive rsc_SAPHana_SID_HDBINST_NUM ocf:suse:SAPHanaController \
  operations \$id="rsc_sap_SID_HDBINST_NUM-operations" \
  op start interval="0" timeout="3600" \
  op stop interval="0" timeout="3600" \
  op promote interval="0" timeout="3600" \
  op demote interval="0" timeout="3600" \
  op monitor interval="60" role="Promoted" timeout="700" \
  op monitor interval="61" role="Unpromoted" timeout="700" \
  params SID="SID" InstanceNumber="INST_NUM" PREFER_SITE_TAKEOVER="true" \
  DUPLICATE_PRIMARY_TIMEOUT="7200" AUTOMATED_REGISTER="true" \
  HANA_CALL_TIMEOUT="120"

clone mst_SAPHana_SID_HDBINST_NUM rsc_SAPHana_SID_HDBINST_NUM \
  meta clone-node-max="1" \
  target-role="Started" interleave="true"

colocation col_saphana_ip_SID_HDBINST_NUM 4000: g-primary:Started \
  mst_SAPHana_SID_HDBINST_NUM:Promoted
order ord_SAPHana_SID_HDBINST_NUM Optional: cln_SAPHanaTopology_SID_HDBINST_NUM \
  mst_SAPHana_SID_HDBINST_NUM
location SAPHanaCon_not_on_majority_maker mst_SAPHana_SID_HDBINST_NUM -inf: MAJORITY_MAKER_HOSTNAME

For a multi-tier SAP HANA HA cluster, if you are using a version earlier than SAP HANA 2.0 SP03, set AUTOMATED_REGISTER to false. This prevents a recovered instance from attempting to self-register for replication to a HANA system that already has a replication target configured. For SAP HANA 2.0 SP03 or later, you can set AUTOMATED_REGISTER to true for SAP HANA configurations that use multitier system replication. For additional information, see:

On the primary host as root, load the contents of the /tmp/cluster.tmp file into Corosync:

crm configure load update /tmp/cluster.tmp