This document describes a reference architecture for using BigQuery ML to build a machine learning solution in your SAP environment, by using the on-premises or any cloud edition of ABAP SDK for Google Cloud. With the help of the reference architecture explained in this document, you can build solutions that extract data-driven insights to accelerate your business decision-making processes within SAP.

The intended audience for this document includes ABAP developers, SAP solution architects, and cloud architects. The document assumes that you're familiar with BigQuery and SQL.

Architecture

The following diagram shows a reference architecture for a machine learning solution that provides business insights based on the data from an SAP ERP system:

This machine learning solution architecture includes the following components:

| Component | Subsystem | Details |

|---|---|---|

| 1 | Data source | An SAP ERP system such as SAP S/4HANA, which is the source of enterprise data. |

| 2 | Data warehouse | BigQuery, which stores raw data from source systems and ML generated insights. |

| 3 | Machine learning: Training | Machine learning models, which are trained on source data to generate business insights. |

| 4 | Machine learning: Serve Insights | Components that return ML generated business insights for consumption to SAP. |

Products used

This reference architecture uses the following Google Cloud products:

- ABAP SDK for Google Cloud: Helps you develop ABAP applications that connect your SAP systems to Google Cloud services, such as BigQuery.

- BigQuery: Helps you manage and analyze your enterprise data.

Use cases

BigQuery ML empowers SAP users to derive valuable insights for improved decision-making and business outcomes by using the enterprise data stored in BigQuery. This section provides examples of use cases for which you can use BigQuery ML to build machine learning solutions.

Forecasting

Forecasting in BigQuery ML involves analyzing historical

time series data to predict future trends. For example, you can analyze

historical sales data from several store locations to predict

future sales at those locations. You can perform forecasting by

using the ML.FORECAST

function with the ARIMA_PLUS

or ARIMA_PLUS_XREG model.

Anomaly detection

Anomaly detection finds unusual patterns in data, enabling proactive response to potential problems or opportunities. With labeled data, you can utilize various supervised machine learning models for this task. You can use the linear and logistic regression, boosted trees, random forests, deep neural networks, wide-and-deep models, or AutoML model for anomaly detection.

Recommendation systems

Recommendation systems are powerful tools for businesses, helping users discover relevant content within vast collections. These systems use machine learning to suggest items that users might not find on their own, overcoming the limitations of search. Recommendation systems primarily employ two approaches:

- Content-based filtering, which recommends items based on similarity.

- Collaborative filtering, which uses similarities between users and their preferences.

Design considerations

This section provides guidance to help you use this reference architecture to develop architectures that help you to meet your specific requirements for security, privacy, compliance, cost, and performance.

Security, privacy, and compliance

To implement data governance policies, you can use BigQuery features. Data governance manages data security and quality throughout its lifecycle to ensure access and accuracy comply with policies and regulations. For more information, see Introduction to data governance in BigQuery.

Cost and performance

For an estimate of the cost of the Google Cloud resources that the data warehouse with BigQuery solution uses, see the precalculated estimate in the Google Cloud Pricing Calculator.

As you begin to scale with BigQuery, you have a number of ways available to help improve your query performance and to reduce your total spend. These methods include changing how your data is physically stored, modifying your SQL queries, and using slot reservations to ensure cost performance. For more information about ways to help scale and run your data warehouse, see Introduction to optimizing query performance.

Design alternative

While this document focuses on the on-premises or any cloud edition of ABAP SDK for Google Cloud, you can achieve similar results by using the SAP BTP edition of ABAP SDK for Google Cloud. You can adapt the provided resources to build similar solutions within your SAP BTP environment.

Deployment

This section shows you how to load data from your source SAP system into BigQuery, create a machine learning model suitable for your business requirements, and finally generate insights that can be used for business decisions in SAP.

Before you begin

Before implementing a solution based on this reference architecture, make sure that you have completed the following prerequisites:

You have a Google Cloud account and project.

Billing is enabled for your project. For information about how to confirm that billing is enabled for your project, see Verify the billing status of your projects.

The on-premises or any cloud edition of ABAP SDK for Google Cloud is installed and configured.

Authentication to access Google Cloud APIs is set up. For information about how to set up authentication, see Authentication overview for the on-premises or any cloud edition of ABAP SDK for Google Cloud.

The BigQuery API is enabled in your Google Cloud project.

Load data from an SAP source system into BigQuery

To load data from an SAP source system into a BigQuery table by

using the ABAP SDK for Google Cloud, you use the INSERT_ALL_TABLEDATA method

of the /GOOG/CL_BIGQUERY_V2 class. This data serves as the foundation

for training the machine learning models.

The following code sample illustrates how to load data from an SAP source system into a BigQuery table:

TRY.

DATA(lo_client) = NEW /goog/cl_bigquery_v2( iv_key_name = 'CLIENT_KEY' ).

TYPES:

BEGIN OF t_timeseries,

date TYPE string, "YYYY-MM-DD

value TYPE string, "Numeric value

END OF t_timeseries.

DATA: lt_data TYPE STANDARD TABLE OF t_timeseries.

"Developer TODO - populate lt_data

DATA: ls_input TYPE /goog/cl_bigquery_v2=>ty_133.

LOOP AT lt_data INTO FIELD-SYMBOL(<ls_data>).

APPEND INITIAL LINE TO ls_input-rows ASSIGNING FIELD-SYMBOL(<ls_row>).

CREATE DATA <ls_row>-json TYPE t_timeseries.

FIELD-SYMBOLS: <lfs_json> TYPE t_timeseries.

ASSIGN <ls_row>-json->* TO <lfs_json> CASTING.

<lfs_json> = <ls_data>.

ENDLOOP.

"Call API method: bigquery.tabledata.insertAll

CALL METHOD lo_client->insert_all_tabledata

EXPORTING

iv_p_dataset_id = 'BIGQUERY_DATASET'

iv_p_project_id = CONV #( lo_client->gv_project_id )

iv_p_table_id = 'BIGQUERY_TABLE'

is_input = ls_input

IMPORTING

es_output = DATA(ls_output)

ev_ret_code = DATA(lv_ret_code)

ev_err_text = DATA(lv_err_text).

lo_client->close( ).

CATCH cx_root.

"handle error

ENDTRY.

Replace the following:

CLIENT_KEY: The client key configured for authentication.BIGQUERY_DATASET: The ID of the target BigQuery dataset.BIGQUERY_TABLE: The ID of the target BigQuery table.

Alternatively, if you are looking for a no-code BigQuery data ingestion solution that handles both initial load and delta (CDC) handling, then you could consider BigQuery Connector for SAP, Cloud Data Fusion, or SAP Datasphere.

Create a machine learning model

BigQuery provides a comprehensive list of machine learning models and AI resources

to derive insights from enterprise data. One such model is ARIMA_PLUS,

which you can use to forecast future time series values based on historical data.

For example, you can use the ARIMA_PLUS model to predict future sales volumes based

on historical data. To train a new model with the SAP data that you have loaded

into BigQuery, you use the CREATE MODEL statement. Depending on

the size of your data, this might take a few minutes or hours to run. After

the model is created, you can view

the model in BigQuery Studio.

To create and train your model, run the CREATE MODEL statement:

In the Google Cloud console, click the Compose new query button.

Enter the following GoogleSQL query in the Query editor:

CREATE OR REPLACE MODEL `MODEL_NAME` OPTIONS (model_type = 'ARIMA_PLUS', time_series_timestamp_col = 'TIME_SERIES_TIMESTAMP_COL', time_series_data_col = 'TIME_SERIES_DATA_COL', auto_arima = TRUE, data_frequency = 'AUTO_FREQUENCY', decompose_time_series = TRUE ) AS SELECT {timestamp_column_name}, {timeseries_data_column_name} FROM `{BigQuery_table}`Replace the following:

MODEL_NAME: The name of your model that you have trained for generating insights.TIME_SERIES_TIMESTAMP_COL: The name of the column that provides the time points used in training the model.TIME_SERIES_DATA_COL: The name of the column that contains the data to forecast.

For more information about the arguments, see the

CREATE MODELsyntax.Click Run. Your model appears in the navigation panel.

Generate and serve insights

You can generate insights by using your machine learning model.

For example, if you created an ARIMA_PLUS model, then you can use it to

generate insights for time series values such as future sales predictions.

Generate insights

To generate insights based on a trained model, perform the following steps:

In the Google Cloud console, click the Compose new query button.

Enter the following GoogleSQL query in the Query editor:

SELECT * FROM ML.FORECAST(MODEL `MODEL_NAME`, STRUCT(HORIZON AS horizon, CONFIDENCE_LEVEL AS confidence_level));Replace the following:

MODEL_NAME: The name of your model that you have trained for generating insights.HORIZON: AnINT64value that specifies the number of time points to forecast.CONFIDENCE_LEVEL: AFLOAT64value that specifies percentage of the future values that fall in the prediction interval.

For more information about the arguments, see the The

ML.FORECASTfunction.Click Run. The ML model provides an output of forecasted values for future dates.

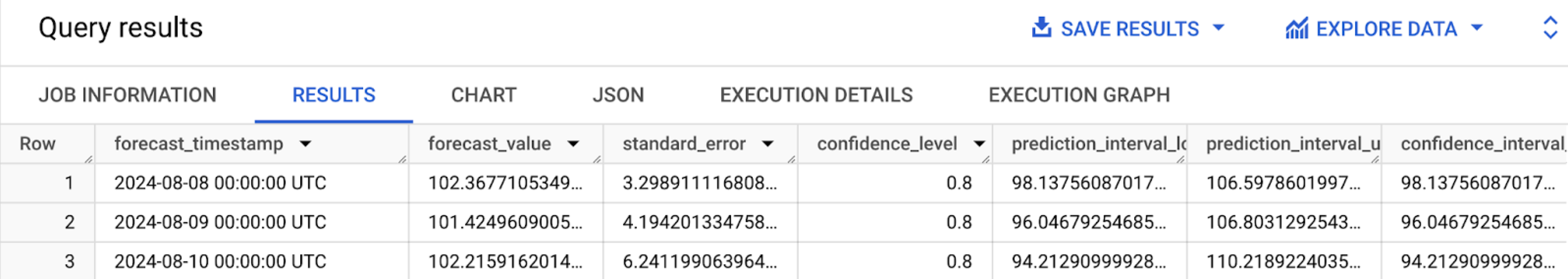

When the query is complete, click the Results tab. The results should look like the following:

Serve insights

To serve generated predictions for data driven business decisions

from your SAP application by

using the ABAP SDK for Google Cloud, you use the QUERY_JOBS method of the /GOOG/CL_BIGQUERY_V2 class.

To help you deploy the example solution explained in this section with minimal

effort, a code sample is

provided in GitHub.

This code sample shows an end-to-end example of generating forecasts

of future time series values by using the ARIMA_PLUS model and the on-premises or any cloud edition of ABAP SDK for Google Cloud.

What's next

- For an overview of BigQuery ML, see Introduction to BigQuery ML.

- To evaluate the available BigQuery machine learning models for your business process and requirements, see End-to-end user journey for each model.

- Instead of performing ad-hoc queries to get BigQuery ML predictions, you can use Cloud Run to automatically generate insights for new or changed data in BigQuery. The generated insights can be written to a temporary BigQuery table, and then retrieved by an SAP application.

- To extract greater value and insights from your SAP data, you can use the Google Cloud Cortex Data Foundation that provides a scalable and extensible data foundation, along with prebuilt data models and pipelines for common business use cases related to SAP.

- To learn more about the Google Cloud console, see Using the Google Cloud console.

- To learn about ABAP SDK for Google Cloud, see Overview of ABAP SDK for Google Cloud.

If you need help resolving problems with the ABAP SDK for Google Cloud, then do the following:

- Refer to the ABAP SDK for Google Cloud troubleshooting guide.

- Ask your questions and discuss the ABAP SDK for Google Cloud with the community on Cloud Forums.

- Collect all available diagnostic information and contact Cloud Customer Care. For information about contacting Customer Care, see Getting support for SAP on Google Cloud.

Contributors

Author: Ajith Urimajalu | SAP Application Engineer Team Lead

Other contributor: Vikash Kumar | Technical Writer