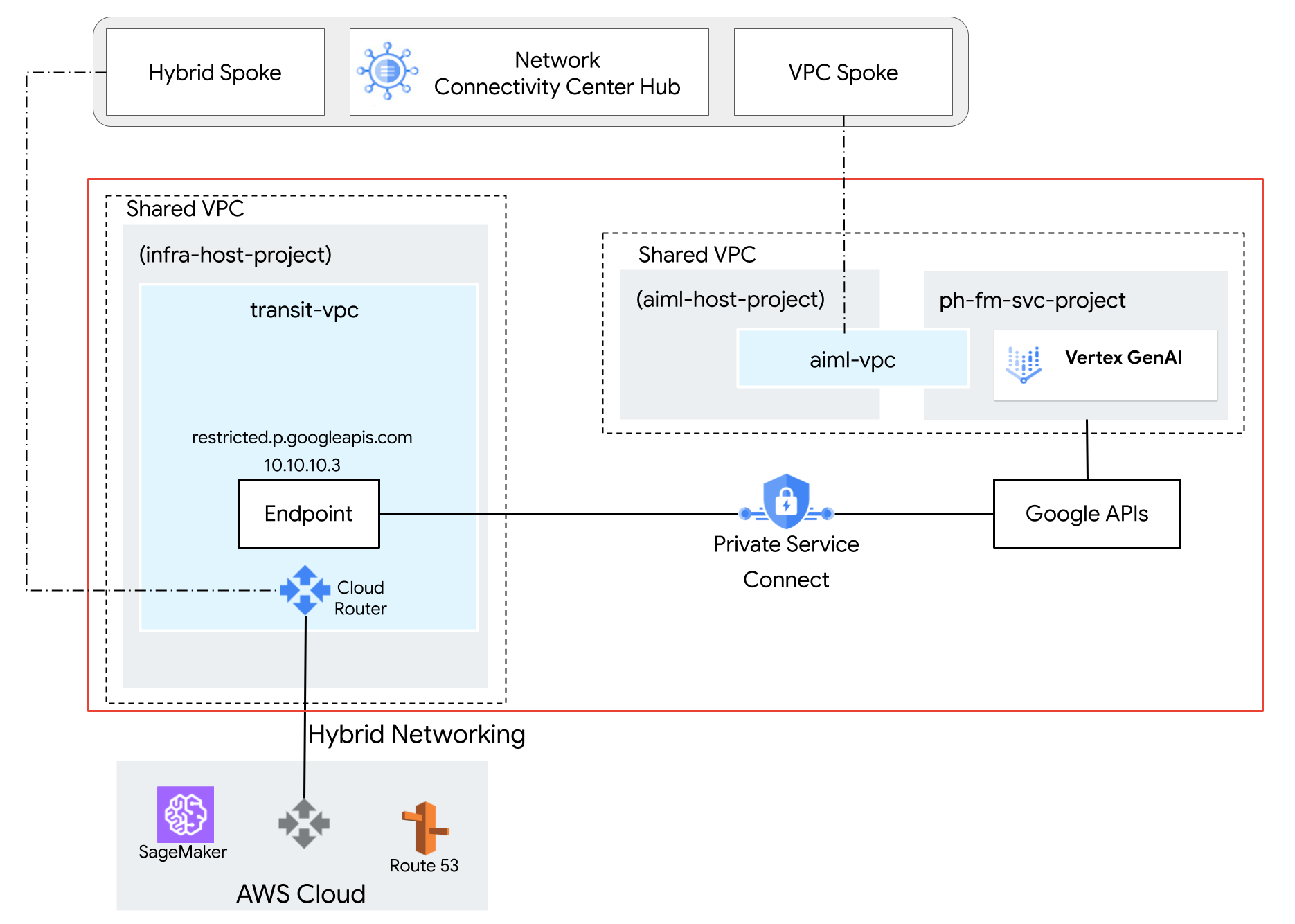

Reference architecture

In the following reference architecture, a Shared VPC is deployed with a Gemini model in the service project, ph-fm-svc-project (foundation model service project), with the service policy attributes allowing private access to the Vertex AI API from AWS:

- A single VPC Service Controls perimeter

- Project-defined user identity

Optional: Create the access level

If your end users require access to Vertex AI through the Google Cloud console, follow the instructions in this section to create a VPC Service Controls access level. However, if programmatic access to APIs is from private sources (such as on premises with Private Google Access or Cloud Workstations), then the access level is not required.

In this reference architecture, we're using a corporate CIDR range, corp-public-block, to allow corporate employee traffic to access the Google Cloud console.

Access Context Manager allows Google Cloud organization administrators to define fine-grained, attribute-based access control for projects and resources in Google Cloud.

Access levels describe the requirements for requests to be honored. Examples include:

- Device type and operating system (requires Chrome Enterprise Premium license)

- IP address

- User identity

If this is the organization's first time using Access Context Manager, then administrators must define an access policy, which is a container for access levels and service perimeters.

In the project selector at the top of the Google Cloud console, click the All tab, and then select your organization.

Create a basic access level by following the directions in the Create a basic access level page. Specify the following options:

- Under Create conditions in, choose Basic mode.

- In the Access level title field, enter corp-public-block.

- In the Conditions section, for the When condition is met, return option, choose TRUE.

- Under IP Subnetworks, choose Public IP.

- For the IP address range, specify your external CIDR range that requires access into the VPC Service Controls perimeter.
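The effect of an IP-based condition like the one above can be pictured with a short sketch. This is only an illustrative model of CIDR matching, not how Access Context Manager is implemented. The range 203.0.113.0/24 is a documentation-only placeholder standing in for your real corp-public-block range, and the function name is hypothetical.

```python
import ipaddress

# Placeholder range standing in for corp-public-block (assumption, not from the source).
CORP_PUBLIC_BLOCK = ["203.0.113.0/24"]


def matches_access_level(source_ip, cidr_ranges):
    """Return True if source_ip falls inside any of the allowed CIDR ranges."""
    address = ipaddress.ip_address(source_ip)
    return any(address in ipaddress.ip_network(cidr) for cidr in cidr_ranges)


# A request from inside the corporate range satisfies the condition.
print(matches_access_level("203.0.113.25", CORP_PUBLIC_BLOCK))
# A request from any other public address does not.
print(matches_access_level("198.51.100.7", CORP_PUBLIC_BLOCK))
```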

Build the VPC Service Controls service perimeter

When you create a service perimeter, you allow access to protected services from outside the perimeter by specifying the protected projects. When using VPC Service Controls with Shared VPC, you create one large perimeter including both the host and service projects. (If you only select the service project in your perimeter, network endpoints belonging to service projects appear to be outside the perimeter, because the subnets are associated only with the host project.)
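As a rough pre-flight check for the Shared VPC rule described above, the following sketch lists host projects that are missing from a planned perimeter. The function name is hypothetical, and the mapping from service project to host project is an assumption made for illustration; substitute the mapping from your own environment.

```python
def missing_host_projects(perimeter_projects, host_of):
    """Return host projects that back a perimeter member but are not in the perimeter."""
    members = set(perimeter_projects)
    return sorted(
        {
            host
            for service, host in host_of.items()
            if service in members and host not in members
        }
    )


# Assumed mapping for illustration only.
host_of = {"ph-fm-svc-project": "aiml-host-project"}

# Selecting only the service project leaves its host outside the perimeter.
print(missing_host_projects(["ph-fm-svc-project"], host_of))
# Including the host project resolves the gap.
print(missing_host_projects(["ph-fm-svc-project", "aiml-host-project"], host_of))
```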

Select the configuration type for the new perimeter

In this section, you create a VPC Service Controls service perimeter in dry run mode. In dry run mode, the perimeter logs violations as though it were enforced but doesn't prevent access to restricted services. Using dry run mode before switching to enforced mode is recommended as a best practice.

In the Google Cloud console navigation menu, click Security, and then click VPC Service Controls.

On the VPC Service Controls page, click Dry run mode.

Click New perimeter.

On the New VPC Service Perimeter tab, in the Perimeter Name box, type a name for the perimeter. Otherwise, accept the default values.

A perimeter name can have a maximum length of 50 characters, must start with a letter, and can contain only ASCII Latin letters (a-z, A-Z), numbers (0-9), or underscores (_). The perimeter name is case-sensitive and must be unique within an access policy.
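The naming rules above can be checked before you open the console. The following is a minimal sketch of those rules as a regular expression; the function name and the sample names are hypothetical. Uniqueness within the access policy cannot be checked locally, so it is not covered here.

```python
import re

# Starts with a letter, then ASCII letters, digits, or underscores; 50 characters at most.
_PERIMETER_NAME = re.compile(r"[A-Za-z][A-Za-z0-9_]{0,49}")


def is_valid_perimeter_name(name):
    """Return True if name satisfies the length and character rules."""
    return _PERIMETER_NAME.fullmatch(name) is not None


print(is_valid_perimeter_name("gemini_perimeter"))  # letters and underscore only
print(is_valid_perimeter_name("gemini-perimeter"))  # hyphen is not allowed
print(is_valid_perimeter_name("1perimeter"))        # must start with a letter
```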

Select the resources to protect

Click Resources to protect.

To add projects or VPC networks that you want to secure within the perimeter, do the following:

Click Add Resources.

To add projects to the perimeter, in the Add resources pane, click Add project.

To select a project, in the Add projects dialog, select that project's checkbox. In this reference architecture, we select the following projects:

- infra-host-project

- aiml-host-project

- ph-fm-svc-project

Click Add selected resources. The added projects appear in the Projects section.

Select the restricted services

In this reference architecture, the scope of restricted APIs is limited, enabling only the necessary APIs required for Gemini. However, as a best practice, we recommend that you restrict all services when you create a perimeter to mitigate the risk of data exfiltration from Google Cloud services.

To select the services to secure within the perimeter, do the following:

Click Restricted Services.

In the Restricted Services pane, click Add services.

In the Specify services to restrict dialog, select Vertex AI API.

Click Add Vertex AI API.

Optional: Select the VPC accessible services

The VPC accessible services setting limits the set of services that are accessible from network endpoints inside your service perimeter. In this reference architecture, we're keeping the default setting of All Services.

Optional: Select the access level

If you created a corporate CIDR access level in an earlier section, do the following to allow access to protected resources from outside the perimeter:

Click Access Levels.

Click the Choose Access Level box.

You can also add access levels after a perimeter has been created.

Select the checkbox corresponding to the access level. (In this reference architecture, this is corp-public-block.)

Ingress and egress policies

In this reference architecture, there's no need to specify any settings in the Ingress Policy or Egress Policy panes.

Create the perimeter

Once you have completed the preceding configuration steps, create the perimeter by clicking Create perimeter.
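For reference, the console choices made in the preceding sections could be summarized as a data structure along the following lines. This is a sketch only: the field names reflect my understanding of the Access Context Manager ServicePerimeter resource and should be verified against the API reference. The policy ID, project numbers, perimeter name, and access level short name are all hypothetical placeholders.

```python
def build_dry_run_perimeter(policy_id, name, project_numbers, access_level=None):
    """Assemble a dry run perimeter description that restricts the Vertex AI API."""
    spec = {
        # Perimeter members are referenced by project number.
        "resources": [f"projects/{number}" for number in project_numbers],
        # Vertex AI API is the only restricted service in this architecture.
        "restrictedServices": ["aiplatform.googleapis.com"],
    }
    if access_level:
        spec["accessLevels"] = [
            f"accessPolicies/{policy_id}/accessLevels/{access_level}"
        ]
    return {
        "name": f"accessPolicies/{policy_id}/servicePerimeters/{name}",
        "title": name,
        "perimeterType": "PERIMETER_TYPE_REGULAR",
        # Dry run configuration lives under "spec" rather than "status".
        "useExplicitDryRunSpec": True,
        "spec": spec,
    }


# All identifiers below are placeholders for illustration.
perimeter = build_dry_run_perimeter(
    policy_id="1234567890",
    name="gemini_perimeter",
    project_numbers=[111111111111, 222222222222, 333333333333],
    access_level="corp_public_block",
)
print(perimeter["spec"]["restrictedServices"])
```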

Configure network connectivity between AWS and Google APIs

Configure Private Service Connect for Google APIs

Using Private Service Connect to access Google APIs is an alternative to using Private Google Access or the public domain names for Google APIs. In this case, the producer is Google.

Using Private Service Connect lets you do the following:

- Create one or more internal IP addresses to access Google APIs for different use cases.

- Direct on-premises traffic to specific IP addresses and regions when accessing Google APIs.

- Create a custom endpoint DNS name used to resolve Google APIs.

In the reference architecture, a Private Service Connect Google API endpoint named restricted, with IP address 10.10.10.3, is deployed with the target VPC-SC. It is used as a virtual IP (VIP) to access the restricted services configured in the VPC-SC perimeter. Targeting non-restricted services with the VIP is not supported. For more information, see About accessing the Vertex AI API.

Configure AWS VPC network

Network connectivity between Amazon Web Services (AWS) and Google Cloud is established using High-Availability Virtual Private Network (HA VPN) tunnels. This secure connection facilitates private communication between the two cloud environments. To exchange routes between resources in AWS and Google Cloud, Border Gateway Protocol (BGP) is used.

In the Google Cloud environment, a custom route advertisement is required. This custom route specifically advertises the Private Service Connect Google API IP address to the AWS network. By advertising this IP address, AWS can establish a direct route to the Google API, bypassing the public internet and improving performance.

In the reference architecture, a SageMaker instance is deployed in association with the AWS VPC where the VPN to Google Cloud is established. Border Gateway Protocol (BGP) is used to advertise routes across HA VPN between the AWS and Google Cloud networks. As a result, Google Cloud and AWS can route bidirectional traffic over the VPN. For more information about setting up HA VPN connections, see Create HA VPN connections between Google Cloud and AWS.
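To see why the custom route advertisement matters, consider how a route table picks the most specific matching route. The following sketch models longest-prefix matching with a default route and a /32 route for the Private Service Connect IP address. The route targets ("internet-gateway", "vpn-gateway") are hypothetical labels, and the /32 prefix length is an assumption about how the address is advertised.

```python
import ipaddress


def select_route(destination, routes):
    """Return the target of the most specific route that contains destination."""
    address = ipaddress.ip_address(destination)
    matching = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in routes.items()
        if address in ipaddress.ip_network(cidr)
    ]
    if not matching:
        return None
    # The longest prefix is the most specific match.
    return max(matching, key=lambda item: item[0].prefixlen)[1]


# Before the advertisement, only the default route matches the endpoint address.
routes_before = {"0.0.0.0/0": "internet-gateway"}
# After BGP propagates the advertised address, a more specific route exists.
routes_after = {**routes_before, "10.10.10.3/32": "vpn-gateway"}

print(select_route("10.10.10.3", routes_before))
print(select_route("10.10.10.3", routes_after))
```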

Configure Route 53 updates

Create a private hosted zone named p.googleapis.com in Amazon Route 53, and add the fully qualified domain name REGION-aiplatform-restricted.p.googleapis.com with the IP address 10.10.10.3 (the Private Service Connect Google APIs IP address) as the DNS A record.

When the Jupyter Notebook SDK performs a DNS lookup for the Vertex AI API to reach Gemini, Route 53 returns the Private Service Connect Google APIs IP address. Jupyter Notebook uses the IP address obtained from Route 53 to establish a connection to the Private Service Connect Google APIs endpoint, routed through HA VPN into Google Cloud.
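If you prefer to script the record instead of using the Route 53 console, the A record described above could be expressed as a change batch like the following sketch. The function name, the UPSERT action, and the TTL of 300 seconds are assumptions for illustration; the sketch only builds the payload and does not call AWS.

```python
def build_psc_record_change(region, psc_ip, ttl=300):
    """Build a Route 53 change batch for the Private Service Connect A record."""
    return {
        "Comment": "Private Service Connect endpoint for the Vertex AI API",
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": f"{region}-aiplatform-restricted.p.googleapis.com",
                    "Type": "A",
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": psc_ip}],
                },
            }
        ],
    }


change_batch = build_psc_record_change("us-central1", "10.10.10.3")
print(change_batch["Changes"][0]["ResourceRecordSet"]["Name"])
```

A payload of this shape can be passed as the ChangeBatch argument of the boto3 Route 53 client's change_resource_record_sets call, together with the ID of your private hosted zone.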

Configure SageMaker updates

This reference architecture uses Amazon SageMaker Notebook instances to access the Vertex AI API. However, you can achieve the same setup with other compute services supporting VPC, such as Amazon EC2 or AWS Lambda.

To authenticate your requests, you can either use a Google Cloud service account key or use Workload Identity Federation. For information about setting up Workload Identity Federation, see On-premises or another cloud provider.

The Jupyter Notebook instance invokes an API call to the Gemini model hosted in Google Cloud by resolving the custom Private Service Connect Google APIs fully qualified domain name REGION-aiplatform-restricted.p.googleapis.com, which overrides the default fully qualified domain name (REGION-aiplatform.googleapis.com).
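The two naming patterns above differ only in the endpoint suffix. A small sketch makes the substitution explicit; the function names are hypothetical, and the endpoint_name default of restricted matches the Private Service Connect endpoint name used in this reference architecture.

```python
def default_endpoint(region):
    """Return the default regional Vertex AI API hostname."""
    return f"{region}-aiplatform.googleapis.com"


def psc_endpoint(region, endpoint_name="restricted"):
    """Return the Private Service Connect hostname for the Vertex AI API."""
    return f"{region}-aiplatform-{endpoint_name}.p.googleapis.com"


print(default_endpoint("us-central1"))
print(psc_endpoint("us-central1"))
```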

The Vertex AI API can be called using REST, gRPC, or the SDK. To use the Private Service Connect custom fully qualified domain name, update the API_ENDPOINT in Jupyter Notebook with the following:

Instructions for using Vertex AI SDK for Python

Install the SDK:

    pip install --upgrade google-cloud-aiplatform

Import the dependencies:

    from google.cloud import aiplatform
    from vertexai.generative_models import GenerativeModel, Part, SafetySetting
    import vertexai
    import base64

Initialize the following variables:

    PROJECT_ID = "ph-fm-svc-project"  # Google Cloud project ID
    LOCATION_ID = "us-central1"  # Vertex AI Gemini region, such as us-central1
    API_ENDPOINT = "us-central1-aiplatform-restricted.p.googleapis.com"  # PSC endpoint
    MODEL_ID = "gemini-2.0-flash-001"  # Gemini model ID

Initialize the Vertex AI SDK for Python:

    vertexai.init(
        project=PROJECT_ID,
        location=LOCATION_ID,  # keeps the SDK region aligned with the endpoint
        api_endpoint=API_ENDPOINT,
        api_transport="rest",
    )

Make the following request to the Vertex AI Gemini API:

    from vertexai.generative_models import GenerativeModel, SafetySetting

    generation_config = {
        "max_output_tokens": 8192,
        "temperature": 1,
        "top_p": 0.95,
    }

    # Safety filters are turned off for every harm category in this example.
    safety_settings = [
        SafetySetting(
            category=SafetySetting.HarmCategory.HARM_CATEGORY_HATE_SPEECH,
            threshold=SafetySetting.HarmBlockThreshold.OFF,
        ),
        SafetySetting(
            category=SafetySetting.HarmCategory.HARM_CATEGORY_DANGEROUS_CONTENT,
            threshold=SafetySetting.HarmBlockThreshold.OFF,
        ),
        SafetySetting(
            category=SafetySetting.HarmCategory.HARM_CATEGORY_SEXUALLY_EXPLICIT,
            threshold=SafetySetting.HarmBlockThreshold.OFF,
        ),
        SafetySetting(
            category=SafetySetting.HarmCategory.HARM_CATEGORY_HARASSMENT,
            threshold=SafetySetting.HarmBlockThreshold.OFF,
        ),
    ]

    def generate(model_id, prompt):
        """Stream a Gemini response for the prompt and print it as it arrives."""
        model = GenerativeModel(model_id)
        responses = model.generate_content(
            [prompt],
            generation_config=generation_config,
            safety_settings=safety_settings,
            stream=True,
        )
        for response in responses:
            print(response.text, end="")

    prompt = "which weighs more: 1kg feathers or 1kg stones"
    generate(MODEL_ID, prompt)

At this point, you can call Gemini, hosted in Google Cloud, from the Jupyter notebook. If the call is successful, the output looks like the following:

They weigh the same. Both weigh 1 kilogram.

Instructions for using the Vertex AI REST API

In this section, you set up some important variables that will be used throughout the process. These variables store information about your project, such as the location of your resources, the specific Gemini model, and the Private Service Connect endpoint you want to use.

Open a terminal window inside a Jupyter notebook.

Initialize the following environment variables:

    export PROJECT_ID="ph-fm-svc-project"
    export LOCATION_ID="us-central1"
    export API_ENDPOINT="us-central1-aiplatform-restricted.p.googleapis.com"
    export MODEL_ID="gemini-2.0-flash-001"

Use a text editor such as vim or nano to create a new file named request.json that contains the following formatted request for the Vertex AI Gemini API:

    {
      "contents": [
        {
          "role": "user",
          "parts": [
            {
              "text": "which weighs more: 1kg feathers or 1kg stones"
            }
          ]
        }
      ],
      "generationConfig": {
        "temperature": 1,
        "maxOutputTokens": 8192,
        "topP": 0.95,
        "seed": 0
      },
      "safetySettings": [
        {
          "category": "HARM_CATEGORY_HATE_SPEECH",
          "threshold": "OFF"
        },
        {
          "category": "HARM_CATEGORY_DANGEROUS_CONTENT",
          "threshold": "OFF"
        },
        {
          "category": "HARM_CATEGORY_SEXUALLY_EXPLICIT",
          "threshold": "OFF"
        },
        {
          "category": "HARM_CATEGORY_HARASSMENT",
          "threshold": "OFF"
        }
      ]
    }

Make the following cURL request to the Vertex AI Gemini API:

    curl -v \
      -X POST \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer $(gcloud auth print-access-token)" \
      "https://$API_ENDPOINT/v1/projects/$PROJECT_ID/locations/$LOCATION_ID/publishers/google/models/$MODEL_ID:streamGenerateContent" \
      -d '@request.json'
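If you would rather issue the same REST call from Python without the SDK, the request can be assembled with the standard library. The sketch below only builds the request object and does not send it. The function name is hypothetical, the body is trimmed to the prompt alone, and the access token is a placeholder that you would obtain separately (for example, from gcloud auth print-access-token).

```python
import json
import urllib.request


def build_gemini_request(api_endpoint, project_id, location_id, model_id, prompt, access_token):
    """Build a POST request for streamGenerateContent on the PSC endpoint."""
    url = (
        f"https://{api_endpoint}/v1/projects/{project_id}"
        f"/locations/{location_id}/publishers/google/models/{model_id}"
        ":streamGenerateContent"
    )
    body = {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",
        },
        method="POST",
    )


request = build_gemini_request(
    api_endpoint="us-central1-aiplatform-restricted.p.googleapis.com",
    project_id="ph-fm-svc-project",
    location_id="us-central1",
    model_id="gemini-2.0-flash-001",
    prompt="which weighs more: 1kg feathers or 1kg stones",
    access_token="ACCESS_TOKEN",  # placeholder, not a real token
)
print(request.full_url)
```

Passing the request to urllib.request.urlopen from the SageMaker notebook would send it over the HA VPN path, provided DNS resolves the endpoint to the Private Service Connect IP address.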

Validate your perimeter in dry run mode

In this reference architecture, the service perimeter is configured in dry run mode, letting you test the effect of the access policy without enforcing it. This means that you can see how your policies would impact your environment if they were active, but without the risk of disrupting legitimate traffic.

After validating your perimeter in dry run mode, switch it to enforced mode.
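The difference between the two modes can be summarized in a small conceptual model. This is an illustration of the behavior described in this document, not VPC Service Controls code; the Decision type, the evaluate function, and the mode strings are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    allowed: bool
    violation_logged: bool


def evaluate(request_within_policy, mode):
    """Model how a perimeter treats a request in dry run or enforced mode."""
    if mode not in ("dry_run", "enforced"):
        raise ValueError(f"unknown mode: {mode}")
    if request_within_policy:
        return Decision(allowed=True, violation_logged=False)
    # A violating request is always logged, but only enforced mode blocks it.
    return Decision(allowed=(mode == "dry_run"), violation_logged=True)


print(evaluate(False, "dry_run"))
print(evaluate(False, "enforced"))
```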

What's next

- Learn how to use p.googleapis.com DNS names.

- To learn how to validate your perimeter in dry run mode, watch the VPC Service Controls dry run logging video.

- Learn how to use the Vertex AI REST API.

- Learn more about using the Vertex AI SDK for Python.