This page shows you how to enable Cloud Trace for your agent and how to view traces so that you can analyze query response times and the operations that were executed.

Um rastreamento

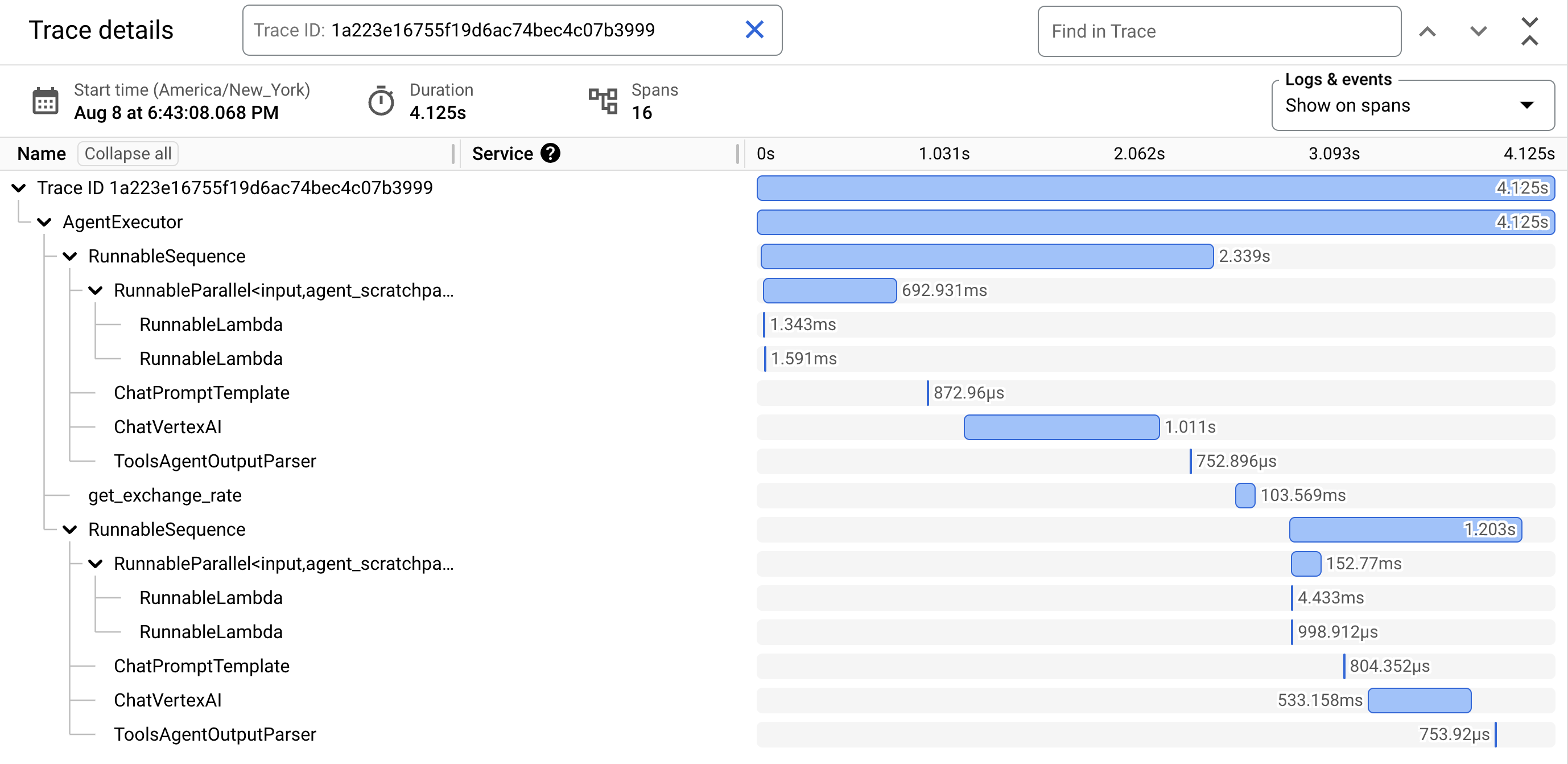

é uma linha do tempo de solicitações à medida que o agente responde a cada consulta. Por exemplo, o gráfico de Gantt a seguir mostra um trace de amostra de um LangchainAgent:

The first row in the Gantt chart is for the trace. A trace is composed of individual spans, each of which represents a single unit of work, such as a function call or an interaction with an LLM. The first span represents the overall request. Each span provides details about a specific operation, such as its name, its start and end times, and any relevant attributes within the request. For example, the following JSON shows a single span that represents a call to a large language model (LLM):

{
  "name": "llm",
  "context": {
    "trace_id": "ed7b336d-e71a-46f0-a334-5f2e87cb6cfc",
    "span_id": "ad67332a-38bd-428e-9f62-538ba2fa90d4"
  },
  "span_kind": "LLM",
  "parent_id": "f89ebb7c-10f6-4bf8-8a74-57324d2556ef",
  "start_time": "2023-09-07T12:54:47.597121-06:00",
  "end_time": "2023-09-07T12:54:49.321811-06:00",
  "status_code": "OK",
  "status_message": "",
  "attributes": {
    "llm.input_messages": [
      {
        "message.role": "system",
        "message.content": "You are an expert Q&A system that is trusted around the world.\nAlways answer the query using the provided context information, and not prior knowledge.\nSome rules to follow:\n1. Never directly reference the given context in your answer.\n2. Avoid statements like 'Based on the context, ...' or 'The context information ...' or anything along those lines."
      },
      {
        "message.role": "user",
        "message.content": "Hello?"
      }
    ],
    "output.value": "assistant: Yes I am here",
    "output.mime_type": "text/plain"
  },
  "events": []
}
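To make the span fields concrete, the following sketch parses an abridged copy of the span above with Python's standard library and computes how long the LLM call took from `start_time` and `end_time`. The helper name `span_duration_seconds` is hypothetical; the sketch only assumes that the timestamps are ISO 8601 strings with a UTC offset, as in the example.

```python
import json
from datetime import datetime

# Abridged copy of the example span (attributes omitted for brevity).
SPAN_JSON = """
{
  "name": "llm",
  "context": {
    "trace_id": "ed7b336d-e71a-46f0-a334-5f2e87cb6cfc",
    "span_id": "ad67332a-38bd-428e-9f62-538ba2fa90d4"
  },
  "span_kind": "LLM",
  "parent_id": "f89ebb7c-10f6-4bf8-8a74-57324d2556ef",
  "start_time": "2023-09-07T12:54:47.597121-06:00",
  "end_time": "2023-09-07T12:54:49.321811-06:00",
  "status_code": "OK"
}
"""


def span_duration_seconds(span: dict) -> float:
    """Return the elapsed time of a span in seconds (hypothetical helper)."""
    # fromisoformat() understands offsets such as "-06:00" (Python 3.7+).
    start = datetime.fromisoformat(span["start_time"])
    end = datetime.fromisoformat(span["end_time"])
    return (end - start).total_seconds()


span = json.loads(SPAN_JSON)
duration = span_duration_seconds(span)
print(f"{span['name']} span took {duration:.3f} s")  # prints "llm span took 1.725 s"
```

The `parent_id` field is what ties this span to the span that started it; both share the same `trace_id`.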

For more details, see the Cloud Trace documentation on Traces and spans and Trace context.
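The parent-child links between spans are what allow a viewer to draw a trace as a Gantt chart: the span without a parent is the root (the overall request), and every other span hangs under the span named by its `parent_id`. The sketch below rebuilds that tree from a flat list of spans and prints one indented row per span with its offset from the start of the request and its duration. All span IDs, names, and timings are invented for illustration, and the function name `gantt_rows` is hypothetical; real spans carry ISO 8601 timestamps like the example above.

```python
from collections import defaultdict

# Hypothetical spans from one trace. IDs, names, and timings are invented;
# times are in milliseconds relative to an arbitrary starting point.
SPANS = [
    {"span_id": "s1", "parent_id": None, "name": "agent_query", "start": 0.0, "end": 2400.0},
    {"span_id": "s2", "parent_id": "s1", "name": "llm", "start": 50.0, "end": 1200.0},
    {"span_id": "s3", "parent_id": "s1", "name": "tool:get_exchange_rate", "start": 1210.0, "end": 1500.0},
    {"span_id": "s4", "parent_id": "s1", "name": "llm", "start": 1510.0, "end": 2350.0},
]


def gantt_rows(spans):
    """Return (depth, name, offset_ms, duration_ms) tuples in depth-first order."""
    children = defaultdict(list)
    roots = []
    for span in spans:
        if span["parent_id"] is None:
            roots.append(span)  # The root span represents the overall request.
        else:
            children[span["parent_id"]].append(span)

    rows = []

    def visit(span, depth, trace_start):
        offset = span["start"] - trace_start
        duration = span["end"] - span["start"]
        rows.append((depth, span["name"], offset, duration))
        # Visit children in start-time order, as a Gantt chart lists its rows.
        for child in sorted(children[span["span_id"]], key=lambda s: s["start"]):
            visit(child, depth + 1, trace_start)

    for root in roots:
        visit(root, 0, root["start"])
    return rows


for depth, name, offset, duration in gantt_rows(SPANS):
    label = "  " * depth + name
    print(f"{label:<26} starts at +{offset:6.1f} ms, lasts {duration:6.1f} ms")
```

Sorting children by start time mirrors how a Gantt chart orders its rows, so slow steps (here, the first `llm` span) stand out when you compare durations.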

Write traces for an agent

To write traces for an agent:

ADK

To enable tracing for AdkApp, specify enable_tracing=True when you develop an Agent Development Kit agent.

For example:

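from vertexai.agent_engines import AdkApp
from google.adk.agents import Agent

# `model`, `agent_name`, and the `get_exchange_rate` tool are assumed to be
# defined already, as when you develop the agent.
agent = Agent(
    model=model,
    name=agent_name,
    tools=[get_exchange_rate],
)

app = AdkApp(
    agent=agent,  # Required.
    enable_tracing=True,  # Optional.
)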

LangchainAgent

To enable tracing for LangchainAgent, specify enable_tracing=True when you develop a LangChain agent.

For example:

from vertexai.agent_engines import LangchainAgent

# `model` and the `get_exchange_rate` tool are assumed to be defined already.
agent = LangchainAgent(
    model=model,  # Required.
    tools=[get_exchange_rate],  # Optional.
    enable_tracing=True,  # Optional.
)

LanggraphAgent

To enable tracing for LanggraphAgent, specify enable_tracing=True when you develop a LangGraph agent.

For example:

from vertexai.agent_engines import LanggraphAgent

# `model` and the `get_exchange_rate` tool are assumed to be defined already.
agent = LanggraphAgent(
    model=model,  # Required.
    tools=[get_exchange_rate],  # Optional.
    enable_tracing=True,  # Optional.
)

LlamaIndex

To enable tracing for LlamaIndexQueryPipelineAgent, specify enable_tracing=True when you develop a LlamaIndex agent.

For example:

from vertexai.preview import reasoning_engines


def runnable_with_tools_builder(model, runnable_kwargs=None, **kwargs):
    from llama_index.core.query_pipeline import QueryPipeline
    from llama_index.core.tools import FunctionTool
    from llama_index.core.agent import ReActAgent

    # Wrap each Python function passed in runnable_kwargs["tools"] as a LlamaIndex tool.
    llama_index_tools = []
    for tool in (runnable_kwargs or {}).get("tools", []):
        llama_index_tools.append(FunctionTool.from_defaults(tool))

    # Build a ReAct agent over the tools and expose it as a one-module query pipeline.
    agent = ReActAgent.from_tools(llama_index_tools, llm=model, verbose=True)
    return QueryPipeline(modules={"agent": agent})


# `get_exchange_rate` is assumed to be defined already.
agent = reasoning_engines.LlamaIndexQueryPipelineAgent(
    model="gemini-2.0-flash",
    runnable_kwargs={"tools": [get_exchange_rate]},
    runnable_builder=runnable_with_tools_builder,
    enable_tracing=True,  # Optional.
)

Custom

To enable tracing for custom agents, see Tracing using OpenTelemetry for details.

This exports the traces to Cloud Trace in the project that you configured in Set up your Google Cloud project.

View traces for an agent

You can view your traces by using Trace Explorer:

1. To get the permissions that you need to view trace data in the Google Cloud console or to select a trace scope, ask your administrator to grant you the Cloud Trace User (roles/cloudtrace.user) IAM role on your project.
2. Go to Trace Explorer in the Google Cloud console.
3. Select your Google Cloud project (corresponding to PROJECT_ID) at the top of the page.

To learn more, see the Cloud Trace documentation.

Quotas and limits

Some attribute values might be truncated when they reach quota limits. For more information, see Cloud Trace quota.
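If you set your own span attributes (for example, in a custom agent), you might prefer to shorten long values yourself so that the cut is predictable. Size limits of this kind are usually counted in bytes rather than characters, so a naive cut can split a multibyte character such as "ã". The sketch below trims a string to at most `max_bytes` UTF-8 bytes without splitting a character. The helper name `truncate_utf8` is hypothetical, and `max_bytes` is a parameter you must look up on the Cloud Trace quota page; the value in the usage line is only an illustration.

```python
def truncate_utf8(value: str, max_bytes: int, suffix: str = "...") -> str:
    """Shorten value so that its UTF-8 encoding fits in max_bytes (hypothetical helper)."""
    encoded = value.encode("utf-8")
    if len(encoded) <= max_bytes:
        return value
    suffix_bytes = suffix.encode("utf-8")
    budget = max_bytes - len(suffix_bytes)
    if budget <= 0:
        # No room for any content: return as much of the suffix as fits.
        return suffix_bytes[:max_bytes].decode("utf-8", errors="ignore")
    # errors="ignore" drops a trailing multibyte character that the cut split in half.
    return encoded[:budget].decode("utf-8", errors="ignore") + suffix


# Illustrative limit only; check Cloud Trace quota for the real one.
print(truncate_utf8("Olá, mundo! " * 3, 16))
```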

Pricing

Cloud Trace has a free tier. For more information, see Cloud Trace pricing.
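Because each agent query can produce many spans (one per LLM call, tool call, and so on), it can help to estimate monthly usage against the free tier. The sketch below assumes that billing is based on the number of spans ingested beyond a free monthly allotment; confirm that model on the Cloud Trace pricing page. The function name is hypothetical, and every number in the usage lines is a placeholder, not a published price or quota.

```python
def estimate_monthly_trace_cost(queries_per_month: int, spans_per_query: int,
                                free_spans: int, price_per_million_spans: float) -> float:
    """Rough estimate, assuming billing per ingested span beyond a free allotment."""
    total_spans = queries_per_month * spans_per_query
    billable_spans = max(0, total_spans - free_spans)
    return billable_spans / 1_000_000 * price_per_million_spans


# Placeholder inputs for illustration only (not real prices or quotas).
cost = estimate_monthly_trace_cost(
    queries_per_month=200_000,
    spans_per_query=20,
    free_spans=1_000_000,
    price_per_million_spans=0.50,
)
print(f"Estimated monthly cost: {cost:.2f}")  # prints "Estimated monthly cost: 1.50"
```

Counting spans per query from a few sample traces in Trace Explorer is one way to pick a realistic `spans_per_query`.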