In a data mesh, a self-service data platform lets users generate value from data by enabling them to build, share, and use data products independently. To fully realize these benefits, we recommend that your self-service data platform provide the capabilities described in this document.

This document is part of a series that describes how to implement a data mesh on Google Cloud. It assumes that you have read and are familiar with the concepts described in Build a modern, distributed Data Mesh with Google Cloud and Architecture and functions in a data mesh.

The series has the following parts:

- Architecture and functions in a data mesh

- Design a self-service data platform for a data mesh (this document)

- Build data products in a data mesh

- Discover and consume data products in a data mesh

The data platform team typically creates the central self-service data platform, as described in this document. This team builds the solutions and components that domain teams (both data producers and data consumers) can use to create and consume data products. Domain teams represent the functional parts of the data mesh. By building these components, the data platform team enables a smooth development experience and reduces the complexity of building, deploying, and maintaining data products that are secure and interoperable.

Ultimately, the data platform team should enable domain teams to move faster. It helps increase the efficiency of domain teams by providing them with a limited set of tools that address their needs. By providing these tools, the data platform team removes the burden on domain teams of building and sourcing the tools themselves. The tool choices should be customizable to different needs and shouldn't force an inflexible way of working on the data domain teams.

The data platform team shouldn't focus on building custom solutions for data pipeline orchestrators or for continuous integration and continuous deployment (CI/CD) systems. Solutions such as CI/CD systems are readily available as managed cloud services, for example, Cloud Build. Using managed cloud services reduces operational overhead for the data platform team, which lets the team focus on the specific needs of the data domain teams as the users of the platform.
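As a minimal sketch of relying on a managed CI/CD service instead of a custom one, the following Terraform declares a Cloud Build trigger that runs the pipeline definition stored in a domain team's repository. The GitHub owner, repository name, branch pattern, and trigger name are hypothetical placeholders, and the sketch assumes that the repository is already connected to Cloud Build.

```hcl
variable "project_id" {
  type        = string
  description = "Project that hosts the data platform's CI/CD triggers."
}

# Managed CI/CD: Cloud Build runs the domain team's pipeline on every push to main.
resource "google_cloudbuild_trigger" "data_product_ci" {
  project     = var.project_id
  name        = "sales-data-product-ci" # hypothetical trigger name
  description = "Builds and deploys the sales data product."

  # Assumes the GitHub repository is already connected to Cloud Build.
  github {
    owner = "example-org"        # hypothetical GitHub organization
    name  = "sales-data-product" # hypothetical repository
    push {
      branch = "^main$"
    }
  }

  # The pipeline steps live in the repository, not in custom platform tooling.
  filename = "cloudbuild.yaml"
}
```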

Architecture

The following diagram illustrates the architecture components of a self-service data platform. The diagram also shows how these components can support teams as they develop and consume data products across the data mesh.

As shown in the preceding diagram, the self-service data platform provides the following:

Platform solutions: These solutions consist of composable components for provisioning Google Cloud projects and resources, which users select and assemble in different combinations to meet their specific requirements. Instead of interacting with the components directly, platform users can interact with platform solutions that help them achieve a specific goal. The data platform team should design platform solutions to address the common pain points and friction areas that slow down data product development and consumption. For example, data domain teams that are onboarding onto the data mesh can use an infrastructure-as-code (IaC) template. IaC templates let them quickly create a set of Google Cloud projects with standard Identity and Access Management (IAM) permissions, networking, security policies, and the relevant Google Cloud APIs enabled for data product development. We recommend that each solution be accompanied by documentation such as "how to get started" guidance and code samples. Data platform solutions and their components must be secure and compliant by default.
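The onboarding template described above might look like the following minimal Terraform sketch. It assumes that the project already exists (creating projects also requires organization and billing settings, which are omitted here). The variable names, the API list, and the role choice are illustrative assumptions, not a recommended baseline.

```hcl
variable "project_id" {
  type        = string
  description = "Existing project that hosts the data product workspace."
}

variable "domain_group" {
  type        = string
  description = "Google group of the data domain team, for example sales-team@example.com."
}

# APIs commonly needed for data product development (illustrative list).
locals {
  workspace_apis = [
    "bigquery.googleapis.com",
    "storage.googleapis.com",
    "dataplex.googleapis.com",
    "monitoring.googleapis.com",
  ]
}

resource "google_project_service" "apis" {
  for_each = toset(local.workspace_apis)
  project  = var.project_id
  service  = each.value

  # Keep the API enabled if this template is later removed.
  disable_on_destroy = false
}

# Standard IAM binding for the domain team on its own workspace.
resource "google_project_iam_member" "domain_team_bq_user" {
  project = var.project_id
  role    = "roles/bigquery.user"
  member  = "group:${var.domain_group}"
}
```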

Common services: These services provide data product discoverability, management, sharing, and observability. They foster data consumers' trust in data products, and they are an effective way for data producers to alert data consumers to issues with their data products.

Data platform solutions and common services might include the following:

- IaC templates to set up foundational data product development workspace environments, which include:

  - IAM

  - Logging and monitoring

  - Networking

  - Security and compliance guardrails

  - Resource tagging for billing attribution

  - Data product storage, transformation, and publishing

  - Data product registration, cataloging, and metadata tagging

- IaC templates that follow organizational security guardrails and best practices and that can be used to deploy Google Cloud resources into existing data product development workspaces.

- Application and data pipeline templates that can be used to bootstrap new projects or as a reference for existing projects. Examples of such templates include the following:

  - Usage of common libraries and frameworks

  - Integration with platform logging, monitoring, and observability tooling

  - Build and test tooling

  - Configuration management

  - Packaging and CI/CD pipelines for deployment

  - Authentication, deployment, and management of credentials

- Common services to provide data product observability and governance, which can include the following:

  - Uptime checks to show the overall state of data products.

  - Custom metrics to give helpful indicators about data products.

  - Operational support by the central team so that data consumer teams are alerted of changes in the data products they use.

  - Product scorecards to show how data products are performing.

  - A metadata catalog for discovering data products.

  - A centrally defined set of computational policies that can be applied globally across the data mesh.

  - A data marketplace to facilitate data sharing across domain teams.

Create platform components and solutions using IaC templates discusses the advantages of IaC templates for exposing and deploying data products. Provide common services discusses why it's helpful to provide domain teams with common infrastructure components that are built and managed by the data platform team.

Create platform components and solutions using IaC templates

The goal of the data platform team is to set up a self-service data platform that gets more value from data. To build this platform, the team creates vetted, secure, and self-serviceable infrastructure templates and provides them to domain teams. Domain teams use these templates to deploy their data development and data consumption environments. IaC templates help the data platform team achieve that goal and operate at scale. Vetted and trusted IaC templates simplify the resource deployment process for domain teams by letting those teams reuse existing CI/CD pipelines. With this approach, domain teams can get started quickly and become productive within the data mesh.

IaC templates can be created using an IaC tool. Although there are multiple IaC tools, including Cloud Config Connector, Pulumi, Chef, and Ansible, the examples in this document are for Terraform-based IaC tools. Terraform is an open source IaC tool that lets the data platform team efficiently create composable platform components and solutions for Google Cloud resources. With Terraform, the data platform team writes code that specifies the desired end state and lets the tool figure out how to reach that state. This declarative approach lets the data platform team treat infrastructure resources as immutable artifacts for deployment across environments. It also reduces the risk of inconsistencies between the deployed resources and the code declared in source control (known as configuration drift). Configuration drift caused by ad hoc, manual changes to infrastructure hinders the safe and repeatable deployment of IaC components into production environments.
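To make the declarative model concrete, the following minimal sketch declares only the desired end state of a bucket and stores Terraform state in a shared Cloud Storage backend. The state bucket name, state prefix, and bucket naming scheme are hypothetical.

```hcl
terraform {
  required_version = ">= 1.3"

  required_providers {
    google = {
      source  = "hashicorp/google"
      version = ">= 5.0"
    }
  }

  # Shared remote state, so every run compares against the same recorded state.
  backend "gcs" {
    bucket = "example-platform-tf-state" # hypothetical state bucket
    prefix = "data-products/sales"
  }
}

variable "project_id" {
  type = string
}

# Desired end state only: Terraform decides whether to create, update, or leave it.
resource "google_storage_bucket" "landing" {
  project                     = var.project_id
  name                        = "${var.project_id}-sales-landing" # hypothetical naming scheme
  location                    = "EU"
  uniform_bucket_level_access = true
}
```

Running `terraform plan` against this configuration reports any difference between the declared resource and what is actually deployed. A manual change made outside Terraform (configuration drift) therefore shows up as a planned update instead of going unnoticed.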

Common IaC templates for composable platform components include Terraform modules that deploy resources such as a BigQuery dataset, a Cloud Storage bucket, or a Cloud SQL database. Terraform modules can be combined into end-to-end solutions that deploy complete Google Cloud projects, including the relevant resources deployed by the composable modules. You can find example Terraform modules in the Terraform blueprints for Google Cloud.
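The following sketch shows how a hypothetical local module, `./modules/data-product`, could compose the public BigQuery blueprint module with a Cloud Storage bucket so that a domain team provisions both with a single module call. The module path, variable names, and version constraint are assumptions; check the inputs that the module version you pin actually supports.

```hcl
# --- modules/data-product/main.tf (hypothetical composite module) ---
variable "project_id" {
  type = string
}

variable "product_name" {
  type        = string
  description = "Short name of the data product, for example sales_orders."
}

variable "location" {
  type    = string
  default = "EU"
}

# Composable component 1: BigQuery dataset from the public blueprint module.
module "dataset" {
  source  = "terraform-google-modules/bigquery/google"
  version = "~> 7.0" # illustrative; pin the version your platform has vetted

  project_id   = var.project_id
  dataset_id   = var.product_name
  dataset_name = var.product_name
  description  = "Dataset for the ${var.product_name} data product."
  location     = var.location
}

# Composable component 2: bucket for the raw files that feed the product.
resource "google_storage_bucket" "raw" {
  project                     = var.project_id
  name                        = "${var.project_id}-${replace(var.product_name, "_", "-")}-raw"
  location                    = var.location
  uniform_bucket_level_access = true
}

output "dataset_id" {
  value = module.dataset.bigquery_dataset.dataset_id
}

# --- Root configuration of a domain team (usage) ---
# module "sales_orders" {
#   source       = "./modules/data-product"
#   project_id   = "example-sales-project" # hypothetical project
#   product_name = "sales_orders"
# }
```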

Each Terraform module should satisfy, by default, the security guardrails and compliance policies that your organization uses. These guardrails and policies can also be expressed as code and automated with compliance verification tooling such as the Google Cloud policy validation tool.
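The policy validation tool checks a Terraform plan against organization-wide constraints. A complementary, module-level technique (not the policy validation tool itself) is to build guardrails into the module so that it is compliant by default. In the following sketch, the approved-location list is a hypothetical policy, and the bucket hard-codes secure settings instead of exposing them as inputs.

```hcl
variable "project_id" {
  type = string
}

variable "location" {
  type        = string
  description = "Storage location for the data product."
  default     = "EU"

  # Guardrail: reject locations outside the (hypothetical) approved list.
  validation {
    condition     = contains(["EU", "europe-west1", "europe-west4"], var.location)
    error_message = "Data products must use an approved EU location."
  }
}

resource "google_storage_bucket" "data" {
  project  = var.project_id
  name     = "${var.project_id}-curated"
  location = var.location

  # Secure-by-default settings that callers can't switch off.
  uniform_bucket_level_access = true
  public_access_prevention    = "enforced"
}
```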

Your organization should continuously test the platform-provided Terraform modules, using the same automated compliance checks that it uses to promote changes into production.
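One way to run such checks on every module change is Terraform's native test framework (Terraform 1.6 or later), shown here as a swapped-in technique alongside your organization's own compliance tooling. The sketch assumes a hypothetical module under test that declares a `google_storage_bucket.data` resource with `public_access_prevention = "enforced"` and a `location` variable with a validation rule that rejects `us-central1`. Because it runs `plan`, it needs credentials for a test project.

```hcl
# tests/guardrails.tftest.hcl, run with `terraform test` from the module directory.

variables {
  project_id = "example-test-project" # hypothetical test project
}

run "bucket_blocks_public_access" {
  command = plan

  assert {
    condition     = google_storage_bucket.data.public_access_prevention == "enforced"
    error_message = "The module must enforce public access prevention."
  }
}

run "rejects_unapproved_location" {
  command = plan

  variables {
    location = "us-central1"
  }

  # The run passes only if the location validation rule fails.
  expect_failures = [
    var.location,
  ]
}
```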

To make components and solutions discoverable and consumable for domain teams that have minimal Terraform experience, we recommend that you use services such as Service Catalog. Users who have significant customization requirements should be allowed to create their own deployment solutions from the same composable Terraform templates that the existing solutions use.

When using Terraform, we recommend that you follow the Google Cloud best practices outlined in Best practices for using Terraform.

To illustrate how Terraform can be used to create platform components, the following sections discuss examples of how Terraform can be used to expose consumption interfaces and to consume a data product.

Expose a consumption interface

A consumption interface for a data product is a set of guarantees on data quality and operational parameters that the data domain team provides so that other teams can discover and use its data products. Each consumption interface also includes a product support model and product documentation. A data product can have different types of consumption interfaces, such as APIs or streams, as described in Build data products in a data mesh. The most common consumption interface is likely a BigQuery authorized dataset, authorized view, or authorized function. This interface exposes a read-only virtual table, expressed as a query into the data mesh. The interface doesn't grant readers permission to access the underlying data directly.
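The authorized view pattern can be sketched with raw provider resources. The dataset names, the view query, and the `orders` table (assumed to already exist in the private dataset, because BigQuery validates the view query at creation) are hypothetical. Consumers also need permission to run query jobs, for example `roles/bigquery.jobUser`, which is omitted here.

```hcl
variable "project_id" {
  type = string
}

variable "consumer_group" {
  type        = string
  description = "Group of data consumers, for example analysts@example.com."
}

# Private dataset that holds the underlying table; consumers get no access to it.
resource "google_bigquery_dataset" "private" {
  project    = var.project_id
  dataset_id = "sales_private"
  location   = "EU"
}

# Dataset that holds the consumption interface.
resource "google_bigquery_dataset" "shared" {
  project    = var.project_id
  dataset_id = "sales_shared"
  location   = "EU"
}

# Read-only virtual table: a query over the private data.
resource "google_bigquery_table" "orders_view" {
  project             = var.project_id
  dataset_id          = google_bigquery_dataset.shared.dataset_id
  table_id            = "orders_v1"
  deletion_protection = false

  view {
    query          = "SELECT order_id, order_date, amount FROM `${var.project_id}.${google_bigquery_dataset.private.dataset_id}.orders`"
    use_legacy_sql = false
  }
}

# Authorize the view to read the private dataset on behalf of its readers.
resource "google_bigquery_dataset_access" "authorize_view" {
  project    = var.project_id
  dataset_id = google_bigquery_dataset.private.dataset_id

  view {
    project_id = var.project_id
    dataset_id = google_bigquery_dataset.shared.dataset_id
    table_id   = google_bigquery_table.orders_view.table_id
  }
}

# Consumers get read access to the shared dataset only.
resource "google_bigquery_dataset_iam_member" "consumers" {
  project    = var.project_id
  dataset_id = google_bigquery_dataset.shared.dataset_id
  role       = "roles/bigquery.dataViewer"
  member     = "group:${var.consumer_group}"
}
```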

Google provides an example Terraform module for creating authorized views without granting teams permissions to the underlying authorized datasets. The following code from this Terraform module grants IAM roles on the dataset identified by dataset_id and authorizes the listed views and datasets to query it:

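```hcl
# Assumes module.dataset is defined elsewhere in the configuration,
# for example with the terraform-google-modules/bigquery/google module.
module "add_authorization" {
  source  = "terraform-google-modules/bigquery/google//modules/authorization"
  version = "~> 4.1"

  # Dataset that holds the underlying data.
  dataset_id = module.dataset.bigquery_dataset.dataset_id
  project_id = module.dataset.bigquery_dataset.project

  # IAM roles granted on the dataset itself.
  roles = [
    {
      role           = "roles/bigquery.dataEditor"
      group_by_email = "ops@mycompany.com"
    }
  ]

  # Views that are authorized to query the dataset on behalf of their readers.
  authorized_views = [
    {
      project_id = "view_project"
      dataset_id = "view_dataset"
      table_id   = "view_id"
    }
  ]

  # Datasets whose views are all authorized to query the dataset.
  authorized_datasets = [
    {
      project_id = "auth_dataset_project"
      dataset_id = "auth_dataset"
    }
  ]
}
```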

If you need to grant users access to multiple views, authorizing each view individually can be time consuming and harder to maintain. Instead of creating multiple authorized views, you can use an authorized dataset, which automatically authorizes every view created in that dataset.
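With raw provider resources, an authorized dataset is a single access entry on the source dataset. In this minimal sketch, the dataset IDs `sales_private` (underlying tables) and `sales_shared` (views) are hypothetical.

```hcl
variable "project_id" {
  type = string
}

# Every view in sales_shared, including views created later, can read sales_private.
resource "google_bigquery_dataset_access" "authorized_dataset" {
  project    = var.project_id
  dataset_id = "sales_private" # hypothetical dataset with the underlying tables

  dataset {
    dataset {
      project_id = var.project_id
      dataset_id = "sales_shared" # hypothetical dataset that holds the views
    }
    target_types = ["VIEWS"]
  }
}
```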

Consume data products

For most use cases, consumption patterns are determined by the application in which the data is used. The main use of a provisioned consumption environment is data exploration before the data is used in the consuming application. As discussed in Discover and consume data products in a data mesh, SQL is the most common method for querying data products. For this reason, the data platform should provide data consumers with a SQL application for exploring the data.

Depending on the analytics use case, you might be able to use Terraform to deploy the consumption environment for data consumers. For example, data science is a common use case for data consumers. You can use Terraform to deploy Vertex AI user-managed notebooks as a data science development environment. From the data science notebooks, data consumers can use their own credentials to sign in to the data mesh, explore the data that they have access to, and develop ML models based on that data.
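The following sketch provisions a consumer notebook with the provider's `google_workbench_instance` resource (Vertex AI Workbench instances), used here in place of user-managed notebooks; treat that substitution, the zone, the machine type, and the instance name as assumptions. Setting a single instance owner restricts who can open the notebook; it doesn't by itself change which credentials queries run with.

```hcl
variable "project_id" {
  type = string
}

variable "data_scientist" {
  type        = string
  description = "Email of the consumer who owns the notebook, for example ana@example.com."
}

resource "google_project_service" "notebooks" {
  project            = var.project_id
  service            = "notebooks.googleapis.com"
  disable_on_destroy = false
}

# Vertex AI Workbench instance used as a data science environment.
resource "google_workbench_instance" "consumer_notebook" {
  project  = var.project_id
  name     = "sales-exploration-notebook" # hypothetical name
  location = "europe-west4-a"

  gce_setup {
    machine_type = "e2-standard-4"
  }

  # Single-user access: only this consumer can open the notebook.
  instance_owners = [var.data_scientist]

  depends_on = [google_project_service.notebooks]
}
```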

To learn how to use Terraform to deploy and help secure a notebook environment on Google Cloud, see Build and deploy generative AI and machine learning models in an enterprise.

Provide common services

In addition to self-service IaC components and solutions, the data platform team might also take ownership of building and operating shared platform services that multiple data domain teams use. Common examples of shared platform services include self-hosted third-party software such as business intelligence visualization tools or a Kafka cluster. In Google Cloud, the data platform team might choose to manage resources such as Cloud Logging sinks and Dataplex Universal Catalog on behalf of data domain teams. Managing these resources for the data domain teams lets the data platform team facilitate centralized policy management and auditing across the organization.
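As an example of a centrally managed resource, the following sketch shows a Cloud Logging sink that the data platform team owns on behalf of a domain team. It routes the domain project's audit logs to a central BigQuery dataset. The project variables, dataset ID, sink name, and filter are illustrative assumptions.

```hcl
variable "domain_project_id" {
  type = string
}

variable "central_project_id" {
  type = string
}

# Central dataset, owned by the data platform team, that receives audit logs.
resource "google_bigquery_dataset" "audit" {
  project    = var.central_project_id
  dataset_id = "data_mesh_audit_logs"
  location   = "EU"
}

# Sink in the domain project, managed by the platform team.
resource "google_logging_project_sink" "domain_audit" {
  project     = var.domain_project_id
  name        = "sales-domain-audit-to-central" # hypothetical name
  destination = "bigquery.googleapis.com/projects/${var.central_project_id}/datasets/${google_bigquery_dataset.audit.dataset_id}"
  filter      = "logName:\"cloudaudit.googleapis.com\""

  unique_writer_identity = true

  bigquery_options {
    use_partitioned_tables = true
  }
}

# Let the sink's service account write into the central dataset.
resource "google_bigquery_dataset_iam_member" "sink_writer" {
  project    = var.central_project_id
  dataset_id = google_bigquery_dataset.audit.dataset_id
  role       = "roles/bigquery.dataEditor"
  member     = google_logging_project_sink.domain_audit.writer_identity
}
```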

The following sections show how to use Dataplex Universal Catalog for central management and governance in a data mesh on Google Cloud, and how to implement data observability features in a data mesh.

Dataplex Universal Catalog for data governance

Dataplex Universal Catalog provides a data management platform that helps you build independent data domains within a data mesh that spans the organization. Dataplex Universal Catalog lets you maintain central controls for governing and monitoring data across domains.

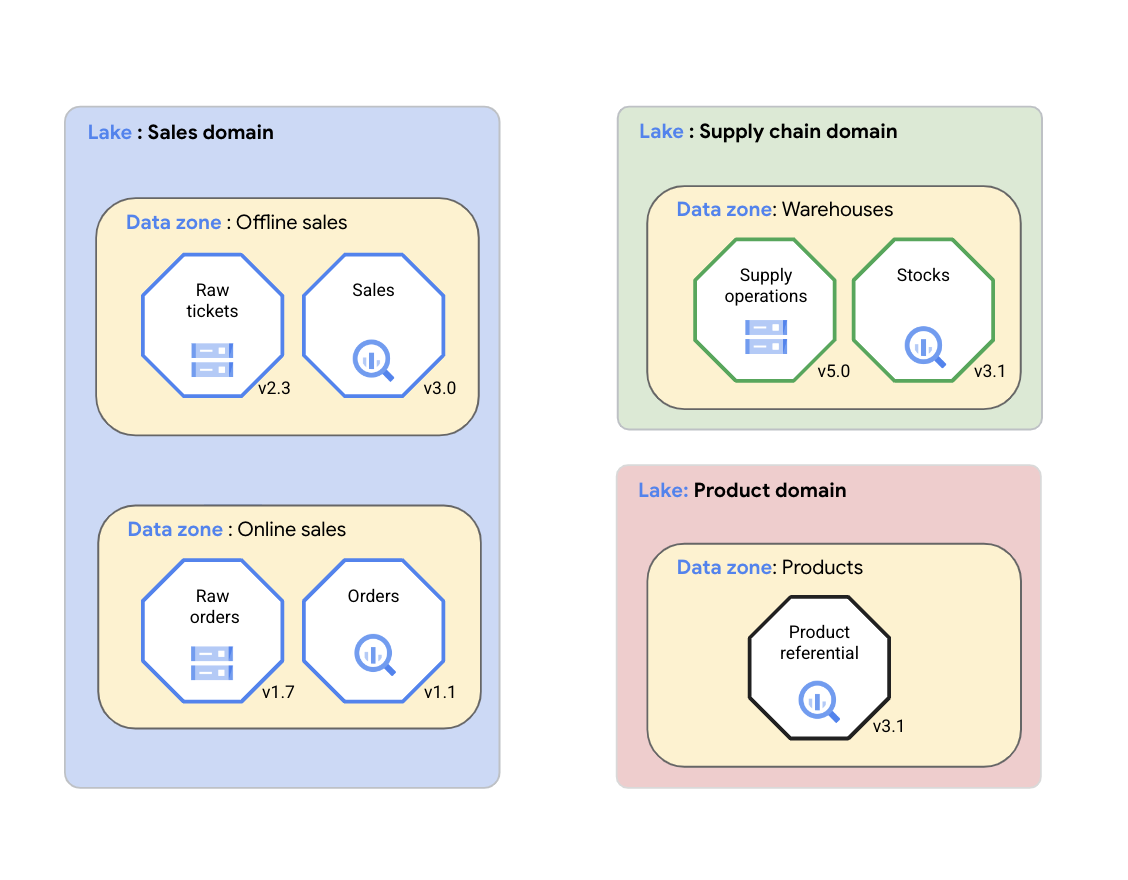

With Dataplex Universal Catalog, organizations can logically organize their data (from supported data sources) and related artifacts such as code, notebooks, and logs into a Dataplex Universal Catalog lake that represents a data domain. In the accompanying diagram, a sales domain uses Dataplex Universal Catalog to organize its assets, including data quality metrics and logs, into Dataplex Universal Catalog zones.

As shown in the preceding diagram, Dataplex Universal Catalog can be used to manage domain data across the following assets:

- Dataplex Universal Catalog lakes let data domain teams manage their data assets consistently in a logical group. Data domain teams can organize their Dataplex Universal Catalog assets within the same Dataplex Universal Catalog lake without physically moving the data or storing it in a single storage system. Dataplex Universal Catalog assets can refer to Cloud Storage buckets and BigQuery datasets stored in Google Cloud projects other than the project that contains the Dataplex Universal Catalog lake. Dataplex Universal Catalog assets can be structured or unstructured, and can be stored in an analytical data lake or a data warehouse. The diagram shows data lakes for the sales domain, the supply chain domain, and the product domain.

- Dataplex Universal Catalog zones let data domain teams further organize data assets into smaller subgroups within the same Dataplex Universal Catalog lake and add structure that captures key aspects of each subgroup. For example, Dataplex Universal Catalog zones can be used to group the related data assets of a data product. Grouping data assets into a single Dataplex Universal Catalog zone lets data domain teams manage access policies and data governance policies consistently across the zone as a single data product. The diagram shows data zones for offline sales, online sales, supply chain warehouses, and products.
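A lake, a zone, and an asset for the sales domain might be declared as in the following sketch. The project variables, region, resource names, and the `online_orders` dataset (assumed to exist in a different project in the same region) are hypothetical. The Dataplex service agent also needs permissions on the project that holds the dataset, which isn't shown.

```hcl
variable "governance_project_id" {
  type        = string
  description = "Project that hosts the domain's Dataplex Universal Catalog lake."
}

variable "storage_project_id" {
  type        = string
  description = "Another project that holds the product's BigQuery dataset."
}

# Lake that represents the sales domain.
resource "google_dataplex_lake" "sales" {
  project      = var.governance_project_id
  location     = "europe-west4"
  name         = "sales"
  display_name = "Sales domain"
}

# Zone that groups the assets of one data product.
resource "google_dataplex_zone" "online_sales" {
  project  = var.governance_project_id
  location = google_dataplex_lake.sales.location
  lake     = google_dataplex_lake.sales.name
  name     = "online-sales"
  type     = "CURATED"

  discovery_spec {
    enabled = true
  }

  resource_spec {
    location_type = "SINGLE_REGION"
  }
}

# Asset that points at a dataset in another project; no data is moved.
resource "google_dataplex_asset" "online_orders" {
  project       = var.governance_project_id
  location      = google_dataplex_lake.sales.location
  lake          = google_dataplex_lake.sales.name
  dataplex_zone = google_dataplex_zone.online_sales.name
  name          = "online-orders"

  discovery_spec {
    enabled = true
  }

  resource_spec {
    name = "projects/${var.storage_project_id}/datasets/online_orders" # hypothetical dataset
    type = "BIGQUERY_DATASET"
  }
}
```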

Dataplex Universal Catalog lakes and zones let an organization unify distributed data and organize it by business context. This arrangement forms the foundation for activities such as managing metadata, setting up governance policies, and monitoring data quality. These activities let the organization manage its distributed data at scale, as in a data mesh.

Data observability

Each data domain should implement its own monitoring and alerting mechanisms, ideally using a standardized approach. Each domain can apply the monitoring practices described in Concepts in service monitoring, making the adjustments that its data requires. Observability is a large topic that is outside the scope of this document. This section covers only patterns that are useful in data mesh implementations.

For products with multiple data consumers, giving each consumer timely information about the status of the product can become an operational burden. Basic solutions, such as manually managed email distribution lists, are typically error prone. They can help notify consumers of planned outages, upcoming product launches, and deprecations, but they don't provide real-time operational awareness.

Central services can play an important role in monitoring the health and quality of the products in the data mesh. Although they aren't a prerequisite for a successful data mesh implementation, observability features can improve the satisfaction of data producers and consumers and reduce overall operational and support costs. The following diagram shows a data mesh observability architecture based on Cloud Monitoring.

The following sections describe the components shown in the diagram:

- Uptime checks to show the overall state of data products.

- Custom metrics to give helpful indicators about data products.

- Operational support by the central data platform team to alert data consumers of changes in the data products that they use.

- Product scorecards and dashboards to show how data products are performing.

Uptime checks

Data products can create simple custom applications that implement uptime checks. These checks can serve as high-level indicators of the overall status of the product. For example, if the data product team discovers a sudden drop in the data quality of its product, the team can mark that product as unhealthy. Near real-time uptime checks are especially important to data consumers whose derived products rely on the constant availability of data in the upstream data product. Data producers should build their uptime checks to include checks of their own upstream dependencies, which gives their data consumers an accurate picture of the health of their product.

Data consumers can include product uptime checks in their processing. For example, a Cloud Composer job that generates a report from the data provided by a data product can validate, as its first step, whether the product is in the "running" state. We recommend that your uptime check application return a structured payload in the message body of its HTTP response. This structured payload should indicate whether there's a problem, the root cause of the problem in human-readable form, and, if possible, the estimated time to restore the service. The payload can also provide more detailed information about the state of the product. For example, it can contain the health of each view in the authorized dataset that is exposed as the product.
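Assuming a status endpoint that returns a hypothetical payload such as `{"status": "RUNNING", "reason": "...", "estimated_recovery": "..."}`, a Cloud Monitoring uptime check can inspect the structured field instead of relying on the HTTP status code alone. The host, path, and display name are placeholders.

```hcl
variable "project_id" {
  type = string
}

# Probes the data product's status endpoint and expects "status": "RUNNING".
resource "google_monitoring_uptime_check_config" "sales_orders" {
  project      = var.project_id
  display_name = "sales-orders-data-product-status"
  timeout      = "10s"
  period       = "300s"

  http_check {
    path         = "/status"
    port         = 443
    use_ssl      = true
    validate_ssl = true
  }

  monitored_resource {
    type = "uptime_url"
    labels = {
      project_id = var.project_id
      host       = "sales-orders.example.com" # hypothetical status endpoint
    }
  }

  # Check the structured payload, not only the HTTP response code.
  content_matchers {
    content = "RUNNING"
    matcher = "MATCHES_JSON_PATH"
    json_path_matcher {
      json_path    = "$.status"
      json_matcher = "EXACT_MATCH"
    }
  }
}
```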

Custom metrics

Data products can have various custom metrics that measure their usefulness. Data producer teams can publish these custom metrics to their designated domain-specific Google Cloud projects. To create a unified monitoring experience across all data products, a central data mesh monitoring project can be given access to those domain-specific projects.
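One way to give the central project that access is to add each domain project to the central project's metrics scope, as in this minimal sketch. The variable names are assumptions.

```hcl
variable "central_monitoring_project_id" {
  type = string
}

variable "domain_project_ids" {
  type        = set(string)
  description = "Domain-specific projects where producers publish custom metrics."
}

# Add each domain project to the metrics scope of the central monitoring project.
resource "google_monitoring_monitored_project" "domains" {
  for_each      = var.domain_project_ids
  metrics_scope = var.central_monitoring_project_id
  name          = each.value
}
```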

Each type of data product consumption interface has different metrics for measuring its usefulness. Metrics can also be specific to the business domain. For example, the metrics for BigQuery tables exposed through views or through the Storage Read API can be the following:

- The number of rows.

- Data freshness (expressed as the number of seconds before the measurement time).

- The data quality score.

- Data availability. This metric can indicate that the data is available for querying. An alternative is to use the uptime checks described earlier in this document.
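The metrics in the preceding list could be registered as custom metric descriptors in a domain project, as in the following sketch. The metric names under `custom.googleapis.com/data_product/` and the `data_product` label are hypothetical; producers would then write time series for them through the Monitoring API or a client library, which isn't shown.

```hcl
variable "domain_project_id" {
  type = string
}

locals {
  # Hypothetical metric names and value types for a BigQuery-based data product.
  data_product_metrics = {
    row_count         = { value_type = "INT64", description = "Number of rows in the data product." }
    freshness_seconds = { value_type = "INT64", description = "Seconds between the latest data and the measurement time." }
    quality_score     = { value_type = "DOUBLE", description = "Data quality score." }
    data_available    = { value_type = "BOOL", description = "Whether the data can be queried." }
  }
}

resource "google_monitoring_metric_descriptor" "data_product" {
  for_each     = local.data_product_metrics
  project      = var.domain_project_id
  type         = "custom.googleapis.com/data_product/${each.key}"
  display_name = each.key
  description  = each.value.description
  metric_kind  = "GAUGE"
  value_type   = each.value.value_type

  # Identifies which data product a time series belongs to.
  labels {
    key         = "data_product"
    value_type  = "STRING"
    description = "Name of the data product."
  }
}
```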

These metrics can be viewed as service level indicators (SLIs) for a particular product.

For data streams (implemented as Pub/Sub topics), this list can consist of the standard Pub/Sub metrics, which are available for topics.

Operational support by the central data platform team

The central data platform team can expose custom dashboards that display different levels of detail to data consumers. A simple status dashboard that lists the products in the data mesh and their uptime status can answer many end-user requests.

The central team can also serve as a notification distribution hub that notifies data consumers about events in the data products they use. Typically, this hub is built by creating alerting policies. Centralizing this function reduces the work that each data producer team must do. Creating these policies doesn't require knowledge of the data domains and helps avoid bottlenecks in data consumption.
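A centrally managed alerting policy for one product might look like the following sketch, which emails a consuming team when data freshness exceeds one hour. It assumes a hypothetical custom metric `custom.googleapis.com/data_product/freshness_seconds` written against the `global` resource type with a `data_product` label, visible to the central project through its metrics scope; the email address and threshold are placeholders.

```hcl
variable "central_monitoring_project_id" {
  type = string
}

variable "consumer_email" {
  type        = string
  description = "Distribution list of a consuming team, for example orders-consumers@example.com."
}

resource "google_monitoring_notification_channel" "consumers" {
  project      = var.central_monitoring_project_id
  display_name = "Sales orders consumers"
  type         = "email"
  labels = {
    email_address = var.consumer_email
  }
}

# Alerts consumers when the product's data is more than one hour old.
resource "google_monitoring_alert_policy" "stale_data" {
  project      = var.central_monitoring_project_id
  display_name = "sales_orders data is stale"
  combiner     = "OR"

  conditions {
    display_name = "freshness_seconds above 3600"
    condition_threshold {
      filter          = "metric.type=\"custom.googleapis.com/data_product/freshness_seconds\" AND resource.type=\"global\" AND metric.label.data_product=\"sales_orders\""
      comparison      = "COMPARISON_GT"
      threshold_value = 3600
      duration        = "300s"
      aggregations {
        alignment_period   = "300s"
        per_series_aligner = "ALIGN_MAX"
      }
    }
  }

  notification_channels = [google_monitoring_notification_channel.consumers.name]
}
```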

An ideal end state for data mesh monitoring is for the data product tag template to expose the SLIs and service level objectives (SLOs) that the product supports as soon as the product becomes available. The central team can then automatically deploy the corresponding alerts by using service monitoring with the Monitoring API.
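Reading SLIs and SLOs from tag templates isn't shown here. Instead, the following sketch hard-codes a hypothetical freshness SLO to show what the central team might deploy with service monitoring: a custom service for the product and a windows-based SLO over the same assumed `freshness_seconds` gauge metric. The service ID, goal, and window values are illustrative.

```hcl
variable "central_monitoring_project_id" {
  type = string
}

resource "google_monitoring_custom_service" "sales_orders" {
  project      = var.central_monitoring_project_id
  service_id   = "sales-orders-data-product"
  display_name = "sales_orders data product"
}

# Good window: mean freshness in a 5-minute window is at most one hour.
# Goal: 99% of windows are good over a rolling 28 days.
resource "google_monitoring_slo" "freshness" {
  project             = var.central_monitoring_project_id
  service             = google_monitoring_custom_service.sales_orders.service_id
  slo_id              = "freshness-under-1h"
  display_name        = "Data is fresher than one hour"
  goal                = 0.99
  rolling_period_days = 28

  windows_based_sli {
    window_period = "300s"
    metric_mean_in_range {
      time_series = "metric.type=\"custom.googleapis.com/data_product/freshness_seconds\" AND resource.type=\"global\" AND metric.label.data_product=\"sales_orders\""
      range {
        max = 3600
      }
    }
  }
}
```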

Product scorecards

As part of the central governance agreement, the four functions in a data mesh can define the criteria for creating scorecards for data products. These scorecards can become an objective measure of data product performance.

Many of the variables used to calculate the scorecards are the percentage of time that data products meet their SLOs. Useful criteria include the percentage of uptime, the average data quality score, and the percentage of products whose data freshness doesn't fall below a threshold. To calculate these metrics automatically by using Monitoring Query Language (MQL), the custom metrics and the uptime check results from the central monitoring project should be sufficient.
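As a filter-based alternative to MQL (a swapped-in technique), the following sketch builds a dashboard with two scorecard widgets: the fraction of passing uptime check probes and the mean data quality score. The uptime check ID, the `quality_score` custom metric, and the `data_product` label are hypothetical, and the sketch assumes that both metrics are visible in the central monitoring project.

```hcl
variable "central_monitoring_project_id" {
  type = string
}

resource "google_monitoring_dashboard" "scorecards" {
  project = var.central_monitoring_project_id
  dashboard_json = jsonencode({
    displayName = "Data product scorecards"
    gridLayout = {
      columns = 2
      widgets = [
        {
          # Fraction of uptime check probes that passed in each hour.
          title = "sales_orders: uptime checks passing (fraction)"
          scorecard = {
            timeSeriesQuery = {
              timeSeriesFilter = {
                filter = "metric.type=\"monitoring.googleapis.com/uptime_check/check_passed\" AND resource.type=\"uptime_url\" AND metric.label.check_id=\"sales-orders-status\""
                aggregation = {
                  alignmentPeriod    = "3600s"
                  perSeriesAligner   = "ALIGN_FRACTION_TRUE"
                  crossSeriesReducer = "REDUCE_MEAN"
                }
              }
            }
          }
        },
        {
          # Mean of the hypothetical custom quality score metric.
          title = "sales_orders: mean data quality score"
          scorecard = {
            timeSeriesQuery = {
              timeSeriesFilter = {
                filter = "metric.type=\"custom.googleapis.com/data_product/quality_score\" AND resource.type=\"global\" AND metric.label.data_product=\"sales_orders\""
                aggregation = {
                  alignmentPeriod  = "3600s"
                  perSeriesAligner = "ALIGN_MEAN"
                }
              }
            }
          }
        }
      ]
    }
  })
}
```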

What's next

- Learn more about BigQuery.

- Read about Dataplex.

- For more reference architectures, diagrams, and best practices, explore the Cloud Architecture Center.