This page describes how to replace the background of an image. Imagen on Vertex AI can automatically segment the foreground object so that it is preserved while the rest of the image is modified. With Imagen 3, you can also provide your own mask area for more control when editing.

View Imagen for Editing and Customization model card

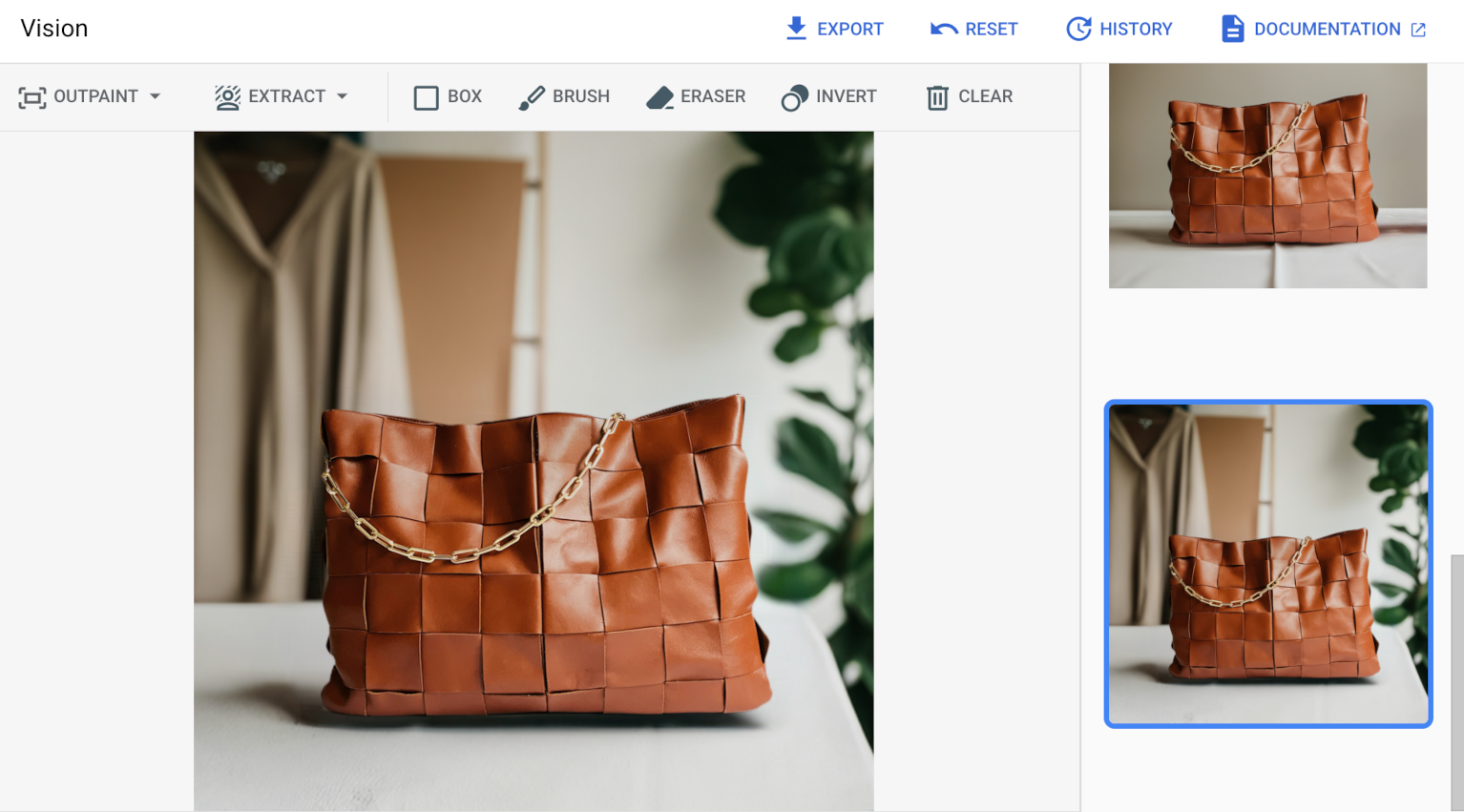

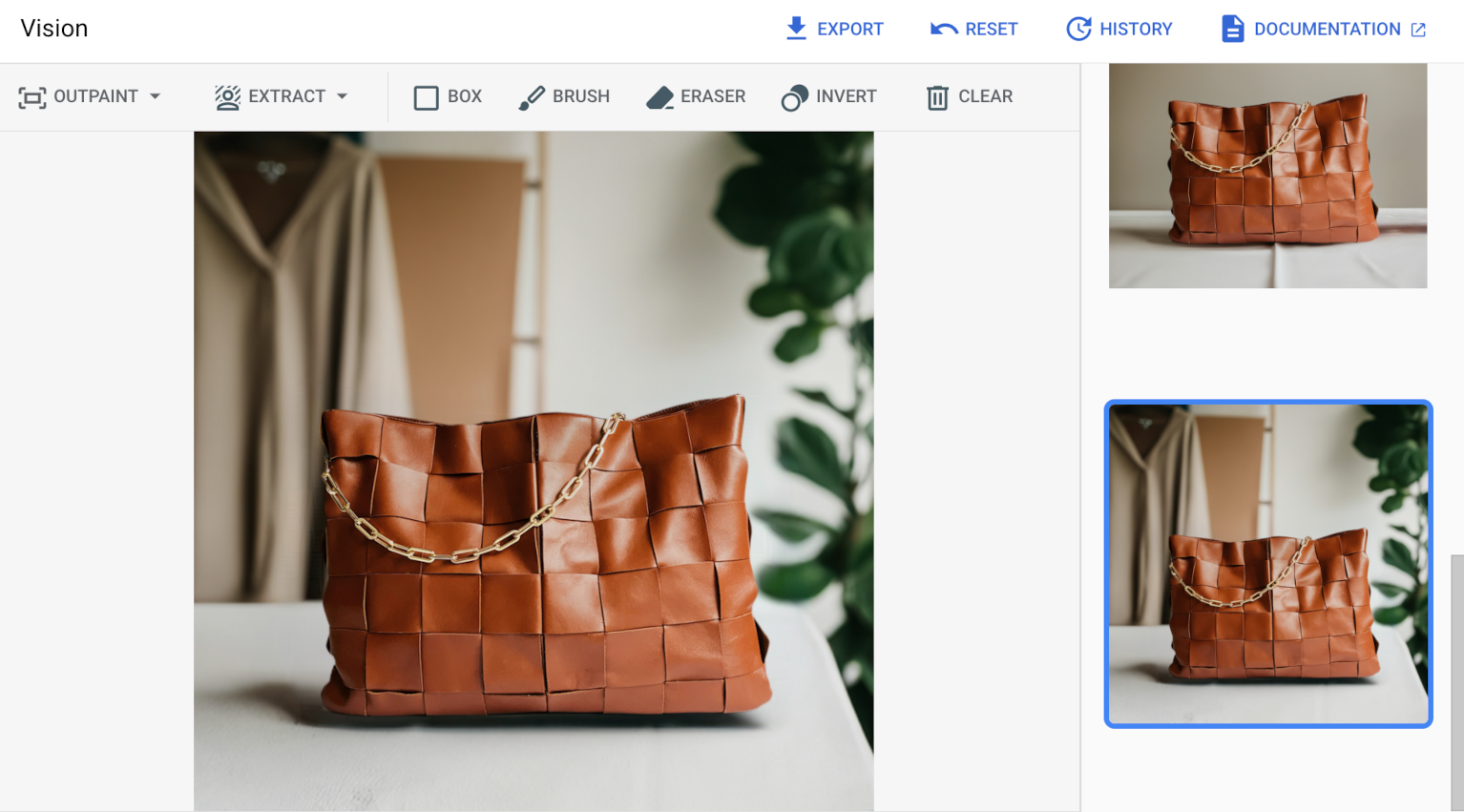

Product image editing example

The following use case shows how to enhance a product image by modifying its background while preserving the product's appearance.

Before you begin

- Sign in to your Google Cloud account. If you're new to Google Cloud, create an account to evaluate how our products perform in real-world scenarios. New customers also get $300 in free credits to run, test, and deploy workloads.

- In the Google Cloud console, on the project selector page, select or create a Google Cloud project.

Roles required to select or create a project

- Select a project: Selecting a project doesn't require a specific IAM role; you can select any project that you've been granted a role on.

- Create a project: To create a project, you need the Project Creator role (roles/resourcemanager.projectCreator), which contains the resourcemanager.projects.create permission. Learn how to grant roles.

- Verify that billing is enabled for your Google Cloud project.

- Enable the Vertex AI API.

Roles required to enable APIs

To enable APIs, you need the Service Usage Admin IAM role (roles/serviceusage.serviceUsageAdmin), which contains the serviceusage.services.enable permission. Learn how to grant roles.

Set up authentication for your environment.

Select the tab for how you plan to use the samples on this page:

Console

When you use the Google Cloud console to access Google Cloud services and APIs, you don't need to set up authentication.

Python

To use the Python samples on this page in a local development environment, install and initialize the gcloud CLI, and then set up Application Default Credentials with your user credentials.

Install the Google Cloud CLI.

If you're using an external identity provider (IdP), you must first sign in to the gcloud CLI with your federated identity.

If you're using a local shell, then create local authentication credentials for your user account:

gcloud auth application-default login

You don't need to do this if you're using Cloud Shell.

If an authentication error is returned, and you are using an external identity provider (IdP), confirm that you have signed in to the gcloud CLI with your federated identity.

For more information, see Set up ADC for a local development environment in the Google Cloud authentication documentation.

REST

To use the REST API samples on this page in a local development environment, you use the credentials you provide to the gcloud CLI.

Install the Google Cloud CLI.

If you're using an external identity provider (IdP), you must first sign in to the gcloud CLI with your federated identity.

For more information, see Authenticate for using REST in the Google Cloud authentication documentation.

Edit with an automatically detected background mask

Imagen lets you edit product images with automatic background detection. This is helpful when you need to modify a product image's background but preserve the product's appearance. Product image editing uses the Google Product Studio (GPS) offering. You can use the GPS feature as part of Imagen through the console or the API.

Use the following instructions to enable and use product image editing with automatic background detection.

Imagen 3

Use the following samples to send a product image editing request using the Imagen 3 model.

Console

- In the Google Cloud console, go to the Vertex AI > Media Studio page.

- Click Upload. In the displayed file dialog, select a file to upload.

- Click Inpaint.

- In the Parameters panel, click Product Background.

- In the editing toolbar, click Extract.

- Select one of the mask extraction options:

  - Background elements: detects the background elements and creates a mask around them.

  - Foreground elements: detects the foreground objects and creates a mask around them.

  - People: detects people and creates a mask around them.

- Optional: In the Parameters side panel, adjust the following options:

  - Model: the Imagen model to use

  - Number of results: the number of results to generate

  - Negative prompt: items to avoid generating

- In the prompt field, enter a prompt to modify the image.

- Click Generate.

Python

Install

pip install --upgrade google-genai

To learn more, see the SDK reference documentation.

Set environment variables to use the Gen AI SDK with Vertex AI:

    # Replace the `GOOGLE_CLOUD_PROJECT` and `GOOGLE_CLOUD_LOCATION` values
    # with appropriate values for your project.
    export GOOGLE_CLOUD_PROJECT=GOOGLE_CLOUD_PROJECT
    export GOOGLE_CLOUD_LOCATION=us-central1
    export GOOGLE_GENAI_USE_VERTEXAI=True
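Before running SDK code, it can help to confirm that the variables exported above are visible to the Python process. The following is a minimal sketch that uses only the standard library; the helper name `missing_vertex_env` is hypothetical and is not part of the Gen AI SDK.

```python
import os

# Variable names taken from the export commands above.
REQUIRED_VARS = (
    "GOOGLE_CLOUD_PROJECT",
    "GOOGLE_CLOUD_LOCATION",
    "GOOGLE_GENAI_USE_VERTEXAI",
)


def missing_vertex_env(env=None):
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_vertex_env()
    if missing:
        print("Missing environment variables:", ", ".join(missing))
    else:
        print("All three environment variables are set.")
```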

REST

For more information, see the Edit images API reference.

Before using any of the request data, make the following replacements:

- PROJECT_ID: Your Google Cloud project ID.

- LOCATION: Your project's region. For example, us-central1, europe-west2, or asia-northeast3. For a list of available regions, see Generative AI on Vertex AI locations.

- TEXT_PROMPT: The text prompt that guides what images the model generates. This field is required for both generation and editing.

- referenceType: A ReferenceImage is an image that provides additional context for image editing. A normal RGB raw reference image (REFERENCE_TYPE_RAW) is required for editing use cases. At most one raw reference image may exist in one request. The output image has the same height and width as the raw reference image. A mask reference image (REFERENCE_TYPE_MASK) is required for masked editing use cases.

- referenceId: The integer ID of the reference image. In this example the two reference image objects are of different types, so they have distinct referenceId values (1 and 2).

- B64_BASE_IMAGE: The base image to edit or upscale. The image must be specified as a base64-encoded byte string. Size limit: 10 MB.

- maskImageConfig.maskMode: The mask mode for mask editing. MASK_MODE_BACKGROUND is used to automatically mask out the background without a user-provided mask.

- MASK_DILATION: Float. The percentage of image width to dilate this mask by. A value of 0.00 is recommended to avoid extending the foreground product. Minimum: 0, maximum: 1. Default: 0.03.

- EDIT_STEPS: Integer. The number of sampling steps for the base model. For product image editing, start at 75 steps.

- EDIT_IMAGE_COUNT: The number of edited images. Accepted integer values: 1-4. Default value: 4.

HTTP method and URL:

POST https://LOCATION-aiplatform.googleapis.com/v1/projects/PROJECT_ID/locations/LOCATION/publishers/google/models/imagen-3.0-capability-001:predict

Request JSON body:

    {
      "instances": [
        {
          "prompt": "TEXT_PROMPT",
          "referenceImages": [
            {
              "referenceType": "REFERENCE_TYPE_RAW",
              "referenceId": 1,
              "referenceImage": {
                "bytesBase64Encoded": "B64_BASE_IMAGE"
              }
            },
            {
              "referenceType": "REFERENCE_TYPE_MASK",
              "referenceId": 2,
              "maskImageConfig": {
                "maskMode": "MASK_MODE_BACKGROUND",
                "dilation": MASK_DILATION
              }
            }
          ]
        }
      ],
      "parameters": {
        "editConfig": {
          "baseSteps": EDIT_STEPS
        },
        "editMode": "EDIT_MODE_BGSWAP",
        "sampleCount": EDIT_IMAGE_COUNT
      }
    }
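The request body above can also be assembled programmatically. The following is a minimal sketch that uses only the Python standard library; the function name `build_bgswap_request` is hypothetical, and the defaults (dilation 0.0, 75 steps, 4 images) are taken from the parameter descriptions above rather than from any SDK.

```python
import base64
import json


def build_bgswap_request(image_bytes, prompt, dilation=0.0, base_steps=75, sample_count=4):
    """Build an Imagen 3 background-swap body that uses an automatic background mask."""
    if not 0.0 <= dilation <= 1.0:
        raise ValueError("dilation must be between 0 and 1")
    if not 1 <= sample_count <= 4:
        raise ValueError("sample_count must be between 1 and 4")
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "instances": [
            {
                "prompt": prompt,
                "referenceImages": [
                    {
                        "referenceType": "REFERENCE_TYPE_RAW",
                        "referenceId": 1,
                        "referenceImage": {"bytesBase64Encoded": encoded},
                    },
                    {
                        # No mask image is supplied; the background is detected automatically.
                        "referenceType": "REFERENCE_TYPE_MASK",
                        "referenceId": 2,
                        "maskImageConfig": {
                            "maskMode": "MASK_MODE_BACKGROUND",
                            "dilation": dilation,
                        },
                    },
                ],
            }
        ],
        "parameters": {
            "editConfig": {"baseSteps": base_steps},
            "editMode": "EDIT_MODE_BGSWAP",
            "sampleCount": sample_count,
        },
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real product image read from disk.
    body = build_bgswap_request(b"placeholder image bytes", "a product on a marble counter")
    print(json.dumps(body, indent=2))
```

Writing the printed JSON to request.json gives a file in the shape the curl and PowerShell commands expect.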

To send your request, choose one of these options:

curl

Save the request body in a file named request.json, and execute the following command:

    curl -X POST \
         -H "Authorization: Bearer $(gcloud auth print-access-token)" \
         -H "Content-Type: application/json; charset=utf-8" \
         -d @request.json \
         "https://LOCATION-aiplatform.googleapis.com/v1/projects/PROJECT_ID/locations/LOCATION/publishers/google/models/imagen-3.0-capability-001:predict"

PowerShell

Save the request body in a file named request.json, and execute the following command:

    $cred = gcloud auth print-access-token
    $headers = @{ "Authorization" = "Bearer $cred" }

    Invoke-WebRequest `
        -Method POST `
        -Headers $headers `
        -ContentType: "application/json; charset=utf-8" `
        -InFile request.json `
        -Uri "https://LOCATION-aiplatform.googleapis.com/v1/projects/PROJECT_ID/locations/LOCATION/publishers/google/models/imagen-3.0-capability-001:predict" | Select-Object -Expand Content
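The same POST request can be prepared from Python with the standard library. This is a sketch under stated assumptions: `build_predict_request` is a hypothetical helper, and the access token is passed in as a string (for example, the output of `gcloud auth print-access-token`) rather than fetched by the code.

```python
import json
import urllib.request


def build_predict_request(project_id, location, access_token, body,
                          model="imagen-3.0-capability-001"):
    """Return an unsent urllib Request for the model's :predict endpoint."""
    url = (
        f"https://{location}-aiplatform.googleapis.com/v1/projects/{project_id}"
        f"/locations/{location}/publishers/google/models/{model}:predict"
    )
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json; charset=utf-8",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values; nothing is sent over the network here.
    req = build_predict_request("my-project", "us-central1", "ACCESS_TOKEN", {"instances": []})
    print(req.get_method(), req.full_url)
```

Passing the returned object to `urllib.request.urlopen` sends the request; the sketch stops short of that so it can be inspected without credentials.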

You should receive a JSON response similar to the following:

    {
      "predictions": [
        {
          "bytesBase64Encoded": "BASE64_IMG_BYTES",
          "mimeType": "image/png"
        },
        {
          "mimeType": "image/png",
          "bytesBase64Encoded": "BASE64_IMG_BYTES"
        },
        {
          "bytesBase64Encoded": "BASE64_IMG_BYTES",
          "mimeType": "image/png"
        },
        {
          "bytesBase64Encoded": "BASE64_IMG_BYTES",
          "mimeType": "image/png"
        }
      ]
    }
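Each prediction carries the edited image as a base64 string, so it has to be decoded before it can be saved or displayed. The following sketch shows one way to do that with the standard library; `decode_predictions` is a hypothetical helper name, and the fallback MIME type is an assumption for entries that omit the field.

```python
import base64


def decode_predictions(response):
    """Return a list of (mime_type, image_bytes) tuples from a predict response."""
    images = []
    for prediction in response.get("predictions", []):
        data = base64.b64decode(prediction["bytesBase64Encoded"])
        # Assumption: treat a missing mimeType as PNG, matching the sample response.
        images.append((prediction.get("mimeType", "image/png"), data))
    return images


if __name__ == "__main__":
    # A tiny stand-in response; real responses contain full image data.
    sample = {"predictions": [{"bytesBase64Encoded": "YWJj", "mimeType": "image/png"}]}
    for index, (mime_type, data) in enumerate(decode_predictions(sample)):
        print(index, mime_type, len(data), "bytes")
```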

Imagen 2

Use the following samples to send a product image editing request using the Imagen 2 or Imagen model.

Console

- In the Google Cloud console, go to the Vertex AI > Media Studio page.

- In the lower task panel, click Edit image.

- Click Upload to select your locally stored product image to edit.

- In the Parameters panel, select Enable product style image editing.

- In the Prompt field (Write your prompt here), enter your prompt.

- Click Generate.

Python

To learn how to install or update the Vertex AI SDK for Python, see Install the Vertex AI SDK for Python. For more information, see the Python API reference documentation.

REST

For more information about imagegeneration model requests, see the imagegeneration model API reference.

To enable product image editing using the Imagen 2 version 006 model (imagegeneration@006), include the following field in the "editConfig": {} object: "editMode": "product-image". This request always returns 4 images.

Before using any of the request data, make the following replacements:

- PROJECT_ID: Your Google Cloud project ID.

- LOCATION: Your project's region. For example, us-central1, europe-west2, or asia-northeast3. For a list of available regions, see Generative AI on Vertex AI locations.

- TEXT_PROMPT: The text prompt that guides what images the model generates. This field is required for both generation and editing.

- B64_BASE_IMAGE: The base image to edit or upscale. The image must be specified as a base64-encoded byte string. Size limit: 10 MB.

- PRODUCT_POSITION: Optional. A setting to maintain the original positioning of the detected product or object, or allow the model to reposition it. Available values: reposition (default value), which allows for repositioning, or fixed, which maintains the product position. For non-square input images, the product position behavior is always "reposition", even if "fixed" is set.

HTTP method and URL:

POST https://LOCATION-aiplatform.googleapis.com/v1/projects/PROJECT_ID/locations/LOCATION/publishers/google/models/imagegeneration@006:predict

Request JSON body:

    {
      "instances": [
        {
          "prompt": "TEXT_PROMPT",
          "image": {
            "bytesBase64Encoded": "B64_BASE_IMAGE"
          }
        }
      ],
      "parameters": {
        "editConfig": {
          "editMode": "product-image",
          "productPosition": "PRODUCT_POSITION"
        }
      }
    }
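As an illustration, the Imagen 2 body above can be generated with a small helper that also rejects unsupported productPosition values. This is a sketch, not an official client: `build_product_image_request` is a hypothetical name, and it does not inspect image dimensions, so the non-square behavior described above still applies on the service side.

```python
import base64
import json

# The two values listed for PRODUCT_POSITION above.
VALID_POSITIONS = ("reposition", "fixed")


def build_product_image_request(image_bytes, prompt, product_position="reposition"):
    """Build the imagegeneration@006 product-image editing request body."""
    if product_position not in VALID_POSITIONS:
        raise ValueError(f"product_position must be one of {VALID_POSITIONS}")
    return {
        "instances": [
            {
                "prompt": prompt,
                "image": {
                    "bytesBase64Encoded": base64.b64encode(image_bytes).decode("ascii")
                },
            }
        ],
        "parameters": {
            "editConfig": {
                "editMode": "product-image",
                "productPosition": product_position,
            }
        },
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real product image.
    body = build_product_image_request(b"placeholder image bytes", "on a beach", "fixed")
    print(json.dumps(body, indent=2))
```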

To send your request, choose one of these options:

curl

Save the request body in a file named request.json, and execute the following command:

    curl -X POST \
         -H "Authorization: Bearer $(gcloud auth print-access-token)" \
         -H "Content-Type: application/json; charset=utf-8" \
         -d @request.json \
         "https://LOCATION-aiplatform.googleapis.com/v1/projects/PROJECT_ID/locations/LOCATION/publishers/google/models/imagegeneration@006:predict"

PowerShell

Save the request body in a file named request.json, and execute the following command:

    $cred = gcloud auth print-access-token
    $headers = @{ "Authorization" = "Bearer $cred" }

    Invoke-WebRequest `
        -Method POST `
        -Headers $headers `
        -ContentType: "application/json; charset=utf-8" `
        -InFile request.json `
        -Uri "https://LOCATION-aiplatform.googleapis.com/v1/projects/PROJECT_ID/locations/LOCATION/publishers/google/models/imagegeneration@006:predict" | Select-Object -Expand Content

You should receive a JSON response similar to the following:

    {
      "predictions": [
        {
          "bytesBase64Encoded": "BASE64_IMG_BYTES",
          "mimeType": "image/png"
        },
        {
          "mimeType": "image/png",
          "bytesBase64Encoded": "BASE64_IMG_BYTES"
        },
        {
          "bytesBase64Encoded": "BASE64_IMG_BYTES",
          "mimeType": "image/png"
        },
        {
          "bytesBase64Encoded": "BASE64_IMG_BYTES",
          "mimeType": "image/png"
        }
      ]
    }

Edit with a defined mask area

You can choose to mask the area that is replaced, rather than letting Imagen detect the mask automatically.

Console

- In the Google Cloud console, go to the Vertex AI > Media Studio page.

- Click Upload. In the displayed file dialog, select a file to upload.

- Click Inpaint.

- In the Parameters panel, click Product Background.

- Do one of the following:

  - Upload your own mask:

    - Create a mask on your computer.

    - Click Upload mask. In the displayed dialog, select a mask to upload.

  - Define your own mask: in the editing toolbar, use the mask tools (box, brush, or invert tool) to specify the area or areas to add content to.

- Optional: In the Parameters panel, adjust the following options:

  - Model: the Imagen model to use

  - Number of results: the number of results to generate

  - Negative prompt: items to avoid generating

- In the prompt field, enter a prompt to modify the image.

- Click Generate.

Python

Install

pip install --upgrade google-genai

To learn more, see the SDK reference documentation.

Set environment variables to use the Gen AI SDK with Vertex AI:

    # Replace the `GOOGLE_CLOUD_PROJECT` and `GOOGLE_CLOUD_LOCATION` values
    # with appropriate values for your project.
    export GOOGLE_CLOUD_PROJECT=GOOGLE_CLOUD_PROJECT
    export GOOGLE_CLOUD_LOCATION=us-central1
    export GOOGLE_GENAI_USE_VERTEXAI=True

REST

For more information, see the Edit images API reference.

Before using any of the request data, make the following replacements:

- PROJECT_ID: Your Google Cloud project ID.

- LOCATION: Your project's region. For example, us-central1, europe-west2, or asia-northeast3. For a list of available regions, see Generative AI on Vertex AI locations.

- TEXT_PROMPT: The text prompt that guides what images the model generates. This field is required for both generation and editing.

- referenceId: The integer ID of the reference image. In this example the two reference image objects are of different types, so they have distinct referenceId values (1 and 2).

- B64_BASE_IMAGE: The base image to edit or upscale. The image must be specified as a base64-encoded byte string. Size limit: 10 MB.

- B64_MASK_IMAGE: The black and white image you want to use as a mask layer to edit the original image. The image must be specified as a base64-encoded byte string. Size limit: 10 MB.

- MASK_DILATION: Float. The percentage of image width to dilate this mask by. A value of 0.00 is recommended to avoid extending the foreground product. Minimum: 0, maximum: 1. Default: 0.03.

- EDIT_STEPS: Integer. The number of sampling steps for the base model. For product image editing, start at 75 steps.

- EDIT_IMAGE_COUNT: The number of edited images. Accepted integer values: 1-4. Default value: 4.

HTTP method and URL:

POST https://LOCATION-aiplatform.googleapis.com/v1/projects/PROJECT_ID/locations/LOCATION/publishers/google/models/imagen-3.0-capability-001:predict

Request JSON body:

    {
      "instances": [
        {
          "prompt": "TEXT_PROMPT",
          "referenceImages": [
            {
              "referenceType": "REFERENCE_TYPE_RAW",
              "referenceId": 1,
              "referenceImage": {
                "bytesBase64Encoded": "B64_BASE_IMAGE"
              }
            },
            {
              "referenceType": "REFERENCE_TYPE_MASK",
              "referenceId": 2,
              "referenceImage": {
                "bytesBase64Encoded": "B64_MASK_IMAGE"
              },
              "maskImageConfig": {
                "maskMode": "MASK_MODE_USER_PROVIDED",
                "dilation": MASK_DILATION
              }
            }
          ]
        }
      ],
      "parameters": {
        "editConfig": {
          "baseSteps": EDIT_STEPS
        },
        "editMode": "EDIT_MODE_BGSWAP",
        "sampleCount": EDIT_IMAGE_COUNT
      }
    }
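The part of this request that differs from the automatic case is the mask reference, which now carries its own image and uses MASK_MODE_USER_PROVIDED. The sketch below builds just the referenceImages list and checks the documented 10 MB limit; `build_reference_images` is a hypothetical helper, and applying the limit to the raw (pre-base64) bytes is an assumption.

```python
import base64

# Assumption: the documented 10 MB limit is checked against the raw file size.
MAX_IMAGE_BYTES = 10 * 1024 * 1024


def _encode(image_bytes, label):
    """Base64-encode image bytes after checking the size limit."""
    if len(image_bytes) > MAX_IMAGE_BYTES:
        raise ValueError(f"{label} exceeds the 10 MB size limit")
    return base64.b64encode(image_bytes).decode("ascii")


def build_reference_images(base_bytes, mask_bytes, dilation=0.0):
    """Build the referenceImages list for a request with a user-provided mask."""
    return [
        {
            "referenceType": "REFERENCE_TYPE_RAW",
            "referenceId": 1,
            "referenceImage": {"bytesBase64Encoded": _encode(base_bytes, "base image")},
        },
        {
            "referenceType": "REFERENCE_TYPE_MASK",
            "referenceId": 2,
            "referenceImage": {"bytesBase64Encoded": _encode(mask_bytes, "mask image")},
            "maskImageConfig": {
                "maskMode": "MASK_MODE_USER_PROVIDED",
                "dilation": dilation,
            },
        },
    ]


if __name__ == "__main__":
    # Placeholder bytes stand in for the product image and its black and white mask.
    refs = build_reference_images(b"base image bytes", b"mask image bytes")
    print([ref["referenceType"] for ref in refs])
```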

To send your request, choose one of these options:

curl

Save the request body in a file named request.json, and execute the following command:

    curl -X POST \
         -H "Authorization: Bearer $(gcloud auth print-access-token)" \
         -H "Content-Type: application/json; charset=utf-8" \
         -d @request.json \
         "https://LOCATION-aiplatform.googleapis.com/v1/projects/PROJECT_ID/locations/LOCATION/publishers/google/models/imagen-3.0-capability-001:predict"

PowerShell

Save the request body in a file named request.json, and execute the following command:

    $cred = gcloud auth print-access-token
    $headers = @{ "Authorization" = "Bearer $cred" }

    Invoke-WebRequest `
        -Method POST `
        -Headers $headers `
        -ContentType: "application/json; charset=utf-8" `
        -InFile request.json `
        -Uri "https://LOCATION-aiplatform.googleapis.com/v1/projects/PROJECT_ID/locations/LOCATION/publishers/google/models/imagen-3.0-capability-001:predict" | Select-Object -Expand Content

You should receive a JSON response similar to the following:

    {
      "predictions": [
        {
          "bytesBase64Encoded": "BASE64_IMG_BYTES",
          "mimeType": "image/png"
        },
        {
          "mimeType": "image/png",
          "bytesBase64Encoded": "BASE64_IMG_BYTES"
        },
        {
          "bytesBase64Encoded": "BASE64_IMG_BYTES",
          "mimeType": "image/png"
        },
        {
          "bytesBase64Encoded": "BASE64_IMG_BYTES",
          "mimeType": "image/png"
        }
      ]
    }

Limitations

Because masks are sometimes incomplete, the model may try to complete the foreground object when extremely small bits are missing at the boundary. As a rare side effect, when the foreground object is already complete, the model may create slight extensions.

A workaround is to segment the model output and then blend. The following example Python snippet demonstrates this workaround:

    from PIL import Image

    blended = Image.composite(out_images[0].resize(image_expanded.size), image_expanded, mask_expanded)
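To make the blend step concrete, the sketch below mimics in plain Python what a mask-based composite does per pixel: where the mask is white (255) the first image is used, where it is black (0) the second image is kept, and intermediate mask values mix the two. In the snippet above, the resized model output is the first image. The function `composite_pixels` is hypothetical, it works on flat lists of grayscale values rather than real images, and its rounding may differ slightly from Pillow's implementation.

```python
def composite_pixels(first, second, mask):
    """Blend two equal-length pixel lists using a 0-255 mask."""
    if not (len(first) == len(second) == len(mask)):
        raise ValueError("all inputs must have the same length")
    return [
        # Weighted average with rounding: mask 255 selects `first`, mask 0 selects `second`.
        (a * m + b * (255 - m) + 127) // 255
        for a, b, m in zip(first, second, mask)
    ]


if __name__ == "__main__":
    model_output = [200, 200, 200]  # stand-in for the edited image
    original = [50, 50, 50]         # stand-in for the original image
    mask = [255, 0, 128]            # white, black, and mid-gray mask pixels
    print(composite_pixels(model_output, original, mask))  # prints [200, 50, 125]
```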

What's next

Read articles about Imagen and other Generative AI on Vertex AI products:

- A developer's guide to getting started with Imagen 3 on Vertex AI

- New generative media models and tools, built with and for creators

- New in Gemini: Custom Gems and improved image generation with Imagen 3

- Google DeepMind: Imagen 3 - Our highest quality text-to-image model