Optical Character Recognition (OCR) is one of the three Vertex AI pre-trained APIs on Google Distributed Cloud (GDC) air-gapped. The OCR service detects text in various file types, such as images, document files, and handwritten text.

OCR offers the following methods available in Distributed Cloud to recognize text:

| Method | Description |

|---|---|

BatchAnnotateImages |

Detect text from a batch of JPEG or PNG images provided in an inline request. |

BatchAnnotateFiles |

Detect text from a batch of PDF or TIFF files provided in an inline request. |

AsyncBatchAnnotateFiles |

Detect text from a batch of PDF or TIFF files in a storage bucket for offline requests. |

Learn more about the supported languages detected by the text recognition feature.

Optical character recognition features

The OCR API can detect and extract text from images. The following two annotation features support optical character recognition:

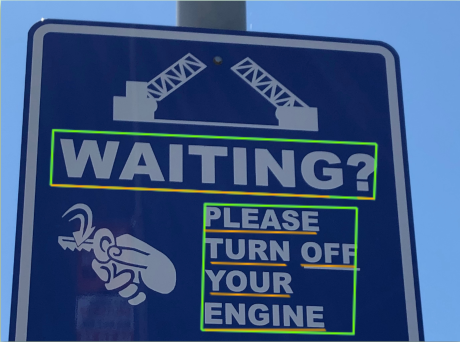

TEXT_DETECTIONdetects and extracts text from any image. For example, a photograph might contain a street or traffic sign. The OCR service returns a JSON file with the extracted string, individual words, and their bounding boxes.

Figure 1. Road sign photograph where the OCR API detects words and their bounding boxes.

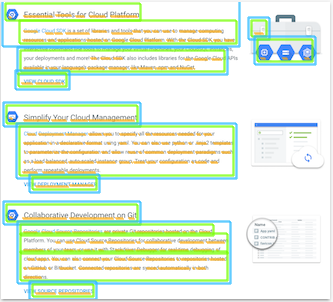

DOCUMENT_TEXT_DETECTIONalso extracts text from an image, but the service optimizes the response for dense text and documents. For example, a scanned image of typed text might contain several paragraphs and headings. The OCR service returns a JSON file with page, block, paragraph, word, and break information.

Figure 2. Scanned image of typed text where the OCR API detects information such as words, pages, and paragraphs.

Handwritten text

Figure 3 is an image of handwritten text. The OCR API detects and extracts text from these images. For a list of handwriting scripts that support handwriting recognition, see Handwriting scripts.

Figure 3. Handwriting image where the OCR API detects text.

Optical character recognition limits

The BatchAnnotateImages and BatchAnnotateFiles API methods only support a

single request per batch call.

The following table lists the current limits of the OCR service in Distributed Cloud.

| File limit for OCR | Value |

|---|---|

| Maximum number of pages | Five |

| Maximum file size | 20 MB |

| Maximum image size | 20 million pixels (length x width) |

Submitted files for the OCR API that exceed the maximum number of pages or the maximum file size return an error. Submitted files that exceed the maximum image size are downsized to 20 million pixels.

Supported file types for OCR

The OCR pre-trained API detects and transcribes text from the following file types:

- TIFF

- JPG

- PNG

You must store the files locally in your Distributed Cloud environment. You can't access files hosted in Cloud Storage or publicly available files for text detection.