This page provides considerations and recommendations that will help you to migrate workloads from Standard Google Kubernetes Engine (GKE) clusters to Autopilot clusters with minimal disruption to your services. This page is for cluster administrators who have already decided to migrate to Autopilot. If you need more information before you decide to migrate, see Choose a GKE mode of operation and Compare GKE Autopilot and Standard.

How migration works

Autopilot clusters automate many of the optional features and functionality that require manual configuration in Standard clusters. Additionally, Autopilot clusters enforce more secure default configurations for applications to provide a more production-ready environment, and reduce your required management overhead compared to Standard mode. Autopilot clusters apply many GKE best practices and recommendations by default. Autopilot uses a workload-centric configuration model, where you request what you need in your Kubernetes manifests and GKE provisions the corresponding infrastructure.

When you migrate your Standard workloads to Autopilot, you should prepare your workload manifests to ensure that they're compatible with Autopilot clusters, for example by ensuring that your manifests request infrastructure that you would normally have to provision yourself.

To prepare and execute a successful migration, you'll do the following high-level tasks:

- Run a pre-flight check on your existing Standard cluster to confirm compatibility with Autopilot.

- If applicable, modify your workload manifests to become Autopilot compatible.

- Do a dry-run where you check that your workloads function correctly on Autopilot.

- Plan and create the Autopilot cluster.

- If applicable, update your infrastructure-as-code tooling.

- Perform the migration.

Before you begin

Before you start, make sure that you have performed the following tasks:

- Enable the Google Kubernetes Engine API.

- If you want to use the Google Cloud CLI for this task, install and then initialize the gcloud CLI. If you previously installed the gcloud CLI, get the latest version by running the gcloud components update command. Earlier gcloud CLI versions might not support running the commands in this document.

- Ensure that you have an existing Standard cluster with running workloads.

- Ensure that you have an Autopilot cluster with no workloads to perform dry-runs. To create a new Autopilot cluster, see Create an Autopilot cluster.

Enable the pre-flight check component in your cluster

In GKE version 1.31.6-gke.1027000 and later, the Autopilot pre-flight check component is disabled by default. You must enable the pre-flight check component before you can run the check in a cluster. If your cluster runs a GKE version earlier than 1.31.6-gke.1027000, skip to the next section.

Enable the pre-flight check component in your cluster:

gcloud container clusters update CLUSTER_NAME \
    --location=LOCATION \
    --enable-autopilot-compatibility-auditing

Replace the following:

- CLUSTER_NAME: the name of your Standard cluster.

- LOCATION: the location of your Standard cluster, such as us-central1.

The update operation takes up to 30 minutes to complete.

Run a pre-flight check on your Standard cluster

The Google Cloud CLI and the Google Kubernetes Engine API provide a pre-flight check tool that validates the specifications of your running Standard workloads to identify incompatibilities with Autopilot clusters. This tool is available in GKE version 1.26 and later.

- To use this tool on the command line, run the following command:

gcloud container clusters check-autopilot-compatibility CLUSTER_NAME

Replace CLUSTER_NAME with the name of your Standard cluster. Optionally, add --format=json to this command to get the output in JSON format.
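The command above prints its findings to the terminal. To keep a copy that you can step through later, you could wrap it as in the following minimal sketch. The function name `save_preflight_report` and the file naming scheme are hypothetical, not part of gcloud. Any extra arguments are passed through to gcloud unchanged.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of gcloud): run the Autopilot pre-flight
# check against a Standard cluster and save the JSON findings to a
# timestamped file in the current directory.
# Usage: save_preflight_report CLUSTER_NAME [EXTRA_GCLOUD_FLAGS...]
save_preflight_report() {
  local cluster="${1:?usage: save_preflight_report CLUSTER_NAME [FLAGS...]}"
  shift
  local report
  report="preflight-${cluster}-$(date +%Y%m%d-%H%M%S).json"

  # --format=json prints the findings as JSON on stdout; any remaining
  # arguments are forwarded to gcloud as-is.
  if ! gcloud container clusters check-autopilot-compatibility "$cluster" \
      --format=json "$@" > "$report"; then
    echo "pre-flight check failed for ${cluster}" >&2
    rm -f "$report"
    return 1
  fi
  # Print the file name so callers can capture it.
  echo "$report"
}
```

For example, `save_preflight_report my-standard-cluster` prints the name of the saved JSON file. If the check fails, the helper deletes the partial file and returns a non-zero status.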

The output lists findings for all your running Standard workloads, grouped into categories, with actionable recommendations to ensure compatibility with Autopilot where applicable. The following table describes the categories:

| Pre-flight tool result | Description |
|---|---|
| Passed | The workload will run as expected with no configuration needed for Autopilot. |
| Passed with optional configuration | The workload will run on Autopilot, but you can make optional configuration changes to optimize the experience. If you don't make configuration changes, Autopilot applies a default configuration for you. For example, if your workload was running on N2 machines in Standard mode, GKE applies the general-purpose compute class for Autopilot. You can optionally modify the workload to request the Balanced compute class, which is backed by N2 machines. |
| Additional configuration required | The workload won't run on Autopilot unless you make a configuration change. For example, consider a container that uses the NET_ADMIN capability in Standard. Autopilot drops this capability by default for improved security, so you'll need to enable NET_ADMIN on the cluster before you deploy the workload. |
| Incompatibility | The workload won't run on Autopilot because it uses functionality that Autopilot doesn't support. For example, Autopilot clusters reject privileged Pods (…). |

Modify your workload specifications based on the pre-flight results

After you run the pre-flight check, step through the JSON output and identify workloads that need to change. We recommend implementing even the optional configuration recommendations. Each finding also provides a link to documentation that shows you what the workload specification should look like.

The most important difference between Autopilot and Standard is that infrastructure configuration in Autopilot is automated based on the workload specification. Kubernetes scheduling controls, such as node taints and tolerations, are automatically added to your running workloads. If necessary, you should also modify your infrastructure-as-code configurations, such as Helm charts or Kustomize overlays, to match.

Some common configuration changes you'll need to make include the following:

| Common configuration changes for Autopilot | Details |
|---|---|
| Compute and architecture configuration | Autopilot clusters use the E-series machine type by default. If you need other machine types, your workload specification must request a compute class, which tells Autopilot to place those Pods on nodes that use specific machine types or architectures. For details, see Compute classes in Autopilot. |
| Accelerators | GPU-based workloads must request GPUs in the workload specification. Autopilot automatically provisions nodes with the required machine type and accelerators. For details, see Deploy GPU workloads in Autopilot. |
| Resource requests | All Autopilot workloads need to specify values for … For details, see Resource requests in Autopilot. |
| Fault-tolerant workloads on Spot VMs | If your workloads run on Spot VMs in Standard, request Spot Pods in the workload configuration by setting a node selector for … For details, see Spot Pods. |
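To make the changes in the preceding table concrete, the following minimal sketch writes three example manifests. The first requests the Balanced compute class with explicit resource requests. The second requests Spot Pods through the `cloud.google.com/gke-spot` node selector. The third requests one GPU. The workload names, container images (`PROJECT_ID/REPO` paths), GPU type (`nvidia-l4`), and request sizes are hypothetical placeholders, not values taken from the pre-flight output.

```shell
#!/usr/bin/env bash
# Write example Autopilot-ready manifests into OUT_DIR (default:
# ./autopilot-manifests). Names, images, GPU type, and request sizes
# are hypothetical placeholders; adjust them to match your workloads.
OUT_DIR="${OUT_DIR:-autopilot-manifests}"
mkdir -p "$OUT_DIR"

# Workload that ran on N2 nodes in Standard: request the Balanced
# compute class and set explicit resource requests.
cat > "$OUT_DIR/web-balanced.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      nodeSelector:
        cloud.google.com/compute-class: Balanced
      containers:
      - name: web
        image: us-docker.pkg.dev/PROJECT_ID/REPO/web:1.0
        resources:
          requests:
            cpu: 500m
            memory: 1Gi
EOF

# Fault-tolerant workload that ran on Spot VMs in Standard: request
# Spot Pods with the GKE Spot node selector.
cat > "$OUT_DIR/batch-spot.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: batch-worker
spec:
  replicas: 3
  selector:
    matchLabels:
      app: batch-worker
  template:
    metadata:
      labels:
        app: batch-worker
    spec:
      nodeSelector:
        cloud.google.com/gke-spot: "true"
      containers:
      - name: worker
        image: us-docker.pkg.dev/PROJECT_ID/REPO/worker:1.0
        resources:
          requests:
            cpu: 250m
            memory: 512Mi
EOF

# GPU workload: select an accelerator type and request GPUs in limits.
cat > "$OUT_DIR/gpu-job.yaml" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: gpu-job
spec:
  nodeSelector:
    cloud.google.com/gke-accelerator: nvidia-l4
  containers:
  - name: trainer
    image: us-docker.pkg.dev/PROJECT_ID/REPO/trainer:1.0
    resources:
      limits:
        nvidia.com/gpu: 1
EOF
```

The resulting files are ordinary manifests. You can dry-run each one against your staging Autopilot cluster as described in the next section.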

Perform a dry-run on a staging Autopilot cluster

After you modify each workload manifest, do a dry-run deployment on a new staging Autopilot cluster to ensure that the workload runs as expected.

Command line

Run the following command:

kubectl create --dry-run=server -f=PATH_TO_MANIFEST

Replace PATH_TO_MANIFEST with the path to the modified workload manifest.
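If you modified many manifests, a small loop can dry-run each one in turn. The following is a hypothetical helper (`dry_run_manifests` isn't part of kubectl). It assumes that your current kubectl context points at the staging Autopilot cluster and that the manifests are `.yaml` files in a single directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: server-side dry-run every *.yaml manifest in a
# directory against the cluster in the current kubectl context.
# Prints PASS/FAIL per file and returns non-zero if any file is rejected.
dry_run_manifests() {
  local dir="${1:?usage: dry_run_manifests DIRECTORY}"
  local failed=0 manifest
  for manifest in "$dir"/*.yaml; do
    [ -e "$manifest" ] || continue   # the glob matched no files
    # Discard the success message; rejection details still go to stderr.
    if kubectl create --dry-run=server -f "$manifest" > /dev/null; then
      echo "PASS ${manifest}"
    else
      echo "FAIL ${manifest}"
      failed=1
    fi
  done
  return "$failed"
}
```

For example, run `kubectl config current-context` to confirm that you're targeting the staging cluster, then run `dry_run_manifests ./autopilot-manifests`.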

IDE

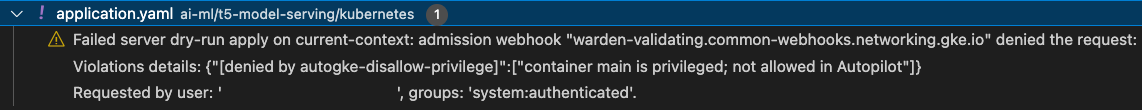

If you use the Cloud Shell Editor, the dry-run command is built-in and runs on any open manifests. If you use Visual Studio Code or Intellij IDEs, install the Cloud Code extension to automatically run the dry-run on any open manifests.

The Problems pane in the IDE shows any dry-run issues, such as in the

following image which shows a failed dry-run for a manifest that specified

privileged: true.

Plan the destination Autopilot cluster

When your dry-run no longer displays issues, plan and create the new Autopilot cluster for your workloads. This cluster is different from the Autopilot cluster that you used to test your manifest modifications in the preceding section.

Use Best practices for onboarding to GKE for basic configuration requirements. Then, read the Autopilot overview, which provides information specific to your use case at different layers.

Additionally, consider the following:

- Autopilot clusters are VPC-native, so we don't recommend migrating to Autopilot from routes-based Standard clusters.

- Use the same or a similar VPC for the Autopilot cluster and the Standard cluster, including any custom firewall rules and VPC settings.

- Autopilot clusters use GKE Dataplane V2 and only support Cilium NetworkPolicies. Calico NetworkPolicies are not supported.

- If you want to use IP masquerading in Autopilot, use an Egress NAT policy.

- Specify the primary IPv4 range for the cluster during cluster creation, with the same range size as the Standard cluster.

- Learn about the quota differences between modes, especially if you have large clusters.

- Learn about the Pods-per-node maximums for Autopilot, which are different from Standard. This matters more if you use node or Pod affinity often.

- All Autopilot clusters use Cloud DNS.

Create the Autopilot cluster

When you're ready to create the cluster, use Create an Autopilot cluster. All Autopilot clusters are regional and are automatically enrolled in a release channel, although you can specify the channel and cluster version. We recommend deploying a small sample workload to the cluster to trigger node auto-provisioning so that your production workloads can schedule immediately.
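The following minimal sketch shows the creation step together with the warm-up workload recommendation. The helper name, the `warmup` Deployment, and the `registry.k8s.io/pause:3.9` image are illustrative choices, not requirements. Arguments after the third are passed to `create-auto` unchanged, so that you can add network flags that match your Standard cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the destination Autopilot cluster, point
# kubectl at it, and deploy a tiny warm-up workload.
# Usage: create_autopilot_cluster NAME REGION [CHANNEL] [EXTRA_FLAGS...]
create_autopilot_cluster() {
  local name="${1:?cluster name required}"
  local region="${2:?region required}"
  local channel="${3:-regular}"

  # Autopilot clusters are regional, so pass a region such as us-central1.
  # Arguments after the third (for example, network flags) go to create-auto.
  gcloud container clusters create-auto "$name" \
    --location="$region" \
    --release-channel="$channel" \
    "${@:4}" || return 1

  # Fetch credentials so that later kubectl commands target this cluster.
  gcloud container clusters get-credentials "$name" \
    --location="$region" || return 1

  # A placeholder Deployment prompts Autopilot to provision nodes before
  # production workloads arrive. Delete it after the migration.
  kubectl create deployment warmup --image=registry.k8s.io/pause:3.9
}
```

For example, `create_autopilot_cluster autopilot-prod us-central1 regular --network=my-vpc --subnetwork=my-subnet` places the cluster in the same VPC as a hypothetical Standard cluster on `my-vpc`. Check `gcloud container clusters create-auto --help` for the network and IP range flags that your gcloud version supports.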

Update your infrastructure-as-code tooling

The following infrastructure-as-code providers support Autopilot:

Read your preferred provider's documentation and modify your configurations.

Choose a migration approach

The migration method that you use depends on your individual workload and how comfortable you are with networking concepts such as multi-cluster Services and multi-cluster Ingress, as well as how you manage the state of the Kubernetes objects in your cluster.

| Workload type | Pre-flight tool results | Migration approach |
|---|---|---|
| Stateless | … | For … |
| Stateful | … | Use one of the following methods: … |
| Stateful | Additional configuration required | Update your Kubernetes manifests and redeploy on Autopilot during scheduled downtime. For high-level steps, see Manually migrate stateful workloads. |

High-level migration steps

Before you begin a migration, ensure that you have resolved any Incompatibility or Additional configuration required results from the pre-flight check. If you deploy workloads with those results on Autopilot without modifications, the workloads will fail.

The following sections are a high-level overview of a hypothetical migration. Actual steps will vary depending on your environment and each of your workloads. Plan, test, and re-test workloads for issues before migrating a production environment. Considerations include the following:

- The duration of the migration process depends on how many workloads you're migrating.

- Downtime is required while you migrate stateful workloads.

- Manual migration lets you focus on individual workloads during the migration so that you can resolve issues in real time on a case-by-case basis.

- In all cases, ensure that you migrate Services, Ingresses, and other Kubernetes objects that facilitate the functionality of your stateless and stateful workloads.

Migrate all workloads using Backup for GKE

If all the workloads (stateful and stateless) running in your Standard cluster are compatible with Autopilot, and the pre-flight tool returns either Passed or Passed with optional configuration for every workload, you can use Backup for GKE to back up the entire state of your Standard cluster and workloads and restore the backup onto the Autopilot cluster.

This approach has the following benefits:

- You can move all workloads from Standard to Autopilot operation with minimal configuration needed.

- You can move stateless and stateful workloads and retain the relationships between workloads, as well as associated PersistentVolumes.

- Rollbacks are intuitive and managed by Google. You can roll the entire migration back or selectively roll back specific workloads.

- You can migrate stateful workloads across Google Cloud regions. Manual migration of stateful workloads can only happen in the same region.

When you use this method, GKE applies Autopilot default configurations to workloads that received a Passed with optional configuration result from the pre-flight tool. Before you migrate these workloads, ensure that you're comfortable with those defaults.
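The backup side of this approach might look like the following minimal sketch. It assumes that the Backup for GKE API is enabled in your project and that the Backup for GKE agent is enabled on the Standard cluster. It uses the `gcloud beta container backup-restore` command group, so verify the flag names against your installed gcloud version before you rely on them. The helper name and the plan and backup naming scheme are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a Backup for GKE plan that covers every
# namespace of the Standard cluster (including Secrets and volume data),
# then take one backup and wait for it to finish.
# Usage: backup_standard_cluster PROJECT_ID CLUSTER_LOCATION CLUSTER_NAME BACKUP_REGION
backup_standard_cluster() {
  local project="${1:?project ID required}"
  local cluster_location="${2:?cluster location required}"
  local cluster="${3:?cluster name required}"
  local region="${4:?backup plan region required}"
  local plan="${cluster}-migration-plan"
  local backup
  backup="${cluster}-migration-$(date +%Y%m%d-%H%M%S)"

  # The backup plan lives in a region; the cluster path may use a zone.
  gcloud beta container backup-restore backup-plans create "$plan" \
    --project="$project" \
    --location="$region" \
    --cluster="projects/${project}/locations/${cluster_location}/clusters/${cluster}" \
    --all-namespaces \
    --include-secrets \
    --include-volume-data || return 1

  # Take a single backup and block until it completes.
  gcloud beta container backup-restore backups create "$backup" \
    --project="$project" \
    --location="$region" \
    --backup-plan="$plan" \
    --wait-for-completion || return 1

  echo "$backup"
}
```

Restoring onto the Autopilot cluster then requires a restore plan that targets that cluster. For details, see Restore a backup.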

Manually migrate stateless workloads with no downtime

To migrate stateless workloads with no downtime for your services, you register the source and destination clusters to a GKE Fleet and use multi-cluster Services and multi-cluster Ingress to ensure that your workloads remain available during the migration.

- Enable multi-cluster Services and multi-cluster Ingress for your source cluster and your destination cluster. For instructions, see Configuring multi-cluster Services and Setting up Multi Cluster Ingress.

- If you have backend dependencies such as a database workload, export those Services from your Standard cluster using multi-cluster Services. This lets workloads in your Autopilot cluster access the dependencies in the Standard cluster. For instructions, see Registering a Service for export.

- Deploy a multi-cluster Ingress and a multi-cluster Service to control inbound traffic between clusters. Configure the multi-cluster Service to only send traffic to the Standard cluster. For instructions, see Deploying Ingress across clusters.

- Deploy your stateless workloads with updated manifests to the Autopilot cluster. Your exported multi-cluster Services automatically route traffic from these workloads to the corresponding stateful workloads that still run in the Standard cluster.

- Update your multi-cluster Service to direct inbound traffic to the Autopilot cluster. For instructions, see Cluster selection.
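The following sketch writes the objects from the preceding steps for a hypothetical `web` frontend and `db` backend in the `shop` namespace. It assumes that both clusters are registered to the same fleet, that the Standard cluster is `us-central1/standard-cluster`, and that the Autopilot cluster is `us-central1/autopilot-cluster`. Apply the `ServiceExport` in the Standard cluster. Apply the MultiClusterService and MultiClusterIngress in the fleet's config cluster.

```shell
#!/usr/bin/env bash
# Write the multi-cluster objects used during a stateless migration.
# Namespace, names, ports, and cluster links are hypothetical.
OUT_DIR="${OUT_DIR:-mc-migration}"
mkdir -p "$OUT_DIR"

# Standard cluster: export the backend Service so that workloads in the
# Autopilot cluster can reach it.
cat > "$OUT_DIR/db-serviceexport.yaml" <<'EOF'
apiVersion: net.gke.io/v1
kind: ServiceExport
metadata:
  name: db          # must match the existing Service name
  namespace: shop
EOF

# Config cluster: send inbound traffic to the Standard cluster only.
cat > "$OUT_DIR/web-mcs.yaml" <<'EOF'
apiVersion: networking.gke.io/v1
kind: MultiClusterService
metadata:
  name: web-mcs
  namespace: shop
spec:
  template:
    spec:
      selector:
        app: web
      ports:
      - name: http
        protocol: TCP
        port: 8080
        targetPort: 8080
  clusters:
  - link: "us-central1/standard-cluster"
EOF

# Config cluster: the multi-cluster Ingress in front of web-mcs.
cat > "$OUT_DIR/web-mci.yaml" <<'EOF'
apiVersion: networking.gke.io/v1
kind: MultiClusterIngress
metadata:
  name: web-ingress
  namespace: shop
spec:
  template:
    spec:
      backend:
        serviceName: web-mcs
        servicePort: 8080
EOF

# Cutover: a copy of the MultiClusterService that targets the
# Autopilot cluster instead of the Standard cluster.
sed 's#us-central1/standard-cluster#us-central1/autopilot-cluster#' \
  "$OUT_DIR/web-mcs.yaml" > "$OUT_DIR/web-mcs-cutover.yaml"
```

While both clusters are running, workloads in the Autopilot cluster reach the exported backend at `db.shop.svc.clusterset.local`, provided that the `shop` namespace also exists in the Autopilot cluster. Applying `web-mcs-cutover.yaml` in the config cluster performs the final traffic switch.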

You're now serving your stateless workloads from the Autopilot cluster. If you only had stateless workloads in the source cluster, and no dependencies remain, proceed to Complete the migration. If you have stateful workloads, proceed to Manually migrate stateful workloads.

Manually migrate stateful workloads

After migrating your stateless workloads, you must quiesce and migrate your stateful workloads from the Standard cluster. This step requires downtime for your cluster.

- Start your environment downtime.

- Quiesce your stateful workloads.

- Ensure that you modified your workload manifests for Autopilot compatibility. For details, see Modify your workload specifications based on the pre-flight results.

- Deploy the workloads on your Autopilot cluster.

- Deploy the Services for your stateful workloads on the Autopilot cluster.

- Update your in-cluster networking to let your stateless workloads continue to communicate with their backend workloads:

  - If you used a static IP address in your Standard cluster backend Services, reuse that IP address in Autopilot.

  - If you let Kubernetes assign an IP address, deploy your backend Services, get the new IP address, and update your DNS to use the new IP address.
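For the quiesce step in the preceding list, one option is to record each StatefulSet's replica count and then scale it to zero. This assumes that your stateful workloads run as StatefulSets and that stopping every replica is acceptable during your downtime window. The helper name and the `replicas-NAMESPACE.tsv` record file are hypothetical. Keep the file so that you can restore the original counts if you roll back.

```shell
#!/usr/bin/env bash
# Hypothetical helper: record replica counts for every StatefulSet in a
# namespace, then scale each one to zero to stop writes.
# Usage: quiesce_statefulsets NAMESPACE
quiesce_statefulsets() {
  local ns="${1:?namespace required}"
  local record="replicas-${ns}.tsv"

  # One line per StatefulSet: NAME<TAB>REPLICAS
  kubectl get statefulsets -n "$ns" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.replicas}{"\n"}{end}' \
    > "$record" || return 1

  local name replicas
  while IFS=$'\t' read -r name replicas; do
    [ -n "$name" ] || continue
    echo "scaling statefulset/${name} from ${replicas} to 0"
    kubectl scale statefulset "$name" -n "$ns" --replicas=0 || return 1
  done < "$record"
}
```

Scaling to zero stops the Pods but keeps their PersistentVolumeClaims. Moving the underlying data to the Autopilot cluster is still a separate step.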

At this stage, the following should be true:

- You're running all your stateless workloads in Autopilot.

- Any backend stateful workloads are also running in Autopilot.

- Your stateless and stateful workloads can communicate with each other.

- Your multi-cluster Service directs all inbound traffic to your Autopilot cluster.

When you've migrated all the workloads and Kubernetes objects to the new cluster, proceed to Complete the migration.

Alternative: Manually migrate all workloads during downtime

If you don't want to use multi-cluster Services and multi-cluster Ingress to migrate workloads with minimal downtime, migrate all your workloads during downtime. This method results in longer downtime for your services, but doesn't require working with multi-cluster features.

- Start your downtime.

- Deploy your stateless manifests on the Autopilot cluster.

- Manually migrate your stateful workloads. For instructions, see the Manually migrate stateful workloads section.

- Modify DNS records for both intra-cluster and inbound external traffic to use the new IP addresses of Services.

- End your downtime.
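If your DNS records are in Cloud DNS, the DNS step in the preceding list might look like the following sketch. It assumes that the Service is of type LoadBalancer, so its address is in `.status.loadBalancer.ingress[0].ip`, and that an A record for it already exists in a managed zone. The helper name and its arguments are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point an existing Cloud DNS A record at the
# external IP address of a LoadBalancer Service in the current kubectl
# context (the Autopilot cluster).
# Usage: repoint_dns_record NAMESPACE SERVICE ZONE RECORD_NAME [TTL]
repoint_dns_record() {
  local ns="${1:?namespace required}"
  local svc="${2:?service required}"
  local zone="${3:?managed zone required}"
  local record="${4:?record name required, for example shop.example.com.}"
  local ttl="${5:-300}"
  local ip

  ip=$(kubectl get service "$svc" -n "$ns" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}') || return 1
  if [ -z "$ip" ]; then
    echo "service ${ns}/${svc} has no external IP yet" >&2
    return 1
  fi

  # Replace the record's data with the new Service address.
  gcloud dns record-sets update "$record" \
    --zone="$zone" \
    --type=A \
    --ttl="$ttl" \
    --rrdatas="$ip"
}
```

Clients keep using the old address until their cached records expire, so the record's TTL bounds how long the old cluster keeps receiving traffic after the update.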

Complete the migration

After moving all your workloads and Services to the new Autopilot cluster, end your downtime and allow your environment to soak for a predetermined duration. When you're satisfied with the state of your migration and are sure that you won't need to roll the migration back, you can clean up migration artifacts and complete the migration.

Optional: Clean up multi-cluster features

If you used multi-cluster Ingress and multi-cluster Services to migrate, and you don't want your Autopilot cluster to remain registered to a Fleet, do the following:

- For inbound external traffic, deploy an Ingress and set it to the IP address of the Services that expose your workloads. For instructions, see Ingress for external Application Load Balancers.

- For intra-cluster traffic, such as from frontend workloads to stateful dependencies, update cluster DNS records to use the IP addresses of those Services.

- Delete the multi-cluster Ingress and the multi-cluster Service resources that you created during the migration.

- Disable multi-cluster Ingress and multi-cluster Services.

- Unregister the Autopilot cluster from the Fleet.
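The last three cleanup steps can be sketched as follows. Run this only after the replacement Ingress and DNS updates are in place. It assumes hypothetical names that you pass in: the MultiClusterIngress and MultiClusterService names, their namespace, and the fleet membership name of the Autopilot cluster. Run the kubectl commands against the fleet's config cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove the multi-cluster migration resources,
# disable the fleet features, and unregister the Autopilot cluster.
# Usage: cleanup_multicluster NAMESPACE MCI_NAME MCS_NAME MEMBERSHIP CLUSTER_LOCATION CLUSTER_NAME
cleanup_multicluster() {
  local ns="${1:?}" mci="${2:?}" mcs="${3:?}"
  local membership="${4:?}" location="${5:?}" cluster="${6:?}"

  # Delete the migration-only resources from the config cluster.
  kubectl delete multiclusteringress "$mci" -n "$ns" || return 1
  kubectl delete multiclusterservice "$mcs" -n "$ns" || return 1

  # Turn off the fleet features used during the migration.
  gcloud container fleet ingress disable || return 1
  gcloud container fleet multi-cluster-services disable || return 1

  # Remove the Autopilot cluster from the fleet.
  gcloud container fleet memberships unregister "$membership" \
    --gke-cluster="${location}/${cluster}"
}
```

The function stops at the first failing command, so a failed delete leaves the fleet features enabled rather than half-removed.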

Delete the Standard cluster

After the migration completes and enough time has passed for you to be satisfied with the state of your new cluster, delete the Standard cluster. We recommend that you keep backups of your Standard manifests.
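If you don't already have copies of the Standard manifests, one way to keep them is to export common objects to one file per namespace before you delete the cluster, as in this sketch. The helper name and the list of kinds are assumptions. Add any other kinds you use, such as custom resources. Secrets are deliberately left out. Exported objects include server-populated fields such as `status`, so treat the files as a reference rather than as ready-to-apply manifests.

```shell
#!/usr/bin/env bash
# Hypothetical helper: export common objects from each given namespace
# of the cluster in the current kubectl context into BACKUP_DIR.
# Usage: export_manifests BACKUP_DIR NAMESPACE...
export_manifests() {
  local dir="${1:?backup directory required}"
  shift
  local kinds="deployments,statefulsets,daemonsets,services,ingresses,configmaps,persistentvolumeclaims"
  local ns
  mkdir -p "$dir" || return 1
  for ns in "$@"; do
    kubectl get "$kinds" -n "$ns" -o yaml > "${dir}/${ns}.yaml" || return 1
    echo "exported ${ns} to ${dir}/${ns}.yaml"
  done
}
```

After the export, you can delete the Standard cluster with `gcloud container clusters delete CLUSTER_NAME --location=LOCATION`.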

Roll back a faulty migration

If you experience issues and want to revert to the Standard cluster, do one of the following, depending on how you performed the migration:

If you used Backup for GKE to create backups during the migration, restore the backups onto the original Standard cluster. For instructions, see Restore a backup.

If you manually migrated workloads, repeat the migration steps in the previous sections with the Standard cluster as the destination and the Autopilot cluster as the source. At a high level, this involves the following steps:

- Start downtime.

- Manually migrate stateful workloads to the Standard cluster. For instructions, see the Manually migrate stateful workloads section.

- Move stateless workloads to the Standard cluster using the original manifests that you backed up prior to the migration.

- Deploy your Ingress to the Standard cluster and cut over your DNS to the new IP addresses for Services.

- Delete the Autopilot cluster.