This page describes best practices for executing scheduled Cloud Run jobs for Google Cloud projects when using a VPC Service Controls perimeter.

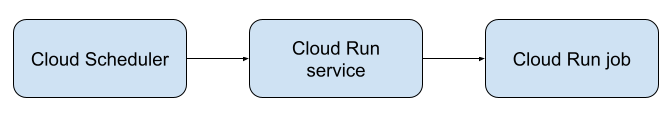

Cloud Scheduler cannot trigger jobs inside a VPC Service Controls perimeter. You must take additional steps to set up scheduled jobs. In particular, you must proxy the request through another component. We recommend using a Cloud Run service as the proxy.

The following diagram shows the architecture:

Before you begin

Set up Cloud Run for VPC Service Controls. This is a one-time setup that all subsequent scheduled jobs use. You must also complete some per-job and per-service setup later, as described in the following instructions.

Set up a scheduled job

To set up a scheduled job inside a VPC Service Controls perimeter:

Create a job and note the name of your job.

Complete the per-job Cloud Run-specific VPC Service Controls setup. You need to connect your job to a VPC network and route all traffic through that network.
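For reference, the per-job network settings correspond to fields of the `Job` resource in the Cloud Run Admin API v2. The following is a minimal sketch, assuming Direct VPC egress (with a Serverless VPC Access connector you would set `vpcAccess.connector` instead of `networkInterfaces`). It builds such a request body as a plain Python dict. The helper name and the network and subnet names are hypothetical.

```python
import json


def build_job_body(image: str, network: str, subnetwork: str) -> dict:
    """Return a Cloud Run Admin API v2 Job body that routes all egress through a VPC.

    Assumes Direct VPC egress; network and subnetwork are values you supply.
    """
    return {
        "template": {  # ExecutionTemplate
            "template": {  # TaskTemplate
                "containers": [{"image": image}],
                "vpcAccess": {
                    "networkInterfaces": [
                        {"network": network, "subnetwork": subnetwork}
                    ],
                    # Send all outbound traffic, not only private ranges, through the VPC.
                    "egress": "ALL_TRAFFIC",
                },
            }
        }
    }


if __name__ == "__main__":
    body = build_job_body(
        "us-docker.pkg.dev/cloudrun/container/job:latest",  # sample jobs container
        "my-network",  # hypothetical network name
        "my-subnet",   # hypothetical subnet name
    )
    print(json.dumps(body, indent=2))
```

The essential setting is `egress: ALL_TRAFFIC`. This setting sends the job's calls to Google APIs through the VPC network, which lets the perimeter apply to them.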

If you don't have an existing Cloud Run job that you want to trigger, test the feature by deploying the sample Cloud Run jobs container `us-docker.pkg.dev/cloudrun/container/job:latest` to Cloud Run.

Deploy the Cloud Run service that acts as a proxy. See Sample proxy service for a sample service that triggers a Cloud Run job in response to a request. After deployment, the console displays the service URL next to the label "URL:". Note this URL, because you need it when you create the Cloud Scheduler job.

Complete the per-service Cloud Run-specific VPC Service Controls setup. You need to connect the service to a VPC network, and route all traffic through that network. Make sure to set ingress to Internal.
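The per-service settings map onto the `Service` resource of the Cloud Run Admin API v2. In that resource, the console's Internal ingress option corresponds to `INGRESS_TRAFFIC_INTERNAL_ONLY`. The following is a minimal sketch, again assuming Direct VPC egress. The helper name and the example values are hypothetical.

```python
import json


def build_proxy_service_body(image: str, network: str, subnetwork: str) -> dict:
    """Return a Cloud Run Admin API v2 Service body for the proxy service."""
    return {
        # Accept requests only from internal sources (the console's "Internal" ingress).
        "ingress": "INGRESS_TRAFFIC_INTERNAL_ONLY",
        "template": {  # RevisionTemplate
            "containers": [{"image": image}],
            "vpcAccess": {
                "networkInterfaces": [
                    {"network": network, "subnetwork": subnetwork}
                ],
                # Route all outbound traffic, including calls to the Cloud Run API, via the VPC.
                "egress": "ALL_TRAFFIC",
            },
        },
    }


if __name__ == "__main__":
    body = build_proxy_service_body(
        "us-central1-docker.pkg.dev/my-project/my-repo/job-runner-service",  # hypothetical image
        "my-network",  # hypothetical network name
        "my-subnet",   # hypothetical subnet name
    )
    print(json.dumps(body, indent=2))
```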

Create a Cloud Scheduler cron job that triggers your Cloud Run proxy service:

In the Google Cloud console, go to the Cloud Scheduler page and click Create Job.

Enter the values you want for the Name, Region, Frequency, and Timezone fields. For more information, see Create a cron job using Cloud Scheduler.

Click Configure the execution.

Select Target type HTTP.

For URL, enter the Cloud Run proxy service URL that you noted when you deployed the proxy service.

For HTTP method, select GET.

For Auth header, select Add OIDC token.

For Service Account, select the Compute Engine default service account or a custom service account that has the `run.routes.invoke` permission or the Cloud Run Invoker role.

For Audience, enter the same Cloud Run proxy service URL.

Leave all other fields blank.

Click Create to create the Cloud Scheduler cron job.
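The console settings above map onto the `Job` resource of the Cloud Scheduler v1 API. The following is a minimal sketch, assuming you want to script the same configuration. The helper name and all example values are hypothetical.

```python
import json


def build_scheduler_job(
    project_id: str,
    location: str,
    job_id: str,
    schedule: str,
    time_zone: str,
    proxy_url: str,
    service_account_email: str,
) -> dict:
    """Return a Cloud Scheduler v1 Job body that calls the proxy with an OIDC token."""
    return {
        "name": f"projects/{project_id}/locations/{location}/jobs/{job_id}",
        "schedule": schedule,  # unix-cron format, for example "0 * * * *" (hourly)
        "timeZone": time_zone,
        "httpTarget": {
            "uri": proxy_url,
            "httpMethod": "GET",
            "oidcToken": {
                "serviceAccountEmail": service_account_email,
                # The audience is the same proxy service URL.
                "audience": proxy_url,
            },
        },
    }


if __name__ == "__main__":
    job = build_scheduler_job(
        "my-project",
        "us-central1",
        "run-my-job",
        "0 * * * *",
        "Etc/UTC",
        "https://job-runner-service-example.a.run.app",  # hypothetical URL
        "scheduler-invoker@my-project.iam.gserviceaccount.com",  # hypothetical account
    )
    print(json.dumps(job, indent=2))
```

The sketch passes `proxy_url` to both `uri` and `audience`, so the two values cannot drift apart.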

Sample proxy service

This section shows a sample Python service that receives the proxied request and triggers the Cloud Run job.

Create a file called `main.py` and paste the following code into it. Update the job name, region, and project ID to the values you need.

```python
import os

from flask import Flask

# pip install google-cloud-run
from google.cloud import run_v2

app = Flask(__name__)


@app.route("/")
def hello():
    client = run_v2.JobsClient()

    # UPDATE TO YOUR JOB NAME, REGION, AND PROJECT ID
    job_name = "projects/YOUR_PROJECT_ID/locations/YOUR_JOB_REGION/jobs/YOUR_JOB_NAME"

    print("Triggering job...")
    request = run_v2.RunJobRequest(name=job_name)
    operation = client.run_job(request=request)

    # result() blocks until the job execution finishes, so the incoming
    # request stays open for the whole run.
    response = operation.result()
    print(response)
    return "Done!"


if __name__ == "__main__":
    # Cloud Run sets PORT. Debug mode is off so that the Werkzeug
    # interactive debugger is not exposed in a deployed service.
    app.run(host="0.0.0.0", port=int(os.environ.get("PORT", 8080)))
```
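The `job_name` string in `main.py` uses the format `projects/PROJECT_ID/locations/REGION/jobs/JOB_NAME`. The following is a minimal sketch of a helper, with the hypothetical name `run_job_name`, that assembles this string and applies a light check. You could use it in place of the hard-coded string.

```python
def run_job_name(project_id: str, region: str, job: str) -> str:
    """Build the fully qualified name that run_v2.RunJobRequest(name=...) expects."""
    for label, value in (("project_id", project_id), ("region", region), ("job", job)):
        # Each component fills one path segment, so it must be non-empty and contain no "/".
        if not value or "/" in value:
            raise ValueError(f"invalid {label}: {value!r}")
    return f"projects/{project_id}/locations/{region}/jobs/{job}"


if __name__ == "__main__":
    print(run_job_name("my-project", "us-central1", "my-job"))
    # prints: projects/my-project/locations/us-central1/jobs/my-job
```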

Create a file named `requirements.txt` and paste the following into it:

```
google-cloud-run
flask
```

Create a Dockerfile with the following contents:

```dockerfile
FROM python:3.9-slim-buster

WORKDIR /app

COPY requirements.txt requirements.txt
RUN pip install --no-cache-dir -r requirements.txt

COPY . .

CMD ["python3", "main.py"]
```

Build and deploy the container. Source-based deployments can be difficult to set up in a VPC Service Controls environment because they require Cloud Build custom workers (private pools). If you have an existing build and deploy pipeline, use it to build the source code into a container and deploy the container as a Cloud Run service.

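If you don't have an existing build and deploy setup, build the container locally, push it to Artifact Registry, and deploy it. For example:

```bash
PROJECT_ID=YOUR_PROJECT_ID
REGION=YOUR_REGION
AR_REPO=YOUR_AR_REPO
CLOUD_RUN_SERVICE=job-runner-service
IMAGE=$REGION-docker.pkg.dev/$PROJECT_ID/$AR_REPO/$CLOUD_RUN_SERVICE

# Let Docker authenticate to Artifact Registry in this region.
gcloud auth configure-docker $REGION-docker.pkg.dev

docker build -t $CLOUD_RUN_SERVICE .
docker tag $CLOUD_RUN_SERVICE $IMAGE
docker push $IMAGE

# Deploy the pushed image as the proxy service.
gcloud run deploy $CLOUD_RUN_SERVICE --image $IMAGE --region $REGION --project $PROJECT_ID
```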

Note the service URL returned by the deploy command.

What's next

After you use this feature, learn more by reading the following: