This page describes GPU configuration for your Cloud Run worker pools. Google provides NVIDIA L4 GPUs with 24 GB of GPU memory (VRAM), which is separate from the instance memory.

GPU on Cloud Run is fully managed, with no extra drivers or libraries needed. The GPU feature offers on-demand availability with no reservations needed, similar to the way on-demand CPU and on-demand memory work in Cloud Run.

Cloud Run instances with an attached L4 GPU with drivers pre-installed start in approximately 5 seconds, at which point the processes running in your container can start to use the GPU.

You can configure one GPU per Cloud Run instance. If you use sidecar containers, note that the GPU can only be attached to one container.

Supported regions

- asia-southeast1 (Singapore)

- asia-south1 (Mumbai). This region is available by invitation only. Contact your Google Account team if you are interested in this region.

- europe-west1 (Belgium) Low CO2

- europe-west4 (Netherlands) Low CO2

- us-central1 (Iowa) Low CO2

- us-east4 (Northern Virginia)

Supported GPU types

You can use one L4 GPU per Cloud Run instance. An L4 GPU has the following pre-installed drivers:

- The current NVIDIA driver version: 535.216.03 (CUDA 12.2)

Pricing impact

See Cloud Run pricing for GPU pricing details. Note the following requirements and considerations:

- There is a difference in cost between GPU zonal redundancy and non-zonal redundancy. See Cloud Run pricing for GPU pricing details.

- GPU worker pools cannot be autoscaled. You are charged for the GPU for as long as the worker pool GPU instance is running, even if the GPU is not running any process.

- CPU and memory for worker pools are priced differently than for services and jobs. However, the GPU SKU is priced the same as for services and jobs.

- You must use a minimum of 4 CPU and 16 GiB of memory.

- GPU is billed for the entire duration of the instance lifecycle.

GPU zonal redundancy options

By default, Cloud Run deploys your worker pool across multiple zones within a region. This architecture provides inherent resilience: if a zone experiences an outage, Cloud Run automatically routes traffic away from the affected zone to healthy zones within the same region.

When working with GPU resources, keep in mind GPU resources have specific capacity constraints. During a zonal outage, the standard failover mechanism for GPU workloads relies on sufficient unused GPU capacity being available in the remaining healthy zones. Due to the constrained nature of GPUs, this capacity might not always be available.

To increase the availability of your GPU-accelerated worker pools during zonal outages, you can configure zonal redundancy specifically for GPUs:

Zonal Redundancy Turned On (default): Cloud Run reserves GPU capacity for your worker pool across multiple zones. This significantly increases the probability that your worker pool can successfully handle traffic rerouted from an affected zone, offering higher reliability during zonal failures with additional cost per GPU second.

Zonal Redundancy Turned Off: Cloud Run attempts failover for GPU workloads on a best-effort basis. Traffic is routed to other zones only if sufficient GPU capacity is available at that moment. This option does not guarantee reserved capacity for failover scenarios but results in a lower cost per GPU second.

SLA

The SLA for Cloud Run GPU depends on whether the worker pool uses the zonal redundancy or non-zonal redundancy option. Refer to the SLA page for details.

Request a quota increase

Projects using Cloud Run nvidia-l4 GPUs in a region for the first time are automatically granted a quota of 3 GPUs (zonal redundancy off) when the first deployment is created.

If you need additional Cloud Run GPUs, you must request a quota increase for your Cloud Run worker pool. Use the links in the following table to request the quota you need.

| Quota needed | Quota link |
|---|---|
| GPU with zonal redundancy turned off (lower price) | Request GPU quota without zonal redundancy |
| GPU with zonal redundancy turned on (higher price) | Request GPU quota with zonal redundancy |

For more information on requesting quota increases, see How to increase quota.

Before you begin

The following list describes requirements and limitations when using GPUs in Cloud Run:

- Sign in to your Google Cloud account. If you're new to Google Cloud, create an account to evaluate how our products perform in real-world scenarios. New customers also get $300 in free credits to run, test, and deploy workloads.

- In the Google Cloud console, on the project selector page, select or create a Google Cloud project.

  Roles required to select or create a project

  - Select a project: Selecting a project doesn't require a specific IAM role; you can select any project that you've been granted a role on.

  - Create a project: To create a project, you need the Project Creator role (roles/resourcemanager.projectCreator), which contains the resourcemanager.projects.create permission. Learn how to grant roles.

- Verify that billing is enabled for your Google Cloud project.


- Enable the Cloud Run API.

  Roles required to enable APIs

  To enable APIs, you need the Service Usage Admin IAM role (roles/serviceusage.serviceUsageAdmin), which contains the serviceusage.services.enable permission. Learn how to grant roles.

- Request required quota.

- Consult GPU Best practices: Cloud Run worker pools with GPUs for recommendations on building your container image and loading large models.

- Make sure your Cloud Run worker pool has the following configurations:

  - Configure the billing settings to instance-based billing. Note that worker pools that are set to instance-based billing can still scale to zero.

  - Configure a minimum of 4 CPU for your worker pool, with 8 CPU recommended.

  - Configure a minimum of 16 GiB of memory, with 32 GiB recommended.

  - Determine and set an optimal maximum concurrency for your GPU usage.

Required roles

To get the permissions that you need to configure and deploy Cloud Run worker pools, ask your administrator to grant you the following IAM roles:

- Cloud Run Developer (roles/run.developer) on the Cloud Run worker pool

- Service Account User (roles/iam.serviceAccountUser) on the service identity

For a list of IAM roles and permissions that are associated with Cloud Run, see Cloud Run IAM roles and Cloud Run IAM permissions. If your Cloud Run worker pool interfaces with Google Cloud APIs, such as Cloud Client Libraries, see the service identity configuration guide. For more information about granting roles, see deployment permissions and manage access.

Configure a Cloud Run worker pool with GPU

Any configuration change leads to the creation of a new revision. Subsequent revisions will also automatically get this configuration setting unless you make explicit updates to change it.

You can use the Google Cloud console, the Google Cloud CLI, YAML, or Terraform to configure GPU.

Console

In the Google Cloud console, go to Cloud Run:

Select Worker Pools from the Cloud Run navigation menu, and click Deploy container to configure a new worker pool. If you are configuring an existing worker pool, click the worker pool, then click Edit and deploy new revision.

If you are configuring a new worker pool, fill out the initial worker pool settings page, then click Container(s), Volumes, Networking, Security to expand the worker pool configuration page.

Click the Container tab.

- Configure CPU, memory, concurrency, execution environment, and startup probe following the recommendations in Before you begin.

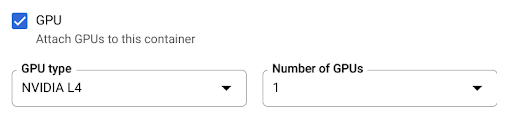

- Check the GPU checkbox, then select the GPU type from the GPU type menu, and the number of GPUs from the Number of GPUs menu.

- By default, zonal redundancy is turned on. To change the current setting, select the GPU checkbox to show the GPU redundancy options.

- Select No zonal redundancy to turn off zonal redundancy.

- Select Zonal redundancy to turn on zonal redundancy.

Click Create or Deploy.

gcloud

To create a GPU-enabled worker pool, use the gcloud beta run worker-pools deploy command:

gcloud beta run worker-pools deploy WORKER_POOL \
  --image IMAGE_URL \
  --gpu 1

Replace the following:

- WORKER_POOL: the name of your Cloud Run worker pool.

- IMAGE_URL: a reference to the container image that contains the worker pool, such as us-docker.pkg.dev/cloudrun/container/worker-pool:latest.

To update the GPU configuration for a worker pool, use the gcloud beta run worker-pools update command:

gcloud beta run worker-pools update WORKER_POOL \
  --image IMAGE_URL \
  --cpu CPU \
  --memory MEMORY \
  --gpu GPU_NUMBER \
  --gpu-type GPU_TYPE \
  --GPU_ZONAL_REDUNDANCY

Replace the following:

- WORKER_POOL: the name of your Cloud Run worker pool.

- IMAGE_URL: a reference to the container image that contains the worker pool, such as us-docker.pkg.dev/cloudrun/container/worker-pool:latest.

- CPU: the number of CPUs. You must specify at least 4 CPU.

- MEMORY: the amount of memory. You must specify at least 16Gi (16 GiB).

- GPU_NUMBER: the value 1 (one). If this is unspecified but a GPU_TYPE is present, the default is 1.

- GPU_TYPE: the GPU type. If this is unspecified but a GPU_NUMBER is present, the default is nvidia-l4 (nvidia-L4: lowercase L, not the numeric value fourteen).

- GPU_ZONAL_REDUNDANCY: no-gpu-zonal-redundancy to turn off zonal redundancy, or gpu-zonal-redundancy to turn on zonal redundancy.
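As a rough illustration of how the placeholders above fit together, the following shell sketch checks the documented minimums (4 CPU, 16 GiB) and prints the resulting update command instead of running it. The function name print_gpu_update_cmd, the pool name my-worker-pool, and the restriction to whole-number Gi values are assumptions made for this example, not part of the gcloud CLI.

```shell
#!/bin/sh
# Hypothetical helper: prints (does not run) a worker pool GPU update command.
# Usage: print_gpu_update_cmd POOL IMAGE CPU MEMORY_GI REDUNDANCY(on|off)
# Assumes CPU and MEMORY_GI are whole numbers.
print_gpu_update_cmd() {
  pool=$1 image=$2 cpu=$3 mem_gi=$4 redundancy=$5

  # Minimums documented above: 4 CPU and 16 GiB of memory.
  if [ "$cpu" -lt 4 ]; then
    echo "error: at least 4 CPU required, got $cpu" >&2
    return 1
  fi
  if [ "$mem_gi" -lt 16 ]; then
    echo "error: at least 16Gi memory required, got ${mem_gi}Gi" >&2
    return 1
  fi

  # Map the desired state to the flag names listed above.
  case $redundancy in
    on)  flag=--gpu-zonal-redundancy ;;
    off) flag=--no-gpu-zonal-redundancy ;;
    *)   echo "error: redundancy must be 'on' or 'off'" >&2; return 1 ;;
  esac

  echo "gcloud beta run worker-pools update $pool" \
       "--image $image --cpu $cpu --memory ${mem_gi}Gi" \
       "--gpu 1 --gpu-type nvidia-l4 $flag"
}

# Dry run: print the command for a 4 CPU / 16Gi pool without zonal redundancy.
print_gpu_update_cmd my-worker-pool \
  us-docker.pkg.dev/cloudrun/container/worker-pool:latest 4 16 off
```

Printing the command rather than executing it lets you review the flags first; once it looks right, you can run the printed line yourself.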

YAML

If you are creating a new worker pool, skip this step. If you are updating an existing worker pool, download its YAML configuration:

gcloud beta run worker-pools describe WORKER_POOL --format export > workerpool.yaml

Update the nvidia.com/gpu: attribute and the nodeSelector: run.googleapis.com/accelerator: attribute:

apiVersion: run.googleapis.com/v1
kind: WorkerPool
metadata:
  name: WORKER_POOL
spec:
  template:
    metadata:
      annotations:
        run.googleapis.com/launch-stage: BETA
        run.googleapis.com/gpu-zonal-redundancy-disabled: 'GPU_ZONAL_REDUNDANCY'
    spec:
      containers:
      - image: IMAGE_URL
        resources:
          limits:
            cpu: 'CPU'
            memory: 'MEMORY'
            nvidia.com/gpu: '1'
      nodeSelector:
        run.googleapis.com/accelerator: GPU_TYPE

Replace the following:

- WORKER_POOL: the name of your Cloud Run worker pool.

- IMAGE_URL: a reference to the container image that contains the worker pool, such as us-docker.pkg.dev/cloudrun/container/worker-pool:latest.

- CPU: the number of CPUs. You must specify at least 4 CPU.

- MEMORY: the amount of memory. You must specify at least 16Gi (16 GiB).

- GPU_TYPE: the value nvidia-l4 (nvidia-L4: lowercase L, not the numeric value fourteen).

- GPU_ZONAL_REDUNDANCY: false to turn on GPU zonal redundancy, or true to turn it off.

Create or update the worker pool using the following command:

gcloud beta run worker-pools replace workerpool.yaml
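The run.googleapis.com/gpu-zonal-redundancy-disabled annotation states whether redundancy is disabled, so its value is the inverse of the setting you want. A minimal sketch of that mapping follows; the helper name redundancy_annotation_value is hypothetical and only illustrates the rule stated in the placeholder list.

```shell
# Hypothetical helper: maps the desired zonal redundancy state (on|off) to the
# value of the run.googleapis.com/gpu-zonal-redundancy-disabled annotation.
redundancy_annotation_value() {
  case $1 in
    on)  echo false ;;  # redundancy on  -> "disabled" is false
    off) echo true ;;   # redundancy off -> "disabled" is true
    *)   echo "usage: redundancy_annotation_value on|off" >&2; return 1 ;;
  esac
}

redundancy_annotation_value off   # prints: true
```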

Terraform

To learn how to apply or remove a Terraform configuration, see Basic Terraform commands.

resource "google_cloud_run_v2_worker_pool" "default" {
  provider = google-beta
  name     = "WORKER_POOL"
  location = "REGION"

  template {
    gpu_zonal_redundancy_disabled = "GPU_ZONAL_REDUNDANCY"

    containers {
      image = "IMAGE_URL"

      resources {
        limits = {
          "cpu"            = "CPU"
          "memory"         = "MEMORY"
          "nvidia.com/gpu" = "1"
        }
      }
    }

    node_selector {
      accelerator = "GPU_TYPE"
    }
  }
}

Replace the following:

- WORKER_POOL: the name of your Cloud Run worker pool.

- REGION: the Google Cloud region for the worker pool, for example us-central1 (see Supported regions).

- GPU_ZONAL_REDUNDANCY: false to turn on GPU zonal redundancy, or true to turn it off.

- IMAGE_URL: a reference to the container image that contains the worker pool, such as us-docker.pkg.dev/cloudrun/container/worker-pool:latest.

- CPU: the number of CPUs. You must specify at least 4 CPU.

- MEMORY: the amount of memory. You must specify at least 16Gi (16 GiB).

- GPU_TYPE: the value nvidia-l4 (nvidia-L4: lowercase L, not the numeric value fourteen).

View GPU settings

To view the current GPU settings for your Cloud Run worker pool:

Console

In the Google Cloud console, go to the Cloud Run worker pools page:

Click the worker pool you are interested in to open the Worker pools details page.

Click Edit and deploy new revision.

Locate the GPU setting in the configuration details.

gcloud

Use the following command:

gcloud beta run worker-pools describe WORKER_POOL

Locate the GPU setting in the returned configuration.
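To avoid scanning the full output by eye, you could filter the exported YAML for the GPU-related keys used elsewhere on this page. The following is a sketch only: show_gpu_settings is a hypothetical helper, and the sample document is a trimmed stand-in for real describe output.

```shell
# Hypothetical helper: reads an exported worker pool YAML on stdin and prints
# only the lines that carry GPU-related settings.
show_gpu_settings() {
  grep -E 'nvidia\.com/gpu|run\.googleapis\.com/accelerator|gpu-zonal-redundancy-disabled'
}

# Example with a trimmed sample document. With a real worker pool, pipe in:
#   gcloud beta run worker-pools describe WORKER_POOL --format export
show_gpu_settings <<'EOF'
spec:
  template:
    spec:
      containers:
      - image: IMAGE_URL
        resources:
          limits:
            cpu: '4'
            nvidia.com/gpu: '1'
      nodeSelector:
        run.googleapis.com/accelerator: nvidia-l4
EOF
```

If the filter prints nothing, the configuration contains none of these keys, which suggests no GPU is configured.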

Remove GPU

You can remove GPU using the Google Cloud console, the Google Cloud CLI, or YAML.

Console

In the Google Cloud console, go to Cloud Run:

Select Worker Pools from the Cloud Run navigation menu, and click Deploy container to configure a new worker pool. If you are configuring an existing worker pool, click the worker pool, then click Edit and deploy new revision.

If you are configuring a new worker pool, fill out the initial worker pool settings page, then click Container(s), Volumes, Networking, Security to expand the worker pool configuration page.

Click the Container tab.

- Uncheck the GPU checkbox.

- Click Create or Deploy.

gcloud

To remove GPU, set the number of GPUs to 0:

gcloud beta run worker-pools update WORKER_POOL --gpu 0

Replace WORKER_POOL with the name of your Cloud Run worker pool.

YAML

If you are creating a new worker pool, skip this step. If you are updating an existing worker pool, download its YAML configuration:

gcloud beta run worker-pools describe WORKER_POOL --format export > workerpool.yaml

Delete the nvidia.com/gpu: and the nodeSelector: run.googleapis.com/accelerator: nvidia-l4 lines.

Create or update the worker pool using the following command:

gcloud beta run worker-pools replace workerpool.yaml

Libraries

By default, all of the NVIDIA L4 driver libraries are mounted under /usr/local/nvidia/lib64. Cloud Run automatically appends this path to the LD_LIBRARY_PATH environment variable (that is, ${LD_LIBRARY_PATH}:/usr/local/nvidia/lib64) of the container with the GPU. This allows the dynamic linker to find the NVIDIA driver libraries. The linker searches and resolves paths in the order you list in the LD_LIBRARY_PATH environment variable. Any values you specify in this variable take precedence over the default Cloud Run driver libraries path /usr/local/nvidia/lib64.
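To make the search order concrete, this sketch reproduces the documented append form for a hypothetical starting value and prints the directories in the order the dynamic linker would consult them. effective_ld_path and /opt/mylibs are illustrative names, not part of Cloud Run.

```shell
# Hypothetical helper that follows the documented form literally:
# ${LD_LIBRARY_PATH}:/usr/local/nvidia/lib64
effective_ld_path() {
  echo "$1:/usr/local/nvidia/lib64"
}

# Print one directory per line, in search order. The container's own
# directory comes first, the Cloud Run driver path last.
effective_ld_path "/opt/mylibs" | tr ':' '\n'
```

Because your entries come first, a library in /opt/mylibs with the same name as a driver library would be picked up instead of the Cloud Run copy.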

If you want to use a CUDA version greater than 12.2, the easiest way is to depend on a newer NVIDIA base image with forward compatibility packages already installed. Another option is to manually install the NVIDIA forward compatibility packages and add them to LD_LIBRARY_PATH. Consult NVIDIA's compatibility matrix to determine which CUDA versions are forward compatible with the provided NVIDIA driver version (535.216.03).
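If you install the forward compatibility packages manually, their directory needs to appear in LD_LIBRARY_PATH ahead of the Cloud Run driver path. Here is a minimal entrypoint-style sketch, assuming the packages were installed under /usr/local/cuda/compat; that location is an assumption, so verify it in your own image. The prepend_path helper and the COMPAT_DIR variable are hypothetical names.

```shell
# Assumed install location of the forward compatibility libraries; adjust
# to match your image.
COMPAT_DIR=${COMPAT_DIR:-/usr/local/cuda/compat}

# Prepends a directory to a colon-separated path, avoiding an empty entry
# when the existing path is unset or empty.
prepend_path() {
  if [ -n "$2" ]; then
    echo "$1:$2"
  else
    echo "$1"
  fi
}

# Only prepend when the directory actually exists in the container.
if [ -d "$COMPAT_DIR" ]; then
  LD_LIBRARY_PATH=$(prepend_path "$COMPAT_DIR" "${LD_LIBRARY_PATH:-}")
  export LD_LIBRARY_PATH
fi
```

Prepending rather than appending matters here because the linker resolves libraries in the order the paths are listed.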