This page describes GPU configuration for your Cloud Run jobs. GPUs work well for AI workloads such as training large language models (LLMs) using your preferred frameworks, performing batch or offline inference on LLMs, and handling other compute-intensive tasks like video processing and graphics rendering as background jobs. Google provides NVIDIA L4 GPUs with 24 GB of GPU memory (VRAM), which is separate from the instance memory.

GPU on Cloud Run is fully managed, with no extra drivers or libraries needed. The GPU feature offers on-demand availability with no reservations needed, similar to the way on-demand CPU and on-demand memory work in Cloud Run.

Cloud Run instances with an attached L4 GPU and pre-installed drivers start in approximately 5 seconds, at which point the processes running in your container can start to use the GPU.

You can configure one GPU per Cloud Run instance. If you use sidecar containers, note that the GPU can only be attached to one container.

Supported regions

- asia-southeast1 (Singapore)

- asia-south1 (Mumbai). This region is available by invitation only. Contact your Google Account team if you are interested in this region.

- europe-west1 (Belgium), Low CO2

- europe-west4 (Netherlands), Low CO2

- us-central1 (Iowa), Low CO2

- us-east4 (Northern Virginia)

Supported GPU types

You can use one L4 GPU per Cloud Run instance. An L4 GPU has the following pre-installed drivers:

- The current NVIDIA driver version: 535.216.03 (CUDA 12.2)
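To confirm from inside a running task that the GPU is visible and reports the documented driver, you could query nvidia-smi. The following Python sketch assumes that the nvidia-smi binary is on the container's PATH, which this page does not state; the helper names are hypothetical.

```python
import shutil
import subprocess
from typing import Optional

# Driver version documented on this page for Cloud Run L4 GPUs.
EXPECTED_DRIVER = "535.216.03"

# nvidia-smi query flags; the output is one CSV line per visible GPU.
QUERY = ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"]


def parse_gpu_line(line: str) -> dict:
    """Parse one 'name, driver_version' CSV line into a dict."""
    name, driver = (field.strip() for field in line.split(","))
    return {"name": name, "driver_version": driver}


def query_gpu() -> Optional[dict]:
    """Return the first GPU's name and driver, or None if nvidia-smi is absent."""
    if shutil.which("nvidia-smi") is None:
        return None
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    lines = out.strip().splitlines()
    # Cloud Run attaches at most one GPU, so only the first line matters.
    return parse_gpu_line(lines[0]) if lines else None


if __name__ == "__main__":
    info = query_gpu()
    if info is None:
        print("No GPU visible: nvidia-smi is missing or reported nothing.")
    else:
        print(info)
        if info["driver_version"] != EXPECTED_DRIVER:
            print(f"Unexpected driver; this page documents {EXPECTED_DRIVER}.")
```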

Pricing impact

See Cloud Run pricing for GPU pricing details. Note the following requirements and considerations:

- GPU-enabled jobs are billed at the no zonal redundancy rate.

- You must use a minimum of 4 CPU and 16 GiB of memory.

- GPU is billed for the entire duration of the instance lifecycle.
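The CPU and memory minimums above can be checked before you deploy. This Python sketch handles only the Mi and Gi memory suffixes used on this page; the function names are hypothetical.

```python
import re

MIN_CPU = 4           # minimum CPUs for a GPU-enabled job (this page)
MIN_MEMORY_GIB = 16   # minimum memory in GiB for a GPU-enabled job (this page)

_GIB_PER_UNIT = {"Mi": 1 / 1024, "Gi": 1.0}


def memory_to_gib(quantity: str) -> float:
    """Convert a quantity such as '16Gi' or '32768Mi' to GiB."""
    match = re.fullmatch(r"(\d+(?:\.\d+)?)(Mi|Gi)", quantity.strip())
    if match is None:
        raise ValueError(f"unsupported memory quantity: {quantity!r}")
    return float(match.group(1)) * _GIB_PER_UNIT[match.group(2)]


def resource_problems(cpu: float, memory: str) -> list:
    """Return human-readable problems; an empty list means the minimums are met."""
    problems = []
    if cpu < MIN_CPU:
        problems.append(f"cpu={cpu} is below the minimum of {MIN_CPU}")
    if memory_to_gib(memory) < MIN_MEMORY_GIB:
        problems.append(f"memory={memory} is below the minimum of {MIN_MEMORY_GIB}Gi")
    return problems


print(resource_problems(8, "32Gi"))  # the values this page recommends
print(resource_problems(2, "8Gi"))   # too small on both counts
```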

GPU non-zonal redundancy

In Cloud Run jobs, non-zonal redundancy is supported only for GPU-enabled instances. With non-zonal redundancy enabled, Cloud Run attempts failover for GPU-enabled jobs on a best-effort basis. Cloud Run routes job executions to other zones only if sufficient GPU capacity is available at that moment. This option does not guarantee reserved capacity for failover scenarios but results in a lower cost per GPU second.

See Configure a Cloud Run job to use GPUs for details on enabling non-zonal redundancy.

Request a quota increase

If your project doesn't have GPU quota, deploy a Cloud Run service to automatically receive a grant of 3 nvidia-l4 GPU quota (zonal redundancy off) for that region. Note that this automatic quota grant is subject to availability depending on your CPU and memory capacity. This quota limits the number of GPUs that can be active across all of the project's services, jobs, and worker pools at any given time.

If you need additional Cloud Run GPUs for jobs, request a quota increase.

Before you begin

The following list describes requirements and limitations when using GPUs in Cloud Run:

- Sign in to your Google Cloud account. If you're new to Google Cloud, create an account to evaluate how our products perform in real-world scenarios. New customers also get $300 in free credits to run, test, and deploy workloads.

- In the Google Cloud console, on the project selector page, select or create a Google Cloud project.

  Roles required to select or create a project

  - Select a project: Selecting a project doesn't require a specific IAM role; you can select any project that you've been granted a role on.

  - Create a project: To create a project, you need the Project Creator role (roles/resourcemanager.projectCreator), which contains the resourcemanager.projects.create permission. Learn how to grant roles.

- Verify that billing is enabled for your Google Cloud project.

- Enable the Cloud Run API.

  Roles required to enable APIs

  To enable APIs, you need the Service Usage Admin IAM role (roles/serviceusage.serviceUsageAdmin), which contains the serviceusage.services.enable permission. Learn how to grant roles.

- Consult Best practices: Cloud Run jobs with GPUs for optimizing performance when using Cloud Run jobs with GPU.

Required roles

To get the permissions that you need to configure Cloud Run jobs, ask your administrator to grant you the following IAM roles on jobs:

- Cloud Run Developer (roles/run.developer) on the Cloud Run job

- Service Account User (roles/iam.serviceAccountUser) on the service identity

For a list of IAM roles and permissions that are associated with Cloud Run, see Cloud Run IAM roles and Cloud Run IAM permissions. If your Cloud Run job interfaces with Google Cloud APIs, such as Cloud Client Libraries, see the service identity configuration guide. For more information about granting roles, see deployment permissions and manage access.

Configure a Cloud Run job to use GPUs

You can use the Google Cloud console, Google Cloud CLI or YAML to configure GPU.

Console

In the Google Cloud console, go to the Cloud Run Jobs page.

Click Deploy container to fill out the initial job settings page. If you are configuring an existing job, select the job, then click View and edit job configuration.

Click Container(s), Volumes, Connections, Security to expand the job properties page.

Click the Container tab.

- Configure CPU, memory, and startup probe following the recommendations in Before you begin.

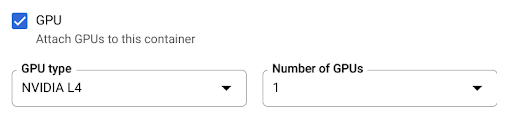

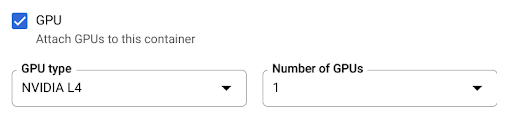

- Check the GPU checkbox. Then select the GPU type from the GPU type menu, and the number of GPUs from the Number of GPUs menu.

Click Create or Update.

gcloud

To enable non-zonal redundancy, you must specify --no-gpu-zonal-redundancy. This flag is required for using GPUs with jobs.

To create a job with GPUs enabled, use the gcloud run jobs create command:

gcloud run jobs create JOB_NAME \
  --image=IMAGE_URL \
  --gpu=1 \
  --no-gpu-zonal-redundancy

Replace:

- JOB_NAME with the name of your Cloud Run job.

- IMAGE_URL with a reference to the container image, for example, us-docker.pkg.dev/cloudrun/container/job:latest.

To update the GPU configuration for a job, use the gcloud run jobs update command:

gcloud run jobs update JOB_NAME \
  --image IMAGE_URL \
  --cpu CPU \
  --memory MEMORY \
  --gpu GPU_NUMBER \
  --gpu-type GPU_TYPE \
  --parallelism PARALLELISM \
  --no-gpu-zonal-redundancy

Replace:

- JOB_NAME with the name of your Cloud Run job.

- IMAGE_URL with a reference to the container image, for example, us-docker.pkg.dev/cloudrun/container/job:latest.

- CPU with a minimum of 4 CPU for your job. The recommended value is 8.

- MEMORY with a minimum of 16Gi (16 GiB). The recommended value is 32Gi.

- GPU_NUMBER with the value 1 (one). If this is unspecified but a GPU_TYPE is present, the default is 1.

- GPU_TYPE with the GPU type. If this is unspecified but a GPU_NUMBER is present, the default is nvidia-l4 (with a lowercase L, not the number 14).

- PARALLELISM with an integer value less than the lowest value of the applicable quota limits you allocated for your project.
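If you script deployments, you can assemble the create and update commands above programmatically. The following Python sketch only builds the argument list from the flags shown on this page, and emits --gpu-type only when you pass one; actually running the command assumes the gcloud CLI is installed and authenticated. The function name and the job name my-job are hypothetical.

```python
import shlex
from typing import List, Optional


def gpu_job_command(
    action: str,
    job_name: str,
    image_url: str,
    *,
    cpu: Optional[str] = None,
    memory: Optional[str] = None,
    gpu_type: Optional[str] = None,
    parallelism: Optional[int] = None,
) -> List[str]:
    """Build `gcloud run jobs create|update` arguments for a GPU-enabled job."""
    if action not in ("create", "update"):
        raise ValueError("action must be 'create' or 'update'")
    args = ["gcloud", "run", "jobs", action, job_name, f"--image={image_url}"]
    if cpu is not None:
        args.append(f"--cpu={cpu}")
    if memory is not None:
        args.append(f"--memory={memory}")
    # Cloud Run supports exactly one GPU per instance.
    args.append("--gpu=1")
    if gpu_type is not None:
        args.append(f"--gpu-type={gpu_type}")
    if parallelism is not None:
        args.append(f"--parallelism={parallelism}")
    # GPU jobs require non-zonal redundancy.
    args.append("--no-gpu-zonal-redundancy")
    return args


if __name__ == "__main__":
    cmd = gpu_job_command(
        "update", "my-job", "us-docker.pkg.dev/cloudrun/container/job:latest",
        cpu="8", memory="32Gi", gpu_type="nvidia-l4", parallelism=3,
    )
    print(shlex.join(cmd))
    # To run it (requires gcloud): subprocess.run(cmd, check=True)
```

Returning a list rather than a single string lets you pass it straight to subprocess.run without shell quoting issues; shlex.join is used only for display.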

YAML

You must set the annotation run.googleapis.com/gpu-zonal-redundancy-disabled to 'true'. This enables non-zonal redundancy, which is required for GPUs for jobs.

If you are creating a new job, skip this step. If you are updating an existing job, download its YAML configuration:

gcloud run jobs describe JOB_NAME --format export > job.yaml

Update the nvidia.com/gpu attribute, the run.googleapis.com/launch-stage annotation for the launch stage, and the nodeSelector: run.googleapis.com/accelerator attribute:

apiVersion: run.googleapis.com/v1
kind: Job
metadata:
  name: JOB_NAME
  labels:
    cloud.googleapis.com/location: REGION
spec:
  template:
    metadata:
      annotations:
        run.googleapis.com/gpu-zonal-redundancy-disabled: 'true'
    spec:
      template:
        spec:
          containers:
          - image: IMAGE_URL
            resources:
              limits:
                cpu: 'CPU'
                memory: 'MEMORY'
                nvidia.com/gpu: 'GPU_NUMBER'
          nodeSelector:
            run.googleapis.com/accelerator: GPU_TYPE

Replace:

- JOB_NAME with the name of your Cloud Run job.

- REGION with the region of your job, which must be one of the supported regions listed on this page.

- IMAGE_URL with a reference to the container image, for example, us-docker.pkg.dev/cloudrun/container/job:latest.

- CPU with the number of CPUs. You must specify at least 4 CPU.

- MEMORY with the amount of memory. You must specify at least 16Gi (16 GiB).

- GPU_NUMBER with the value 1 (one), because Cloud Run supports attaching only one GPU per instance.

- GPU_TYPE with the value nvidia-l4 (with a lowercase L, not the number 14).

Create or update the job using the following command:

gcloud run jobs replace job.yaml
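The YAML edits described above can also be applied programmatically. This Python sketch operates on the job resource as a dictionary, for example after parsing job.yaml with a YAML library such as PyYAML (an assumption; it is not part of the standard library), and writes the same three settings shown in the sample YAML. Because the GPU can be attached to only one container, it targets a single container by index. The function name is hypothetical.

```python
import copy

ZONAL_ANNOTATION = "run.googleapis.com/gpu-zonal-redundancy-disabled"
ACCELERATOR_KEY = "run.googleapis.com/accelerator"


def add_gpu_config(job: dict, container_index: int = 0, gpu_type: str = "nvidia-l4") -> dict:
    """Return a copy of a Cloud Run Job resource with GPU settings added."""
    job = copy.deepcopy(job)  # leave the caller's dict untouched
    execution_template = job.setdefault("spec", {}).setdefault("template", {})

    # Annotation on the execution template: opt in to non-zonal redundancy.
    annotations = execution_template.setdefault("metadata", {}).setdefault("annotations", {})
    annotations[ZONAL_ANNOTATION] = "true"

    task_spec = (
        execution_template.setdefault("spec", {})
        .setdefault("template", {})
        .setdefault("spec", {})
    )

    # Attach the single GPU to one container only (sidecars cannot share it).
    container = task_spec["containers"][container_index]
    container.setdefault("resources", {}).setdefault("limits", {})["nvidia.com/gpu"] = "1"

    # Select the accelerator type for the task's instances.
    task_spec.setdefault("nodeSelector", {})[ACCELERATOR_KEY] = gpu_type
    return job
```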

View GPU settings

To view the current GPU settings for your Cloud Run job:

Console

In the Google Cloud console, go to the Cloud Run jobs page.

Click the job you are interested in to open the Job details page.

Click View and edit job configuration.

Locate the GPU setting in the configuration details.

gcloud

Use the following command:

gcloud run jobs describe JOB_NAME

Locate the GPU setting in the returned configuration.
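To inspect the GPU settings programmatically, you can request machine-readable output with gcloud's global --format=json flag and read the fields that the YAML section above sets. This Python sketch assumes the describe output mirrors the Job resource layout shown in that YAML and that gcloud is installed; the helper names, job name, and region in the example comment are hypothetical.

```python
import json
import subprocess
from typing import Optional


def describe_job(job_name: str, region: Optional[str] = None) -> dict:
    """Run `gcloud run jobs describe` and parse its JSON output."""
    cmd = ["gcloud", "run", "jobs", "describe", job_name, "--format=json"]
    if region:
        cmd.append(f"--region={region}")
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return json.loads(result.stdout)


def gpu_settings(job: dict) -> dict:
    """Extract GPU-related fields; missing fields come back as None or []."""
    execution_template = job.get("spec", {}).get("template", {})
    annotations = execution_template.get("metadata", {}).get("annotations", {})
    task_spec = execution_template.get("spec", {}).get("template", {}).get("spec", {})
    return {
        "gpus_per_container": [
            c.get("resources", {}).get("limits", {}).get("nvidia.com/gpu")
            for c in task_spec.get("containers", [])
        ],
        "accelerator": task_spec.get("nodeSelector", {}).get("run.googleapis.com/accelerator"),
        "zonal_redundancy_disabled": annotations.get(
            "run.googleapis.com/gpu-zonal-redundancy-disabled"
        ),
    }


# Example (requires gcloud and an existing job):
# print(gpu_settings(describe_job("my-job", region="us-central1")))
```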

Detach GPU resources from a job

You can detach GPU resources from a job using the Google Cloud console, Google Cloud CLI or YAML.

Console

In the Google Cloud console, go to the Cloud Run Jobs page.

In the jobs list, click a job to open that job's details.

Click View and edit job configuration.

Click Container(s), Volumes, Connections, Security to expand the job properties page.

Click the Container tab.

- Clear the GPU checkbox.

Click Update.

gcloud

To detach GPU resources from your Cloud Run job, set the number of GPUs to 0 using the gcloud run jobs update command:

gcloud run jobs update JOB_NAME --gpu 0

Replace JOB_NAME with the name of your Cloud Run job.

YAML

Download the job's YAML configuration:

gcloud run jobs describe JOB_NAME --format export > job.yaml

Delete the nvidia.com/gpu:, the run.googleapis.com/gpu-zonal-redundancy-disabled: 'true', and the nodeSelector: run.googleapis.com/accelerator: nvidia-l4 lines.

Update the job using the following command:

gcloud run jobs replace job.yaml
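The deletions described above can be sketched in Python on the job resource as a dictionary. This assumes you parse job.yaml and write it back with a YAML library of your choice, such as PyYAML, which is not part of the standard library; the function name is hypothetical. Other resource limits, such as CPU and memory, are left as they are.

```python
import copy

ZONAL_ANNOTATION = "run.googleapis.com/gpu-zonal-redundancy-disabled"
ACCELERATOR_KEY = "run.googleapis.com/accelerator"


def remove_gpu_config(job: dict) -> dict:
    """Return a copy of a Cloud Run Job resource with GPU settings removed."""
    job = copy.deepcopy(job)
    execution_template = job.get("spec", {}).get("template", {})

    # Drop the non-zonal redundancy annotation.
    execution_template.get("metadata", {}).get("annotations", {}).pop(ZONAL_ANNOTATION, None)

    task_spec = execution_template.get("spec", {}).get("template", {}).get("spec", {})

    # Drop the GPU limit from every container, keeping CPU and memory limits.
    for container in task_spec.get("containers", []):
        container.get("resources", {}).get("limits", {}).pop("nvidia.com/gpu", None)

    # Drop the accelerator selector, and the nodeSelector block if it is now empty.
    node_selector = task_spec.get("nodeSelector")
    if node_selector is not None:
        node_selector.pop(ACCELERATOR_KEY, None)
        if not node_selector:
            del task_spec["nodeSelector"]
    return job
```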

Libraries

By default, all of the NVIDIA L4 driver libraries are mounted under /usr/local/nvidia/lib64. Cloud Run automatically appends this path to the LD_LIBRARY_PATH environment variable (that is, ${LD_LIBRARY_PATH}:/usr/local/nvidia/lib64) of the container with the GPU. This allows the dynamic linker to find the NVIDIA driver libraries. The linker searches and resolves paths in the order you list in the LD_LIBRARY_PATH environment variable. Any values you specify in this variable take precedence over the default Cloud Run driver libraries path /usr/local/nvidia/lib64.
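The precedence rule above can be modeled in a few lines of Python. This is a simplified sketch: it models only the LD_LIBRARY_PATH lookup (the real dynamic linker also consults RUNPATH, the linker cache, and default directories), and the /usr/local/cuda/compat directory and its contents in the example are hypothetical. Because the dynamic linker reads LD_LIBRARY_PATH when a process starts, set the variable in the container's environment rather than from inside a running program.

```python
from typing import Dict, Optional, Set

NVIDIA_LIB_DIR = "/usr/local/nvidia/lib64"


def effective_ld_library_path(user_value: Optional[str]) -> str:
    """Model Cloud Run appending the driver directory: ${LD_LIBRARY_PATH}:/usr/local/nvidia/lib64."""
    if not user_value:
        # Assumption: with no user value, only the driver directory is searched.
        return NVIDIA_LIB_DIR
    return f"{user_value}:{NVIDIA_LIB_DIR}"


def resolving_dir(lib: str, ld_library_path: str, contents: Dict[str, Set[str]]) -> Optional[str]:
    """Return the first directory, left to right, that contains `lib`."""
    for directory in ld_library_path.split(":"):
        if directory and lib in contents.get(directory, set()):
            return directory
    return None


path = effective_ld_library_path("/usr/local/cuda/compat")
# Hypothetical directory contents: the compat copy of libcuda shadows the driver's copy.
contents = {
    "/usr/local/cuda/compat": {"libcuda.so.1"},
    NVIDIA_LIB_DIR: {"libcuda.so.1", "libnvidia-ml.so.1"},
}
print(path)                                                 # /usr/local/cuda/compat:/usr/local/nvidia/lib64
print(resolving_dir("libcuda.so.1", path, contents))       # /usr/local/cuda/compat
print(resolving_dir("libnvidia-ml.so.1", path, contents))  # /usr/local/nvidia/lib64
```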

If you want to use a CUDA version greater than 12.2, the easiest way is to depend on a newer NVIDIA base image with forward compatibility packages already installed. Another option is to manually install the NVIDIA forward compatibility packages and add them to LD_LIBRARY_PATH. Consult NVIDIA's compatibility matrix to determine which CUDA versions are forward compatible with the provided NVIDIA driver version (535.216.03).
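To see which CUDA version the loaded driver library supports, and whether an application built for a newer CUDA release would need forward compatibility packages, you could call the CUDA driver API function cuDriverGetVersion through Python's ctypes. This sketch assumes libcuda.so.1 is resolvable through LD_LIBRARY_PATH; if forward compatibility libraries come first on that path, the version reported is the one from those libraries. The helper names are hypothetical, the comparison looks only at major and minor versions, and NVIDIA's compatibility matrix remains the authority.

```python
import ctypes
from typing import Optional, Tuple

# CUDA version paired with driver 535.216.03 on this page.
DRIVER_CUDA = (12, 2)


def decode_cuda_version(value: int) -> Tuple[int, int]:
    """cuDriverGetVersion encodes versions as 1000 * major + 10 * minor (12020 -> 12.2)."""
    return value // 1000, (value % 1000) // 10


def loaded_driver_cuda_version() -> Optional[Tuple[int, int]]:
    """Ask the driver library that the dynamic linker finds; None if unavailable."""
    try:
        libcuda = ctypes.CDLL("libcuda.so.1")
    except OSError:
        return None  # no driver library on the search path (for example, no GPU attached)
    version = ctypes.c_int(0)
    if libcuda.cuDriverGetVersion(ctypes.byref(version)) != 0:  # 0 is CUDA_SUCCESS
        return None
    return decode_cuda_version(version.value)


def needs_forward_compat(app_cuda: Tuple[int, int], driver_cuda: Tuple[int, int] = DRIVER_CUDA) -> bool:
    """True if the application targets a newer CUDA release than the driver supports."""
    return app_cuda > driver_cuda


if __name__ == "__main__":
    print("driver reports CUDA", loaded_driver_cuda_version())
    print("CUDA 12.4 app needs forward compat:", needs_forward_compat((12, 4)))
```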

About GPUs and parallelism

If you are running parallel tasks in a job execution, set the parallelism value to less than the GPU quota without zonal redundancy allocated for your project. To request a quota increase, see How to increase quota. GPU tasks start as quickly as possible, up to a maximum that depends on how much GPU quota is allocated to the project in the selected region. Cloud Run deployments fail if you set parallelism to more than the GPU quota limit.

To calculate the GPU quota your job uses per execution, multiply the number of GPUs per job task by the parallelism value. For example, if you have a GPU quota of 10 and deploy your Cloud Run job with --gpu=1 and --parallelism=10, then your job consumes all 10 GPUs of quota. If you instead deploy with --gpu=1 and --parallelism=20, the deployment fails.
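The quota arithmetic above can be expressed as a small Python check. It encodes the failure condition stated on this page (GPUs per task times parallelism must not exceed the quota) and the bound set by the lowest applicable quota limit; the function names are hypothetical, and the page additionally recommends keeping parallelism below the quota.

```python
from typing import Iterable


def gpu_quota_per_execution(gpus_per_task: int, parallelism: int) -> int:
    """GPU quota one execution can consume: GPUs per task times parallelism."""
    return gpus_per_task * parallelism


def fits_quota(gpus_per_task: int, parallelism: int, quota: int) -> bool:
    """False means the deployment would fail for exceeding the GPU quota."""
    return gpu_quota_per_execution(gpus_per_task, parallelism) <= quota


def max_parallelism(quota_limits: Iterable[int], gpus_per_task: int = 1) -> int:
    """Largest parallelism allowed by the lowest applicable quota limit."""
    return min(quota_limits) // gpus_per_task


# The two scenarios from the text, with a GPU quota of 10:
print(fits_quota(1, 10, 10))  # True: consumes all 10 GPUs of quota
print(fits_quota(1, 20, 10))  # False: the deployment fails
```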

For more information, see Best practices: Cloud Run jobs with GPUs.

What's next

See Run AI inference on Cloud Run with GPUs for tutorials.