When you create a data pipeline in Cloud Data Fusion, you use a series of stages, known as nodes, to move and manage data as it flows from source to sink. Each node consists of a plugin, a customizable module that extends the capabilities of Cloud Data Fusion.

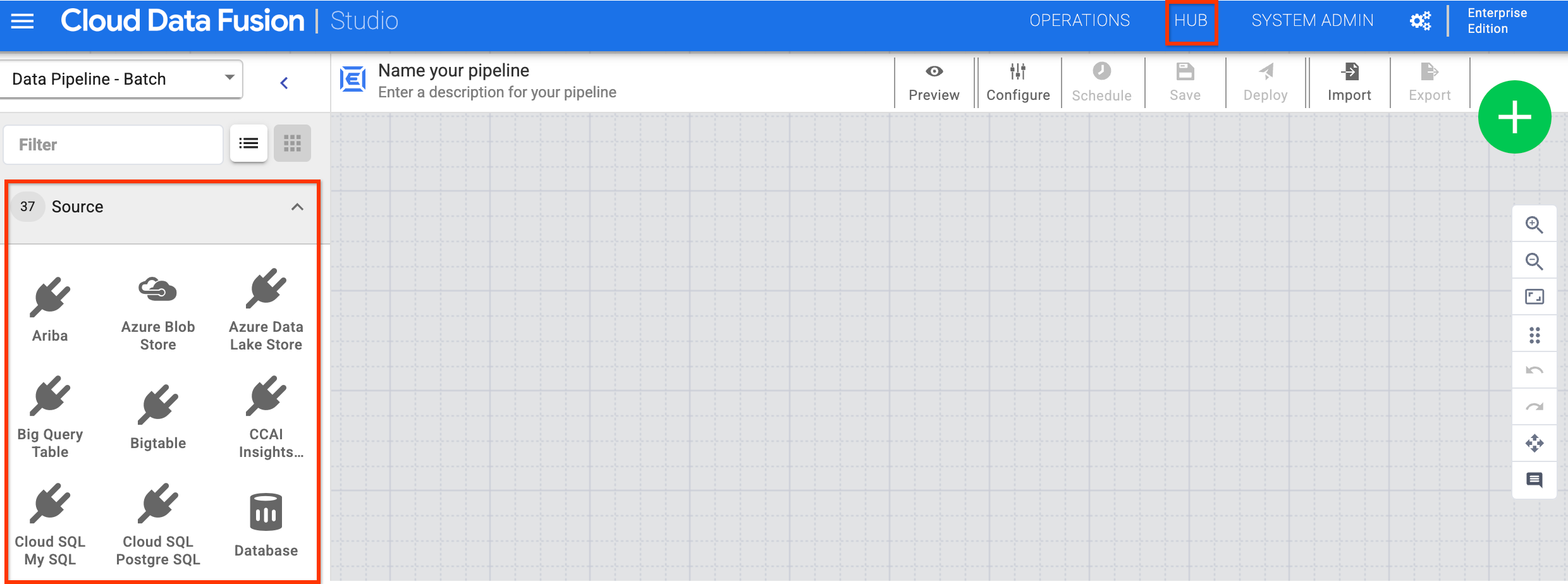
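To make the node model concrete, here is a minimal Python sketch of a pipeline that reads from a source, passes records through a chain of nodes, and writes them to a sink. It is a conceptual model only. `Node`, `run_pipeline`, and the sample functions are hypothetical names, not part of Cloud Data Fusion, which builds and runs pipelines for you from the Studio page.

```python
from collections.abc import Callable, Iterable
from dataclasses import dataclass

Record = dict  # hypothetical: a record is modeled as a plain dict


@dataclass
class Node:
    """Hypothetical node: a named stage that wraps one plugin function."""
    name: str
    plugin: Callable[[Iterable[Record]], Iterable[Record]]


def run_pipeline(
    source: Callable[[], Iterable[Record]],
    nodes: list[Node],
    sink: Callable[[Iterable[Record]], None],
) -> None:
    """Read from the source, apply each node in order, then write to the sink."""
    records: Iterable[Record] = source()
    for node in nodes:
        records = node.plugin(records)  # each node consumes the previous node's output
    sink(records)


# Usage: a source with two records, one transform node, and a list as the sink.
def read_source() -> list[Record]:
    return [{"id": 1, "name": " Ada "}, {"id": 2, "name": "Grace"}]


def trim_names(records: Iterable[Record]) -> Iterable[Record]:
    for record in records:
        yield {**record, "name": record["name"].strip()}


output: list[Record] = []
run_pipeline(read_source, [Node("trim", trim_names)], output.extend)
```

Because each node only sees the output of the node before it, you can add, remove, or reorder stages without changing the source or the sink.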

You can find the plugins in the Cloud Data Fusion web interface by going to the Studio page. For more plugins, click Hub.

Plugin types

Plugins are grouped into the following categories:

- Sources

- Transformations

- Analytics

- Sinks

- Conditions and actions

- Error handlers and alerts

Sources

Source plugins connect to databases, files, or real-time streams from which your pipeline reads data. You set up sources for your data pipeline using the web interface, so you don't have to worry about coding low-level connections.

Transformations

Transform plugins change data after it's ingested from a source. For example, you can clone a record, change the file format to JSON, or use the JavaScript plugin to create a custom transformation.
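The following Python sketch shows what two of the transformations described above do to records: cloning each record, then replacing each record with its JSON encoding. The function names, the `copies` parameter, and the output field name `body` are assumptions for illustration. They are not the configuration properties of the actual Cloud Data Fusion plugins, and the sketch is written in Python rather than the JavaScript that the JavaScript plugin uses.

```python
import copy
import json
from collections.abc import Iterable, Iterator


def clone_records(records: Iterable[dict], copies: int) -> Iterator[dict]:
    """Emit each input record `copies` times (hypothetical clone transform)."""
    for record in records:
        for _ in range(copies):
            yield copy.deepcopy(record)  # independent copies, so later edits don't leak


def to_json(records: Iterable[dict]) -> Iterator[dict]:
    """Replace each record with one field holding its JSON text (hypothetical field name)."""
    for record in records:
        yield {"body": json.dumps(record, sort_keys=True)}


rows = [{"id": 1, "city": "Paris"}]
result = list(to_json(clone_records(rows, copies=2)))
# result holds two records, each {"body": '{"city": "Paris", "id": 1}'}
```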

Analytics

Analytics plugins combine and summarize data. For example, they can join data from different sources, group records to compute aggregates, and run analytics and machine learning operations.
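As a rough illustration of the analytics operations described above, this Python sketch joins records from two sources on a shared key and then sums a value per group. It is a conceptual model, not the Joiner or Group By plugin implementation. The function names and the `customer_id`, `region`, and `amount` fields are hypothetical.

```python
from collections import defaultdict
from collections.abc import Iterable


def inner_join(left: Iterable[dict], right: Iterable[dict], key: str) -> list[dict]:
    """Combine records from two sources whose `key` values match (inner join)."""
    index: defaultdict[object, list[dict]] = defaultdict(list)
    for right_rec in right:
        index[right_rec[key]].append(right_rec)
    joined = []
    for left_rec in left:
        # .get() avoids inserting empty lists for keys that have no match
        for right_rec in index.get(left_rec[key], []):
            joined.append({**left_rec, **right_rec})
    return joined


def sum_by(records: Iterable[dict], group_key: str, value_key: str) -> dict:
    """Group records by `group_key` and sum `value_key` within each group."""
    totals: dict = {}
    for record in records:
        group = record[group_key]
        totals[group] = totals.get(group, 0) + record[value_key]
    return totals


customers = [{"customer_id": 1, "region": "EU"}, {"customer_id": 2, "region": "US"}]
orders = [
    {"customer_id": 1, "amount": 30},
    {"customer_id": 1, "amount": 20},
    {"customer_id": 2, "amount": 5},
    {"customer_id": 3, "amount": 99},  # no matching customer, so the inner join drops it
]
joined = inner_join(orders, customers, "customer_id")
totals = sum_by(joined, "region", "amount")
```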

Sinks

Sink plugins write data to resources such as Cloud Storage, BigQuery, Spanner, relational databases, file systems, and mainframes. You can query the data that's written to the sink by using the Cloud Data Fusion web interface or the REST API.

Conditions and actions

Use condition and action plugins to schedule actions that take place during a workflow but don't directly manipulate the data in it. For example:

- Use the Database plugin to schedule a database command to run at the end of your pipeline.

- Use the File Move plugin to trigger an action that moves files within Cloud Storage.

Error handlers and alerts

When stages encounter null values, logical errors, or other problems, you can use an error handler plugin to catch the resulting errors. Place an error handler after a transform or analytics plugin to capture the errors that stage produces. You can then write the errors to a database for analysis.
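The pattern described above can be sketched in Python: a stage routes records with null values to an error output instead of failing, and the errors are written to a database table for later analysis. This is a conceptual model under stated assumptions. The functions, the `pipeline_errors` table, and the stage name are hypothetical, and an in-memory SQLite database stands in for whatever database your pipeline writes errors to.

```python
import json
import sqlite3
from collections.abc import Iterable


def validate(records: Iterable[dict], required: list[str]) -> tuple[list[dict], list[tuple[dict, str]]]:
    """Split records into valid output and (record, message) errors for null required fields."""
    good: list[dict] = []
    errors: list[tuple[dict, str]] = []
    for record in records:
        missing = [field for field in required if record.get(field) is None]
        if missing:
            errors.append((record, f"null value in {', '.join(missing)}"))
        else:
            good.append(record)
    return good, errors


def write_errors(conn: sqlite3.Connection, stage: str, errors: list[tuple[dict, str]]) -> None:
    """Store each error with the name of the stage that produced it (hypothetical schema)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS pipeline_errors (stage TEXT, record TEXT, message TEXT)"
    )
    conn.executemany(
        "INSERT INTO pipeline_errors VALUES (?, ?, ?)",
        [(stage, json.dumps(rec, sort_keys=True), msg) for rec, msg in errors],
    )
    conn.commit()


records = [{"id": 1, "email": "a@example.com"}, {"id": 2, "email": None}]
good, errors = validate(records, required=["id", "email"])

conn = sqlite3.connect(":memory:")
write_errors(conn, "validate_emails", errors)
stored = conn.execute("SELECT stage, message FROM pipeline_errors").fetchall()
```

Keeping valid and failed records on separate outputs lets the rest of the pipeline continue with clean data while the failures remain available for inspection.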

What's next

- Explore the plugins.

- Create a data pipeline with the plugins.