This tutorial describes how to migrate data from Aerospike to Bigtable. The tutorial explains the differences between Aerospike and Bigtable and how to transform your workload to run in Bigtable. It is for database practitioners who are looking for a database service on Google Cloud that is similar to Aerospike. This tutorial assumes that you are familiar with database schemas, data types, the fundamentals of NoSQL, and relational database systems. This tutorial relies on running predefined tasks to perform an example migration. After you finish the tutorial, you can modify the provided code and steps to match your environment.

Bigtable is a petabyte-scale, fully managed NoSQL database service for large analytical and operational workloads. You can use it as a storage engine for low-latency, petabyte-scale services that need high availability and durability. You can analyze data in Bigtable using Google Cloud data analytics services like Dataproc and BigQuery.

Bigtable is ideal for advertising technology (ad tech), financial technology (fintech), and Internet of Things (IoT) services that are implemented with NoSQL databases like Aerospike or Cassandra. If you are looking for a managed NoSQL service, consider Bigtable.

Architecture

The following reference architecture diagram shows common components that you can use to migrate data from Aerospike to Bigtable.

In the preceding diagram, the data migrates from an on-premises environment using Aerospike to Bigtable on Google Cloud by using two different methods. The first method migrates the data by using batch processing. It starts by moving the Aerospike backup data into a Cloud Storage bucket. When the backup data arrives in Cloud Storage, it triggers Cloud Run functions to start a batch extract, transform, and load (ETL) process using Dataflow. The Dataflow job converts the backup data into a Bigtable-compatible format and imports the data into the Bigtable instance.

The second method migrates the data by using streaming processing. In this method, you connect Aerospike to a message queue, such as Kafka, by using Aerospike Connect, and transfer messages in real time to Pub/Sub on Google Cloud. When a message arrives in a Pub/Sub topic, the Dataflow streaming job processes it in real time to convert and import the data into the Bigtable instance.

With batch processing, you can efficiently migrate large chunks of data. However, it often requires significant cutover downtime while you migrate the data and update your service to use the new database. If you want to minimize cutover downtime, consider using streaming processing after the initial batch migration: it migrates changes gradually and keeps the target consistent with the backup data until you complete a graceful cutover. In this document, you migrate from Aerospike by using batch processing with an example application, including the cutover process.

Comparing Aerospike and Bigtable

Before you start your data migration, it's important to understand the data model differences between Aerospike and Bigtable.

The Bigtable data model is a distributed, multidimensional, sorted key-value map with rows and column families. By contrast, Aerospike uses a row-oriented data model, where every record is uniquely identified by a key. The difference between the models is how they group the attributes of an entity. Bigtable groups related attributes into a column family, while Aerospike groups attributes in a set. Aerospike supports more data types than Bigtable. For example, Aerospike supports integers, strings, lists, and maps. Bigtable treats all data as raw byte strings for most purposes.
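To make the difference concrete, the following minimal sketch maps one Aerospike-style record (named bins with typed values) to a Bigtable-style row (a row key plus byte-string cells grouped under a column family). The function name `to_bigtable_row` and the choice to serialize non-string values as JSON text are assumptions made for illustration; they are not the conversion that the tutorial's Dataflow job performs. The `info` family name is taken from the lookup output shown later in this tutorial.

```python
import json


def to_bigtable_row(record_key, bins, family="info"):
    """Map one Aerospike-style record to a Bigtable-style row.

    Returns (row_key, {family: {qualifier: value}}) where the row key,
    qualifiers, and values are all byte strings.
    """
    cells = {}
    for name, value in bins.items():
        if isinstance(value, bytes):
            encoded = value
        elif isinstance(value, str):
            encoded = value.encode("utf-8")
        else:
            # Assumption: integers, lists, and maps are stored as JSON text.
            encoded = json.dumps(value, sort_keys=True).encode("utf-8")
        cells[name.encode("utf-8")] = encoded
    return record_key.encode("utf-8"), {family: cells}


# Hypothetical record: the bin names and values are illustrative only.
row_key, row = to_bigtable_row(
    "10000",
    {"title": "Aerospike-example", "tags": ["a", "b"], "pages": 320},
)
print(row_key, row)
```

Because every value ends up as bytes, the type information that Aerospike tracks per bin has to be recovered by the app when it reads the row back.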

A schema in Aerospike is flexible, and dynamic values in the same bins can have different types. Apps that use either Aerospike or Bigtable have similar flexibility and data administration responsibility: apps handle data types and integrity constraints, instead of relying on the database engine.
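As a rough illustration of that responsibility, the sketch below shows an app-side decoder that turns raw byte-string cells back into typed values and enforces a simple integrity rule. The `REQUIRED_FIELDS` constraint and the `decode_book` helper are hypothetical; the field names mirror the book attributes used later in this tutorial.

```python
from datetime import date

# Hypothetical integrity constraint enforced by the app, not the database.
REQUIRED_FIELDS = ("id", "title", "author")


def decode_book(cells):
    """Decode byte-string cells into a typed book dictionary."""
    missing = [field for field in REQUIRED_FIELDS if field not in cells]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    book = {name: raw.decode("utf-8") for name, raw in cells.items()}
    if "publishedDate" in book:
        # The app, not the database, decides this value is an ISO date.
        book["publishedDate"] = date.fromisoformat(book["publishedDate"])
    return book


book = decode_book({
    "id": b"10000",
    "title": b"Aerospike-example",
    "author": b"Aerospike-example",
    "publishedDate": b"2000-01-01",
})
print(book["publishedDate"].year)
```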

Bookshelf migration

The Bookshelf app is a web app where users can store information about books and see the list of all the books currently stored in the database. The app uses a book identifier (ID) to search for book information. The app or the database automatically generates these IDs. When a user selects the image of a book, the app's backend loads the details about that book from the database.

In this tutorial, you migrate data from the bookshelf app using Aerospike to Bigtable. After the migration, you can access the books from Bigtable.

The following diagram shows how the data is migrated from Aerospike to Bigtable:

In the preceding diagram, data is migrated in the following way:

- You back up data about books from the current Aerospike database and transfer the data to a Cloud Storage bucket.

- When you upload the backup data to the bucket, it automatically triggers the as2bt Dataflow job through Cloud Storage update notifications by using a Cloud Run function.

- After the data migration is completed by the as2bt Dataflow job, you change the database backend from Aerospike to Bigtable so that the bookshelf app loads book data from the Bigtable cluster.
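The trigger step in the list above can be sketched as a small decision function. This is only an illustration of the idea, not the code deployed by the tutorial: the helper name `backup_uri_from_event` is hypothetical, the event is assumed to be a dictionary that carries the `bucket` and `name` of the uploaded object, and the actual call that launches the Dataflow job is intentionally left out.

```python
def backup_uri_from_event(event, expected_name="bookshelf-backup.json"):
    """Return the gs:// URI to migrate, or None if the upload is unrelated.

    `event` is assumed to be a dict describing the uploaded object with
    "bucket" and "name" keys.
    """
    bucket = event.get("bucket")
    name = event.get("name")
    if not bucket or name != expected_name:
        # Ignore uploads that are not the backup file.
        return None
    return f"gs://{bucket}/{name}"


# Example with the bucket name shown later in this tutorial's sample output.
uri = backup_uri_from_event(
    {"bucket": "bookshelf-616f60d65a3abe62", "name": "bookshelf-backup.json"}
)
print(uri)
```

Filtering on the object name keeps unrelated uploads to the same bucket from starting a migration job.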

Objectives

- Deploy a tutorial environment for migration from Aerospike to Bigtable.

- Create an example app backup dataset from Aerospike in Cloud Storage.

- Use Dataflow to transform the data schema and migrate the data to Bigtable.

- Change the example app configuration to use Bigtable as a backend.

- Verify that the bookshelf app is running properly with Bigtable.

Costs

In this document, you use the following billable components of Google Cloud:

To generate a cost estimate based on your projected usage, use the pricing calculator.

Bigtable charges are based on the number of node hours, the amount of data stored, and the amount of network bandwidth that you use. To estimate the cost of the Bigtable cluster and other resources, you can use the pricing calculator. The example pricing calculator setup uses three Bigtable nodes instead of a single node, so the total estimated cost in that example is more than the actual total cost of this tutorial.
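The three charge components add up as shown in the following sketch. The function and all rates are placeholders chosen so the arithmetic is easy to follow; they are not real prices, and the storage rate is assumed to cover the whole period being estimated. Use the pricing calculator for actual figures.

```python
def estimate_bigtable_cost(nodes, hours, node_hour_rate,
                           stored_gib, storage_rate,
                           egress_gib, egress_rate):
    """Sum the node-hour, storage, and network components of the charge."""
    compute = nodes * hours * node_hour_rate
    storage = stored_gib * storage_rate
    network = egress_gib * egress_rate
    return compute + storage + network


# Placeholder rates for illustration only; they are NOT real prices.
RATES = dict(node_hour_rate=1.0, storage_rate=0.25, egress_rate=0.5)

single = estimate_bigtable_cost(nodes=1, hours=2, stored_gib=4, egress_gib=2, **RATES)
triple = estimate_bigtable_cost(nodes=3, hours=2, stored_gib=4, egress_gib=2, **RATES)
print(single, triple)
```

Only the node-hour component scales with the node count, which is why a three-node estimate is higher than the cost of a single-node setup with the same data.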

When you finish the tasks that are described in this document, you can avoid continued billing by deleting the resources that you created. For more information, see Clean up.

Before you begin

- In the Google Cloud console, on the project selector page, select or create a Google Cloud project.

  Roles required to select or create a project

  - Select a project: Selecting a project doesn't require a specific IAM role; you can select any project that you've been granted a role on.

  - Create a project: To create a project, you need the Project Creator role (roles/resourcemanager.projectCreator), which contains the resourcemanager.projects.create permission. Learn how to grant roles.

- Verify that billing is enabled for your Google Cloud project.

- Enable the Cloud Resource Manager API.

  Roles required to enable APIs

  To enable APIs, you need the Service Usage Admin IAM role (roles/serviceusage.serviceUsageAdmin), which contains the serviceusage.services.enable permission. Learn how to grant roles.

  Terraform uses the Cloud Resource Manager API to enable the APIs that are required for this tutorial.

- In the Google Cloud console, activate Cloud Shell.

Preparing your environment

To prepare the environment for the Aerospike to Bigtable migration, you run the following tools directly from Cloud Shell:

- The Google Cloud CLI

- The Bigtable command-line tool, cbt

- Terraform

- Apache Maven

These tools are already available in Cloud Shell, so you don't need to install them.

Configure your project

In Cloud Shell, inspect the project ID that Cloud Shell automatically configures. Your command prompt is updated to reflect your currently active project and displays in this format:

USERNAME@cloudshell:~ (PROJECT_ID)$

If the project ID isn't configured correctly, you can configure it manually:

gcloud config set project PROJECT_ID

Replace PROJECT_ID with your Google Cloud project ID.

Configure us-east1 as the region and us-east1-b as the zone:

gcloud config set compute/region us-east1
gcloud config set compute/zone us-east1-b

For more information about regions and zones, see Geography and regions.

Deploy the tutorial environment

In Cloud Shell, clone the code repository:

git clone https://github.com/fakeskimo/as2bt.git/

In Cloud Shell, initialize the Terraform working directory:

cd "$HOME"/as2bt/bookshelf/terraform
terraform init

Configure Terraform environment variables for deployment:

export TF_VAR_gce_vm_zone="$(gcloud config get-value compute/zone)"
export TF_VAR_gcs_bucket_location="$(gcloud config get-value compute/region)"

Review the Terraform execution plan:

terraform plan

The output is similar to the following:

Terraform will perform the following actions:

  # google_bigtable_instance.bookshelf_bigtable will be created
  + resource "google_bigtable_instance" "bookshelf_bigtable" {
      + display_name  = (known after apply)
      + id            = (known after apply)
      + instance_type = "DEVELOPMENT"
      + name          = "bookshelf-bigtable"
      + project       = (known after apply)

      + cluster {
          + cluster_id   = "bookshelf-bigtable-cluster"
          + storage_type = "SSD"
          + zone         = "us-east1-b"
        }
    }

(Optional) To visualize the resources and dependencies that Terraform deploys, draw a graph:

terraform graph | dot -Tsvg > graph.svg

Provision the tutorial environment:

terraform apply

Verifying the tutorial environment and bookshelf app

After you provision the environment and before you start the data migration job, you need to verify that all the resources have been deployed and configured. This section explains how to verify the provisioning process and helps you understand what components are configured in the environment.

Verify the tutorial environment

In Cloud Shell, verify the bookshelf-aerospike Compute Engine instance:

gcloud compute instances list

The output shows that the instance is deployed in the us-east1-b zone:

NAME                 ZONE        MACHINE_TYPE   PREEMPTIBLE  INTERNAL_IP  EXTERNAL_IP  STATUS
bookshelf-aerospike  us-east1-b  n1-standard-2               10.142.0.4   34.74.72.3   RUNNING

Verify the bookshelf-bigtable Bigtable instance:

gcloud bigtable instances list

The output is similar to the following:

NAME                DISPLAY_NAME        STATE
bookshelf-bigtable  bookshelf-bigtable  READY

This Bigtable instance is used as the migration target for later steps.

Verify that the bookshelf Cloud Storage bucket, which the Dataflow pipeline job uses, exists:

gcloud storage ls gs://bookshelf-* --buckets

Because Cloud Storage bucket names need to be globally unique, the name of the bucket is created with a random suffix. The output is similar to the following:

gs://bookshelf-616f60d65a3abe62/

Add a book to the Bookshelf app

In Cloud Shell, get the external IP address of the bookshelf-aerospike instance:

gcloud compute instances list --filter="name:bookshelf-aerospike" \
    --format="value(networkInterfaces[0].accessConfigs.natIP)"

Make a note of the IP address because it's needed in the next step.

To open the Bookshelf app, in a web browser, go to http://IP_ADDRESS:8080.

Replace IP_ADDRESS with the external IP address that you copied from the previous step.

To create a new book, click Add book.

In the Add book window, complete the following fields, and then click Save:

- In the Title field, enter Aerospike-example.

- In the Author field, enter Aerospike-example.

- In the Date Published field, enter today's date.

- In the Description field, enter Aerospike-example.

This book is used to verify that the Bookshelf app is using Aerospike as the book storage.

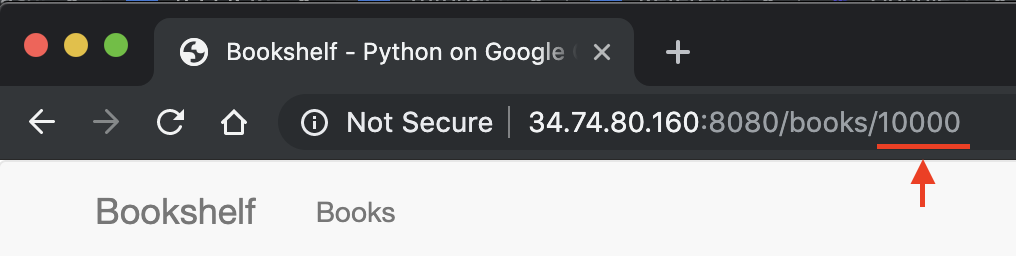

In the Bookshelf app URL, make a note of the book ID. For example, if the URL is 34.74.80.160:8080/books/10000, the book ID is 10000.

In Cloud Shell, use SSH to connect to the bookshelf-aerospike instance:

gcloud compute ssh bookshelf-aerospike

From the bookshelf-aerospike instance session, verify that a new book was created with the book ID that you previously noted:

aql -c 'select * from bookshelf.books where id = "BOOK_ID"'

Replace BOOK_ID with the book ID that you noted.

The output is similar to the following:

+----------------------+----------------------+---------------+----------------------+----------+---------+
| title                | author               | publishedDate | description          | imageUrl | id      |
+----------------------+----------------------+---------------+----------------------+----------+---------+
| " Aerospike-example" | " Aerospike-example" | "2000-01-01"  | " Aerospike-example" | ""       | "10000" |
+----------------------+----------------------+---------------+----------------------+----------+---------+
1 row in set (0.001 secs)

If your book ID isn't listed, repeat the steps to add a new book.

Transferring backup data from Aerospike to Cloud Storage

In Cloud Shell, from the bookshelf-aerospike instance session, create an Aerospike backup file:

aql -c "select * from bookshelf.books" --timeout=-1 --outputmode=json \
    | tail -n +2 | jq -c '.[0] | .[]' \
    | gcloud storage cp - $(gcloud storage ls gs://bookshelf-* --buckets)bookshelf-backup.json

This command processes the data and creates a backup file through the following process:

- Selects book information from Aerospike and prints it in pretty-printed JSON format.

- Removes the leading header output by using tail -n +2, which starts printing at the second line, and converts the data into newline-delimited JSON (ndjson) format by using jq, a command-line JSON processor.

- Uses the gcloud CLI to upload the data into the Cloud Storage bucket.
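The middle of that pipeline (the tail and jq stages) can be sketched in Python as follows. The sample input is a hypothetical stand-in, shaped only to match what the jq filter expects: one header line followed by a top-level array whose first element is the array of records. The real aql output might differ in detail.

```python
import json


def to_ndjson(aql_output):
    """Convert aql-style JSON output to newline-delimited JSON."""
    # Equivalent of `tail -n +2`: drop the first line of the output.
    _, _, body = aql_output.partition("\n")
    # Equivalent of `jq -c '.[0] | .[]'`: take the first element of the
    # top-level array and emit each of its items on one compact line.
    records = json.loads(body)[0]
    return "\n".join(
        json.dumps(record, separators=(",", ":"), ensure_ascii=False)
        for record in records
    )


# Hypothetical sample input, not captured from a real aql session.
sample = 'header line\n[[{"id": "1", "title": "a"}, {"id": "2", "title": "b"}]]'
print(to_ndjson(sample))
```

Writing one compact JSON object per line lets a downstream pipeline split the backup file by line and parse each record independently.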

Verify that the Aerospike backup file is uploaded and exists in the Cloud Storage bucket:

gcloud storage ls gs://bookshelf-*/bookshelf-*

The output is similar to the following:

gs://bookshelf-616f60d65a3abe62/bookshelf-backup.json

(Optional) Review the backup file contents from the Cloud Storage bucket:

gcloud storage cat -r 0-1024 gs://bookshelf-*/bookshelf-backup.json | head -n 2

The output is similar to the following:

{"title":"book_2507","author":"write_2507","publishedDate":"1970-01-01","imageUrl":"https://storage.googleapis.com/aerospike2bt-bookshelf/The_Home_Edit-2019-06-24-044906.jpg","description":"test_2507","createdBy":"write_2507","createdById":"2507_anonymous","id":"2507"}
{"title":"book_3867","author":"write_3867","publishedDate":"1970-01-01","imageUrl":"https://storage.googleapis.com/aerospike2bt-bookshelf/The_Home_Edit-2019-06-24-044906.jpg","description":"test_3867","createdBy":"write_3867","createdById":"3867_anonymous","id":"3867"}

Exit the SSH session and return to Cloud Shell:

exit
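Before you migrate the backup, you might want to sanity-check a local copy of the file. The following sketch is an optional, hypothetical check that is not part of the tutorial's repository: it assumes one JSON object per line and that every record carries a unique, non-empty id field, as in the sample backup lines shown earlier.

```python
import json


def check_backup(lines):
    """Return the number of records, or raise ValueError on a bad line."""
    ids = set()
    for number, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # Tolerate blank lines, such as a trailing newline.
        record = json.loads(line)
        book_id = record.get("id")
        if not book_id:
            raise ValueError(f"line {number}: missing id")
        if book_id in ids:
            raise ValueError(f"line {number}: duplicate id {book_id}")
        ids.add(book_id)
    return len(ids)


# Shortened versions of the sample backup lines.
sample = [
    '{"title":"book_2507","author":"write_2507","id":"2507"}',
    '{"title":"book_3867","author":"write_3867","id":"3867"}',
]
print(check_backup(sample))
```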

Migrating the backup data to Bigtable using Dataflow

You can now migrate the backup data from Cloud Storage to a Bigtable instance. This section explains how to use a Dataflow pipeline to convert the data into a format that is compatible with a Bigtable schema and to import it.

Configure the Dataflow migration job

In Cloud Shell, go to the dataflow directory in the example code repository:

cd "$HOME"/as2bt/dataflow/

Configure environment variables for a Dataflow job:

export BOOKSHELF_BACKUP_FILE="$(gcloud storage ls gs://bookshelf*/bookshelf-backup.json)"
export BOOKSHELF_DATAFLOW_ZONE="$(gcloud config get-value compute/zone)"

Check that the environment variables are configured correctly:

env | grep BOOKSHELF

If the environment variables are correctly configured, the output is similar to the following:

BOOKSHELF_BACKUP_FILE=gs://bookshelf-616f60d65a3abe62/bookshelf-backup.json
BOOKSHELF_DATAFLOW_ZONE=us-east1-b

Run the Dataflow job

In Cloud Shell, migrate data from Cloud Storage to the Bigtable instance:

./run_oncloud_json.sh

To monitor the backup data migration job, in the Google Cloud console, go to the Jobs page.

Wait until the job successfully completes. When the job successfully completes, the output in Cloud Shell is similar to the following:

Dataflow SDK version: 2.13.0
Submitted job: 2019-12-16_23_24_06-2124083021829446026
[INFO] ------------------------------------------------------------------------
[INFO] BUILD SUCCESS
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 08:20 min
[INFO] Finished at: 2019-12-17T16:28:08+09:00
[INFO] ------------------------------------------------------------------------

Check the migration job results

In Cloud Shell, verify that the backup data was transferred correctly into Bigtable:

cbt -instance bookshelf-bigtable lookup books 00001

The output is similar to the following:

----------------------------------------
00001
  info:author                              @ 2019/12/17-16:26:04.434000
    "Aerospike-example"
  info:description                         @ 2019/12/17-16:26:04.434000
    "Aerospike-example"
  info:id                                  @ 2019/12/17-16:26:04.434000
    "00001"
  info:imageUrl                            @ 2019/12/17-16:26:04.434000
    ""
  info:publishedDate                       @ 2019/12/17-16:26:04.434000
    "2019-10-01"
  info:title                               @ 2019/12/17-16:26:04.434000
    "Aerospike-example"
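If you want to compare more than one row by eye, a check like the following sketch can help. It assumes that you have already read a row into a dictionary keyed by family:qualifier strings (for example, by copying values from the lookup output); the `diff_record` helper and that dictionary shape are hypothetical, and the sketch doesn't call Bigtable itself.

```python
def diff_record(source, cells, family="info"):
    """List the family:qualifier columns whose values differ from the source.

    `source` is one backup record (bin name -> value) and `cells` maps
    "family:qualifier" strings to the values read from Bigtable.
    """
    mismatches = []
    for name, value in source.items():
        column = f"{family}:{name}"
        if cells.get(column) != str(value):
            mismatches.append(column)
    return sorted(mismatches)


source = {"id": "00001", "title": "Aerospike-example", "imageUrl": ""}
cells = {
    "info:id": "00001",
    "info:title": "Aerospike-example",
    "info:imageUrl": "",
}
print(diff_record(source, cells))
```

An empty result means that every bin in the backup record has a matching cell in the row.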

Changing the bookshelf database from Aerospike to Bigtable

After you successfully migrate data from Aerospike to Bigtable, you can change the Bookshelf app configuration to use Bigtable for storage. When you set up this configuration, new books are saved into the Bigtable instance.

Change the Bookshelf app configuration

In Cloud Shell, use SSH to connect to the bookshelf-aerospike instance, which hosts the Bookshelf app:

gcloud compute ssh bookshelf-aerospike

Verify that the current DATA_BACKEND configuration is aerospike:

grep DATA_BACKEND /opt/app/bookshelf/config.py

The output is the following:

DATA_BACKEND = 'aerospike'

Change the DATA_BACKEND configuration from aerospike to bigtable:

sudo sed -i "s/DATA_BACKEND =.*/DATA_BACKEND = 'bigtable'/g" /opt/app/bookshelf/config.py

Verify that the DATA_BACKEND configuration is changed to bigtable:

grep DATA_BACKEND /opt/app/bookshelf/config.py

The output is the following:

DATA_BACKEND = 'bigtable'

Restart the Bookshelf app so that it uses the new bigtable backend configuration:

sudo supervisorctl restart bookshelf

Verify that the Bookshelf app has restarted and is running correctly:

sudo supervisorctl status bookshelf

The output is similar to the following:

bookshelf RUNNING pid 18318, uptime 0:01:00
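The sed substitution in the steps above replaces everything from DATA_BACKEND = to the end of that line. The following sketch shows the same substitution in Python on an in-memory string, which can be handy for testing the pattern before you touch the real file. The `set_data_backend` helper and the sample config text are illustrative assumptions; only the DATA_BACKEND line comes from this tutorial.

```python
import re


def set_data_backend(config_text, backend):
    """Rewrite the DATA_BACKEND assignment, leaving other lines untouched."""
    # `.` doesn't match newlines, so `.*` stops at the end of the line,
    # which mirrors how sed applies the expression line by line.
    return re.sub(
        r"DATA_BACKEND =.*",
        lambda _match: f"DATA_BACKEND = {backend!r}",
        config_text,
    )


# Hypothetical config contents; only the DATA_BACKEND line is from the tutorial.
before = "PROJECT = 'x'\nDATA_BACKEND = 'aerospike'\nPORT = 8080\n"
print(set_data_backend(before, "bigtable"))
```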

Verify the bookshelf app is using the Bigtable backend

In a browser, go to http://IP_ADDRESS:8080.

Add a new book named Bigtable-example.

To verify that the Bigtable-example book was created in a Bigtable instance from the Bookshelf app, copy the book ID from the address bar in the browser.

In Cloud Shell, look up the Bigtable-example book data from a Bigtable instance:

cbt -instance bookshelf-bigtable lookup books 7406950188

The output is similar to the following:

----------------------------------------
7406950188
  info:author                              @ 2019/12/17-17:28:25.592000
    "Bigtable-example"
  info:description                         @ 2019/12/17-17:28:25.592000
    "Bigtable-example"
  info:id                                  @ 2019/12/17-17:28:25.592000
    "7406950188"
  info:image_url                           @ 2019/12/17-17:28:25.592000
    ""
  info:published_date                      @ 2019/12/17-17:28:25.592000
    "2019-10-01"
  info:title                               @ 2019/12/17-17:28:25.592000
    "Bigtable-example"

You have successfully performed a data migration from Aerospike to Bigtable and changed the bookshelf configuration to connect to a Bigtable backend.

Clean up

The easiest way to eliminate billing is to delete the Google Cloud project that you created for the tutorial. Alternatively, you can delete the individual resources.

Delete the project

- In the Google Cloud console, go to the Manage resources page.

- In the project list, select the project that you want to delete, and then click Delete.

- In the dialog, type the project ID, and then click Shut down to delete the project.

What's next

- Learn how to design your Bigtable schema.

- Read about how to start your Migration to Google Cloud.

- Understand which strategies you have for transferring big datasets.

- Explore reference architectures, diagrams, and best practices about Google Cloud. Take a look at our Cloud Architecture Center.