Custom model training and extraction lets you build your own model, designed specifically for your documents, without using generative AI. This option is ideal if you don't want to use generative AI and want to control every aspect of the trained model.

Dataset configuration

A document dataset is required to train, retrain, or evaluate a processor version. Document AI processors learn from examples, just like humans do, so the dataset largely determines how stable the processor's performance is.

Training dataset

To improve the model and its accuracy, train it on a dataset built from your own documents. The training dataset consists of documents with ground truth (verified, correct labels). You need at least three documents to train a new model.

Test dataset

The test dataset is what the model uses to generate an F1 score (a measure of accuracy). It also consists of documents with ground truth. To see how often the model is right, the model's predictions (the fields it extracts) are compared against the ground truth, which holds the correct answers. The test dataset must contain at least three documents.

Before you begin

If you haven't already, enable billing and the Document AI API.

Build and evaluate a custom model

Start by creating a custom processor, then evaluate it.

Create a processor and define the fields you want to extract. This step matters because the field definitions affect extraction quality.

Set the dataset location: select the default folder option (Google-managed). This may happen automatically shortly after you create the processor.

Go to the Build tab, then select Import Documents with auto-labeling enabled (see Auto-labeling with the foundation model). You need at least 10 documents in the training set and 10 documents in the test set to train a custom model.
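A quick way to confirm the split requirement before training is to count documents per set. The sketch below is a minimal Python check, assuming you keep your own record of which set each document was imported into; the `split_shortfalls` helper, the `"train"`/`"test"` labels, and the file names are hypothetical, and the 10-per-set minimum comes from the step above.

```python
from collections import Counter
from typing import Dict

# Minimum documents per set, taken from the step above (10 training, 10 test).
MIN_DOCS_PER_SPLIT: Dict[str, int] = {"train": 10, "test": 10}


def split_shortfalls(assignments: Dict[str, str],
                     minimums: Dict[str, int] = MIN_DOCS_PER_SPLIT) -> Dict[str, int]:
    """Return how many more documents each set needs; an empty dict means ready.

    `assignments` maps a document name to the set it was imported into
    ("train", "test", or any other value for unassigned documents).
    """
    counts = Counter(assignments.values())
    return {split: need - counts[split]
            for split, need in minimums.items()
            if counts[split] < need}


# Hypothetical example: 12 training documents but only 4 test documents.
docs = {f"invoice_{i:02d}.pdf": "train" for i in range(12)}
docs.update({f"invoice_{i:02d}.pdf": "test" for i in range(12, 16)})
print(split_shortfalls(docs))  # {'test': 6}
```

Keeping the minimums in a parameter makes it easy to apply a different threshold, such as the three-document minimum mentioned for each dataset earlier on this page.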

Train the model:

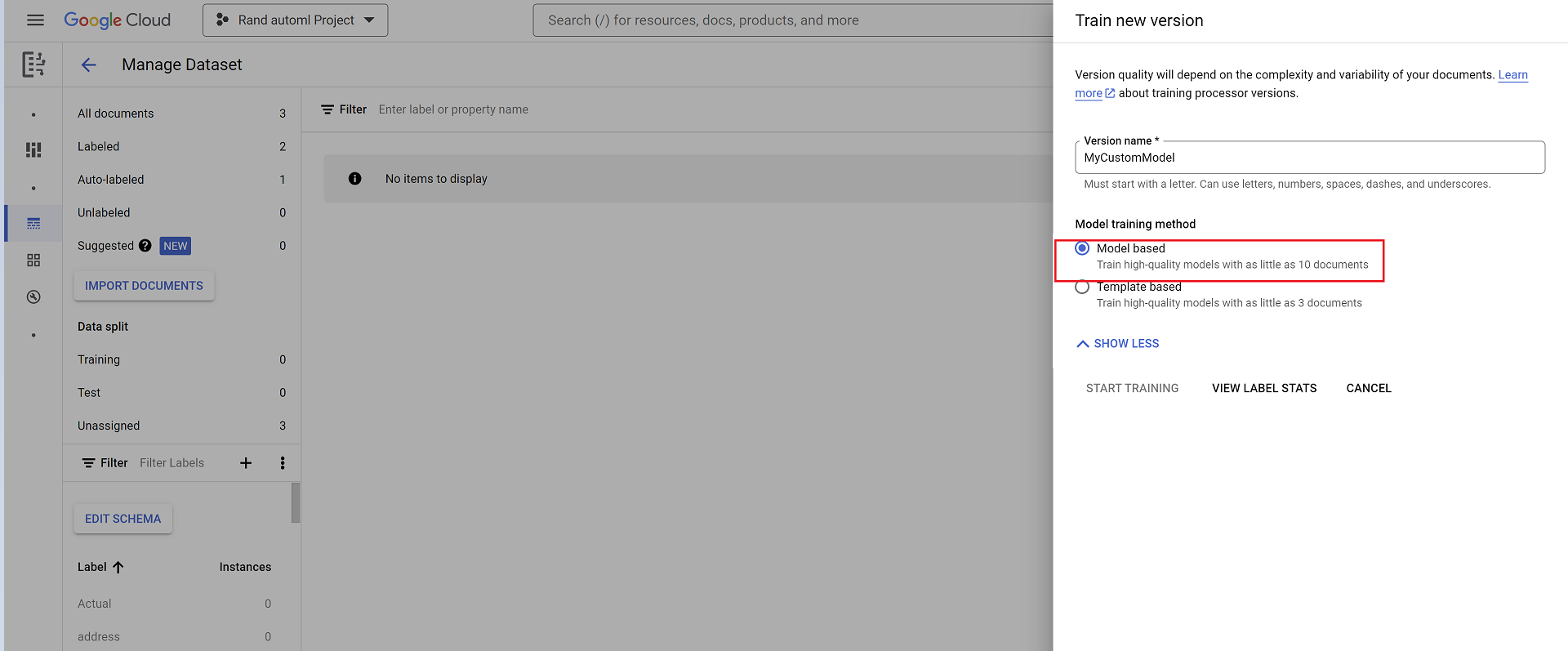

- Select Train new version and give the processor version a name.

- Open Show advanced options and select the Model based option.

Evaluate:

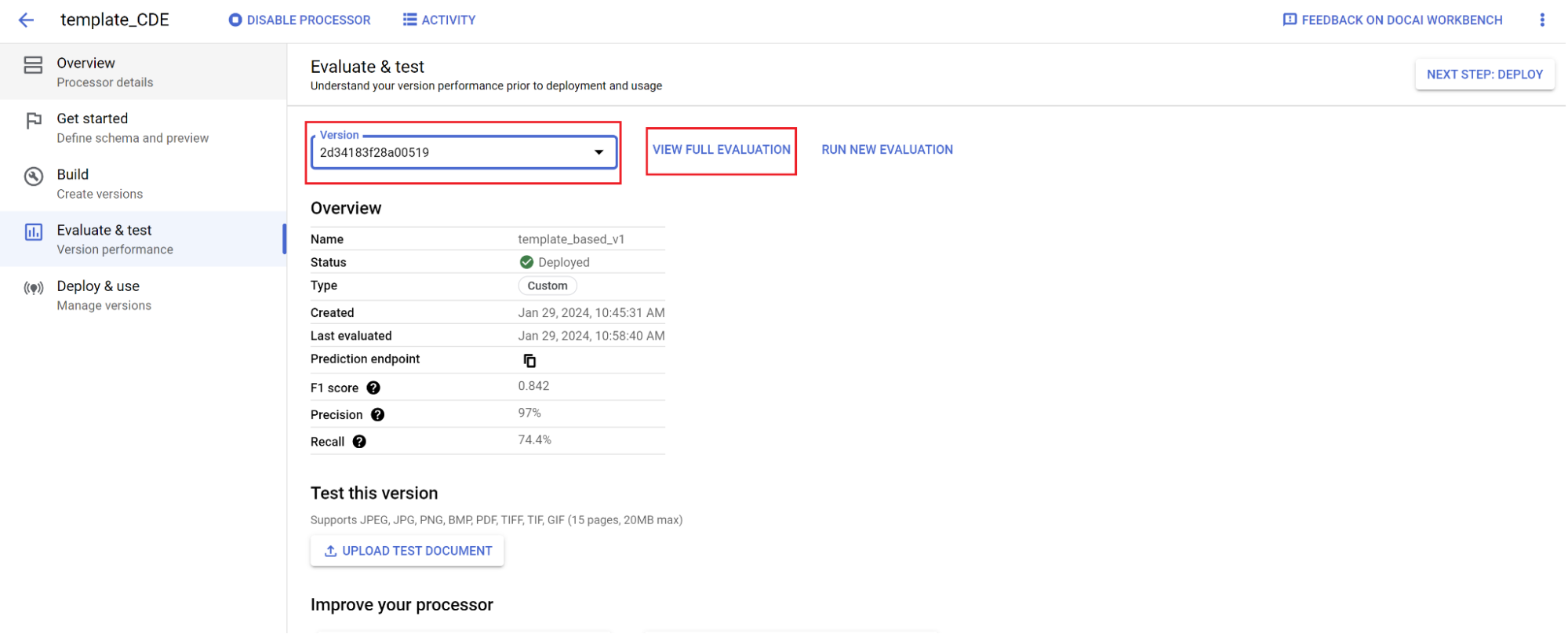

- Go to Evaluate & test, select the version you just trained, then select View full evaluation.

- You now see metrics such as F1, precision, and recall for the document as a whole and for each field.

- Decide whether the performance meets your production goals. If it doesn't, revisit the training and test sets, typically by adding documents that are not parsed well to them.
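To make these metrics concrete, the following Python sketch computes precision, recall, and F1 from exact-match comparisons between ground truth and predictions, both per field and pooled across all fields (micro-averaged), and lists the fields that miss a target. It is an illustration under stated assumptions, not Document AI's internal evaluation: it assumes a wrong value counts as both a false positive and a false negative, and the field names and the 0.9 target are hypothetical.

```python
from typing import Dict, List, Tuple

# One test document = (ground-truth fields, predicted fields), each mapping field name -> value.
Doc = Tuple[Dict[str, str], Dict[str, str]]


def count_outcomes(test_set: List[Doc]) -> Dict[str, List[int]]:
    """Tally [true positives, false positives, false negatives] per field using exact match.

    Assumption: a wrong value counts as both a false positive and a false negative.
    """
    counts: Dict[str, List[int]] = {}
    for ground_truth, predicted in test_set:
        for field in set(ground_truth) | set(predicted):
            tally = counts.setdefault(field, [0, 0, 0])
            expected, actual = ground_truth.get(field), predicted.get(field)
            if expected is not None and actual == expected:
                tally[0] += 1
            else:
                if actual is not None:
                    tally[1] += 1  # model emitted a value that is wrong or unexpected
                if expected is not None:
                    tally[2] += 1  # model missed the correct value
    return counts


def scores(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (precision, recall, F1); each is 0.0 when its denominator is zero."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def fields_below_target(test_set: List[Doc], target_f1: float) -> List[str]:
    """List fields whose F1 falls short of a production target that you choose."""
    counts = count_outcomes(test_set)
    return sorted(field for field, c in counts.items() if scores(*c)[2] < target_f1)


def overall_scores(test_set: List[Doc]) -> Tuple[float, float, float]:
    """Micro-average: pool the counts of all fields before computing the scores."""
    per_field = count_outcomes(test_set).values()
    totals = [sum(c[i] for c in per_field) for i in range(3)]
    return scores(*totals)


test_set = [
    ({"invoice_id": "A1", "total": "10.00"}, {"invoice_id": "A1", "total": "10.00"}),
    ({"invoice_id": "A2", "total": "25.50", "due_date": "2024-05-01"},
     {"invoice_id": "A2", "total": "2550"}),
]
print(fields_below_target(test_set, target_f1=0.9))  # ['due_date', 'total']
print("P=%.2f R=%.2f F1=%.2f" % overall_scores(test_set))  # P=0.75 R=0.60 F1=0.67
```

Fields returned by `fields_below_target` are natural candidates for additional training and test examples.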

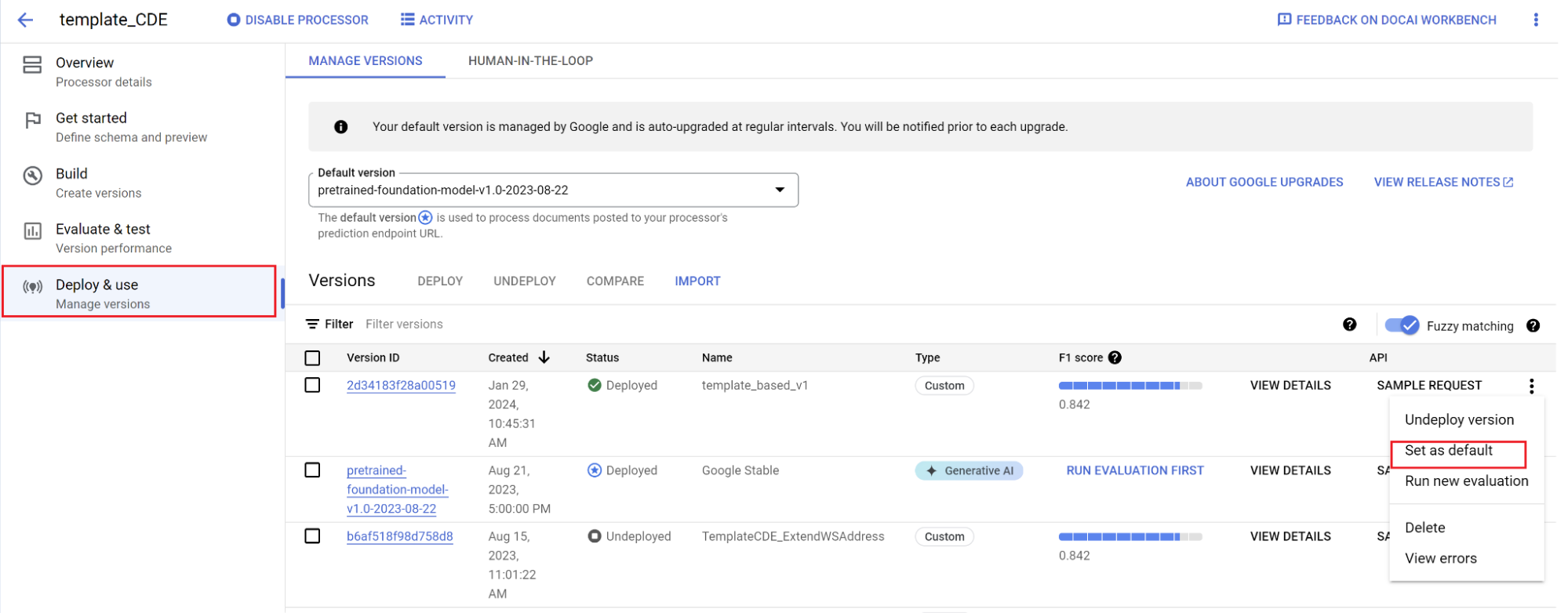

Set the new version as the default.

- Go to Manage versions.

- Open the options menu for the new version, then select Set as default.

Your model is now deployed, and documents sent to this processor now use your custom version. Evaluate the model's performance to check whether it needs further training.

Evaluation reference

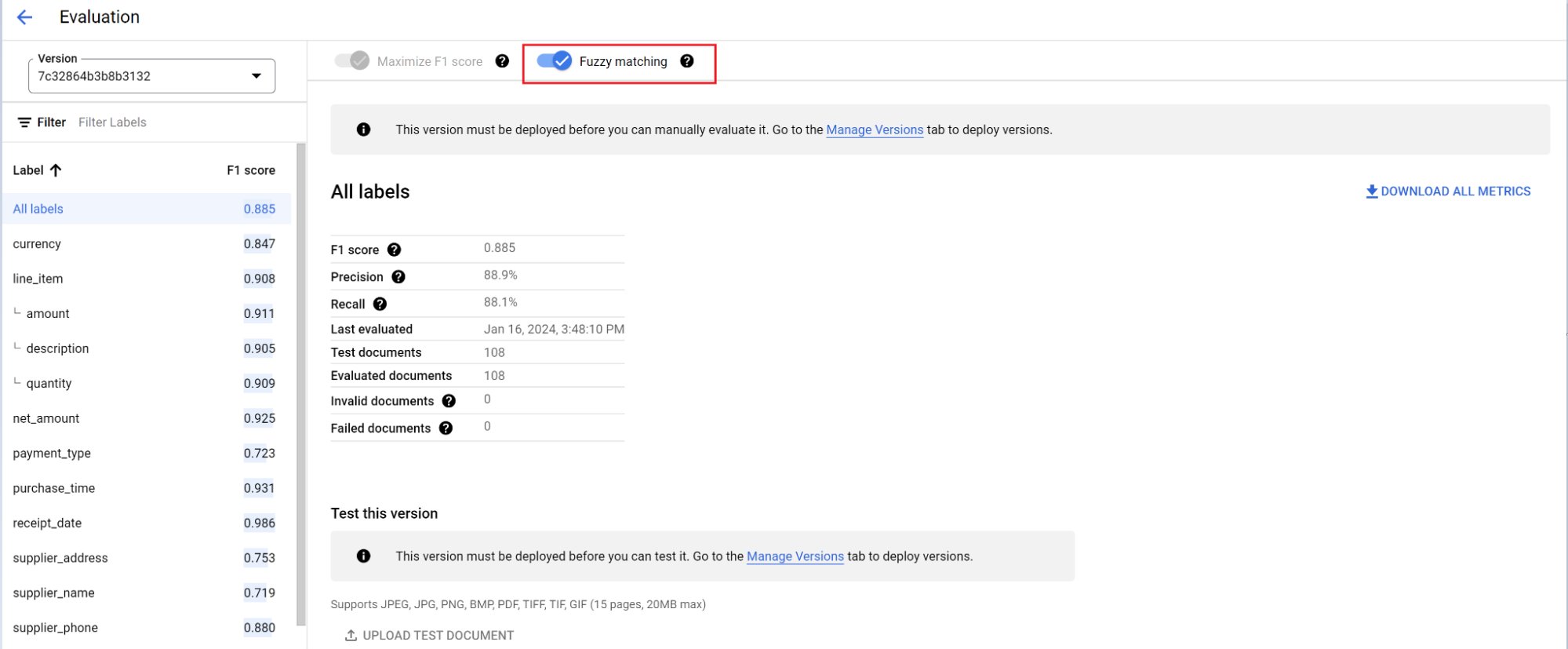

The evaluation engine can perform either exact matching or fuzzy matching. With exact matching, an extracted value must be identical to the ground truth; otherwise it counts as a mismatch.

With fuzzy matching, extractions that differ only slightly, for example in capitalization, still count as a match. You can change this setting on the Evaluation screen.
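The difference between the two modes can be sketched as a comparison function. This Python example assumes that "slight differences" means letter case and extra whitespace; Document AI's actual fuzzy-matching rules may cover more or fewer cases, and `normalize` and `values_match` are hypothetical helpers.

```python
import re


def normalize(value: str) -> str:
    """Assumed fuzzy normalization: collapse whitespace, trim, and ignore letter case."""
    return re.sub(r"\s+", " ", value).strip().casefold()


def values_match(extracted: str, ground_truth: str, fuzzy: bool = False) -> bool:
    """Exact match compares strings as-is; fuzzy match compares normalized strings."""
    if fuzzy:
        return normalize(extracted) == normalize(ground_truth)
    return extracted == ground_truth


print(values_match("ACME Corp", "Acme Corp"))              # False (exact)
print(values_match("ACME Corp", "Acme Corp", fuzzy=True))  # True (fuzzy)
```

Because fuzzy matching accepts more extractions as correct, the same model typically scores equal or higher under fuzzy matching than under exact matching.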

Auto-labeling with the foundation model

The foundation model can accurately extract fields from many document types, but you can also provide additional training data to improve the model's accuracy on specific document structures.

Document AI uses the label names you define and your previous annotations to label documents at scale with auto-labeling.

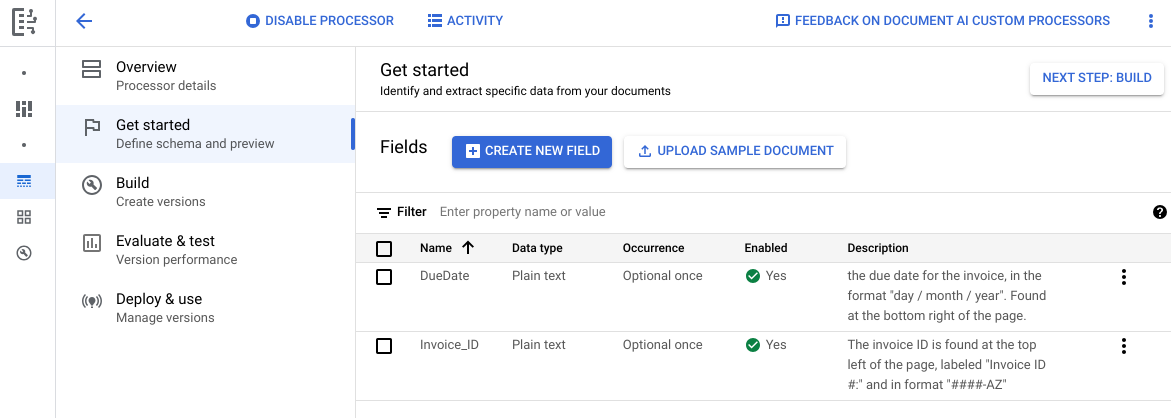

- After you create a custom processor, go to the Get started tab.

- Select Create new field.

- Give the field a descriptive name and fill in the description. The property description lets you provide additional context, insights, and prior knowledge for each entity to improve extraction accuracy and performance.

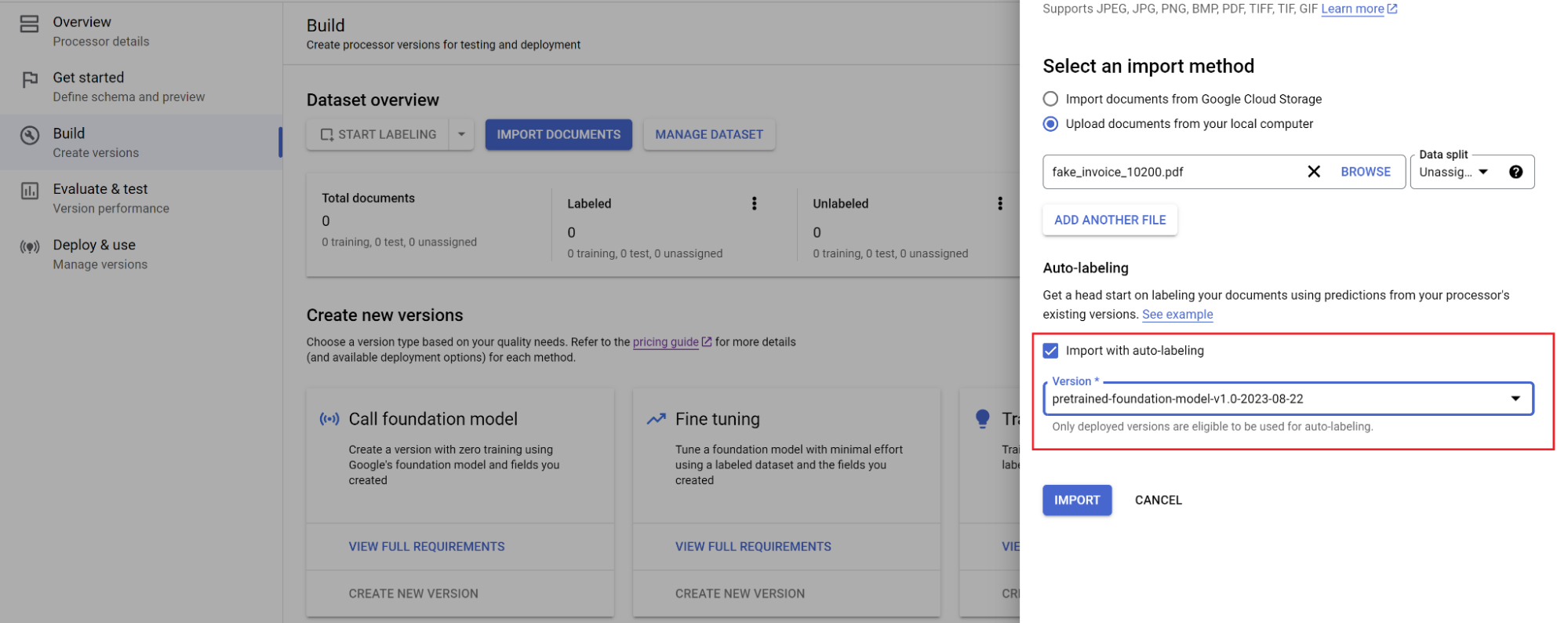

Go to the Build tab, then select Import documents.

Select the document path and the set to import the documents into. Select the auto-labeling checkbox, then select the foundation model.

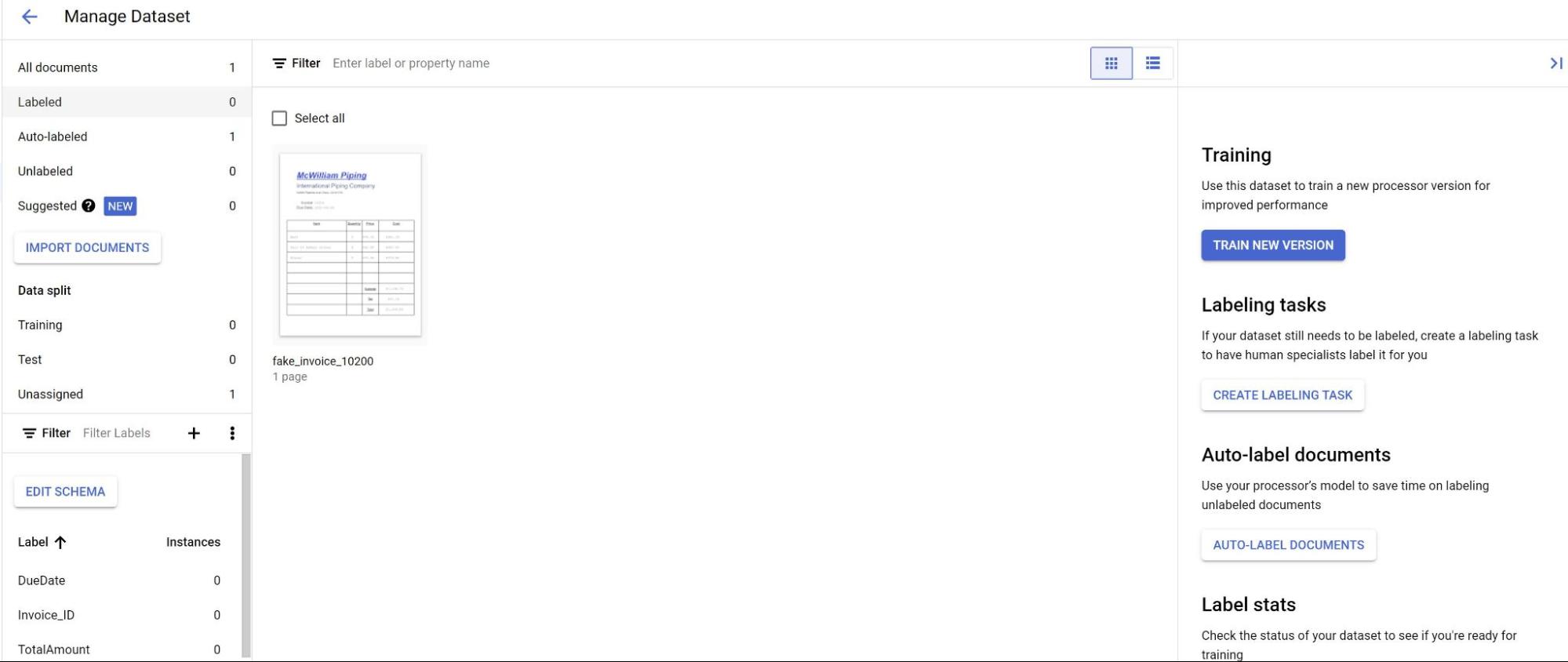

On the Build tab, select Manage Dataset. You should see the imported documents. Select one of them.

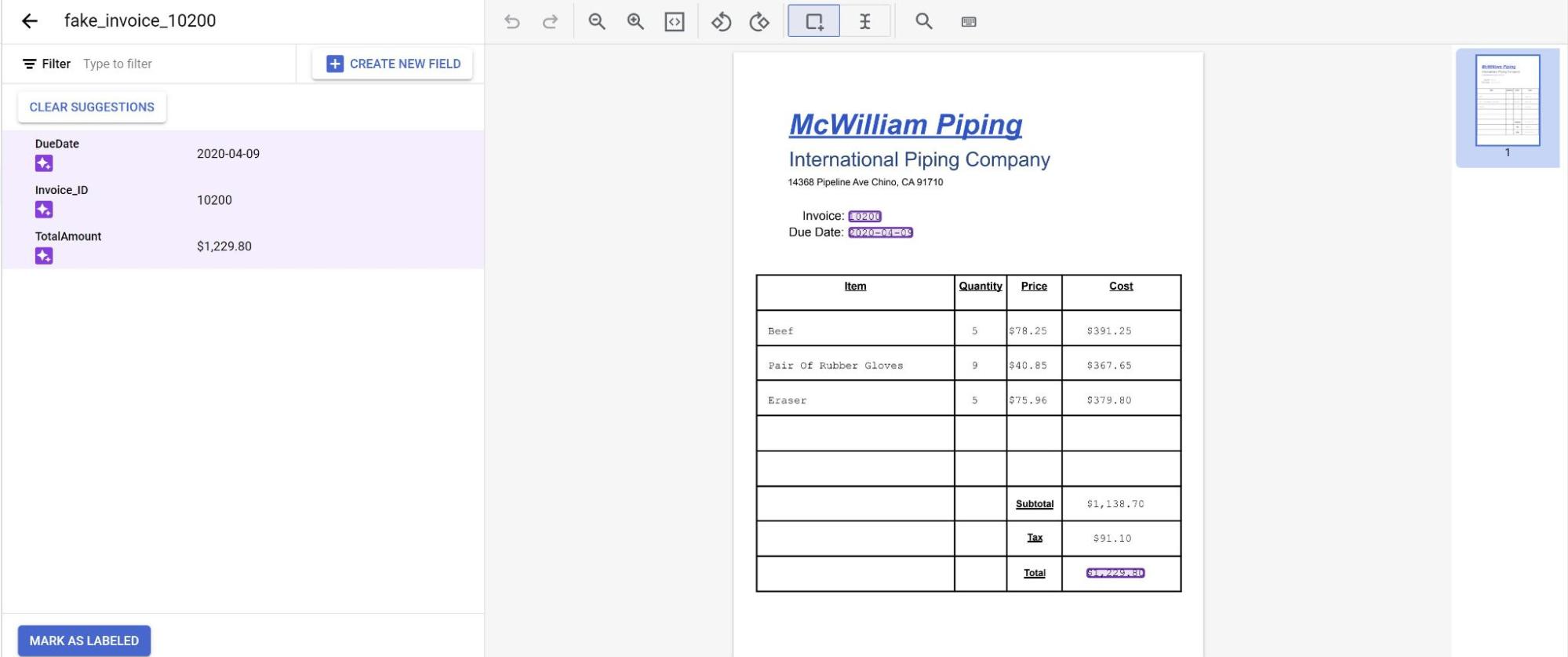

You should now see the model's predictions highlighted in purple.

- Review each label the model predicted and make sure it is correct. If any fields are missing, add them too.

- After reviewing the document, select Mark as labeled. The document is now ready to be used by the model. Make sure the document is in either the Test or Training set.
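Before training, it can help to double-check that every reviewed document is both marked as labeled and assigned to a set. The sketch below assumes you track document status yourself (for example, by noting what the Manage Dataset view shows); the `DatasetDocument` record and the `not_ready` helper are hypothetical and not part of any Document AI client library.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DatasetDocument:
    """Hypothetical local record of one document's review status."""
    name: str
    split: str            # "train", "test", or "unassigned"
    marked_labeled: bool  # True once "Mark as labeled" has been selected


def not_ready(docs: List[DatasetDocument]) -> List[str]:
    """Describe every document that is not yet usable for training or testing."""
    problems = []
    for doc in docs:
        if not doc.marked_labeled:
            problems.append(f"{doc.name}: not yet marked as labeled")
        if doc.split not in ("train", "test"):
            problems.append(f"{doc.name}: not in the Training or Test set")
    return problems


docs = [
    DatasetDocument("a.pdf", "train", True),
    DatasetDocument("b.pdf", "unassigned", True),
    DatasetDocument("c.pdf", "test", False),
]
for problem in not_ready(docs):
    print(problem)
```

An empty result means every document in the list has been reviewed and placed in a set.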