For streaming pipelines, a straggler is defined as a work item with the following characteristics:

- It prevents the watermark from advancing for a significant length of time (on the order of minutes).

- It processes for a long time relative to other work items in the same stage.

Stragglers hold back the watermark and add latency to the job. If the lag is acceptable for your use case, then you don't need to take any action. If you want to reduce a job's latency, start by addressing any stragglers.
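The two characteristics above can be read as a simple filter over the work items in a stage. The following is a minimal sketch of that reading only, not Dataflow's actual detection logic. The `WorkItem` fields, the `find_stragglers` helper, and both thresholds (`min_hold_s`, `slow_ratio`) are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from statistics import median
from typing import List


@dataclass
class WorkItem:
    """Hypothetical record for one work item in a stage."""
    item_id: str
    processing_s: float      # how long the item has been processing
    watermark_hold_s: float  # how long it has held back the watermark


def find_stragglers(items: List[WorkItem],
                    min_hold_s: float = 120.0,
                    slow_ratio: float = 5.0) -> List[str]:
    """Return IDs of items that meet both illustrative criteria.

    An item qualifies when it has held the watermark for at least
    `min_hold_s` seconds AND its processing time is at least
    `slow_ratio` times the median for the stage. Both thresholds
    are assumptions, not values used by Dataflow.
    """
    if not items:
        return []
    typical = median(i.processing_s for i in items)
    return [
        i.item_id
        for i in items
        if i.watermark_hold_s >= min_hold_s
        and i.processing_s >= slow_ratio * typical
    ]


stage = [
    WorkItem("a", 2.0, 0.0),
    WorkItem("b", 3.0, 0.0),
    WorkItem("c", 4.0, 0.0),
    WorkItem("d", 600.0, 590.0),
]
print(find_stragglers(stage))  # ['d']
```

Comparing against the stage median means that a stage where every work item is uniformly slow reports no stragglers, which matches the "relative to other work items" wording.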

View streaming stragglers in the Google Cloud console

After you start a Dataflow job, you can use the Google Cloud console to view any detected stragglers.

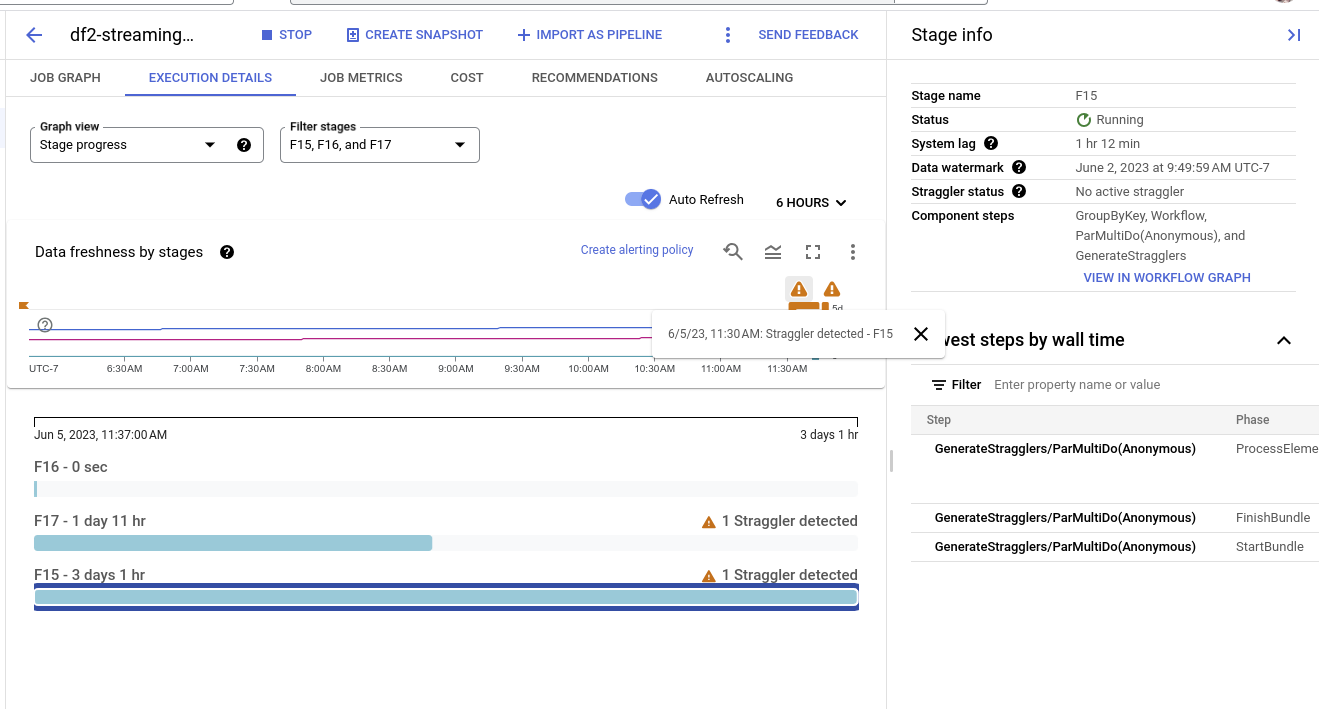

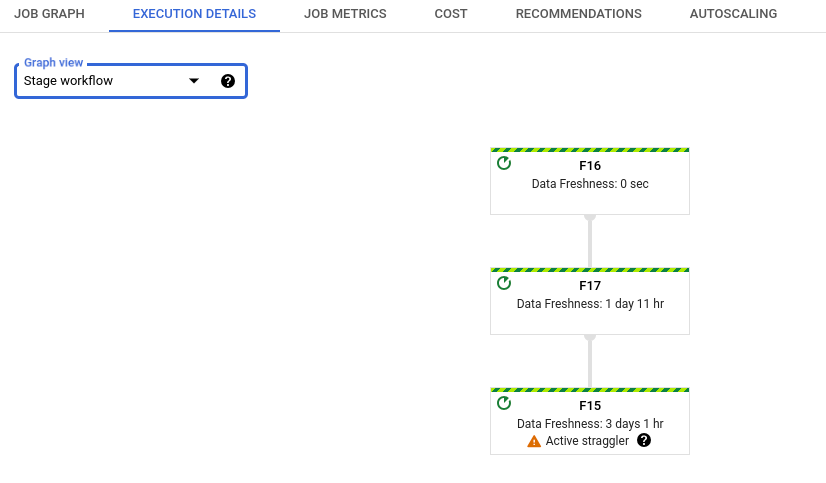

You can view streaming stragglers in the stage progress view or the stage workflow view.

View stragglers by stage progress

To view stragglers by stage progress:

In the Google Cloud console, go to the Dataflow Jobs page.

Click the name of the job.

In the Job details page, click the Execution details tab.

In the Graph view list, select Stage progress. The progress graph shows aggregated counts of all stragglers detected within each stage.

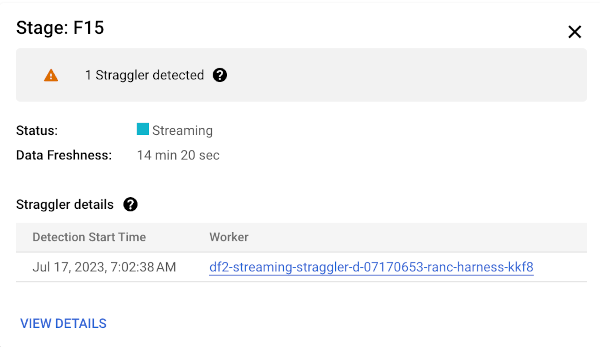

To see details for a stage, hold the pointer over the bar for the stage. The details pane includes a link to the worker logs. Clicking this link opens Cloud Logging scoped to the worker and the time range when the straggler was detected.
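If you want to reproduce a similar time-scoped query by hand, the sketch below builds a Cloud Logging filter string around the time a straggler was detected. It is an approximation under stated assumptions: the `straggler_log_filter` helper, the job ID, and the ten-minute window are hypothetical, and the filter scopes to the job rather than to a single worker, so it is broader than the link in the console.

```python
from datetime import datetime, timedelta, timezone


def straggler_log_filter(job_id: str,
                         detected_at: datetime,
                         window: timedelta = timedelta(minutes=10)) -> str:
    """Build a Logging filter for a window around `detected_at`.

    `detected_at` must be timezone-aware. The window size is an
    assumption; widen or narrow it as needed.
    """
    if detected_at.tzinfo is None:
        raise ValueError("detected_at must be timezone-aware")
    fmt = "%Y-%m-%dT%H:%M:%SZ"
    start = (detected_at - window).astimezone(timezone.utc).strftime(fmt)
    end = (detected_at + window).astimezone(timezone.utc).strftime(fmt)
    # In the Logging query language, lines are joined with an implicit AND.
    return "\n".join([
        'resource.type="dataflow_step"',
        f'resource.labels.job_id="{job_id}"',
        f'timestamp>="{start}"',
        f'timestamp<="{end}"',
        "severity>=WARNING",
    ])


# Hypothetical job ID and detection time, for illustration only.
print(straggler_log_filter(
    "example-job-id",
    datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc),
))
```

You could paste the resulting string into the Logs Explorer query box and then narrow it further to the worker shown in the straggler details.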

View stragglers by stage workflow

To view stragglers by stage workflow:

In the Google Cloud console, go to the Dataflow Jobs page.

Click the name of the job.

In the Job details page, click the Execution details tab.

In the Graph view list, select Stage workflow. The stage workflow shows the execution stages of the job, represented as a workflow graph.

Troubleshoot streaming stragglers

If a straggler is detected, it means that an operation in your pipeline has been running for an unusually long time.

To troubleshoot the issue, first check whether Dataflow insights pinpoints any issues.

If you still can't determine the cause, check the worker logs for the stage that reported the straggler. To see the relevant worker logs, view the straggler details in the stage progress. Then click the link for the worker. This link opens Cloud Logging, scoped to the worker and the time range when the straggler was detected. Look for problems that might be slowing down the stage, such as:

- Bugs in `DoFn` code or stuck `DoFns`. Look for stack traces in the logs, near the timestamp when the straggler was detected.

- Calls to external services that take a long time to complete. To mitigate this issue, batch calls to external services and set timeouts on RPCs.

- Quota limits in sinks. If your pipeline outputs to a Google Cloud service, you might be able to raise the quota. For more information, see the Cloud Quotas documentation. Also, consult the documentation for the particular service for optimization strategies, as well as the documentation for the I/O Connector.

- `DoFns` that perform large read or write operations on persistent state. Consider refactoring your code to perform smaller reads or writes on persistent state.
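As an illustration of the advice to batch calls to external services and set timeouts on RPCs, here is a minimal sketch in plain Python of logic you might call from inside a `DoFn`. The `rpc` callable, its `timeout` keyword, and the helper names are hypothetical stand-ins for your own service client, and the sketch assumes the client raises the built-in `TimeoutError`. Real clients often raise their own exception types, so adjust the `except` clause accordingly. In a Beam Python pipeline, the `BatchElements` transform may be a better fit for the grouping step than a hand-written helper.

```python
from typing import Callable, Iterable, Iterator, List, Tuple


def chunked(items: Iterable, size: int) -> Iterator[List]:
    """Yield successive lists of at most `size` items."""
    batch: List = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def process_in_batches(elements: Iterable,
                       rpc: Callable[..., List],
                       batch_size: int = 50,
                       timeout_s: float = 5.0) -> Tuple[List, List[List]]:
    """Call `rpc` once per batch, passing an explicit timeout.

    Returns (results, failed_batches). Batches that time out are
    collected instead of blocking the work item indefinitely, so the
    caller can retry them or route them to a dead-letter output.
    """
    results: List = []
    failed: List[List] = []
    for batch in chunked(elements, batch_size):
        try:
            # Hypothetical client call: one request per batch.
            results.extend(rpc(batch, timeout=timeout_s))
        except TimeoutError:  # assumption: the client raises this type
            failed.append(batch)
    return results, failed
```

Bounding each call with a timeout keeps one slow request from holding the watermark for the whole stage, at the cost of needing a retry or dead-letter path for the failed batches.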

You can also use the Side info panel to find the slowest steps in the stage. One of these steps might be causing the straggler. Click the step name to view the worker logs for that step.

After you determine the cause, update your pipeline with new code and monitor the result.

What's next

- Learn to use the Dataflow monitoring interface.

- Understand the Execution details tab in the monitoring interface.