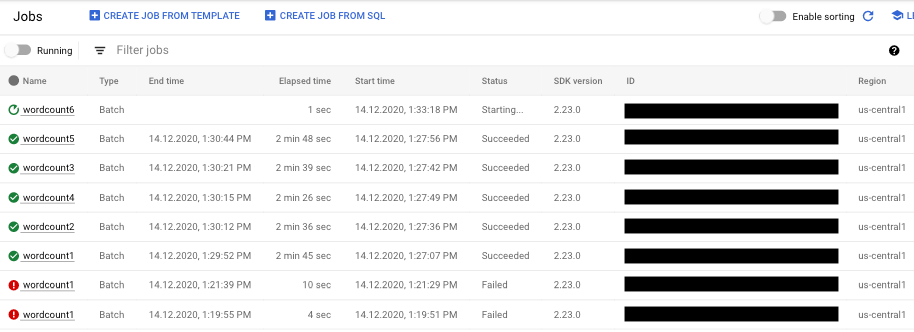

To see a list of your Dataflow jobs, go to the Dataflow > Jobs page in the Google Cloud console.

A list of Dataflow jobs appears along with their status.

A job can have the following statuses:

- —: the monitoring interface has not yet received a status from the Dataflow service.

- Running: the job is running.

- Starting...: the job is created, but the system needs some time to prepare before launching.

- Queued: either a FlexRS job is queued or a Flex Template job is being launched (which might take several minutes).

- Canceling...: the job is being canceled.

- Canceled: the job is canceled.

- Draining...: the job is being drained.

- Drained: the job is drained.

- Updating...: the job is being updated.

- Updated: the job is updated.

- Succeeded: the job has finished successfully.

- Failed: the job failed to complete.

Access job visualizers

To access charts for monitoring your job, click the job name in the Dataflow monitoring interface. The Job details page opens and contains the following information:

- Job graph: visual representation of your pipeline

- Execution details: tool to optimize your pipeline performance

- Job metrics: metrics about the execution of your job

- Cost: metrics about the estimated cost of your job

- Autoscaling: metrics related to streaming job autoscaling events

- Job info panel: descriptive information about your pipeline

- Job logs: logs generated by the Dataflow service at the job level

- Worker logs: logs generated by the Dataflow service at the worker level

- Diagnostics: table showing where errors occurred along the chosen timeline and possible recommendations for your pipeline

- Data sampling: tool that lets you observe the data at each step of a pipeline

On the Job details page, use the Job graph, Execution details, Job metrics, Cost, and Autoscaling tabs to switch between views of your job.

Use the Google Cloud CLI to list jobs

You can also use the Google Cloud CLI to get a list of your Dataflow jobs.

To list the Dataflow jobs in your project, use the dataflow jobs list command:

gcloud dataflow jobs list

The command returns a list of your current jobs. The following is a sample output:

ID                                        NAME                                  TYPE   CREATION_TIME        STATE   REGION
2015-06-03_16_39_22-4020553808241078833   wordcount-janedoe-0603233849          Batch  2015-06-03 16:39:22  Done    us-central1
2015-06-03_16_38_28-4363652261786938862   wordcount-johndoe-0603233820          Batch  2015-06-03 16:38:28  Done    us-central1
2015-05-21_16_24_11-17823098268333533078  bigquerytornadoes-johndoe-0521232402  Batch  2015-05-21 16:24:11  Done    europe-west1
2015-05-21_13_38_06-16409850040969261121  bigquerytornadoes-johndoe-0521203801  Batch  2015-05-21 13:38:06  Done    us-central1
2015-05-21_13_17_18-18349574013243942260  bigquerytornadoes-johndoe-0521201710  Batch  2015-05-21 13:17:18  Done    europe-west1
2015-05-21_12_49_37-9791290545307959963   wordcount-johndoe-0521194928          Batch  2015-05-21 12:49:37  Done    us-central1
2015-05-20_15_54_51-15905022415025455887  wordcount-johndoe-0520225444          Batch  2015-05-20 15:54:51  Failed  us-central1
2015-05-20_15_47_02-14774624590029708464  wordcount-johndoe-0520224637          Batch  2015-05-20 15:47:02  Done    us-central1
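If you need to post-process this listing in a script, the following Python sketch parses the default table layout into dictionaries and tallies jobs by state. It assumes whitespace-separated columns as in the sample above, with CREATION_TIME as the only column containing an internal space, and assumes job names contain no spaces. The names parse_jobs_table and SAMPLE_OUTPUT are illustrative, not part of any Google Cloud library.

```python
from collections import Counter

# Abbreviated copy of the sample output above (header plus three rows).
SAMPLE_OUTPUT = """\
ID                                        NAME                                  TYPE   CREATION_TIME        STATE   REGION
2015-06-03_16_39_22-4020553808241078833   wordcount-janedoe-0603233849          Batch  2015-06-03 16:39:22  Done    us-central1
2015-05-21_16_24_11-17823098268333533078  bigquerytornadoes-johndoe-0521232402  Batch  2015-05-21 16:24:11  Done    europe-west1
2015-05-20_15_54_51-15905022415025455887  wordcount-johndoe-0520225444          Batch  2015-05-20 15:54:51  Failed  us-central1
"""


def parse_jobs_table(text: str) -> list[dict[str, str]]:
    """Parse the default table printed by `gcloud dataflow jobs list`.

    Assumes whitespace-separated columns where only CREATION_TIME
    (date plus time) contains an internal space.
    """
    jobs = []
    for line in text.splitlines():
        fields = line.split()
        # Skip blank lines and the header row.
        if not fields or fields[0] == "ID":
            continue
        job_id, name, job_type, date, time, state, region = fields
        jobs.append({
            "id": job_id,
            "name": name,
            "type": job_type,
            "creation_time": f"{date} {time}",
            "state": state,
            "region": region,
        })
    return jobs


jobs = parse_jobs_table(SAMPLE_OUTPUT)
state_counts = Counter(job["state"] for job in jobs)
failed = [job["name"] for job in jobs if job["state"] == "Failed"]
print(state_counts)
print(failed)
```

Because the table layout is meant for people rather than programs, requesting structured output with gcloud's global --format flag (for example, --format=json) is likely the sturdier choice for anything beyond a quick script.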

To display more information about a job, use the dataflow jobs describe command:

gcloud dataflow jobs describe JOB_ID

Replace JOB_ID with the job ID. The output from this command looks similar to the following:

createTime: '2015-02-09T19:39:41.140Z'
currentState: JOB_STATE_DONE
currentStateTime: '2015-02-09T19:56:39.510Z'
id: 2015-02-09_11_39_40-15635991037808002875
name: tfidf-bchambers-0209193926
projectId: google.com:clouddfe
type: JOB_TYPE_BATCH

To format the result into JSON, run the command with the --format=json option:

gcloud --format=json dataflow jobs describe JOB_ID
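The JSON form is convenient to consume from code. The following Python sketch shows a helper that runs the describe command through subprocess, plus a function that reads a few fields from the result. It assumes that gcloud is installed and authenticated and that the JSON output uses the same field names as the YAML sample shown earlier; describe_job and summarize are hypothetical helper names. The last lines work on a copy of the sample values so that the parsing step can be tried without calling gcloud.

```python
import json
import subprocess

# Assumed JSON equivalent of the YAML sample above (same fields and values).
SAMPLE_DESCRIBE = """
{
  "createTime": "2015-02-09T19:39:41.140Z",
  "currentState": "JOB_STATE_DONE",
  "currentStateTime": "2015-02-09T19:56:39.510Z",
  "id": "2015-02-09_11_39_40-15635991037808002875",
  "name": "tfidf-bchambers-0209193926",
  "projectId": "google.com:clouddfe",
  "type": "JOB_TYPE_BATCH"
}
"""


def describe_job(job_id: str) -> dict:
    """Run `gcloud --format=json dataflow jobs describe JOB_ID` and parse stdout.

    Requires an installed, authenticated gcloud CLI; raises
    subprocess.CalledProcessError if the command fails.
    """
    result = subprocess.run(
        ["gcloud", "--format=json", "dataflow", "jobs", "describe", job_id],
        capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)


def summarize(job: dict) -> str:
    """Return a one-line summary built from a few describe fields."""
    return f'{job["name"]} ({job["id"]}): {job["currentState"]}'


# Offline example using the sample values instead of a live gcloud call.
job = json.loads(SAMPLE_DESCRIBE)
print(summarize(job))
```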

Archive (hide) Dataflow jobs from a list

When you archive a Dataflow job, the job is removed from the list of jobs on the Dataflow Jobs page in the console and moved to an archived jobs list. You can archive only completed jobs, which include jobs in the following states:

- JOB_STATE_CANCELLED

- JOB_STATE_DRAINED

- JOB_STATE_DONE

- JOB_STATE_FAILED

- JOB_STATE_UPDATED
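Before archiving a job from a script, you might compare the job's currentState (for example, from the describe output shown earlier) against these states. A minimal Python sketch; ARCHIVABLE_STATES and can_archive are hypothetical names.

```python
# Completed states that, per the list above, allow a job to be archived.
ARCHIVABLE_STATES = frozenset({
    "JOB_STATE_CANCELLED",
    "JOB_STATE_DRAINED",
    "JOB_STATE_DONE",
    "JOB_STATE_FAILED",
    "JOB_STATE_UPDATED",
})


def can_archive(current_state: str) -> bool:
    """Return True if a job whose currentState is `current_state` can be archived."""
    return current_state in ARCHIVABLE_STATES


# A running or draining job has not completed, so it cannot be archived yet.
for state in ("JOB_STATE_DONE", "JOB_STATE_RUNNING", "JOB_STATE_DRAINING"):
    print(state, can_archive(state))
```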

For more information on verifying these states, see Detect Dataflow job completion.

For troubleshooting information when you are archiving jobs, see Archive job errors in "Troubleshoot Dataflow errors."

All archived jobs are deleted after a 30-day retention period.

Archive a job

Follow these steps to remove a completed job from the main jobs list on the Dataflow Jobs page.

Console

In the Google Cloud console, go to the Dataflow Jobs page.

A list of Dataflow jobs appears along with their status.

Select a job.

On the Job Details page, click Archive. If the job hasn't completed, the Archive option isn't available.

REST

To archive a job using the API, use the projects.locations.jobs.update method.

In this request, you must specify an updated JobMetadata object. In the JobMetadata.userDisplayProperties object, use the key-value pair "archived":"true".

In addition to the updated JobMetadata object, your API request must also include the updateMask query parameter in the request URL:

https://dataflow.googleapis.com/v1b3/[...]/jobs/JOB_ID/?updateMask=job_metadata.user_display_properties.archived

Before using any of the request data, make the following replacements:

- PROJECT_ID: your project ID

- REGION: a Dataflow region

- JOB_ID: the ID of your Dataflow job

HTTP method and URL:

PUT https://dataflow.googleapis.com/v1b3/projects/PROJECT_ID/locations/REGION/jobs/JOB_ID/?updateMask=job_metadata.user_display_properties.archived

Request JSON body:

{
  "job_metadata": {
    "userDisplayProperties": {
      "archived": "true"
    }
  }
}

To send your request, choose one of these options:

curl

Save the request body in a file named request.json, and execute the following command:

curl -X PUT \
     -H "Authorization: Bearer $(gcloud auth print-access-token)" \
     -H "Content-Type: application/json; charset=utf-8" \
     -d @request.json \
     "https://dataflow.googleapis.com/v1b3/projects/PROJECT_ID/locations/REGION/jobs/JOB_ID/?updateMask=job_metadata.user_display_properties.archived"

PowerShell

Save the request body in a file named request.json, and execute the following command:

$cred = gcloud auth print-access-token
$headers = @{ "Authorization" = "Bearer $cred" }

Invoke-WebRequest `
    -Method PUT `
    -Headers $headers `
    -ContentType: "application/json; charset=utf-8" `
    -InFile request.json `
    -Uri "https://dataflow.googleapis.com/v1b3/projects/PROJECT_ID/locations/REGION/jobs/JOB_ID/?updateMask=job_metadata.user_display_properties.archived" | Select-Object -Expand Content
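If you prefer to call the API from Python instead of curl or PowerShell, the following sketch builds the same PUT request with the standard library's urllib.request. It assumes that the access token is obtained the same way as in the examples above (gcloud auth print-access-token). The function names are hypothetical, and only send() makes a network call.

```python
import json
import subprocess
import urllib.request


def build_archive_request(project_id: str, region: str, job_id: str,
                          access_token: str) -> urllib.request.Request:
    """Build the jobs.update PUT request that sets "archived" to "true"."""
    url = (
        "https://dataflow.googleapis.com/v1b3/"
        f"projects/{project_id}/locations/{region}/jobs/{job_id}/"
        "?updateMask=job_metadata.user_display_properties.archived"
    )
    # Same body as request.json above.
    body = {"job_metadata": {"userDisplayProperties": {"archived": "true"}}}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json; charset=utf-8",
        },
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and return the parsed JSON response (network call)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


def access_token() -> str:
    """Fetch a token with the same gcloud command used in the curl example."""
    return subprocess.run(
        ["gcloud", "auth", "print-access-token"],
        capture_output=True, text=True, check=True,
    ).stdout.strip()
```

For example, with the placeholders replaced: send(build_archive_request("PROJECT_ID", "REGION", "JOB_ID", access_token())).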

You should receive a JSON response similar to the following:

{
  "id": "JOB_ID",
  "projectId": "PROJECT_ID",
  "currentState": "JOB_STATE_DONE",
  "currentStateTime": "2025-05-20T20:54:41.651442Z",
  "createTime": "2025-05-20T20:51:06.031248Z",
  "jobMetadata": {
    "userDisplayProperties": {
      "archived": "true"
    }
  },
  "startTime": "2025-05-20T20:51:06.031248Z"
}
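In this response, archived is a string ("true" or "false") rather than a JSON boolean, so a script that confirms the change should compare strings. A small Python sketch; is_archived is a hypothetical helper, and treating a missing jobMetadata or userDisplayProperties field as "not archived" is an assumption.

```python
import json

# Response body copied from the sample above.
SAMPLE_RESPONSE = """
{
  "id": "JOB_ID",
  "projectId": "PROJECT_ID",
  "currentState": "JOB_STATE_DONE",
  "currentStateTime": "2025-05-20T20:54:41.651442Z",
  "createTime": "2025-05-20T20:51:06.031248Z",
  "jobMetadata": {"userDisplayProperties": {"archived": "true"}},
  "startTime": "2025-05-20T20:51:06.031248Z"
}
"""


def is_archived(job: dict) -> bool:
    """Return True if the job's archived display property is the string "true".

    Assumption: a missing jobMetadata or userDisplayProperties means not archived.
    """
    props = job.get("jobMetadata", {}).get("userDisplayProperties", {})
    return props.get("archived") == "true"


print(is_archived(json.loads(SAMPLE_RESPONSE)))
```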

gcloud

This command archives a single job. The job must be in a terminal state to be archived; otherwise, the request is rejected. After you run the command, you must confirm that you want to proceed.

Before using any of the command data below, make the following replacements:

- JOB_ID: The ID of the Dataflow job that you want to archive.

- REGION_ID: Optional. The Dataflow region of your job.

Execute the following command:

Linux, macOS, or Cloud Shell

gcloud dataflow jobs archive JOB_ID --region=REGION_ID

Windows (PowerShell)

gcloud dataflow jobs archive JOB_ID --region=REGION_ID

Windows (cmd.exe)

gcloud dataflow jobs archive JOB_ID --region=REGION_ID

You should receive a response similar to the following:

Archived job [JOB_ID].
createTime: '2025-06-29T11:00:02.432552Z'
currentState: JOB_STATE_DONE
currentStateTime: '2025-06-29T11:04:25.125921Z'
id: JOB_ID
jobMetadata:
  userDisplayProperties:
    archived: 'true'
projectId: PROJECT_ID
startTime: '2025-06-29T11:00:02.432552Z'
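To run the archive command from a script, you can wrap it with Python's subprocess module. This sketch assumes that gcloud's global --quiet flag, which disables interactive prompts, accepts the confirmation prompt's default and lets the command proceed; verify that behavior before relying on it. Because it is not certain whether gcloud prints the "Archived job [...]" line to stdout or stderr, the sketch checks both. All helper names are hypothetical.

```python
import subprocess
from typing import Optional


def archive_command(job_id: str, region: Optional[str] = None,
                    quiet: bool = False) -> list[str]:
    """Build the argv for `gcloud dataflow jobs archive`."""
    argv = ["gcloud", "dataflow", "jobs", "archive", job_id]
    if region is not None:  # --region is optional
        argv.append(f"--region={region}")
    if quiet:
        # Global gcloud flag that disables interactive prompts; assumed here
        # to accept the confirmation prompt's default.
        argv.append("--quiet")
    return argv


def archive_succeeded(output: str, job_id: str) -> bool:
    """Check for the confirmation line shown in the sample response."""
    return f"Archived job [{job_id}]" in output


def archive_job(job_id: str, region: Optional[str] = None) -> bool:
    """Archive a job without prompting (requires an authenticated gcloud)."""
    result = subprocess.run(archive_command(job_id, region, quiet=True),
                            capture_output=True, text=True, check=True)
    return archive_succeeded(result.stdout + result.stderr, job_id)
```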

View and restore archived jobs

Follow these steps to view archived jobs or to restore archived jobs to the main jobs list on the Dataflow Jobs page.

Console

In the Google Cloud console, go to the Dataflow Jobs page.

Click the Archived toggle. A list of archived Dataflow jobs appears.

Select a job.

On the Job details page, click Restore to return the job to the main jobs list on the Dataflow Jobs page.

REST

To restore an archived job using the API, use the projects.locations.jobs.update method.

In this request, you must specify an updated JobMetadata object. In the JobMetadata.userDisplayProperties object, use the key-value pair "archived":"false".

In addition to the updated JobMetadata object, your API request must also include the updateMask query parameter in the request URL:

https://dataflow.googleapis.com/v1b3/[...]/jobs/JOB_ID/?updateMask=job_metadata.user_display_properties.archived

Before using any of the request data, make the following replacements:

- PROJECT_ID: your project ID

- REGION: a Dataflow region

- JOB_ID: the ID of your Dataflow job

HTTP method and URL:

PUT https://dataflow.googleapis.com/v1b3/projects/PROJECT_ID/locations/REGION/jobs/JOB_ID/?updateMask=job_metadata.user_display_properties.archived

Request JSON body:

{
  "job_metadata": {
    "userDisplayProperties": {
      "archived": "false"
    }
  }
}

To send your request, choose one of these options:

curl

Save the request body in a file named request.json, and execute the following command:

curl -X PUT \
     -H "Authorization: Bearer $(gcloud auth print-access-token)" \
     -H "Content-Type: application/json; charset=utf-8" \
     -d @request.json \
     "https://dataflow.googleapis.com/v1b3/projects/PROJECT_ID/locations/REGION/jobs/JOB_ID/?updateMask=job_metadata.user_display_properties.archived"

PowerShell

Save the request body in a file named request.json, and execute the following command:

$cred = gcloud auth print-access-token
$headers = @{ "Authorization" = "Bearer $cred" }

Invoke-WebRequest `
    -Method PUT `
    -Headers $headers `
    -ContentType: "application/json; charset=utf-8" `
    -InFile request.json `
    -Uri "https://dataflow.googleapis.com/v1b3/projects/PROJECT_ID/locations/REGION/jobs/JOB_ID/?updateMask=job_metadata.user_display_properties.archived" | Select-Object -Expand Content

You should receive a JSON response similar to the following:

{
  "id": "JOB_ID",
  "projectId": "PROJECT_ID",
  "currentState": "JOB_STATE_DONE",
  "currentStateTime": "2025-05-20T20:54:41.651442Z",
  "createTime": "2025-05-20T20:51:06.031248Z",
  "jobMetadata": {
    "userDisplayProperties": {
      "archived": "false"
    }
  },
  "startTime": "2025-05-20T20:51:06.031248Z"
}
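The update method acts on one job per call (the job ID is part of the URL path), so restoring several archived jobs means sending one request per job ID. The following Python sketch generates those requests with urllib.request. It assumes that all of the jobs share the same project and region, and restore_requests is a hypothetical helper name.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def restore_requests(project_id: str, region: str, job_ids: Iterable[str],
                     access_token: str) -> Iterator[urllib.request.Request]:
    """Yield one jobs.update PUT request per job that sets "archived" to "false"."""
    # Same body as the restore request.json above, shared by every request.
    body = json.dumps(
        {"job_metadata": {"userDisplayProperties": {"archived": "false"}}}
    ).encode("utf-8")
    for job_id in job_ids:
        url = (
            "https://dataflow.googleapis.com/v1b3/"
            f"projects/{project_id}/locations/{region}/jobs/{job_id}/"
            "?updateMask=job_metadata.user_display_properties.archived"
        )
        yield urllib.request.Request(
            url,
            data=body,
            method="PUT",
            headers={
                "Authorization": f"Bearer {access_token}",
                "Content-Type": "application/json; charset=utf-8",
            },
        )
```

To send them, you could pass each request to urllib.request.urlopen, using a token from gcloud auth print-access-token.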