This page describes the Vertex AI Search for commerce Conversational Commerce agent, a guided search capability. Conversational Commerce agent is part of the guided search package and gives users a real-time, ongoing conversational experience that's more interactive than keyword search.

What is Conversational Commerce agent?

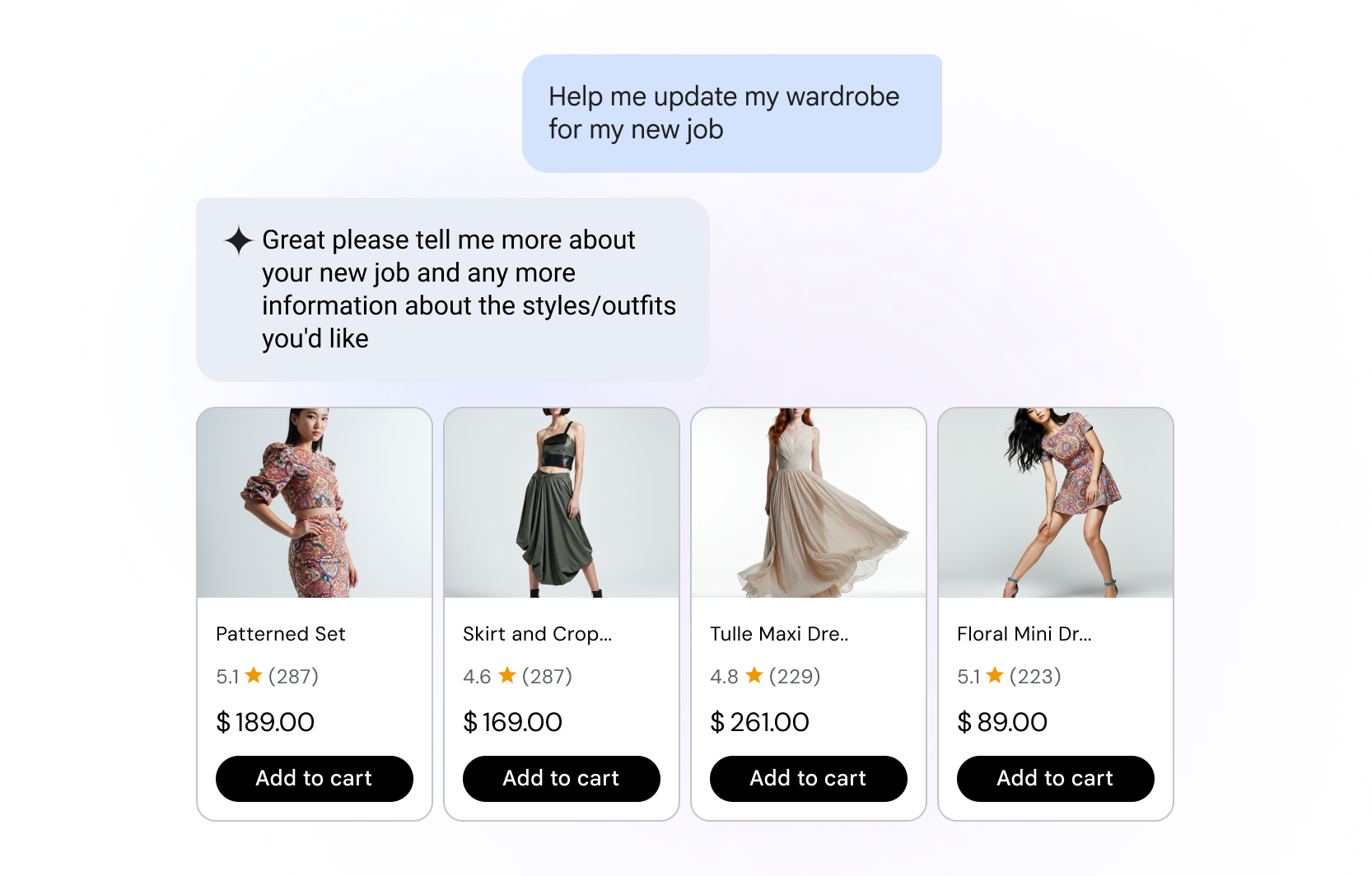

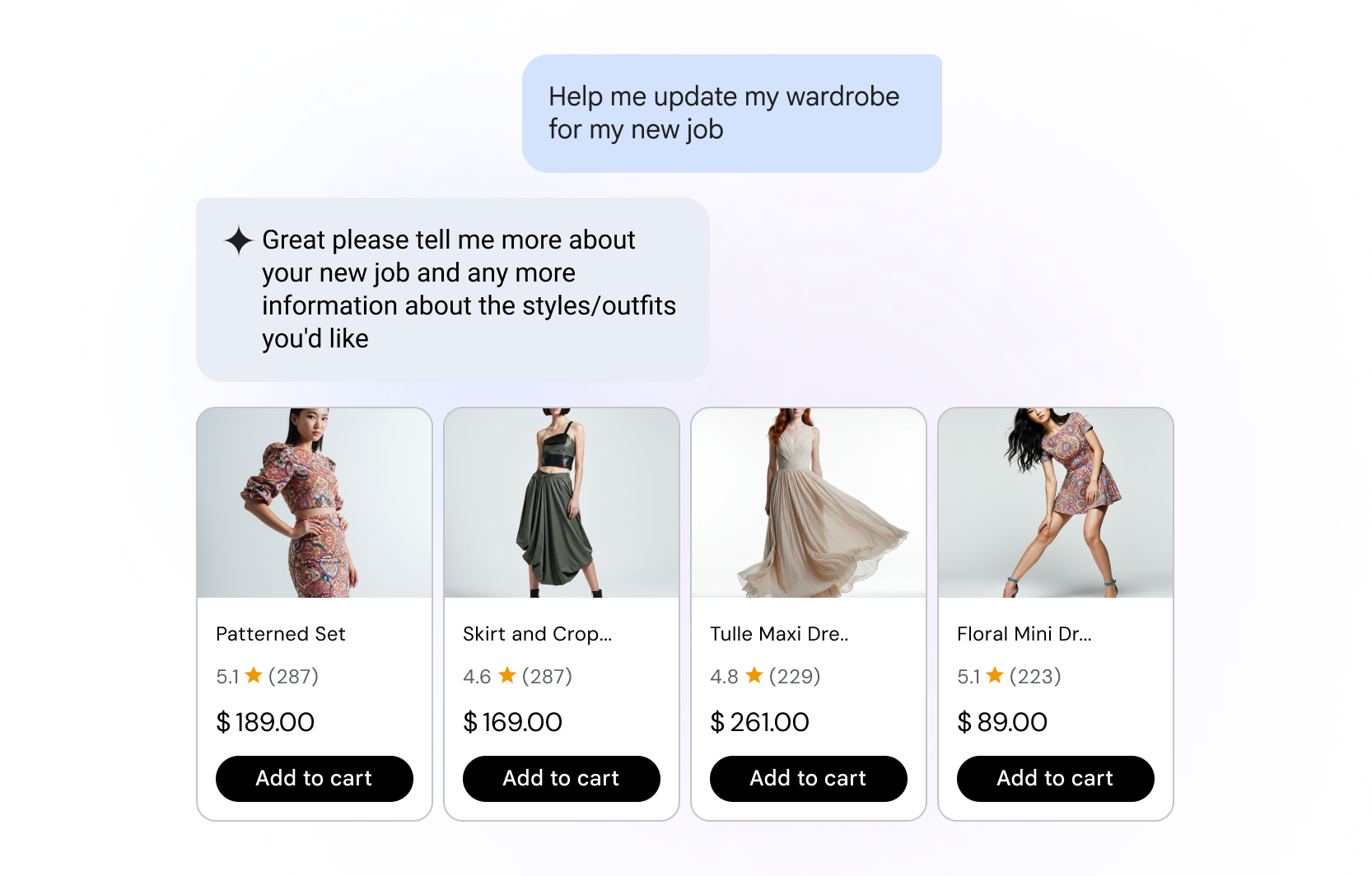

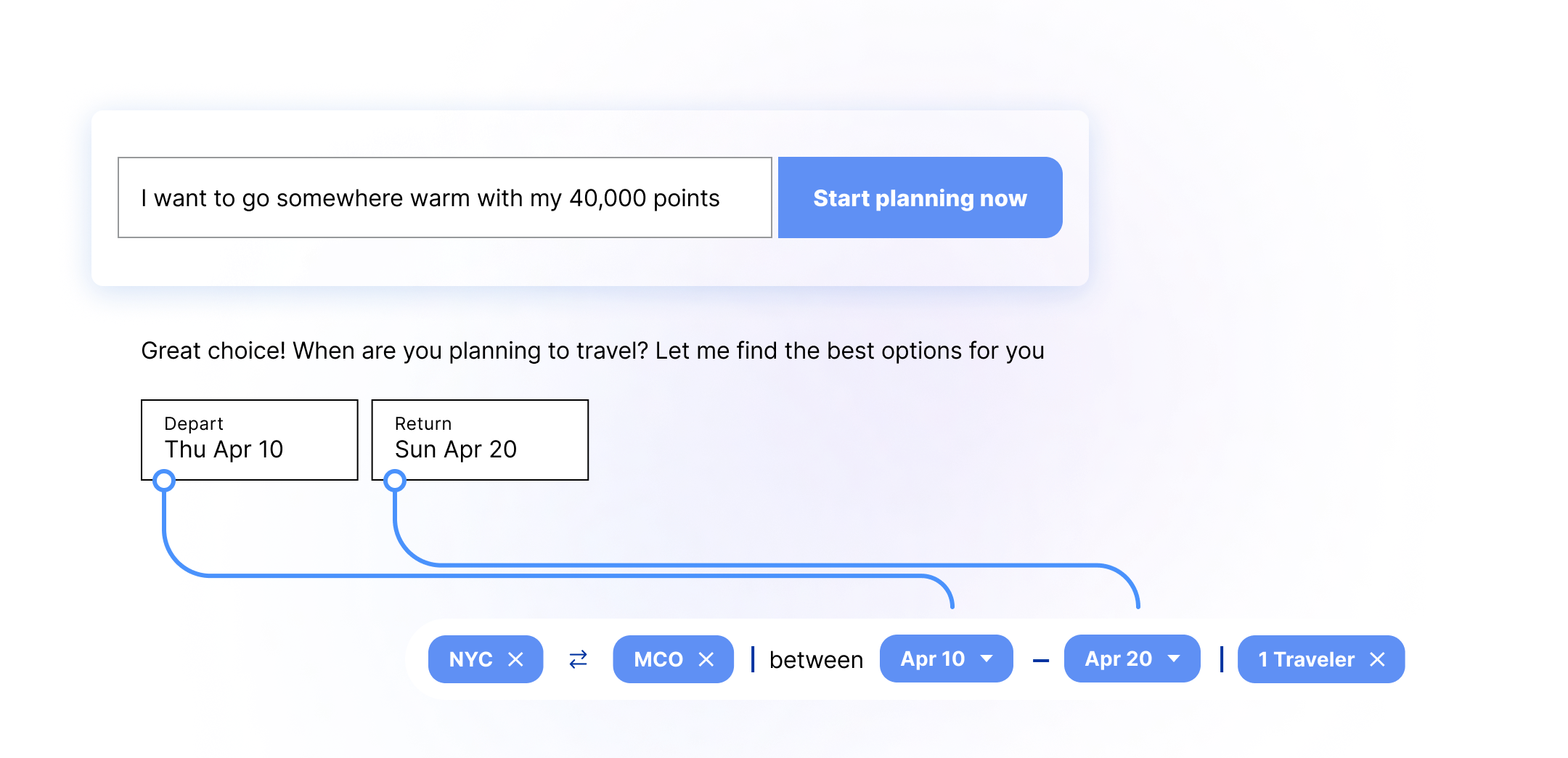

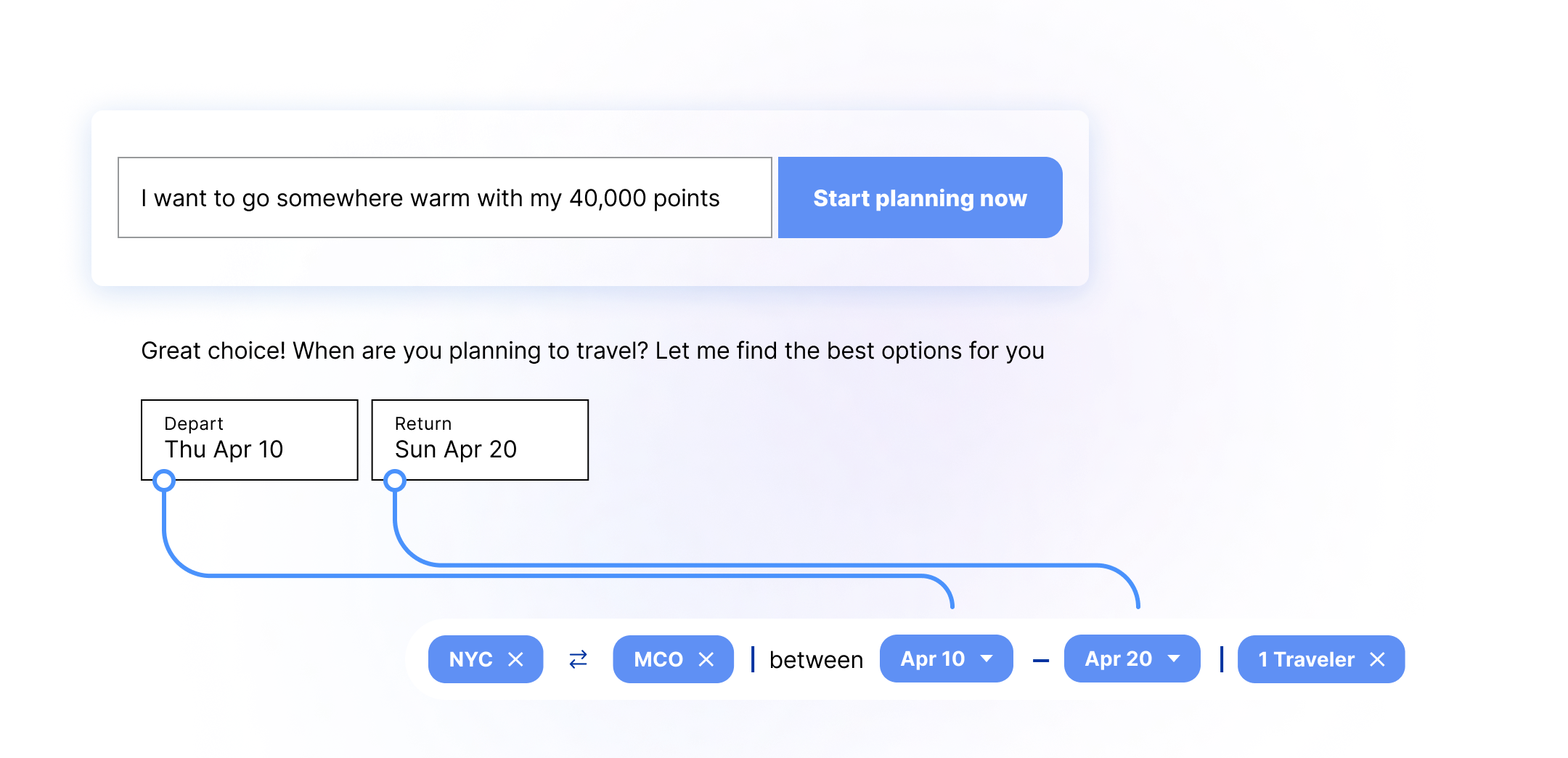

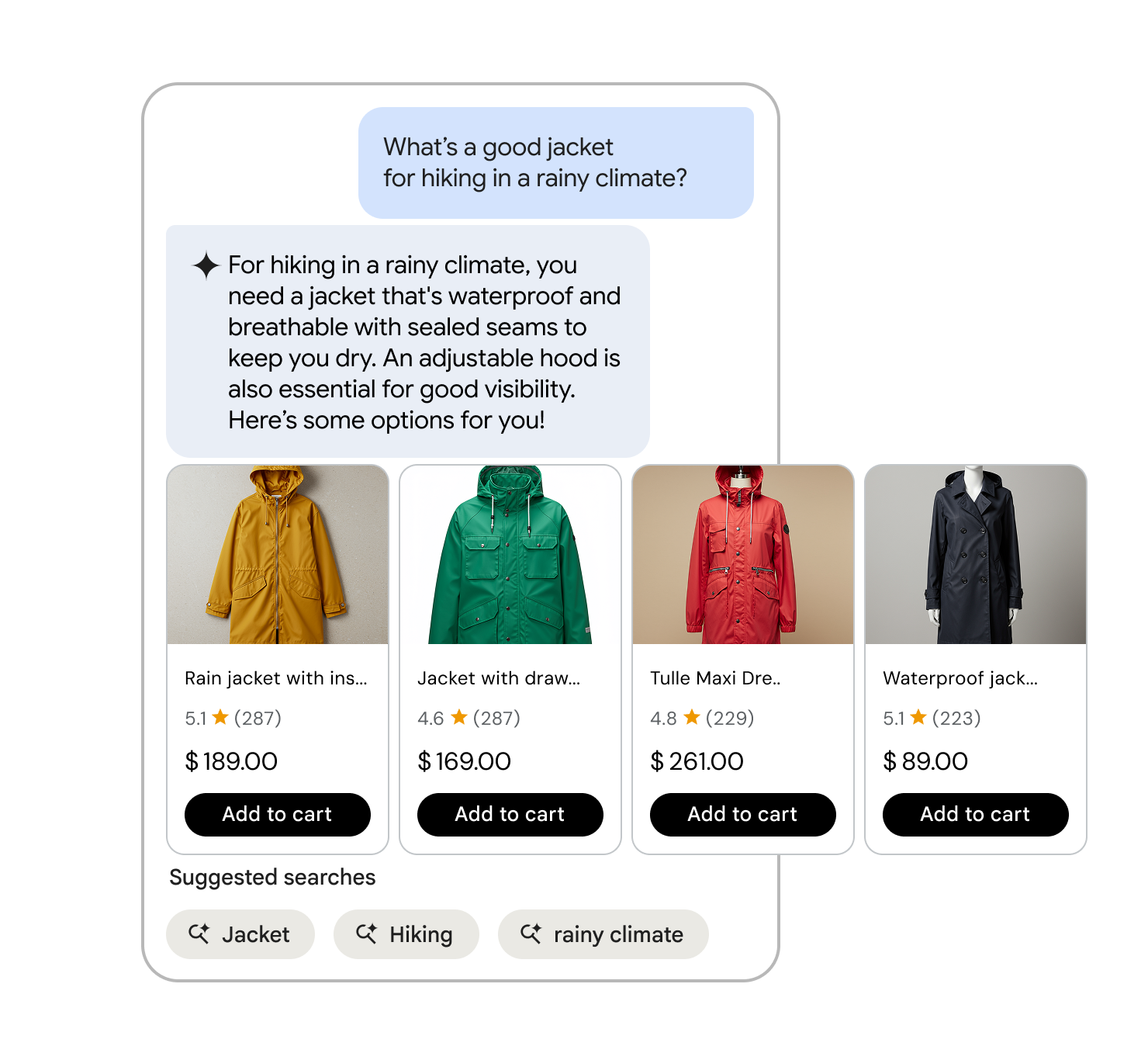

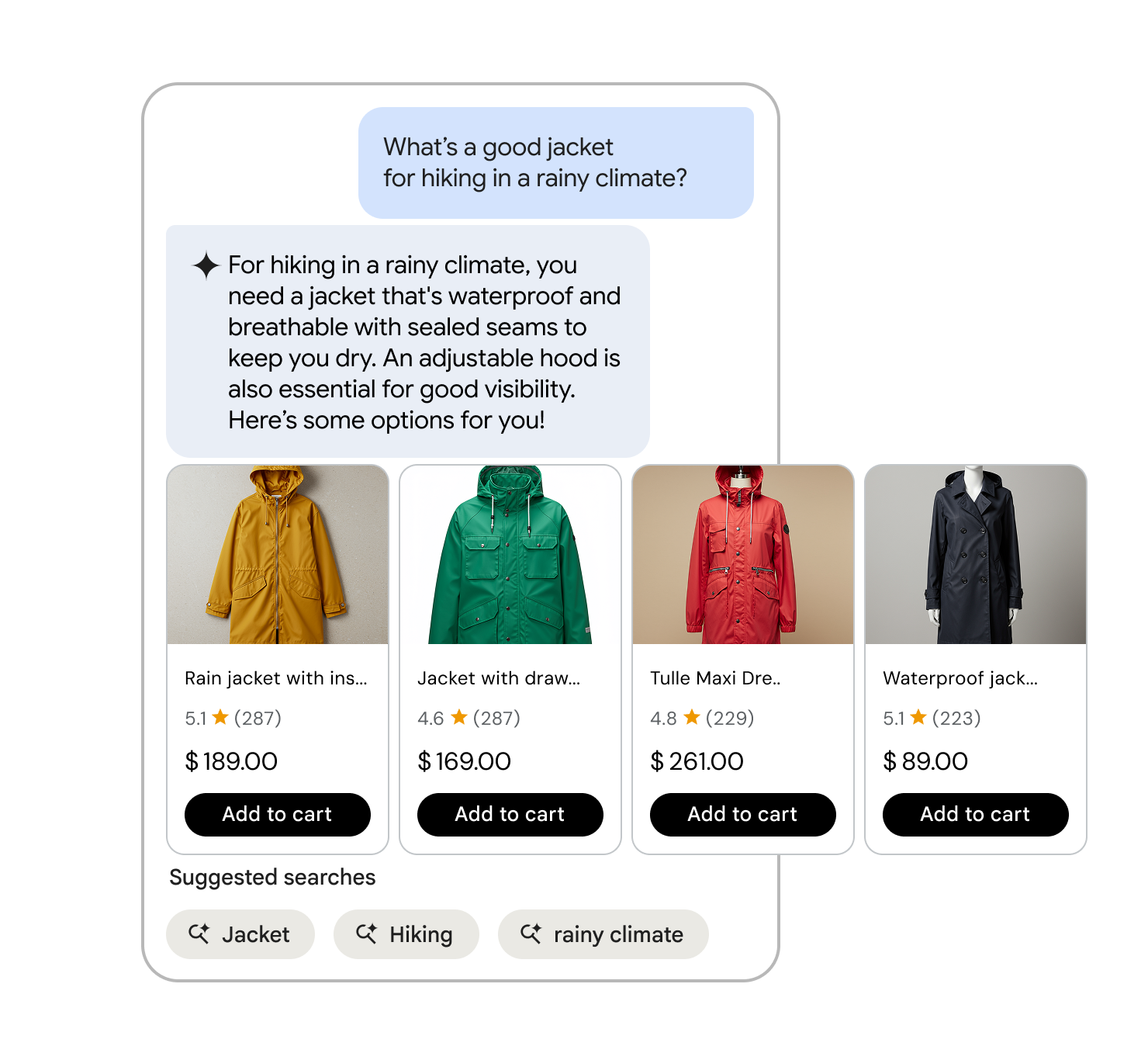

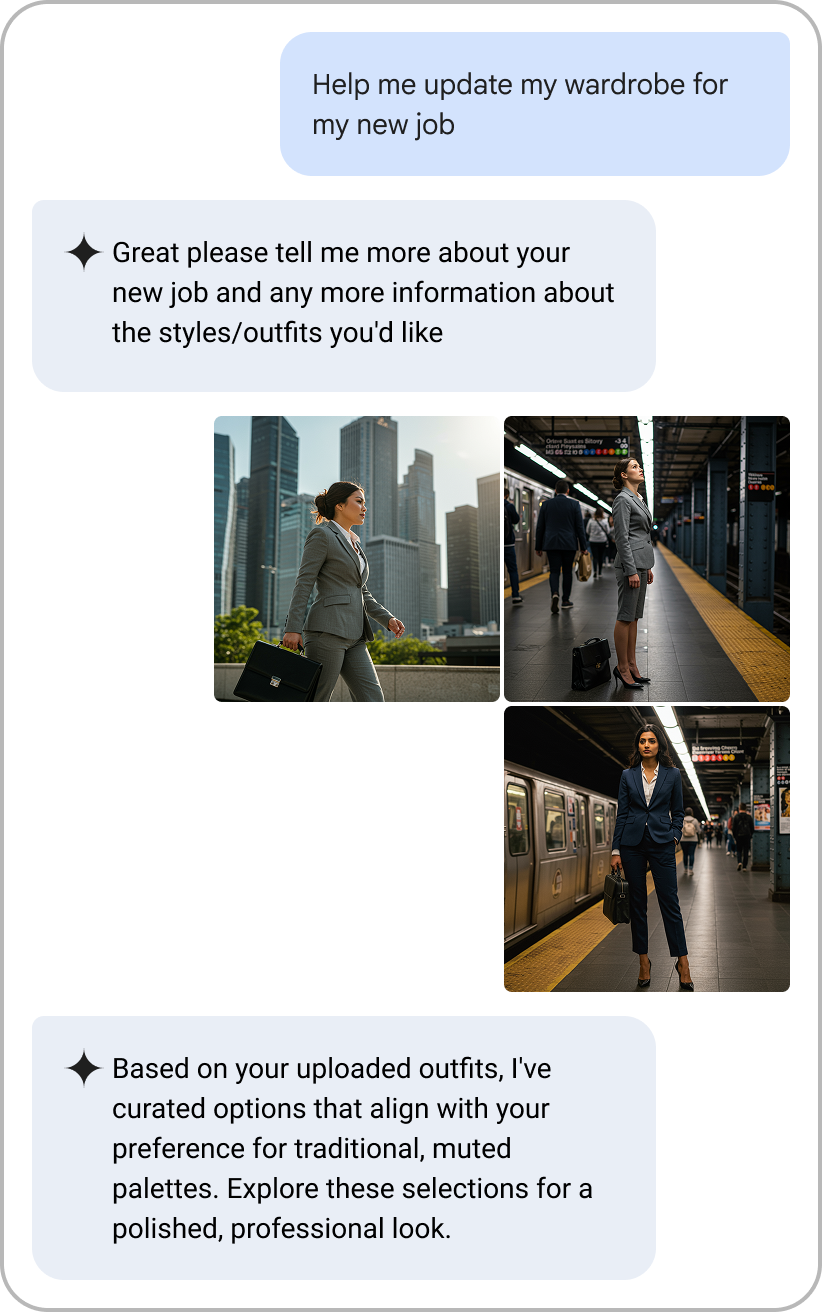

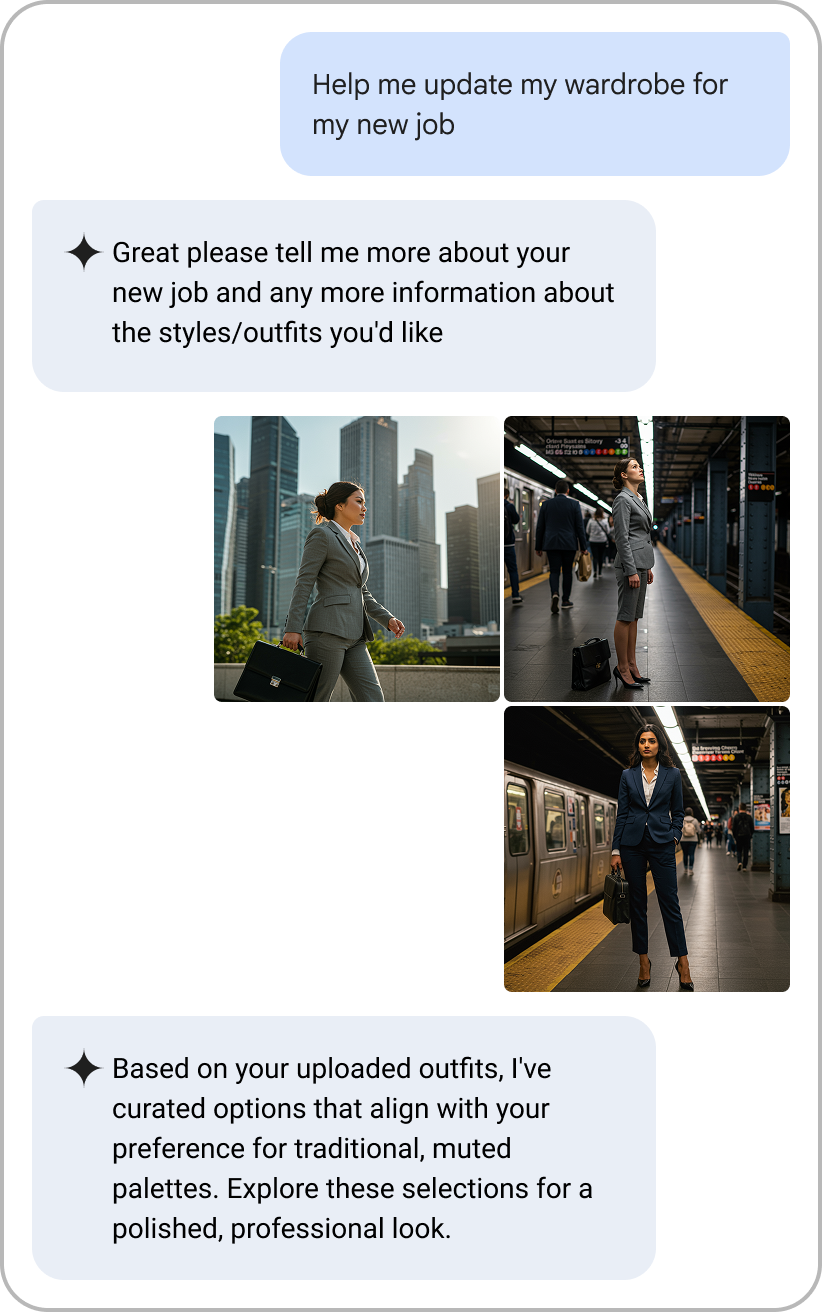

Conversational Commerce agent is an AI-driven guided search and product discovery tool. Instead of searching with keywords, users describe what they need in natural language. The agent supports follow-up questions and multimodal interactions, understands user intent more accurately, and grounds its answers in data beyond the product catalog.

Use case: Handles natural language queries that go beyond core product searches, such as:

- Help me plan a party

- Compare 1% and 2% milk

- What are the best running shoes for trails?

Key difference from conversational product filtering: Provides AI-generated text answers and understands broader user intent.

Remember the architecture: The two-API model is still in effect. You must call the Search API to get product results based on suggestions from the Conversational API.
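The two-call flow can be sketched as follows. This is a minimal, offline Python sketch under stated assumptions: `call_conversational_api`, `call_search_api`, `ConversationalReply`, and its fields (`answer_text`, `refined_query`, `filters`) are hypothetical stand-ins, not the real Vertex AI Search for commerce client library or response schema. In a real integration, replace them with actual client calls.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationalReply:
    """Hypothetical shape of a Conversational API reply (assumed, not the real schema)."""
    answer_text: str    # AI-generated text answer shown to the user
    refined_query: str  # hypothetical query suggestion to pass on to the Search API
    filters: dict[str, str] = field(default_factory=dict)  # hypothetical suggested filters


def call_conversational_api(user_message: str) -> ConversationalReply:
    """Hypothetical stand-in for the Conversational API; a toy rule so the sketch runs offline."""
    if "trail" in user_message.lower():
        return ConversationalReply(
            answer_text="Trail runners usually want grip and ankle support.",
            refined_query="trail running shoes",
            filters={"category": "shoes"},
        )
    return ConversationalReply(answer_text="Can you tell me more?", refined_query=user_message)


def call_search_api(query: str, filters: dict[str, str]) -> list[str]:
    """Hypothetical stand-in for the Search API over a tiny in-memory catalog."""
    catalog = [
        {"title": "Trail running shoes X", "category": "shoes"},
        {"title": "Road running shoes Y", "category": "shoes"},
        {"title": "Trail running socks", "category": "apparel"},
    ]
    terms = query.lower().split()
    # Keep products whose title contains every query term and that match every filter.
    return [
        p["title"]
        for p in catalog
        if all(t in p["title"].lower() for t in terms)
        and all(p.get(k) == v for k, v in filters.items())
    ]


def handle_turn(user_message: str) -> tuple[str, list[str]]:
    """One conversational turn: get the text answer, then fetch products through search."""
    reply = call_conversational_api(user_message)
    # In this sketch, products come only from the second call: the Search API is
    # queried with the suggestion returned by the Conversational API.
    products = call_search_api(reply.refined_query, reply.filters)
    return reply.answer_text, products
```

For example, `handle_turn("What are the best running shoes for trails?")` returns the toy answer text together with `["Trail running shoes X"]` from the in-memory catalog. Keeping the two calls separate mirrors the two-API model: the text answer and the product results come from different services.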

Conversational Commerce agent capabilities

Conversational Commerce agent adds to the Vertex AI Search for commerce experience in the following ways:

- Narrows user queries effectively: Conversational Commerce agent can narrow 10,000 products down to fewer than 100, increasing the likelihood that the user decides to make a purchase.

- Hyper-personalization: Search agents analyze shoppers' preferences, purchase history, and social media activity to provide more personalized product recommendations, promotions, and shopping experiences.

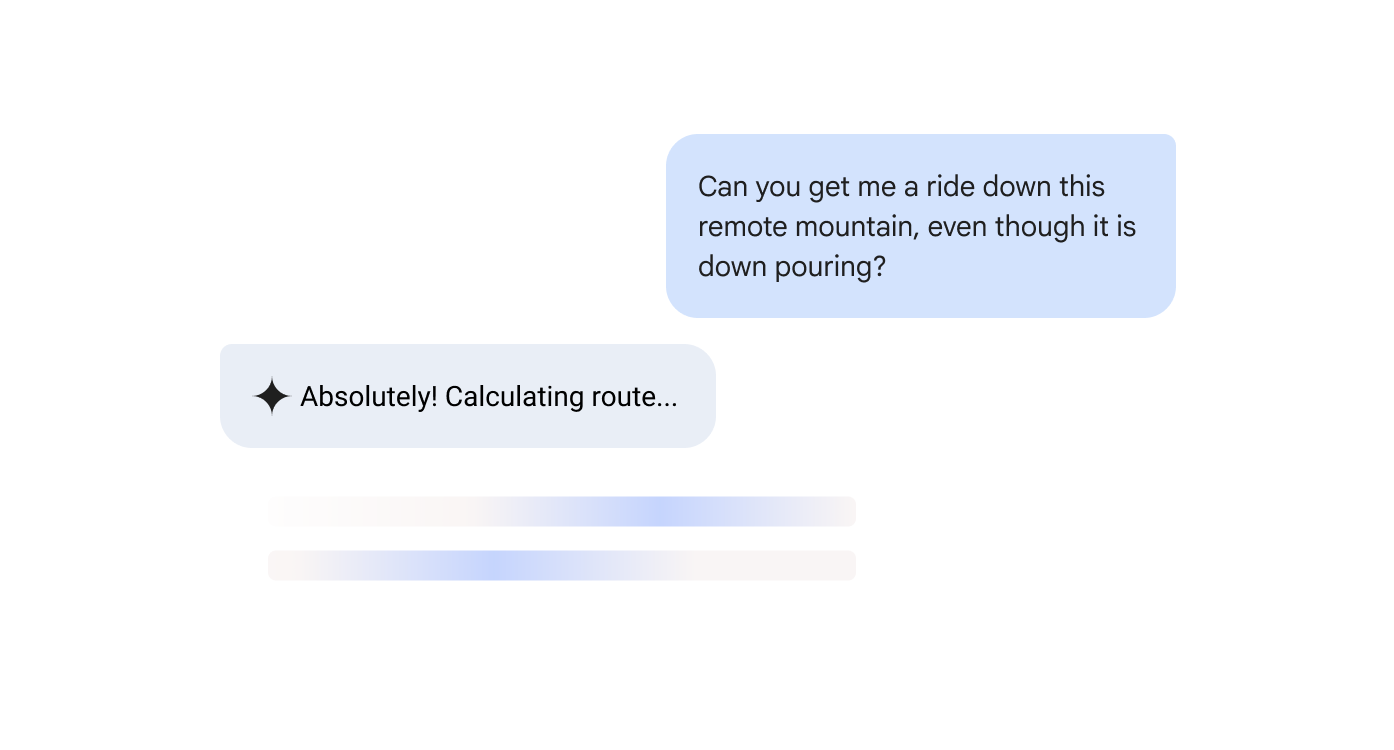

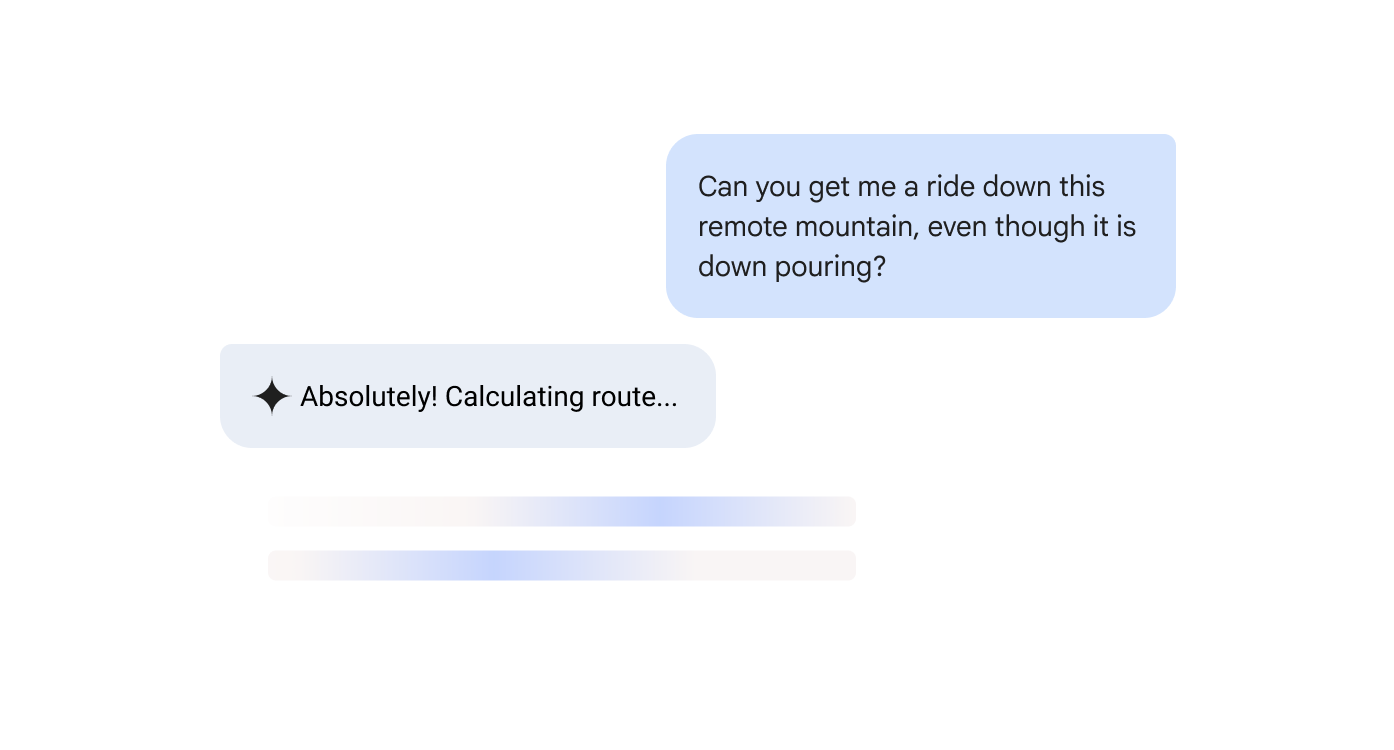

- Integrated end-to-end journeys: From product discovery to checkout, the search agents accompany the end user along their entire shopping journey with immersive, dynamic, and continuous conversation.

- Adapted to commerce use cases: Conversational Commerce agent covers ecommerce, product discovery, and support.

- Immersive user experiences: Because search agents maintain the thread of a user's conversation, merchants can also add augmented and virtual reality experiences to their sites, such as virtual try-ons, store tours, and spatial product visualization.

Impact of Conversational Commerce agent

As a central part of the guided search package, Conversational Commerce agent improves search result relevance and reduces user friction.

Traditional commerce search is the most common way to find products. Traditional approaches rely on rigid keyword matching, which requires your users to use specific words and manually adjust filters to refine results. However, only 1 in 10 consumers say that they find exactly what they're looking for when using legacy keyword-based search.

Conversational Commerce agent addresses the most frustrating problems users face when searching, such as inexact matches, irrelevant results, and zero-result queries.

Natural language understanding

AI-powered search recognizes full-phrase queries, interprets intent and accounts for variation in language.

Predictive assistance

Helps users formulate queries more effectively by suggesting completions as they type.

Multimodal inputs

Conversational Commerce agent enables users to search with multimodal input methods such as voice and image, in addition to text. It understands user intent and natural language variations in phrasing while preserving the context of the conversation.

- Voice search: Spoken queries are often structured differently than typed ones. Vertex AI Search for commerce processes these variations while accounting for variables like accents, background noise, and filler words such as "um," "uh," and "like." On mobile, voice search is not only easier to input, but it also takes up less screen space, leaving more room for product visuals.

- Image search: Image recognition makes it faster to find an item that a shopper spotted in a social media post or photographed in real life. Shoppers can then use image search to quickly locate a similar item on your site.

Mobile-first experience

Nearly 80% of all ecommerce visits worldwide occurred on a mobile phone in 2024. Smaller screens, shorter user sessions, and cluttered menus create unique challenges for legacy search experiences. Conversational Commerce agent is designed to give users the full power of AI-driven search on their mobile devices.

The role of external search and AI assistants

When users have a preferred retailer, they tend to go directly to it. However, when exploring new or broader options, the customer journey is more likely to start at a broader discovery destination, such as Google Search or an AI assistant.

As AI-driven search evolves, the Vertex AI Search for commerce guided search package bridges the gap between external and on-site product discovery. By treating the transition to the commerce site as a single, fluid conversation, Conversational Commerce agent ensures users find relevant results quickly.