This page shows you how to create a Dataproc cluster that uses the Spark Spanner connector to read data from Spanner using Apache Spark

The Spanner connector works with Spark to read data from the Spanner database using the Spanner Java library. The Spanner connector supports reading Spanner tables and graphs into Spark DataFrames and GraphFrames.

Costs

In this document, you use the following billable components of Google Cloud:

- Dataproc

- Spanner

- Cloud Storage

To generate a cost estimate based on your projected usage,

use the pricing calculator.

Before you begin

Before using the Spanner connector in this tutorial, set up a Dataproc cluster and a Spanner instance and database.

Set up a Dataproc cluster

Create a Dataproc cluster or use an existing Dataproc cluster that has the following settings:

VM service account permissions. The cluster VM service account must be assigned the appropriate Spanner permissions. If you use Data Boost (Data Boost is enabled in the example code in Export Spanner tables), the VM service account must also have the required Data Boost IAM permissions.

Access scope. The cluster must be created with

cloud-platformscope or the appropriatespannerscope enabled.cloud-platformscope is enabled by default for clusters created with image version 2.1 or higher.The following instructions show you how to set

cloud-platformscope as part of a a cluster creation request that uses the Google Cloud console, gcloud CLI, or Dataproc API. For additional cluster creation instructions, see Create a cluster.Google Cloud console

- In the Google Cloud console, open the Dataproc Create a cluster page.

- On the Manage security panel in the Project access section, click "Enables the cloud-platform scope for this cluster".

- Fill in or confirm the other cluster creation fields. then click Create.

gcloud CLI

You can run the following

gcloud dataproc clusters createcommand to create a cluster with thecloud-platformscope enabled.gcloud dataproc clusters create CLUSTER_NAME --scopes https://www.googleapis.com/auth/cloud-platform

API

You can specify the GceClusterConfig.serviceAccountScopes as part of a clusters.create request.

"serviceAccountScopes": "https://www.googleapis.com/auth/cloud-platform"

Set up a Spanner instance with a Singers database table

Create a Spanner instance

with a database that contains a Singers table. Note the Spanner

instance ID and database ID.

Use the Spanner connector with Spark

The Spanner connector is available for Spark versions 3.1+.

You specify the

connector version as part of the Cloud Storage

connector JAR file specification when you

submit a job to

a Dataproc cluster.

Example: gcloud CLI Spark job submission with the Spanner connector.

gcloud dataproc jobs submit spark \ --jars=gs://spark-lib/spanner/spark-3.1-spanner-CONNECTOR_VERSION.jar \ ... [other job submission flags]

Replace the following:

CONNECTOR_VERSION: Spanner connector version.

Choose the Spanner connector version from the version list in the GitHub

GoogleCloudDataproc/spark-spanner-connector repository.

Read Spanner tables

You can use Python or Scala to read Spanner table data into a Spark Dataframe using the Spark data source API.

PySpark

You can run the example PySpark code in this section on your cluster by submitting the job to the

Dataproc service or by running the job from the spark-submit REPL

on the cluster master node.

Dataproc job

- Create a

singers.pyfile in using a local text editor or in Cloud Shell using the pre-installedvi,vim, ornanotext editor. - After populating the placehoder variables, paste the following code

into the

singers.pyfile. Note that the Spanner Data Boost feature is enabled, which has near-zero impact on the main Spanner instance.#!/usr/bin/env python """Spanner PySpark read example.""" from pyspark.sql import SparkSession spark = SparkSession \ .builder \ .master('yarn') \ .appName('spark-spanner-demo') \ .getOrCreate() # Load data from Spanner. singers = spark.read.format('cloud-spanner') \ .option("projectId", "PROJECT_ID") \ .option("instanceId", "INSTANCE_ID") \ .option("databaseId", "DATABASE_ID") \ .option("table", "TABLE_NAME") \ .option("enableDataBoost", "true") \ .load() singers.createOrReplaceTempView('Singers') # Read from Singers result = spark.sql('SELECT * FROM Singers') result.show() result.printSchema()

Replace the following:

- PROJECT_ID: Your Google Cloud project ID. Project IDs are listed in the Project info section on the Google Cloud console Dashboard.

- INSTANCE_ID, DATABASE_ID, and TABLE_NAME : See

Set up a Spanner instance with

Singersdatabase table.

- Save the

singers.pyfile. - Submit the job

to the Dataproc service using the Google Cloud console, gcloud CLI or

Dataproc API.

Example: gcloud CLI job submission with the Spanner connector.

gcloud dataproc jobs submit pyspark singers.py \ --cluster=CLUSTER_NAME \ --region=REGION \ --jars=gs://spark-lib/spanner/spark-3.1-spanner-CONNECTOR_VERSION.jarReplace the following:

- CLUSTER_NAME: The name of the new cluster.

- REGION: An available Compute Engine region to run the workload.

- CONNECTOR_VERSION: Spanner connector version.

Choose the Spanner connector version from the version list in the GitHub

GoogleCloudDataproc/spark-spanner-connectorrepository.

spark-submit job

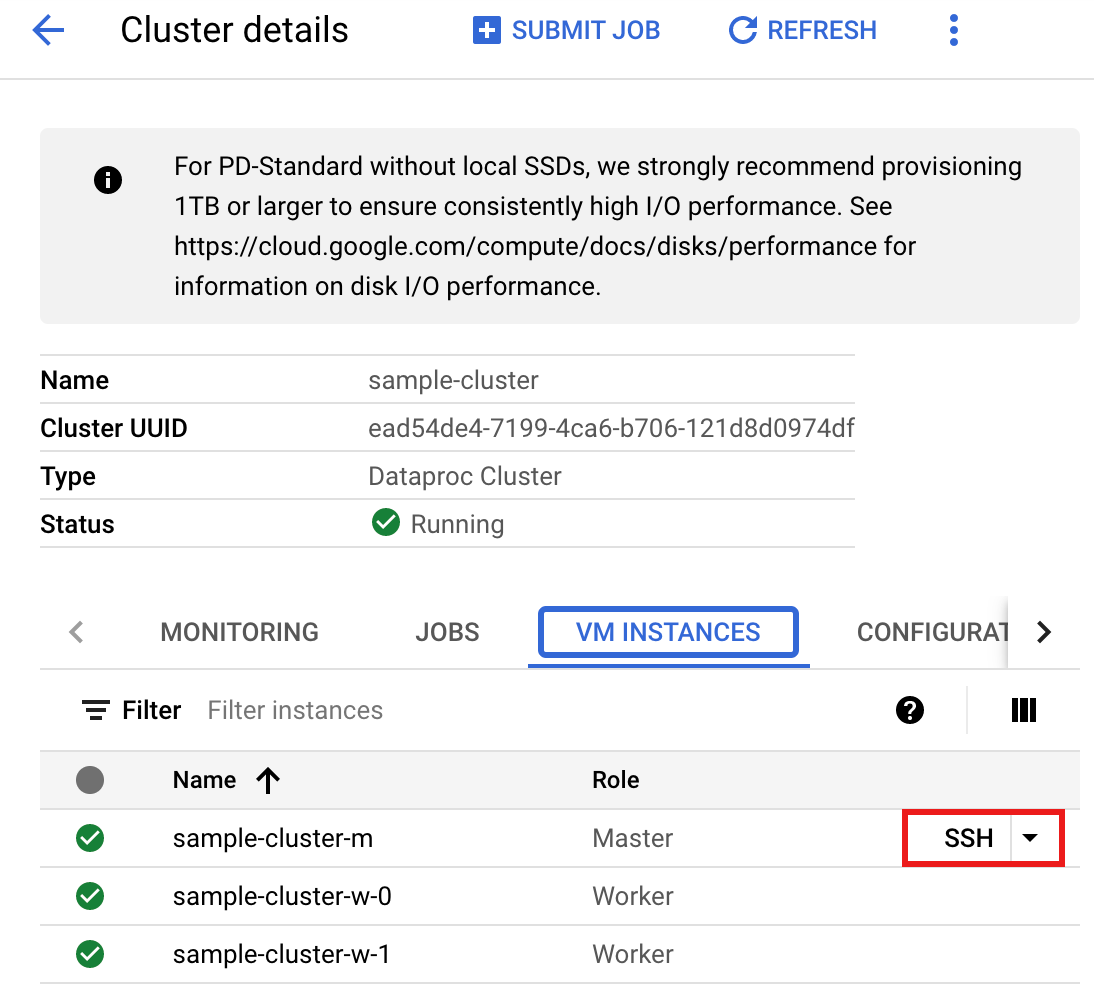

- Connect to the Dataproc cluster master node using SSH.

- Go to the Dataproc Clusters page in the Google Cloud console, then click the name of your cluster.

- On the Cluster details page, select the VM Instances tab. Then click

SSHto the right of the name of the cluster master node.

A browser window opens at your home directory on the master node.

Connected, host fingerprint: ssh-rsa 2048 ... ... user@clusterName-m:~$

- Create a

singers.pyfile on the master node using the pre-installedvi,vim, ornanotext editor.- Paste the following code into the

singers.pyfile. Note that the Spanner Data Boost feature is enabled, which has near-zero impact on the main Spanner instance.#!/usr/bin/env python """Spanner PySpark read example.""" from pyspark.sql import SparkSession spark = SparkSession \ .builder \ .master('yarn') \ .appName('spark-spanner-demo') \ .getOrCreate() # Load data from Spanner. singers = spark.read.format('cloud-spanner') \ .option("projectId", "PROJECT_ID") \ .option("instanceId", "INSTANCE_ID") \ .option("databaseId", "DATABASE_ID") \ .option("table", "TABLE_NAME") \ .option("enableDataBoost", "true") \ .load() singers.createOrReplaceTempView('Singers') # Read from Singers result = spark.sql('SELECT * FROM Singers') result.show() result.printSchema()

Replace the following:

- PROJECT_ID: Your Google Cloud project ID. Project IDs are listed in the Project info section on the Google Cloud console Dashboard.

- INSTANCE_ID, DATABASE_ID, and TABLE_NAME : See

Set up a Spanner instance with

Singersdatabase table.

- Save the

singers.pyfile.

- Paste the following code into the

- Run

singers.pywithspark-submitto create the SpannerSingerstable.spark-submit --jars gs://spark-lib/spanner/spark-3.1-spanner-CONNECTOR_VERSION.jar singers.py

Replace the following:

- CONNECTOR_VERSION: Spanner connector version.

Choose the Spanner connector version from the version list in the GitHub

GoogleCloudDataproc/spark-spanner-connectorrepository.

The output is:

... +--------+---------+--------+---------+-----------+ |SingerId|FirstName|LastName|BirthDate|LastUpdated| +--------+---------+--------+---------+-----------+ | 1| Marc|Richards| null| null| | 2| Catalina| Smith| null| null| | 3| Alice| Trentor| null| null| +--------+---------+--------+---------+-----------+ root |-- SingerId: long (nullable = false) |-- FirstName: string (nullable = true) |-- LastName: string (nullable = true) |-- BirthDate: date (nullable = true) |-- LastUpdated: timestamp (nullable = true) only showing top 20 rows

- CONNECTOR_VERSION: Spanner connector version.

Choose the Spanner connector version from the version list in the GitHub

Scala

To run the example Scala code on your cluster, complete the following steps:

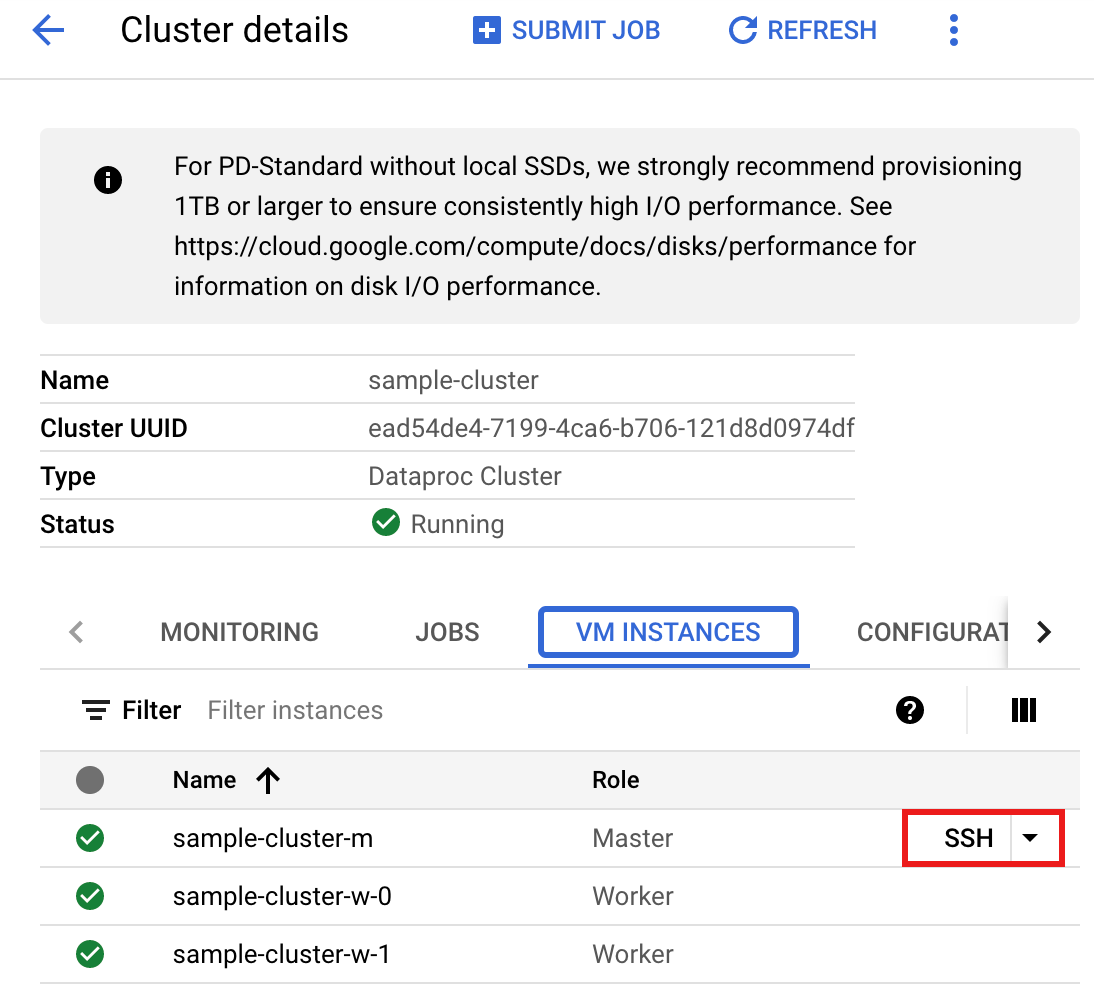

- Connect to the Dataproc cluster master node using SSH.

- Go to the Dataproc Clusters page in the Google Cloud console, then click the name of your cluster.

- On the Cluster details page, select the VM Instances tab. Then click

SSHto the right of the name of the cluster master node.

A browser window opens at your home directory on the master node.

Connected, host fingerprint: ssh-rsa 2048 ... ... user@clusterName-m:~$

- Create a

singers.scalafile on the master node using the pre-installedvi,vim, ornanotext editor.- Paste the following code into the

singers.scalafile. Note that the Spanner Data Boost feature is enabled, which has near-zero impact on the main Spanner instance.object singers { def main(): Unit = { /* * Uncomment (use the following code) if you are not running in spark-shell. * import org.apache.spark.sql.SparkSession val spark = SparkSession.builder() .appName("spark-spanner-demo") .getOrCreate() */ // Load data in from Spanner. See // https://github.com/GoogleCloudDataproc/spark-spanner-connector/blob/main/README.md#properties // for option information. val singersDF = (spark.read.format("cloud-spanner") .option("projectId", "PROJECT_ID") .option("instanceId", "INSTANCE_ID") .option("databaseId", "DATABASE_ID") .option("table", "TABLE_NAME") .option("enableDataBoost", true) .load() .cache()) singersDF.createOrReplaceTempView("Singers") // Load the Singers table. val result = spark.sql("SELECT * FROM Singers") result.show() result.printSchema() } }

Replace the following:

- PROJECT_ID: Your Google Cloud project ID. Project IDs are listed in the Project info section on the Google Cloud console Dashboard.

- INSTANCE_ID, DATABASE_ID, and TABLE_NAME : See

Set up a Spanner instance with

Singersdatabase table.

- Save the

singers.scalafile.

- Paste the following code into the

- Launch the

spark-shellREPL.$ spark-shell --jars=gs://spark-lib/spanner/spark-3.1-spanner-CONNECTOR_VERSION.jar

Replace the following:

CONNECTOR_VERSION: Spanner connector version. Choose the Spanner connector version from the version list in the GitHub

GoogleCloudDataproc/spark-spanner-connectorrepository. - Run

singers.scalawith the:load singers.scalacommand to create the SpannerSingerstable. The output listing displays examplesfrom the Singers output.> :load singers.scala Loading singers.scala... defined object singers > singers.main() ... +--------+---------+--------+---------+-----------+ |SingerId|FirstName|LastName|BirthDate|LastUpdated| +--------+---------+--------+---------+-----------+ | 1| Marc|Richards| null| null| | 2| Catalina| Smith| null| null| | 3| Alice| Trentor| null| null| +--------+---------+--------+---------+-----------+ root |-- SingerId: long (nullable = false) |-- FirstName: string (nullable = true) |-- LastName: string (nullable = true) |-- BirthDate: date (nullable = true) |-- LastUpdated: timestamp (nullable = true)

Read Spanner graphs

The Spanner connector supports exporting the graph into separate

node and edge DataFrames

as well as exporting into

GraphFrames

directly.

The following example exports a Spanner into a GraphFrame.

It uses the Python SpannerGraphConnectorclass,

included in the Spanner connector jar, to read the

Spanner Graph.

from pyspark.sql import SparkSession connector_jar = "gs://spark-lib/spanner/spark-3.1-spanner-CONNECTOR_VERSION.jar" spark = (SparkSession.builder.appName("spanner-graphframe-graphx-example") .config("spark.jars.packages", "graphframes:graphframes:0.8.4-spark3.5-s_2.12") .config("spark.jars", connector_jar) .getOrCreate()) spark.sparkContext.addPyFile(connector_jar) from spannergraph import SpannerGraphConnector connector = (SpannerGraphConnector() .spark(spark) .project("PROJECT_ID") .instance("INSTANCE_ID") .database("DATABASE_ID") .graph("GRAPH_ID")) g = connector.load_graph() g.vertices.show() g.edges.show()

Replace the following:

- CONNECTOR_VERSION: Spanner connector version.

Choose the Spanner connector version from the version list in the GitHub

GoogleCloudDataproc/spark-spanner-connectorrepository. - PROJECT_ID: Your Google Cloud project ID. Project IDs are listed in the Project info section on the Google Cloud console Dashboard.

- INSTANCE_ID, DATABASE_ID, and TABLE_NAME Insert the instance, database, and graph IDs.

To export node and edge DataFrames instead of GraphFrames, use

load_dfs instead:

df_vertices, df_edges, df_id_map = connector.load_dfs()

Clean up

To avoid incurring ongoing charges to your Google Cloud account, you can stop or delete your Dataproc cluster and delete your Spanner instance.

What's next

- Refer to the

pyspark.sql.DataFrameexamples. - For Spark DataFrame language support, see the following:

- Refer to the Spark Spanner Connector repository on GitHub.

- See the Spark job tuning tips.