In this document, you use the following billable components of Google Cloud:

- Dataproc

- Compute Engine

- Cloud Scheduler

To generate a cost estimate based on your projected usage, use the pricing calculator.

Before you begin

Set up your project

- Sign in to your Google Cloud account. If you're new to Google Cloud, create an account to evaluate how our products perform in real-world scenarios. New customers also get $300 in free credits to run, test, and deploy workloads.

- In the Google Cloud console, on the project selector page, select or create a Google Cloud project.

  Roles required to select or create a project

  - Select a project: Selecting a project doesn't require a specific IAM role. You can select any project that you've been granted a role on.

  - Create a project: To create a project, you need the Project Creator role (roles/resourcemanager.projectCreator), which contains the resourcemanager.projects.create permission. Learn how to grant roles.

- Verify that billing is enabled for your Google Cloud project.

- Enable the Dataproc, Compute Engine, and Cloud Scheduler APIs.

  Roles required to enable APIs

  To enable APIs, you need the Service Usage Admin IAM role (roles/serviceusage.serviceUsageAdmin), which contains the serviceusage.services.enable permission. Learn how to grant roles.

- Install the Google Cloud CLI.

- If you're using an external identity provider (IdP), you must first sign in to the gcloud CLI with your federated identity.

- To initialize the gcloud CLI, run the following command:

      gcloud init
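Once the gcloud CLI is initialized, the three APIs can also be enabled from the command line instead of the console. The following is a minimal sketch: the `enable_tutorial_apis` function name is hypothetical, and it assumes that the active gcloud project is the one you selected for this tutorial.

```shell
# Hypothetical helper: enables the three APIs used in this tutorial
# in the currently configured gcloud project.
enable_tutorial_apis() {
  gcloud services enable \
    dataproc.googleapis.com \
    compute.googleapis.com \
    cloudscheduler.googleapis.com
}
```

Run `enable_tutorial_apis` after defining the function. Enabling an API that is already enabled should be harmless.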

Create a custom role

- Open the IAM & Admin → Roles page in the Google Cloud console.

- Click CREATE ROLE to open the Create Role page.

- Complete the Title, Description, ID, and Launch stage fields. Suggestion: Use "Dataproc Workflow Template Create" as the role title.

- Click ADD PERMISSIONS.

  - In the Add permissions form, click Filter, then select "Permission". Complete the filter to read "Permission: dataproc.workflowTemplates.instantiate".

  - Click the checkbox to the left of the listed permission, then click Add.

- On the Create Role page, click ADD PERMISSIONS again and repeat the previous sub-steps to add the "iam.serviceAccounts.actAs" permission to the custom role. The Create Role page now lists two permissions.

- Click CREATE on the Custom Role page.

The custom role is listed on the Roles page.
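The console steps above can probably be reproduced with the gcloud CLI. The sketch below is an illustration, not part of the original procedure: the role ID `dataprocWorkflowTemplateCreate`, the description text, the GA launch stage, and the `create_workflow_role` function name are all assumptions, and `your-project-id` is a placeholder.

```shell
# Placeholder project ID; export PROJECT_ID beforehand to override it.
PROJECT_ID="${PROJECT_ID:-your-project-id}"
# Assumed role ID; the console lets you choose any valid ID.
ROLE_ID="dataprocWorkflowTemplateCreate"

# Hypothetical helper: creates a project-level custom role that holds
# the two permissions listed in the steps above.
create_workflow_role() {
  gcloud iam roles create "$ROLE_ID" \
    --project="$PROJECT_ID" \
    --title="Dataproc Workflow Template Create" \
    --description="Instantiate Dataproc workflow templates" \
    --permissions="dataproc.workflowTemplates.instantiate,iam.serviceAccounts.actAs" \
    --stage=GA
}
```

Call `create_workflow_role` to create the role. The title matches the suggestion in the steps above so that later steps can find the role by name.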

Create a service account

In the Google Cloud console, go to the Service Accounts page.

Select your project.

Click Create Service Account.

In the Service account name field, enter the name workflow-scheduler. The Google Cloud console fills in the Service account ID field based on this name.

Optional: In the Service account description field, enter a description for the service account.

Click Create and continue.

Click the Select a role field and choose the Dataproc Workflow Template Create custom role that you created in the previous step.

Click Continue.

In the Service account admins role field, enter your Google account email address.

Click Done to finish creating the service account.
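For readers who prefer the command line, a rough gcloud equivalent of the service account steps is sketched here. It assumes the custom role was created at the project level with the hypothetical ID `dataprocWorkflowTemplateCreate`. The function name is illustrative, `your-project-id` is a placeholder, and the sketch omits the optional step of granting your own account access to the service account.

```shell
# Placeholder project ID; export PROJECT_ID beforehand to override it.
PROJECT_ID="${PROJECT_ID:-your-project-id}"
SA_NAME="workflow-scheduler"
SA_EMAIL="${SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"
# Assumed custom role ID; adjust to the ID you chose for the role.
ROLE="projects/${PROJECT_ID}/roles/dataprocWorkflowTemplateCreate"

# Hypothetical helper: creates the service account, then grants it
# the custom role on the project.
create_scheduler_service_account() {
  gcloud iam service-accounts create "$SA_NAME" \
    --project="$PROJECT_ID" \
    --display-name="$SA_NAME" &&
  gcloud projects add-iam-policy-binding "$PROJECT_ID" \
    --member="serviceAccount:${SA_EMAIL}" \
    --role="$ROLE"
}
```

Call `create_scheduler_service_account` to run both commands. The role binding is attempted only if the account is created successfully.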

Create a workflow template

Copy and run the commands listed below in a local terminal window or in Cloud Shell to create and define a workflow template.

Notes:

- The commands specify the "us-central1" region. You can specify a different region or delete the --region flag if you have previously run gcloud config set dataproc/region to set the region property.

- The "-- " (dash dash space) sequence in the add-job command passes the 1000 argument to the SparkPi job, which specifies the number of samples to use to estimate the value of Pi.

- Create the workflow template.

      gcloud dataproc workflow-templates create sparkpi \
          --region=us-central1

- Add the Spark job to the sparkpi workflow template. The "compute" step ID is required, and identifies the added SparkPi job.

      gcloud dataproc workflow-templates add-job spark \
          --workflow-template=sparkpi \
          --step-id=compute \
          --class=org.apache.spark.examples.SparkPi \
          --jars=file:///usr/lib/spark/examples/jars/spark-examples.jar \
          --region=us-central1 \
          -- 1000

- Use a managed, single-node cluster to run the workflow. Dataproc will create the cluster, run the workflow on it, then delete the cluster when the workflow completes.

      gcloud dataproc workflow-templates set-managed-cluster sparkpi \
          --cluster-name=sparkpi \
          --single-node \
          --region=us-central1

- Click the sparkpi name on the Dataproc Workflows page in the Google Cloud console to open the Workflow template details page. Confirm the sparkpi template attributes.
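The template attributes can also be inspected from the terminal. The sketch below is one way to do this; the `describe_sparkpi_template` function name is hypothetical, and the region defaults to the "us-central1" value used in the commands above.

```shell
# Region used when the template was created; override if you chose another.
REGION="${REGION:-us-central1}"

# Hypothetical helper: prints the sparkpi template definition, including
# the "compute" job step and the managed cluster settings.
describe_sparkpi_template() {
  gcloud dataproc workflow-templates describe sparkpi --region="$REGION"
}
```

Call `describe_sparkpi_template` and check that the output lists the SparkPi job and the managed cluster.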

Create a Cloud Scheduler job

Open the Cloud Scheduler page in the Google Cloud console (you may need to select your project to open the page). Click CREATE JOB.

Enter or select the following job information:

- Select a region: "us-central1" or other region where you created your workflow template.

- Name: "sparkpi"

- Frequency: "* * * * *" selects every minute; "0 9 * * 1" selects every Monday at 9 AM. See Defining the Job Schedule for other unix-cron values. Note: Regardless of the frequency you set, you can click the RUN NOW button on the Cloud Scheduler Jobs page in the Google Cloud console to run and test your job.

- Timezone: Select your timezone. Type "United States" to list U.S. timezones.

- Target: "HTTP"

- URL: Insert the following URL after inserting your-project-id. Replace "us-central1" if you created your workflow template in a different region. This URL will call the Dataproc workflowTemplates.instantiate API to run your sparkpi workflow template.

      https://dataproc.googleapis.com/v1/projects/your-project-id/regions/us-central1/workflowTemplates/sparkpi:instantiate?alt=json

- HTTP method: "POST"

- Body: "{}"

- Auth header: "Add OAuth token"

- Service account: Insert the service account address of the service account that you created for this tutorial. You can use the following account address after inserting your-project-id:

      workflow-scheduler@your-project-id.iam.gserviceaccount.com

- Scope: You can ignore this item.

- Click CREATE.
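The same job can likely be created with the gcloud CLI. The sketch below mirrors the field values listed above. The weekly schedule is one of the two example frequencies, the `Etc/UTC` time zone is an assumed default, `your-project-id` is a placeholder, and the `create_sparkpi_scheduler_job` function name is hypothetical.

```shell
# Placeholders and defaults; export these beforehand to override them.
PROJECT_ID="${PROJECT_ID:-your-project-id}"
REGION="${REGION:-us-central1}"
TIME_ZONE="${TIME_ZONE:-Etc/UTC}"   # assumed default; use your own time zone
SA_EMAIL="workflow-scheduler@${PROJECT_ID}.iam.gserviceaccount.com"

# URL of the Dataproc workflowTemplates.instantiate API for the sparkpi template.
INSTANTIATE_URL="https://dataproc.googleapis.com/v1/projects/${PROJECT_ID}/regions/${REGION}/workflowTemplates/sparkpi:instantiate?alt=json"

# Hypothetical helper: creates an HTTP-target job that POSTs an empty JSON
# body to the instantiate URL every Monday at 9 AM, authenticating with an
# OAuth token issued for the tutorial's service account.
create_sparkpi_scheduler_job() {
  gcloud scheduler jobs create http sparkpi \
    --location="$REGION" \
    --schedule="0 9 * * 1" \
    --time-zone="$TIME_ZONE" \
    --uri="$INSTANTIATE_URL" \
    --http-method=POST \
    --message-body="{}" \
    --oauth-service-account-email="$SA_EMAIL"
}
```

Call `create_sparkpi_scheduler_job` to create the job. The `--oauth-service-account-email` flag corresponds to the "Add OAuth token" auth header choice in the console.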

Test your scheduled workflow job

On the sparkpi job row on the Cloud Scheduler Jobs page, click RUN NOW.

Wait a few minutes, then open the Dataproc Workflows page to verify that the sparkpi workflow completed.

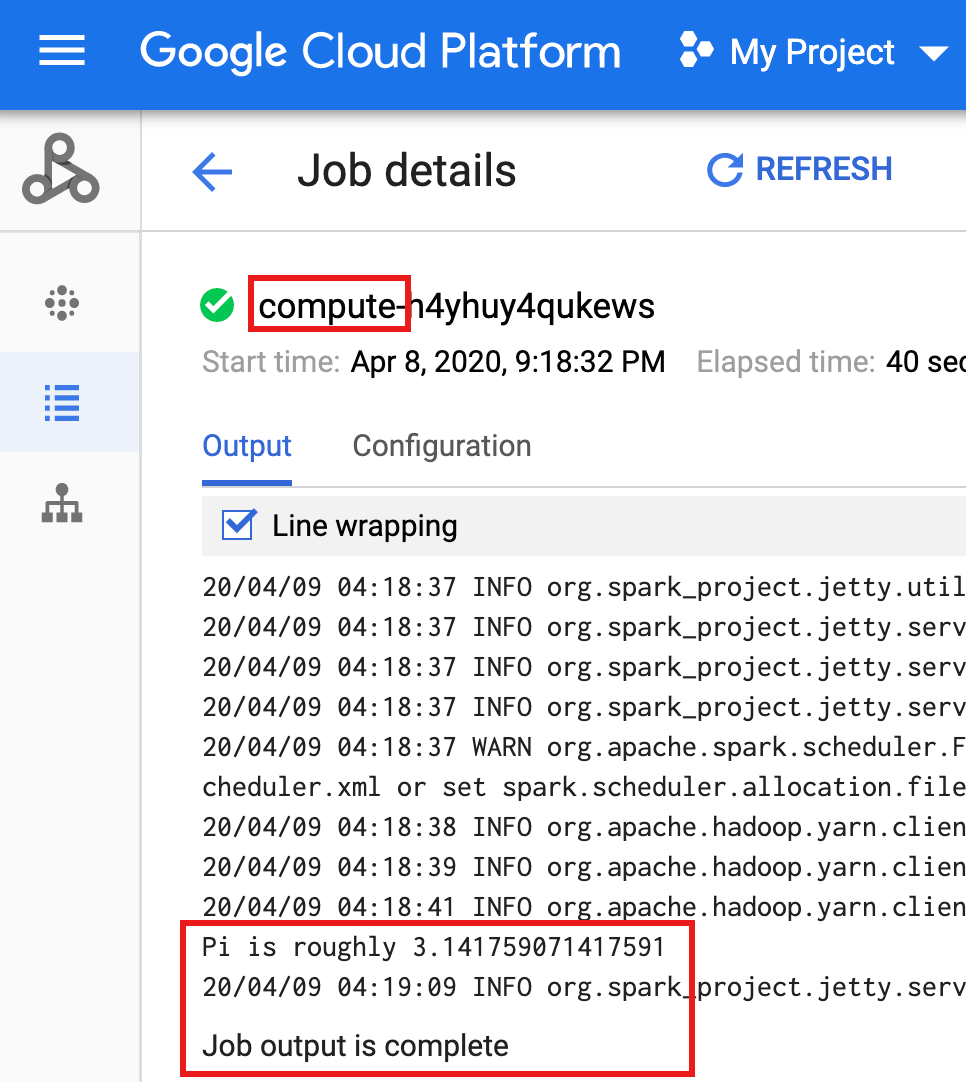

After the workflow deletes the managed cluster, job details persist in the Google Cloud console. Click the compute... job listed on the Dataproc Jobs page to view workflow job details.
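The test can also be driven from the terminal. This is a sketch under the assumption that the Cloud Scheduler job and the workflow template both live in the same region. Both function names are hypothetical.

```shell
# Region of the scheduler job and the workflow template; override if needed.
REGION="${REGION:-us-central1}"

# Hypothetical helper: triggers the scheduler job immediately,
# like the RUN NOW button in the console.
trigger_sparkpi_job() {
  gcloud scheduler jobs run sparkpi --location="$REGION"
}

# Hypothetical helper: lists Dataproc jobs in the region so that you can
# find the job started by the workflow's "compute" step.
list_dataproc_jobs() {
  gcloud dataproc jobs list --region="$REGION"
}
```

Call `trigger_sparkpi_job`, wait a few minutes for the managed cluster to start and the job to run, then call `list_dataproc_jobs`.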

Clean up

The workflow in this tutorial deletes its managed cluster when the workflow completes. Keeping the workflow lets you rerun the workflow and does not incur charges. You can delete other resources created in this tutorial to avoid recurring costs.

Delete a project

- In the Google Cloud console, go to the Manage resources page.

- In the project list, select the project that you want to delete, and then click Delete.

- In the dialog, type the project ID, and then click Shut down to delete the project.

Delete your workflow template

    gcloud dataproc workflow-templates delete sparkpi \
        --region=us-central1

Delete your Cloud Scheduler job

Open the Cloud Scheduler Jobs page in the Google Cloud console, select the box to the left of the sparkpi job, then click DELETE.

Delete your service account

Open the IAM & Admin → Service Accounts page in the Google Cloud console, select the box to the left of the workflow-scheduler... service account, then click DELETE.
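The Cloud Scheduler job and the service account can probably be deleted from the command line as well. The sketch below assumes the names and region used in this tutorial. `your-project-id` is a placeholder, the function name is hypothetical, and `--quiet` skips the interactive confirmation prompts.

```shell
# Placeholders and defaults; export these beforehand to override them.
PROJECT_ID="${PROJECT_ID:-your-project-id}"
REGION="${REGION:-us-central1}"
SA_EMAIL="workflow-scheduler@${PROJECT_ID}.iam.gserviceaccount.com"

# Hypothetical helper: deletes the scheduler job, then the service account.
# Both commands run even if the first one fails.
delete_scheduler_resources() {
  gcloud scheduler jobs delete sparkpi --location="$REGION" --quiet
  gcloud iam service-accounts delete "$SA_EMAIL" --project="$PROJECT_ID" --quiet
}
```

Call `delete_scheduler_resources` only after you have finished testing, because the deletions cannot be undone from this script.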