This document describes how to enable data lineage on Google Cloud Serverless for Apache Spark batch workloads and interactive sessions at the project, batch workload, or interactive session level.

Overview

Data lineage is a Dataplex Universal Catalog feature that lets you track how data moves through your systems: where it comes from, where it is passed to, and what transformations are applied to it.

Google Cloud Serverless for Apache Spark workloads and sessions capture lineage events and publish them to the Dataplex Universal Catalog Data Lineage API. Serverless for Apache Spark integrates with the Data Lineage API through OpenLineage, using the OpenLineage Spark plugin.

You can access lineage information through Dataplex Universal Catalog, using lineage graphs and the Data Lineage API. For more information, see View lineage graphs in Dataplex Universal Catalog.

Availability, capabilities, and limitations

Data lineage, which supports BigQuery and Cloud Storage data sources, is available for workloads and sessions that run with Serverless for Apache Spark runtime versions 1.1, 1.2, and 2.2, with the following exceptions and limitations:

- Data lineage is not available for SparkR or Spark streaming workloads or sessions.
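The availability rules above can be expressed as a small pre-submission check. This is a minimal sketch: the function name `lineage_supported` and the workload-type labels are hypothetical and not part of any Google Cloud API. The supported runtime versions are the ones listed above.

```python
# Runtime versions listed above as supporting data lineage.
SUPPORTED_RUNTIMES = {"1.1", "1.2", "2.2"}

# Workload types listed above as unsupported (labels are hypothetical).
UNSUPPORTED_TYPES = {"sparkr", "streaming"}


def lineage_supported(runtime_version: str, workload_type: str) -> bool:
    """Return True if the runtime/workload pair can emit lineage.

    Only the major.minor part of the version is compared, so "2.2.5"
    is treated as "2.2" (an assumption about version strings).
    """
    major_minor = ".".join(runtime_version.split(".")[:2])
    return (
        major_minor in SUPPORTED_RUNTIMES
        and workload_type.lower() not in UNSUPPORTED_TYPES
    )
```

A check like this might run before submission so that unsupported workloads are flagged early instead of silently producing no lineage.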

Before you begin

On the project selector page in the Google Cloud console, select the project to use for your Serverless for Apache Spark workloads or sessions.

Enable the Data Lineage API.

Required roles

If your batch workload uses the default Serverless for Apache Spark service account, it has the Dataproc Worker role, which enables data lineage. No additional action is necessary.

However, if your batch workload uses a custom service account, you must grant a required role to that service account to enable data lineage, as explained in the following paragraph.

To get the permissions that you need to use data lineage with Dataproc, ask your administrator to grant you the following IAM roles on your batch workload custom service account:

- Grant one of the following roles:
  - Dataproc Worker (roles/dataproc.worker)
  - Data Lineage Editor (roles/datalineage.editor)
  - Data Lineage Producer (roles/datalineage.producer)
  - Data Lineage Administrator (roles/datalineage.admin)

For more information about granting roles, see Manage access to projects, folders, and organizations.

You might also be able to get the required permissions through custom roles or other predefined roles.
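To verify that a custom service account holds one of the roles listed above, you can inspect an IAM policy exported as JSON. The following is a minimal sketch, not an official check: the helper `has_lineage_role` and the example service account are hypothetical, and the policy dict is assumed to follow the standard IAM `bindings` layout. It looks only at direct bindings in the given policy, so it does not detect inherited roles or custom roles that carry the same permissions.

```python
# Roles listed above, any one of which enables data lineage.
LINEAGE_ROLES = {
    "roles/dataproc.worker",
    "roles/datalineage.editor",
    "roles/datalineage.producer",
    "roles/datalineage.admin",
}


def has_lineage_role(policy: dict, service_account_email: str) -> bool:
    """Check an IAM policy dict for a lineage-enabling role binding."""
    member = f"serviceAccount:{service_account_email}"
    return any(
        binding.get("role") in LINEAGE_ROLES
        and member in binding.get("members", [])
        for binding in policy.get("bindings", [])
    )


# Hypothetical policy in the standard IAM JSON shape.
example_policy = {
    "bindings": [
        {
            "role": "roles/datalineage.producer",
            "members": [
                "serviceAccount:spark-sa@example-project.iam.gserviceaccount.com"
            ],
        }
    ]
}

print(has_lineage_role(
    example_policy, "spark-sa@example-project.iam.gserviceaccount.com"))
```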

Enable data lineage at the project level

You can enable data lineage at the project level. When enabled at the project level, all subsequent batch workloads and interactive sessions that you run in the project will have Spark lineage enabled.

How to enable data lineage at the project level

To enable data lineage at the project level, set the following custom project metadata.

| Key | Value |
|---|---|
| DATAPROC_LINEAGE_ENABLED | true |
| DATAPROC_CLUSTER_SCOPES | https://www.googleapis.com/auth/cloud-platform |

You can disable data lineage at the project level by setting the DATAPROC_LINEAGE_ENABLED metadata to false.
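One way to set these keys is with the `gcloud compute project-info add-metadata` command. The sketch below only assembles that command as an argument list and does not run it. It assumes that the custom project metadata described here is Compute Engine project metadata, which this page does not state explicitly. The helper name `add_metadata_command` is hypothetical.

```python
import shlex

# Metadata keys and values from the table above.
LINEAGE_METADATA = {
    "DATAPROC_LINEAGE_ENABLED": "true",
    "DATAPROC_CLUSTER_SCOPES": "https://www.googleapis.com/auth/cloud-platform",
}


def add_metadata_command(project_id: str, metadata: dict) -> list:
    """Build (but do not execute) a gcloud command that sets project metadata."""
    pairs = ",".join(f"{key}={value}" for key, value in metadata.items())
    return [
        "gcloud", "compute", "project-info", "add-metadata",
        f"--project={project_id}",
        f"--metadata={pairs}",
    ]


# PROJECT_ID is a placeholder; replace it with your project ID.
cmd = add_metadata_command("PROJECT_ID", LINEAGE_METADATA)
print(shlex.join(cmd))
```

Building the argument list separately makes it easy to review the exact command before passing it to `subprocess.run`.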

Enable data lineage for a Spark batch workload

You can enable data lineage on a batch workload by setting the spark.dataproc.lineage.enabled property to true when you submit the workload.

Batch workload example

This example submits lineage-example.py as a batch workload with Spark lineage enabled.

gcloud dataproc batches submit pyspark lineage-example.py \
    --region=REGION \
    --deps-bucket=gs://BUCKET \
    --properties=spark.dataproc.lineage.enabled=true

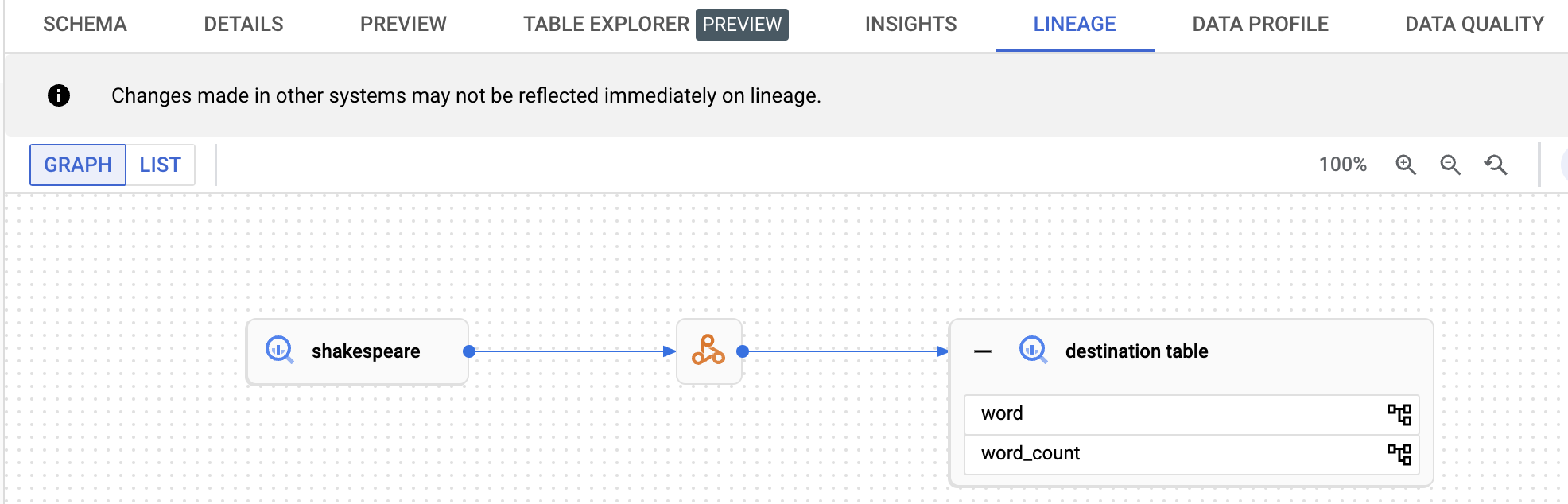

lineage-example.py reads data from a public BigQuery table, and then writes the output to a new table in an existing BigQuery dataset. It uses a Cloud Storage bucket for temporary storage.

#!/usr/bin/env python

from pyspark.sql import SparkSession

spark = SparkSession \
    .builder \
    .appName('LINEAGE_BQ_TO_BQ') \
    .getOrCreate()

# Read the public Shakespeare sample table from BigQuery.
source = 'bigquery-public-data:samples.shakespeare'
words = spark.read.format('bigquery') \
    .option('table', source) \
    .load()
words.createOrReplaceTempView('words')

# Aggregate the word counts across all works.
word_count = spark.sql('SELECT word, SUM(word_count) AS word_count FROM words GROUP BY word')

# Write the result to a new table in an existing dataset.
destination_table = 'PROJECT_ID:DATASET.TABLE'
word_count.write.format('bigquery') \
    .option('table', destination_table) \
    .option('writeMethod', 'direct') \
    .save()

Make the following replacements:

- REGION: Select a region to run your workload.
- BUCKET: The name of an existing Cloud Storage bucket to store dependencies.
- PROJECT_ID, DATASET, and TABLE: Insert your project ID, the name of an existing BigQuery dataset, and the name of a new table to create in the dataset (the table must not exist).

You can view the lineage graph in the Dataplex Universal Catalog UI.
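If you submit batches from a script, the submit command shown earlier in this section can be assembled programmatically so that the lineage property is always present alongside any other Spark properties. This is a minimal sketch: the helper `batch_submit_command` is hypothetical, it only builds the argument list without running it, and it uses the same flags as the example command.

```python
def batch_submit_command(script, region, deps_bucket, properties=None):
    """Build a `gcloud dataproc batches submit pyspark` argument list.

    The lineage property is forced to "true" even if the caller passes
    a different value for it.
    """
    props = dict(properties or {})
    props["spark.dataproc.lineage.enabled"] = "true"
    # gcloud expects comma-separated key=value pairs; sort for stable output.
    props_flag = ",".join(f"{k}={v}" for k, v in sorted(props.items()))
    return [
        "gcloud", "dataproc", "batches", "submit", "pyspark", script,
        f"--region={region}",
        f"--deps-bucket=gs://{deps_bucket}",
        f"--properties={props_flag}",
    ]


# REGION and BUCKET are placeholders, as in the example above.
cmd = batch_submit_command(
    "lineage-example.py", "REGION", "BUCKET",
    properties={"spark.executor.cores": "4"},
)
print(" ".join(cmd))
```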

Enable data lineage for a Spark interactive session

You can enable data lineage on a Spark interactive session by setting the spark.dataproc.lineage.enabled property to true when you create the session or session template.

Interactive session example

The following PySpark notebook code configures a Serverless for Apache Spark interactive session with Spark data lineage enabled. It then creates a Spark Connect session that runs a word count query on a public BigQuery Shakespeare dataset, and then writes the output to a new table in an existing BigQuery dataset.

# Configure the Dataproc Serverless interactive session
# to enable Spark data lineage.
from google.cloud.dataproc_v1 import Session

session = Session()
session.runtime_config.properties["spark.dataproc.lineage.enabled"] = "true"

# Create the Spark Connect session.
from google.cloud.dataproc_spark_connect import DataprocSparkSession

spark = DataprocSparkSession.builder.dataprocSessionConfig(session).getOrCreate()

# Run a wordcount query on the public BigQuery Shakespeare dataset.
source = "bigquery-public-data:samples.shakespeare"
words = spark.read.format("bigquery").option("table", source).load()
words.createOrReplaceTempView('words')
word_count = spark.sql(
    'SELECT word, SUM(word_count) AS word_count FROM words GROUP BY word')

# Output the results to a BigQuery destination table.
destination_table = 'PROJECT_ID:DATASET.TABLE'
word_count.write.format('bigquery') \
    .option('table', destination_table) \
    .save()

Make the following replacements:

- PROJECT_ID, DATASET, and TABLE: Insert your project ID, the name of an existing BigQuery dataset, and the name of a new table to create in the dataset (the table must not exist).

You can view the data lineage graph by clicking the destination table name in the navigation pane on the BigQuery Explorer page, and then selecting the Lineage tab in the table details pane.

View lineage in Dataplex Universal Catalog

A lineage graph displays relationships between your project resources and the processes that created them. You can view data lineage information in the Google Cloud console or retrieve the information from the Data Lineage API as JSON data.
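To retrieve lineage as JSON, you can send a request to the Data Lineage API. The sketch below only builds the URL and request body for a link search on a BigQuery table and does not send anything. It assumes the `searchLinks` REST method and the `bigquery:PROJECT.DATASET.TABLE` fully qualified name format, so verify both against the Data Lineage API reference. The helper name `search_links_request` and the location value `us` are hypothetical.

```python
import json


def search_links_request(project_id, location, dataset, table):
    """Build the URL and JSON body for a lineage link search (assumed API shape)."""
    url = (
        "https://datalineage.googleapis.com/v1/"
        f"projects/{project_id}/locations/{location}:searchLinks"
    )
    # Search for links whose target is the destination table.
    body = {
        "target": {
            "fullyQualifiedName": f"bigquery:{project_id}.{dataset}.{table}"
        }
    }
    return url, json.dumps(body)


# PROJECT_ID, DATASET, and TABLE are placeholders, as in the examples above.
url, body = search_links_request("PROJECT_ID", "us", "DATASET", "TABLE")
print(url)
print(body)
```

Sending the request requires an OAuth 2.0 access token for an account with permission to view lineage, which is outside the scope of this sketch.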

What's next

- Learn more about data lineage.