
This page covers only issues related to DAG file processing. For issues with scheduling tasks, see Troubleshooting Airflow scheduler issues.

Troubleshooting Workflow

Inspect DAG processor logs

If you have complex DAGs, then Airflow DAG processors might not parse all your DAGs. This might lead to many issues that have the following symptoms.

Symptoms:

If a DAG processor encounters problems when parsing your DAGs, then it might lead to a combination of the listed issues. If DAGs are generated dynamically, these issues might be more impactful compared to static DAGs.

- DAGs are not visible in Airflow UI and DAG UI.

- DAGs are not scheduled for execution.

- There are errors in the DAG processor logs, for example:

  dag-processor-manager [2023-04-21 21:10:44,510] {manager.py:1144} ERROR - Processor for /home/airflow/gcs/dags/dag-example.py with PID 68311 started at 2023-04-21T21:09:53.772793+00:00 has timed out, killing it.

  or

  dag-processor-manager [2023-04-26 06:18:34,860] {manager.py:948} ERROR - Processor for /home/airflow/gcs/dags/dag-example.py exited with return code 1.

- DAG processors experience issues which lead to Airflow scheduler restarts.

- Airflow tasks that are scheduled for execution are cancelled and DAG runs for DAGs that failed to be parsed might be marked as failed. For example:

  airflow-scheduler Failed to get task '<TaskInstance: dag-example.task1--1 manual__2023-04-17T10:02:03.137439+00:00 [removed]>' for dag 'dag-example'. Marking it as removed.

Solution:

- Increase parameters related to DAG parsing:

  - Increase [core]dagbag_import_timeout to at least 120 seconds (or more, if required).

  - Increase [core]dag_file_processor_timeout to at least 180 seconds (or more, if required). This value must be higher than [core]dagbag_import_timeout.

- Correct or remove DAGs that cause problems to DAG processors.
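The constraint between the two timeouts is easy to get wrong when the values are changed separately. The following is a minimal sketch, not an official tool: the helper name build_timeout_overrides is hypothetical, and it assumes the overrides are applied with the --update-airflow-configs flag of gcloud composer environments update, which takes comma-separated section-key=value pairs.

```python
def build_timeout_overrides(dagbag_import_timeout=120, dag_file_processor_timeout=180):
    """Return a value for the --update-airflow-configs flag (hypothetical helper).

    Raises ValueError if the processor timeout is not higher than the
    import timeout, as the guidance above requires.
    """
    if dag_file_processor_timeout <= dagbag_import_timeout:
        raise ValueError(
            "[core]dag_file_processor_timeout must be higher than "
            "[core]dagbag_import_timeout"
        )
    overrides = {
        "core-dagbag_import_timeout": dagbag_import_timeout,
        "core-dag_file_processor_timeout": dag_file_processor_timeout,
    }
    # gcloud expects comma-separated section-key=value pairs.
    return ",".join(f"{key}={value}" for key, value in overrides.items())


print(build_timeout_overrides())
```

The printed string can be passed as the value of --update-airflow-configs when updating the environment. Start from the suggested minimums and raise them only as far as your slowest DAG file needs.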

Inspecting DAG parse times

To verify if the issue happens at DAG parse time, follow these steps.

Console

In Google Cloud console you can use the Monitoring page and the Logs tab to inspect DAG parse times.

Inspect DAG parse times with the Cloud Composer Monitoring page:

In Google Cloud console, go to the Environments page.

In the list of environments, click the name of your environment. The Monitoring page opens.

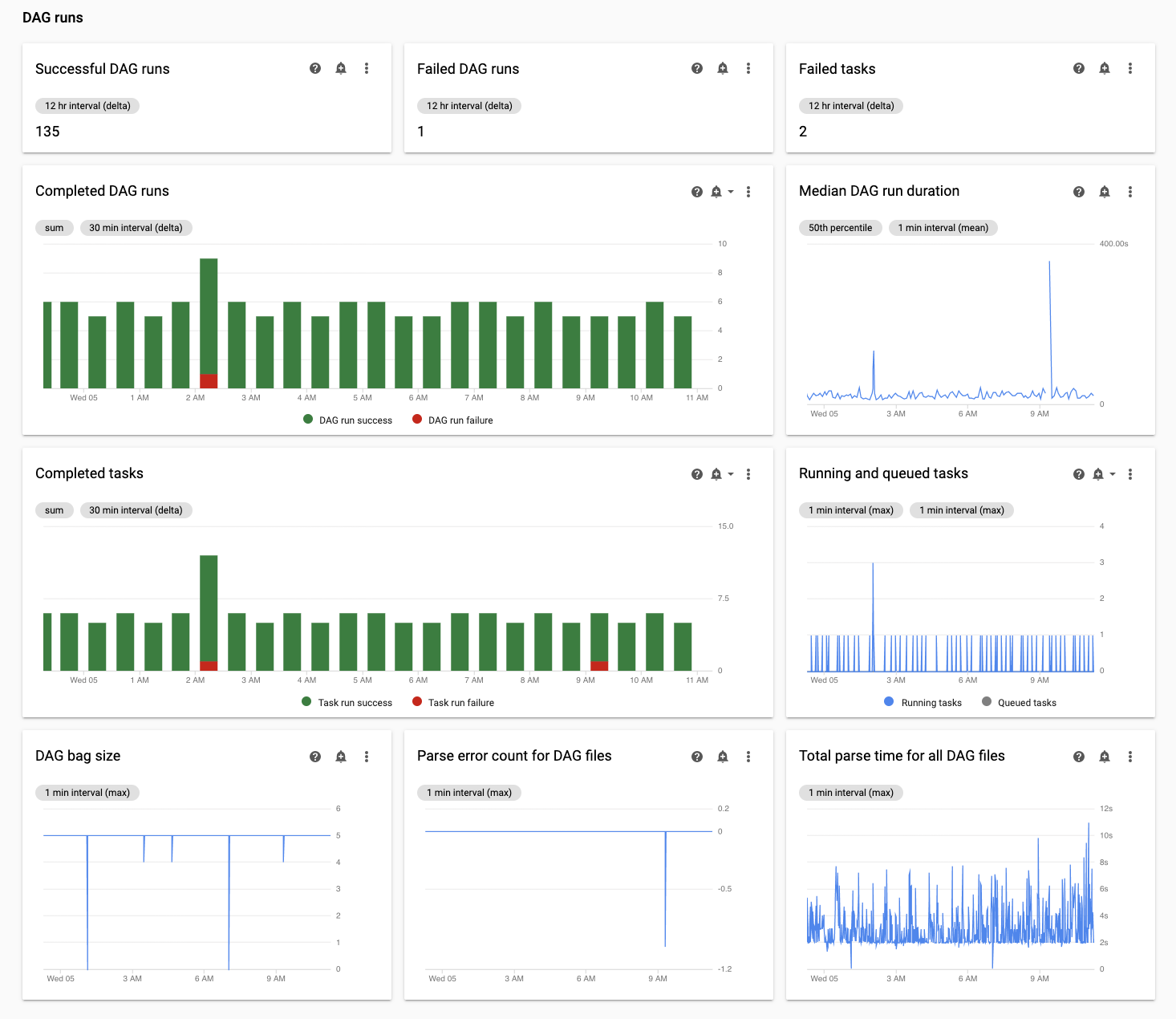

In the Monitoring tab, select DAG Statistics and review the Total parse time for all DAG files chart to identify possible issues. We recommend monitoring this chart over several DAG parsing cycles, because a single cycle might not reveal parsing issues.

Inspect DAG parse times with the Cloud Composer Logs tab:

In Google Cloud console, go to the Environments page.

In the list of environments, click the name of your environment. The Monitoring page opens.

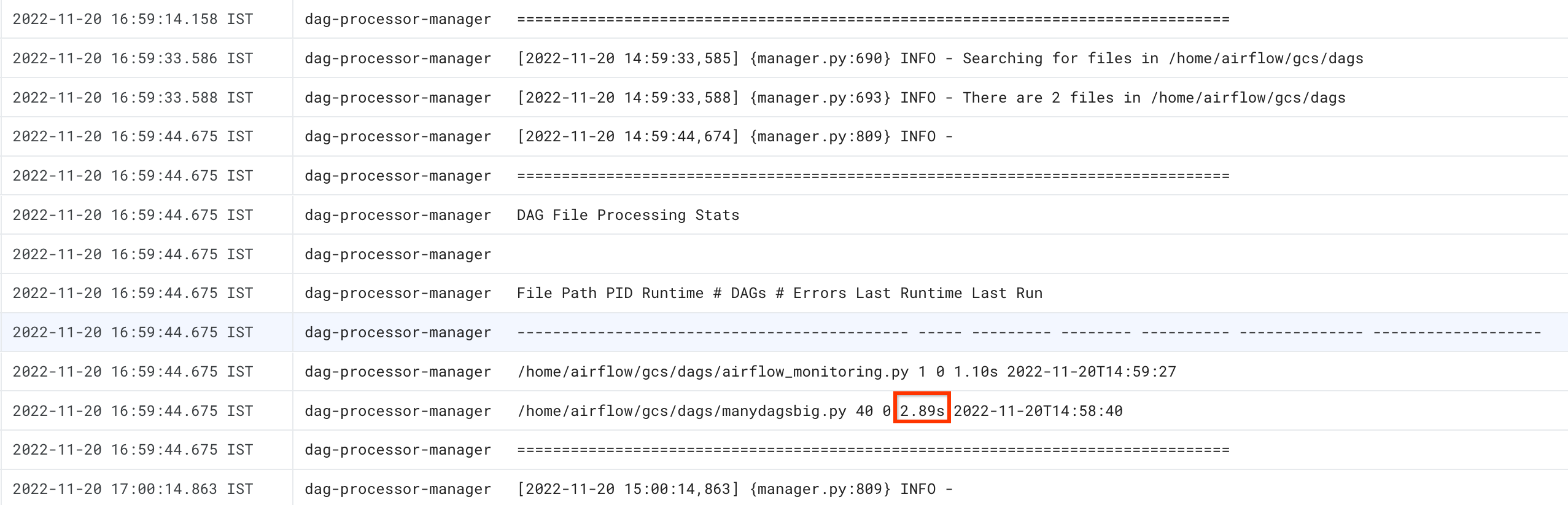

Go to the Logs tab, and from the All logs navigation tree select the DAG processor manager section.

Review dag-processor-manager logs and identify possible issues.

gcloud

Use the dags report command to see the parse time for all your DAGs.

    gcloud composer environments run ENVIRONMENT_NAME \
        --location LOCATION \
        dags report

Replace:

- ENVIRONMENT_NAME with the name of the environment.

- LOCATION with the region where the environment is located.

The output of the command looks similar to the following:

    file                  | duration       | dag_num | task_num | dags
    ======================+================+=========+==========+===================
    /manydagsbig.py       | 0:00:00.038334 | 2       | 10       | serial-0,serial-0
    /airflow_monitoring.py| 0:00:00.001620 | 1       | 1        | airflow_monitoring

Look for the duration value for each file listed in the table. A large value might indicate that one of the DAGs in that file is not implemented in an optimal way. From the output table, you can identify which DAG files have a long parsing time.
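When the report contains many files, sorting it by duration makes slow files easier to spot. The following sketch assumes the report text has the pipe-separated layout shown in the sample output above and that durations use the H:MM:SS.ffffff form. The function names parse_duration and slowest_files are illustrative and not part of any Airflow or gcloud API.

```python
from datetime import timedelta


def parse_duration(text):
    """Convert an 'H:MM:SS.ffffff' string into a timedelta."""
    hours, minutes, seconds = text.strip().split(":")
    return timedelta(hours=int(hours), minutes=int(minutes), seconds=float(seconds))


def slowest_files(report, threshold_seconds=0.0):
    """Return (file, seconds) pairs sorted by parse duration, slowest first."""
    rows = []
    for line in report.splitlines():
        cells = [cell.strip() for cell in line.split("|")]
        # Skip blank lines, the '====+====' separator, and the header row.
        if len(cells) < 2 or cells[0] == "file":
            continue
        seconds = parse_duration(cells[1]).total_seconds()
        if seconds >= threshold_seconds:
            rows.append((cells[0], seconds))
    return sorted(rows, key=lambda row: row[1], reverse=True)


sample = """
file                  | duration       | dag_num | task_num | dags
======================+================+=========+==========+===================
/manydagsbig.py       | 0:00:00.038334 | 2       | 10       | serial-0,serial-0
/airflow_monitoring.py| 0:00:00.001620 | 1       | 1        | airflow_monitoring
"""

for path, seconds in slowest_files(sample):
    print(f"{path}: {seconds:.6f} s")
```

Passing a threshold_seconds value keeps only the files at or above that duration, which can help when comparing parse times against the configured timeouts.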

Troubleshooting issues at DAG parse time

The following sections describe symptoms and potential fixes for some common issues at DAG parse time.

Limited number of threads

Allowing DAG processors to use only a limited number of threads might impact your DAG parse times.

To solve the issue, override the [scheduler]parsing_processes Airflow configuration option. Set the initial value to the number of vCPUs used by the scheduler, minus one CPU. For example, if the scheduler uses 2 vCPUs, set this value to 1. Then adjust the scheduler resource allocation so that it works at ~70% of vCPU or memory capacity.
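The starting value can be expressed as a one-line calculation. This is only a sketch of the rule of thumb above: the function name is hypothetical, and the lower bound of 1 and the rounding down of fractional vCPU values are assumptions that the guidance does not state.

```python
import math


def initial_parsing_processes(scheduler_vcpus):
    """Starting value for [scheduler]parsing_processes: scheduler vCPUs minus one."""
    # Assumption: round fractional vCPUs down and never return less than 1,
    # because at least one parsing process is needed.
    return max(1, math.floor(scheduler_vcpus) - 1)


print(initial_parsing_processes(2))  # 1, matching the example above
```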

Make the DAG processor ignore unnecessary files

You can improve performance of the Airflow DAG processor (which runs together with the Airflow scheduler in Cloud Composer 2) by skipping unnecessary files in the DAGs folder. Airflow DAG processor ignores files and folders specified in the .airflowignore file.

To make the Airflow DAG processor ignore unnecessary files:

- Create an .airflowignore file.

- In this file, list files and folders that should be ignored.

- Upload this file to the /dags folder in your environment's bucket.

For more information about the .airflowignore file format, see Airflow documentation.
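Before uploading the file, it can help to check locally which paths a set of patterns would exclude. The sketch below assumes the regular-expression syntax (the default in Airflow 2) and approximates the matching by searching each pattern in the path relative to the DAGs folder. It is a simplification, not Airflow's own implementation, and the file and folder names are hypothetical.

```python
import re


def load_patterns(airflowignore_text):
    """Compile one regular expression per non-empty line, dropping '#' comments."""
    patterns = []
    for line in airflowignore_text.splitlines():
        line = re.sub(r"\s*#.*", "", line).strip()
        if line:
            patterns.append(re.compile(line))
    return patterns


def is_ignored(relative_path, patterns):
    """Return True if any pattern is found anywhere in the relative path."""
    return any(pattern.search(relative_path) for pattern in patterns)


# Hypothetical .airflowignore content: a helper folder and test files.
AIRFLOWIGNORE = r"""
# Files that contain no DAG definitions
helpers/
_test\.py$
"""

patterns = load_patterns(AIRFLOWIGNORE)
for path in ["daily_etl.py", "helpers/sql_utils.py", "etl_test.py"]:
    print(path, "ignored" if is_ignored(path, patterns) else "parsed")
```

Because each pattern is searched anywhere in the path, an overly short pattern can exclude more files than intended, so checking a representative list of paths first is worthwhile.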

Airflow processes paused DAGs

You can pause DAGs to stop them from running. This saves resources of Airflow workers.

Airflow DAG processors continue parsing paused DAGs. If you want to improve the performance of DAG processors, use .airflowignore or delete paused DAGs from the DAGs folder.

Common Issues

The following sections describe symptoms and potential fixes for some common parsing issues.

DAG load import timeout

Symptom:

- In the Airflow web interface, at the top of the DAGs list page, a red alert box shows Broken DAG: [/path/to/dagfile] Timeout.

- In Cloud Monitoring: The airflow-scheduler logs contain entries similar to:

  - ERROR - Process timed out

  - ERROR - Failed to import: /path/to/dagfile

  - AirflowTaskTimeout: Timeout

Fix:

Override the dag_file_processor_timeout Airflow configuration option and allow more time for DAG parsing:

| Section | Key | Value |
|---|---|---|
| core | dag_file_processor_timeout | New timeout value |

A DAG is not visible in Airflow UI or DAG UI and the scheduler does not schedule it

The DAG processor parses each DAG before it can be scheduled by the scheduler and before a DAG becomes visible in the Airflow UI or DAG UI.

The following Airflow configuration options define timeouts for parsing DAGs:

- [core]dagbag_import_timeout defines how much time the DAG processor has to parse a single DAG.

- [core]dag_file_processor_timeout defines the total amount of time the DAG processor can spend on parsing all DAGs.

If a DAG is not visible in the Airflow UI or DAG UI:

- Check DAG processor logs to see if the DAG processor is able to correctly process your DAG. In case of problems, you might see the following log entries in the DAG processor or scheduler logs:

  [2020-12-03 03:06:45,672] {dag_processing.py:1334} ERROR - Processor for /usr/local/airflow/dags/example_dag.py with PID 21903 started at 2020-12-03T03:05:55.442709+00:00 has timed out, killing it.

- Check scheduler logs to see if the scheduler works correctly. In case of problems, you might see the following log entries in scheduler logs:

  DagFileProcessorManager (PID=732) last sent a heartbeat 240.09 seconds ago! Restarting it

  Process timed out, PID: 68496

Solutions:

- Fix all DAG parsing errors. The DAG processor parses multiple DAGs, and in rare cases parsing errors of one DAG can negatively impact the parsing of other DAGs.

- If the parsing of your DAG takes more than the number of seconds defined in [core]dagbag_import_timeout, then increase this timeout.

- If the parsing of all your DAGs takes more than the number of seconds defined in [core]dag_file_processor_timeout, then increase this timeout.

- If your DAG takes a long time to parse, it can also mean that it is not implemented in an optimal way. For example, it might read many environment variables, or perform calls to external services or the Airflow database. To the extent possible, avoid performing such operations in global sections of DAGs.

- Increase CPU and memory resources for the scheduler so it can work faster.

- Increase the number of DAG processor processes so that parsing can be done faster. You can do so by increasing the value of [scheduler]parsing_processes.
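The advice about global sections can be illustrated without Airflow itself. In the following framework-free sketch, load_settings stands in for any slow operation (an external service call, a database query, or reading many environment variables), and run_task stands in for the callable that a task would execute. Both names and the EXAMPLE_TARGET variable are hypothetical.

```python
import os

calls = []  # records each time the expensive lookup runs


def load_settings():
    """Stand-in for slow work such as an external call or a database query."""
    calls.append("load_settings")
    return {"target": os.environ.get("EXAMPLE_TARGET", "default-target")}


# Anti-pattern (left commented out): a module-level call like this would run
# every time the DAG processor parses the file.
# SETTINGS = load_settings()


def run_task():
    """Task body: the lookup happens only when the task executes."""
    settings = load_settings()
    return f"writing to {settings['target']}"


# Parsing (importing) this module triggers no lookup; running the task does.
assert calls == []
print(run_task())
```

Moving the lookup into the function keeps parse time independent of how slow the lookup is, because the DAG processor only executes module-level code.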

What's next

- Troubleshooting file synchronization issues

- Troubleshooting Airflow scheduler issues

- Troubleshooting DAGs