
This page describes how you can group tasks in your Airflow pipelines using the following design patterns:

- Grouping tasks in the DAG graph.

- Triggering child DAGs from a parent DAG.

- Grouping tasks with a TaskGroup.

Group tasks in the DAG graph

To group tasks in certain phases of your pipeline, you can use relationships between the tasks in your DAG file.

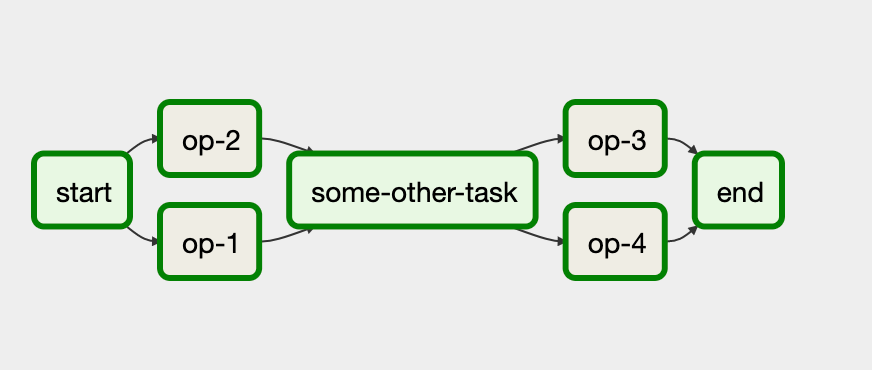

Consider the following example:

In this workflow, tasks `op-1` and `op-2` run in parallel after the initial task `start`. You can achieve this by making both tasks downstream of `start` with the statement `start >> [task_1, task_2]`, where `task_1` and `task_2` are the operators for `op-1` and `op-2`.

The following example provides a complete implementation of this DAG:

Trigger child DAGs from a parent DAG

You can trigger one DAG from another DAG with `TriggerDagRunOperator`.

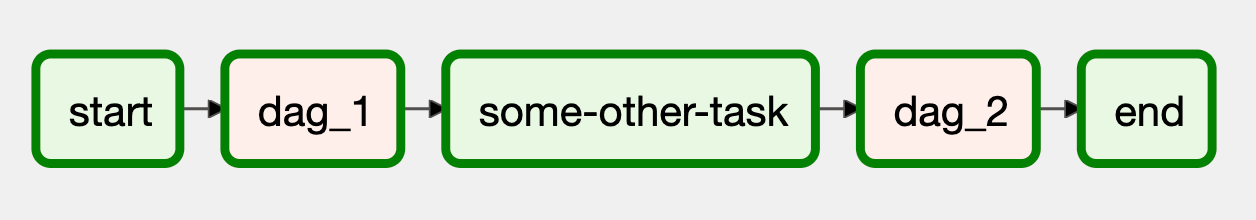

Consider the following example:

In this workflow, the blocks `dag_1` and `dag_2` represent a series of tasks that are grouped together in a separate DAG in the Cloud Composer environment.

The implementation of this workflow requires two separate DAG files. The controlling DAG file looks like the following:

The implementation of the child DAG, which is triggered by the controlling DAG, looks like the following:

You must upload both DAG files to your Cloud Composer environment for the workflow to run.

Group tasks with a TaskGroup

You can use a `TaskGroup` to group tasks together in your DAG. Unlike the tasks of a child DAG triggered with `TriggerDagRunOperator`, tasks defined within a `TaskGroup` block are still part of the main DAG.

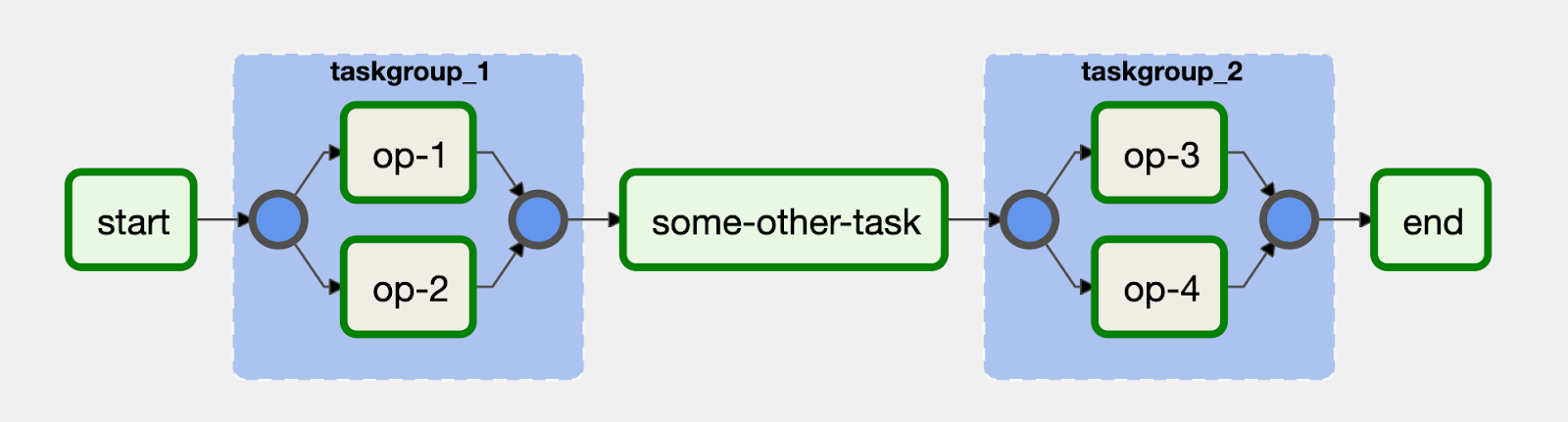

Consider the following example:

The tasks `op-1` and `op-2` are grouped together in a block with ID `taskgroup_1`. An implementation of this workflow looks like the following code: