Symptom

Cassandra pods fail to start in one of the regions in a multi-region Apigee hybrid setup. When applying the `overrides.yaml` file, the Cassandra pods do not start successfully.

Error messages

- You will observe the following error message in the Cassandra pod logs:

      Exception (java.lang.RuntimeException) encountered during startup: A node with address 10.52.18.40 already exists, cancelling join. use cassandra.replace_address if you want to replace this node.

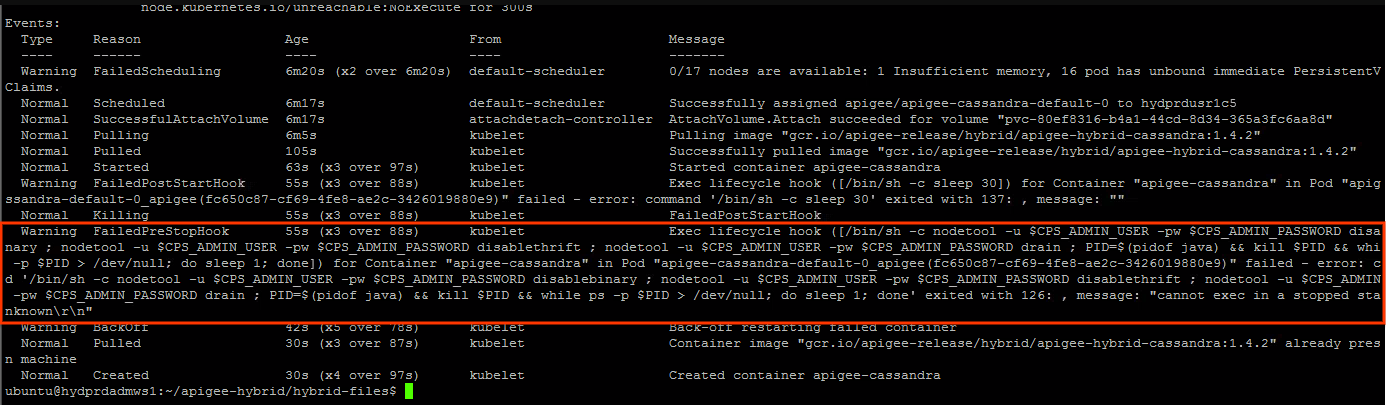

You may observe the following warning in the Cassandra pod status:

Possible causes

This issue is typically observed in the following scenario:

- The Apigee runtime cluster is deleted in one of the regions.

- An attempt to reinstall the Apigee runtime cluster is made in the region with Cassandra seed host configuration in the `overrides.yaml` file as described in Multi-region deployment on GKE and GKE on-prem.

- Deleting the Apigee runtime cluster does not remove the references in the Cassandra cluster, so the stale references to the Cassandra pods of the deleted cluster are retained. When you then try to reinstall the Apigee runtime cluster in the secondary region, the Cassandra pods complain that certain IP addresses already exist, because the new pods may be assigned IP addresses from the same subnet that was used earlier.

| Cause | Description |
|---|---|
| Stale references to deleted secondary region pods in Cassandra cluster | Deleting the Apigee runtime cluster in the secondary region does not remove the references to IP addresses of Cassandra pods in the secondary region. |

Cause: Stale references to deleted secondary region pods in Cassandra cluster

Diagnosis

- The error message in the in the Cassandra pod logs

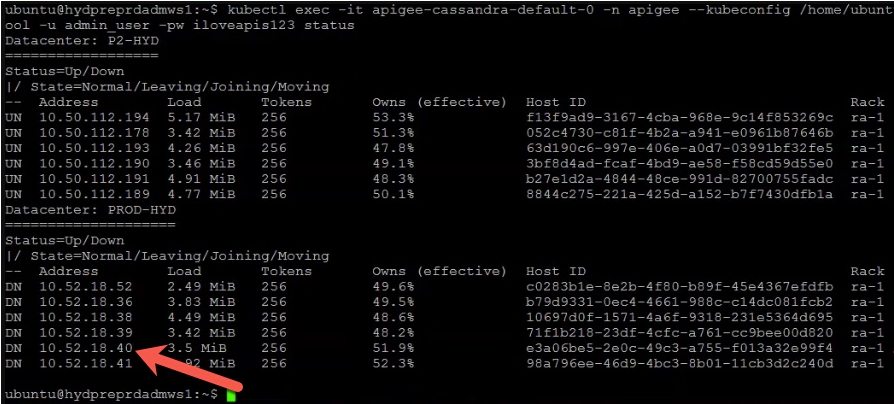

A node with address 10.52.18.40 already existsindicates that there exists a stale reference to one of the secondary region Cassandra pods with the IP address10.52.18.40. Validate this by running thenodetool statuscommand in the primary region.Sample Output:

The above example shows that the IP address

10.52.18.40associated with Cassandra pods of the secondary region is still listed in the output. - If the output contains the stale references to Cassandra pods in the secondary region, then it indicates that the secondary region has been deleted, but the IP addresses of the Cassandra pods in the secondary region are not removed.
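The check described above can be sketched as a small shell helper. This is a minimal illustration, not part of the official procedure: the function name `list_dc_nodes` is hypothetical, and it assumes the usual `nodetool status` text layout, in which each datacenter section starts with a `Datacenter: NAME` line and each node row starts with a two-letter status/state code such as `UN` or `DN`. It prints the status, IP address, and Host ID of every node listed under the given datacenter.

```shell
# Hypothetical helper: read `nodetool status` output on stdin and print
# "STATUS ADDRESS HOST_ID" for every node in the named datacenter.
list_dc_nodes() {
  # $1: datacenter name exactly as it appears after "Datacenter:" in the output
  awk -v dc="$1" '
    # Track which datacenter section the following rows belong to.
    $1 == "Datacenter:" { in_dc = ($2 == dc); next }
    # Node rows start with a status/state code (U or D, then N, L, J or M).
    in_dc && $1 ~ /^[UD][NLJM]$/ {
      # The Host ID column position varies with the Load column, so search for it.
      for (i = 3; i <= NF; i++)
        if ($i ~ /^[0-9a-f]+-[0-9a-f]+-[0-9a-f]+-[0-9a-f]+-[0-9a-f]+$/) {
          print $1, $2, $i
          break
        }
    }
  '
}

# Example usage (assumes the pod name and credentials used elsewhere on this page):
#   kubectl exec -n apigee apigee-cassandra-default-0 -- \
#     nodetool -u admin_user -pw ADMIN.PASSWORD status | list_dc_nodes Secondary-DC2
```

If the helper prints any rows for the deleted region, those are the stale references. The third column is the Host ID (UUID) of each stale node.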

Resolution

Perform the following steps to remove the stale references of Cassandra pods of the deleted cluster:

- Log into the container and connect to the Cassandra command-line interface by following the steps at Create the client container.

- After you have logged into the container and connected to the Cassandra `cqlsh` interface, run the following CQL query to list the current `keyspace` definitions:

      select * from system_schema.keyspaces;

  Sample output showing current keyspaces:

  In the following output, `Primary-DC1` refers to the primary region and `Secondary-DC2` refers to the secondary region.

      bash-4.4# cqlsh 10.50.112.194 -u admin_user -p ADMIN.PASSWORD --ssl
      Connected to apigeecluster at 10.50.112.194:9042.
      [cqlsh 5.0.1 | Cassandra 3.11.6 | CQL spec 3.4.4 | Native protocol v4]
      Use HELP for help.
      admin_user@cqlsh> Select * from system_schema.keyspaces;

       keyspace_name                        | durable_writes | replication
      --------------------------------------+----------------+--------------------------------------------------------------------------------------------------
       system_auth                          |           True | {'Primary-DC1': '3', 'Secondary-DC2': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'}
       kvm_tsg1_apigee_hybrid_prod_hybrid   |           True | {'Primary-DC1': '3', 'Secondary-DC2': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'}
       kms_tsg1_apigee_hybrid_prod_hybrid   |           True | {'Primary-DC1': '3', 'Secondary-DC2': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'}
       system_schema                        |           True | {'class': 'org.apache.cassandra.locator.LocalStrategy'}
       system_distributed                   |           True | {'Primary-DC1': '3', 'Secondary-DC2': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'}
       system                               |           True | {'class': 'org.apache.cassandra.locator.LocalStrategy'}
       perses                               |           True | {'Primary-DC1': '3', 'Secondary-DC2': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'}
       cache_tsg1_apigee_hybrid_prod_hybrid |           True | {'Primary-DC1': '3', 'Secondary-DC2': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'}
       rtc_tsg1_apigee_hybrid_prod_hybrid   |           True | {'Primary-DC1': '3', 'Secondary-DC2': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'}
       quota_tsg1_apigee_hybrid_prod_hybrid |           True | {'Primary-DC1': '3', 'Secondary-DC2': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'}
       system_traces                        |           True | {'Primary-DC1': '3', 'Secondary-DC2': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'}

      (11 rows)

  As you can see, the `keyspaces` are referring to both `Primary-DC1` and `Secondary-DC2`, even though the Apigee runtime cluster was deleted in the secondary region.

  The stale references to `Secondary-DC2` have to be deleted from each of the `keyspace` definitions.

- Before deleting the stale references in the `keyspace` definitions, use the following command to delete the entire Apigee hybrid installation except ASM (Istio) and `cert-manager` from the `Secondary-DC2`. For more information, refer to Uninstall the hybrid runtime.

      helm uninstall -n APIGEE_NAMESPACE ENV_GROUP_RELEASE_NAME ENV_RELEASE_NAME $ORG_NAME ingress-manager telemetry redis datastore

  Also, uninstall `apigee-operator`:

      helm uninstall -n APIGEE_NAMESPACE operator

- Remove the stale references to `Secondary-DC2` from each of the `keyspaces` by altering the `keyspace` definition.

      ALTER KEYSPACE system_auth WITH replication = {'Primary-DC1': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'};
      ALTER KEYSPACE kvm_ORG_NAME_apigee_hybrid_prod_hybrid WITH replication = {'Primary-DC1': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'};
      ALTER KEYSPACE kms_ORG_NAME_apigee_hybrid_prod_hybrid WITH replication = {'Primary-DC1': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'};
      ALTER KEYSPACE system_distributed WITH replication = {'Primary-DC1': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'};
      ALTER KEYSPACE perses WITH replication = {'Primary-DC1': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'};
      ALTER KEYSPACE cache_ORG_NAME_apigee_hybrid_ENV_NAME_hybrid WITH replication = {'Primary-DC1': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'};
      ALTER KEYSPACE rtc_ORG_NAME_apigee_hybrid_ENV_NAME_hybrid WITH replication = {'Primary-DC1': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'};
      ALTER KEYSPACE quota_ORG_NAME_apigee_hybrid_ENV_NAME_hybrid WITH replication = {'Primary-DC1': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'};
      ALTER KEYSPACE system_traces WITH replication = {'Primary-DC1': '3', 'class': 'org.apache.cassandra.locator.NetworkTopologyStrategy'};

- Verify that the stale references to the `Secondary-DC2` region have been removed from all the `keyspaces` by running the following command:

      select * from system_schema.keyspaces;

- Log in to a Cassandra pod of the `Primary-DC1` and remove the references to UUIDs of all the Cassandra pods of `Secondary-DC2`. The UUIDs can be obtained from the `nodetool status` command as described earlier in Diagnosis.

      kubectl exec -it -n apigee apigee-cassandra-default-0 -- bash
      nodetool -u admin_user -pw ADMIN.PASSWORD removenode UUID_OF_CASSANDRA_POD_IN_SECONDARY_DC2

- Verify that no Cassandra pods of `Secondary-DC2` are present by running the `nodetool status` command again.

- Install the Apigee runtime cluster in the secondary region (`Secondary-DC2`) by following the steps in Multi-region deployment on GKE and GKE on-prem.
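Writing the `ALTER KEYSPACE` statements by hand is error-prone when there are many keyspaces. As a rough sketch, they could be generated from the output of `select * from system_schema.keyspaces;`. The function name `gen_alter_keyspace` is hypothetical, and the sketch assumes the pipe-separated three-column layout shown in the sample output above, and a datacenter name without regular-expression metacharacters. Review the generated statements before running them in `cqlsh`.

```shell
# Hypothetical helper: read cqlsh keyspace output on stdin and print one
# ALTER KEYSPACE statement per keyspace that still references the stale datacenter.
gen_alter_keyspace() {
  # $1: name of the datacenter to drop from each replication map
  awk -F'|' -v dc="$1" '
    BEGIN {
      q = "\047"                          # a single-quote character
      entry = q dc q ": *" q "[0-9]+" q   # regex for one quoted DC name and its replication factor
    }
    # Data rows have three pipe-separated columns; keep those that mention the DC.
    NF == 3 && index($3, q dc q) {
      ks = $1;   gsub(/[ \t]/, "", ks)
      repl = $3; gsub(/^[ \t]+|[ \t]+$/, "", repl)
      # Remove the entry together with the comma that follows or precedes it.
      if (!sub(entry ", *", "", repl)) sub(", *" entry, "", repl)
      printf "ALTER KEYSPACE %s WITH replication = %s;\n", ks, repl
    }
  '
}

# Example usage (keyspaces.txt is an assumed file holding the saved cqlsh output):
#   gen_alter_keyspace Secondary-DC2 < keyspaces.txt
```

Keyspaces that use `LocalStrategy` never mention a datacenter, so they are skipped. The replication factor of the remaining datacenter is carried over unchanged.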

Must gather diagnostic information

If the problem persists even after following the above instructions, gather the following diagnostic information and then contact Google Cloud Customer Care:

- The Google Cloud Project ID

- The name of the Apigee hybrid organization

- The `overrides.yaml` files from both the primary and secondary regions, masking any sensitive information

- Kubernetes pod status in all namespaces of both the primary and secondary regions:

      kubectl get pods -A > kubectl-pod-status`date +%Y.%m.%d_%H.%M.%S`.txt

- A kubernetes `cluster-info` dump from both the primary and secondary regions:

      # generate kubernetes cluster-info dump
      kubectl cluster-info dump -A --output-directory=/tmp/kubectl-cluster-info-dump

      # zip kubernetes cluster-info dump
      zip -r kubectl-cluster-info-dump`date +%Y.%m.%d_%H.%M.%S`.zip /tmp/kubectl-cluster-info-dump/*

- The output of the below `nodetool` commands from the primary region.

      export u=`kubectl -n apigee get secrets apigee-datastore-default-creds -o jsonpath='{.data.jmx\.user}' | base64 -d`
      export pw=`kubectl -n apigee get secrets apigee-datastore-default-creds -o jsonpath='{.data.jmx\.password}' | base64 -d`

      kubectl -n apigee exec -it apigee-cassandra-default-0 -- nodetool -u $u -pw $pw info 2>&1 | tee /tmp/k_nodetool_info_$(date +%Y.%m.%d_%H.%M.%S).txt
      kubectl -n apigee exec -it apigee-cassandra-default-0 -- nodetool -u $u -pw $pw describecluster 2>&1 | tee /tmp/k_nodetool_describecluster_$(date +%Y.%m.%d_%H.%M.%S).txt
      kubectl -n apigee exec -it apigee-cassandra-default-0 -- nodetool -u $u -pw $pw failuredetector 2>&1 | tee /tmp/k_nodetool_failuredetector_$(date +%Y.%m.%d_%H.%M.%S).txt
      kubectl -n apigee exec -it apigee-cassandra-default-0 -- nodetool -u $u -pw $pw status 2>&1 | tee /tmp/k_nodetool_status_$(date +%Y.%m.%d_%H.%M.%S).txt
      kubectl -n apigee exec -it apigee-cassandra-default-0 -- nodetool -u $u -pw $pw gossipinfo 2>&1 | tee /tmp/k_nodetool_gossipinfo_$(date +%Y.%m.%d_%H.%M.%S).txt
      kubectl -n apigee exec -it apigee-cassandra-default-0 -- nodetool -u $u -pw $pw netstats 2>&1 | tee /tmp/k_nodetool_netstats_$(date +%Y.%m.%d_%H.%M.%S).txt
      kubectl -n apigee exec -it apigee-cassandra-default-0 -- nodetool -u $u -pw $pw proxyhistograms 2>&1 | tee /tmp/k_nodetool_proxyhistograms_$(date +%Y.%m.%d_%H.%M.%S).txt
      kubectl -n apigee exec -it apigee-cassandra-default-0 -- nodetool -u $u -pw $pw tpstats 2>&1 | tee /tmp/k_nodetool_tpstats_$(date +%Y.%m.%d_%H.%M.%S).txt
      kubectl -n apigee exec -it apigee-cassandra-default-0 -- nodetool -u $u -pw $pw gcstats 2>&1 | tee /tmp/k_nodetool_gcstats_$(date +%Y.%m.%d_%H.%M.%S).txt
      kubectl -n apigee exec -it apigee-cassandra-default-0 -- nodetool -u $u -pw $pw version 2>&1 | tee /tmp/k_nodetool_version_$(date +%Y.%m.%d_%H.%M.%S).txt
      kubectl -n apigee exec -it apigee-cassandra-default-0 -- nodetool -u $u -pw $pw ring 2>&1 | tee /tmp/k_nodetool_ring_$(date +%Y.%m.%d_%H.%M.%S).txt
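The eleven `nodetool` invocations above differ only in the subcommand, so they can be collapsed into a loop. The following is a sketch under assumptions, not an official script: `collect_nodetool_output` is a hypothetical name, the command prefix that reaches `nodetool` is passed in as arguments, and all files share one timestamp instead of each getting its own.

```shell
# Hypothetical helper: run each nodetool subcommand through the given command
# prefix and save its combined stdout and stderr to a timestamped file.
collect_nodetool_output() {
  # $1: output directory; remaining arguments: command prefix that runs nodetool
  out_dir=$1
  shift
  ts=$(date +%Y.%m.%d_%H.%M.%S)   # one timestamp shared by all files of this run
  for sub in info describecluster failuredetector status gossipinfo netstats \
             proxyhistograms tpstats gcstats version ring; do
    "$@" "$sub" > "$out_dir/k_nodetool_${sub}_${ts}.txt" 2>&1 ||
      echo "nodetool $sub failed; see $out_dir/k_nodetool_${sub}_${ts}.txt" >&2
  done
}

# Example usage (assumes $u and $pw were exported as shown above; -it is
# dropped because the output is redirected to files rather than a terminal):
#   collect_nodetool_output /tmp \
#     kubectl -n apigee exec apigee-cassandra-default-0 -- nodetool -u "$u" -pw "$pw"
```

A failing subcommand is reported on stderr and the loop continues, so one failure does not prevent the remaining output from being collected.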

References

- Apigee hybrid Multi-region deployment on GKE and GKE on-prem

- Kubernetes Documentation

- Cassandra `nodetool status` command