Personalized Service Health logs service health events in Cloud Logging. It records each update to an event's description, relevance, or state as a distinct log entry.

This document explains how to view, export, and store Service Health logs.

Before you begin

- Verify that billing is enabled for your Google Cloud project.

- Enable the Service Health API for the project that you want to view, export, or store logs for.

- Get access to Service Health logs.

Log schema

See the Log schema reference for the fields you can set in your query.

View Service Health logs

To view Service Health logs:

- Go to the Google Cloud console.

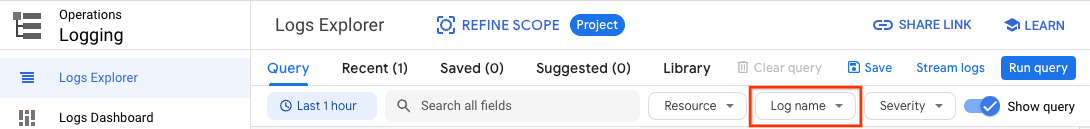

- Select Operations > Logging > Logs Explorer.

- Select a Google Cloud project at the top of the page.

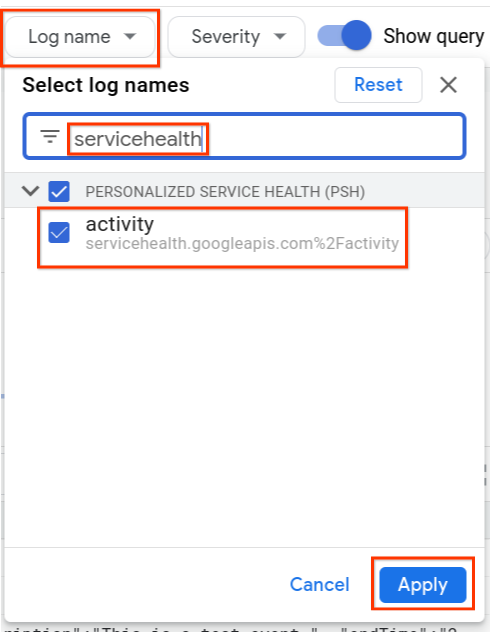

- In the Log name drop-down menu, select Service Health.

A list of logs for type.googleapis.com/google.cloud.servicehealth.logging.v1.EventLog appears.

- Expand a log entry to view the event details. The following example shows a typical event.

{
  "insertId": "1pw1msgf6a3zc6",
  "timestamp": "2022-06-14T17:17:55.722035096Z",
  "receiveTimestamp": "2022-06-14T17:17:55.722035096Z",
  "logName": "projects/PROJECT_ID/logs/servicehealth.googleapis.com%2Factivity",
  "resource": {
    "type": "servicehealth.googleapis.com/Event",
    "labels": {
      "resource_container": "797731824162",
      "location": "global",
      "event_id": "U4AqrjwFQYi5fFBmyAX-Gg"
    }
  },
  "labels": {
    "new_event": "true",
    "updated_fields": "[]"
  },
  "jsonPayload": {
    "@type": "type.googleapis.com/google.cloud.servicehealth.logging.v1.EventLog",
    "category": "INCIDENT",
    "title": "We are experiencing a connectivity issue affecting Cloud SQL in us-east1, australia-southeast2.",
    "description": "We are experiencing an issue with Google Cloud infrastructure components at us-east1, australia-southeast2. Our engineering team continues to investigate the issue. We apologize to all who are affected by the disruption.",
    "updateTime": "2023-11-14T22:26:40Z",
    "endTime": "2023-11-14T22:13:20Z",
    "impactedLocations": "['us-east1','australia-southeast2']",
    "impactedProducts": "['Google Cloud SQL']",
    "impactedProductIds": "['hV87iK5DcEXKgWU2kDri']",
    "nextUpdateTime": "2023-11-14T22:40:00Z",
    "startTime": "2020-09-13T12:26:40Z",
    "state": "ACTIVE",
    "detailedState": "CONFIRMED",
    "relevance": "RELATED"
  }
}

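If you export entries as JSON and process them in a script, note that in the example entry the list-valued fields (impactedLocations, impactedProducts) are strings that contain a list, not JSON arrays. The following Python sketch shows one way to unpack them. It assumes that every entry follows the shape of the single example in this section; the names parse_string_list and summarize_event are hypothetical helpers, not part of any Google Cloud library.

```python
import ast
import json

# Trimmed copy of the example entry; only the fields used below are kept.
SAMPLE_ENTRY = json.loads("""
{
  "resource": {
    "type": "servicehealth.googleapis.com/Event",
    "labels": {"location": "global", "event_id": "U4AqrjwFQYi5fFBmyAX-Gg"}
  },
  "labels": {"new_event": "true", "updated_fields": "[]"},
  "jsonPayload": {
    "category": "INCIDENT",
    "state": "ACTIVE",
    "relevance": "RELATED",
    "impactedLocations": "['us-east1','australia-southeast2']",
    "impactedProducts": "['Google Cloud SQL']"
  }
}
""")


def parse_string_list(value):
    """Turn a string such as "['a','b']" into a Python list of strings."""
    # literal_eval only evaluates literals, so it does not execute code.
    parsed = ast.literal_eval(value)
    if not isinstance(parsed, list):
        raise ValueError(f"expected a list literal, got {value!r}")
    return [str(item) for item in parsed]


def summarize_event(entry):
    """Collect a few fields that are useful when triaging an event."""
    payload = entry["jsonPayload"]
    return {
        "event_id": entry["resource"]["labels"]["event_id"],
        "is_new": entry["labels"]["new_event"] == "true",
        "state": payload["state"],
        "relevance": payload["relevance"],
        "locations": parse_string_list(payload["impactedLocations"]),
        "products": parse_string_list(payload["impactedProducts"]),
    }


summary = summarize_event(SAMPLE_ENTRY)
print(summary["locations"])
```

Because the lists are encoded as strings, substring matching is the natural way to filter on a location or product in a log query.
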
Query Service Health logs

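You can use Logs Explorer to query Service Health logs. To build your query, refer to the Log schema reference for the available fields and to Google Cloud products and locations for the product and location values.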

Examples:

| Condition | Query |
| --- | --- |
| Get logs with a specific incident relevance | jsonPayload.relevance = ("IMPACTED" OR "RELATED") |
| Combine multiple filters | jsonPayload.impactedLocations : "us-central1" AND jsonPayload.impactedProducts : "Google Compute Engine" AND jsonPayload.state = "ACTIVE" |
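
If you generate these filters from a script, a small builder keeps the quoting consistent. The following Python sketch reproduces the two example queries from this section. The function names and parameters are hypothetical, and the backslash escaping of quotes is an assumption about how quoted strings behave in the query language, so check generated filters in Logs Explorer before relying on them.

```python
def quote(value):
    """Wrap a value in double quotes, escaping backslashes and quotes."""
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def build_filter(relevance=(), locations=(), products=(), state=None):
    """Join the given conditions with AND, in the style of the examples."""
    clauses = []
    if relevance:
        # Several relevance values are alternatives, so they are OR-ed.
        options = " OR ".join(quote(r) for r in relevance)
        clauses.append(f"jsonPayload.relevance = ({options})")
    # ":" matches a substring, which suits the string-encoded list fields.
    # Several locations or products are AND-ed: the event must list them all.
    clauses += [f"jsonPayload.impactedLocations : {quote(loc)}" for loc in locations]
    clauses += [f"jsonPayload.impactedProducts : {quote(p)}" for p in products]
    if state:
        clauses.append(f"jsonPayload.state = {quote(state)}")
    return " AND ".join(clauses)


relevance_filter = build_filter(relevance=["IMPACTED", "RELATED"])
combined_filter = build_filter(
    locations=["us-central1"],
    products=["Google Compute Engine"],
    state="ACTIVE",
)
print(relevance_filter)
print(combined_filter)
```

The resulting strings can be pasted into the Logs Explorer query field or reused as a sink filter.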

Export Service Health logs

You can export Service Health logs to any Cloud Logging sink destination using the Google Cloud console, API, or the gcloud CLI. To set up a Cloud Logging sink, see Configure and manage sinks.

You can include and exclude Service Health logs by configuring the inclusion and exclusion filters for the sink.

When setting the product or location, use the values found at Google Cloud products and locations.

Aggregate Service Health logs at a folder level

Routing the Service Health logs from every project in a folder to one dedicated project lets you run more complex queries across all of them.

You'll create a sink at the folder level to send all Service Health-related logs to a new project under that folder. Do the following:

- Create a new project under a folder. This project is allocated to the Service Health logs.

gcloud projects create PROJECT_ID --folder FOLDER_ID

- Create an aggregated sink at the folder level for the other projects in the folder.

gcloud logging sinks create SINK_NAME \
  SINK_DESTINATION --include-children \
  --folder=FOLDER_ID --log-filter="LOG_FILTER"

To get logs for all relevant incidents, set the LOG_FILTER value to the following:

resource.type=servicehealth.googleapis.com/Event AND jsonPayload.category=INCIDENT AND jsonPayload.relevance!=NOT_IMPACTED AND jsonPayload.@type=type.googleapis.com/google.cloud.servicehealth.logging.v1.EventLog

- Set permissions for the sink Service Account.

gcloud projects add-iam-policy-binding PROJECT_ID --member=SERVICE_ACCT_NAME --role=roles/logging.bucketWriter
gcloud projects add-iam-policy-binding PROJECT_ID --member=SERVICE_ACCT_NAME --role=roles/logging.logWriter

- (Optional) If you don't want to send the logs to the _Default sink, create a log bucket for Service Health-related logs in the project.

gcloud logging buckets create BUCKET_ID --location=LOCATION --enable-analytics --async

- If you created a log bucket, create a sink to send those logs to the bucket.

gcloud logging sinks create SINK_NAME_BUCKET \
  logging.googleapis.com/projects/PROJECT_ID/locations/LOCATION/buckets/BUCKET_ID \
  --project=PROJECT_ID --log-filter="LOG_FILTER"
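
If you repeat this setup for several folders, it can help to assemble the two sink commands in a script so that the filter is defined only once. The following Python sketch builds the argument lists and prints them in shell-quoted form. It does not run gcloud. The function names are hypothetical, and every uppercase value is a placeholder taken from the steps in this section.

```python
import shlex

# Filter from this section: every incident whose relevance is not NOT_IMPACTED.
LOG_FILTER = " AND ".join([
    "resource.type=servicehealth.googleapis.com/Event",
    "jsonPayload.category=INCIDENT",
    "jsonPayload.relevance!=NOT_IMPACTED",
    "jsonPayload.@type=type.googleapis.com/google.cloud.servicehealth.logging.v1.EventLog",
])


def folder_sink_command(sink_name, sink_destination, folder_id, log_filter=LOG_FILTER):
    """Return the argument list for the aggregated folder-level sink."""
    return [
        "gcloud", "logging", "sinks", "create", sink_name, sink_destination,
        "--include-children",
        f"--folder={folder_id}",
        f"--log-filter={log_filter}",
    ]


def bucket_sink_command(sink_name, project_id, location, bucket_id, log_filter=LOG_FILTER):
    """Return the argument list for the project-level sink that feeds a log bucket."""
    destination = (
        f"logging.googleapis.com/projects/{project_id}"
        f"/locations/{location}/buckets/{bucket_id}"
    )
    return [
        "gcloud", "logging", "sinks", "create", sink_name, destination,
        f"--project={project_id}",
        f"--log-filter={log_filter}",
    ]


# Placeholder values; replace them with your own identifiers.
folder_cmd = folder_sink_command("SINK_NAME", "SINK_DESTINATION", "FOLDER_ID")
bucket_cmd = bucket_sink_command("SINK_NAME_BUCKET", "PROJECT_ID", "LOCATION", "BUCKET_ID")

# shlex.join quotes the filter so the printed line can be reviewed or pasted.
print(shlex.join(folder_cmd))
print(shlex.join(bucket_cmd))
```

Keeping the commands as argument lists avoids shell quoting problems with the filter if you later pass them to subprocess.run.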

Query Service Health logs with BigQuery

You can query logs sent to Cloud Logging using BigQuery by:

- Creating SQL queries.

- Using the BigQuery API to feed the results of the queries to external systems.

Do the following:

- Create a log bucket in the project you created for Service Health logs.

gcloud logging buckets create BUCKET_ID --location=LOCATION --enable-analytics --async

- To enable analytics on a bucket that already exists, update it instead.

gcloud logging buckets update BUCKET_ID --location=LOCATION --enable-analytics --async

- Create a new BigQuery dataset that links to the bucket with Service Health logs.

gcloud logging links create LINK_ID --bucket=BUCKET_ID --location=LOCATION

- If necessary, enable the BigQuery API.

gcloud services enable bigquery.googleapis.com

Now you can run complex SQL queries against BigQuery, such as:

| Condition | Query |
| --- | --- |
| Get logs with a specific incident relevance | bq query --use_legacy_sql=false 'SELECT * FROM `PROJECT_ID.LINK_ID._AllLogs` WHERE JSON_VALUE(json_payload["relevance"]) = "IMPACTED"' |
| Get all child log events for a parent event | bq query --use_legacy_sql=false 'SELECT * FROM `PROJECT_ID.LINK_ID._AllLogs` WHERE JSON_VALUE(json_payload["parentEvent"]) = "projects/PROJECT_ID/locations/global/events/EVENT_ID"' |
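
Both example queries share one shape: select rows from the linked dataset where a single json_payload field equals a value. If you feed results to external systems, you might generate that SQL from a script. The following Python sketch builds the query text only. The function name is hypothetical, and the identifier pattern is a deliberately conservative assumption, not the full set of names that BigQuery accepts.

```python
import re

# Conservative pattern for project, dataset, and field names in this sketch.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_-]*$")


def build_payload_query(project_id, link_id, field, value):
    """Return SQL selecting log rows whose json_payload[field] equals value."""
    for name in (project_id, link_id, field):
        if not _IDENTIFIER.match(name):
            raise ValueError(f"unexpected identifier: {name!r}")
    # Escape backslashes and double quotes so the value stays one string literal.
    literal = value.replace("\\", "\\\\").replace('"', '\\"')
    return (
        f"SELECT * FROM `{project_id}.{link_id}._AllLogs` "
        f'WHERE JSON_VALUE(json_payload["{field}"]) = "{literal}"'
    )


relevance_sql = build_payload_query("PROJECT_ID", "LINK_ID", "relevance", "IMPACTED")
children_sql = build_payload_query(
    "PROJECT_ID", "LINK_ID", "parentEvent",
    "projects/PROJECT_ID/locations/global/events/EVENT_ID",
)
print(relevance_sql)
print(children_sql)
```

For values that come from untrusted input, query parameters are a safer choice than building the SQL string by hand.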

Retain past events

Personalized Service Health provides limited retention of events.

If you need to retain a record of past service health events beyond a few months, we recommend storing Service Health logs.