Migrate a self-managed MySQL metastore to Dataproc Metastore

This page shows you how to migrate your external self-managed MySQL metastore to Dataproc Metastore by creating a MySQL dump file and importing the metadata into an existing Dataproc Metastore service.

Before you begin

- Sign in to your Google Cloud account. If you're new to Google Cloud, create an account to evaluate how our products perform in real-world scenarios. New customers also get $300 in free credits to run, test, and deploy workloads.

- In the Google Cloud console, on the project selector page, select or create a Google Cloud project.

  Roles required to select or create a project

  - Select a project: Selecting a project doesn't require a specific IAM role. You can select any project that you've been granted a role on.
  - Create a project: To create a project, you need the Project Creator role (roles/resourcemanager.projectCreator), which contains the resourcemanager.projects.create permission. Learn how to grant roles.

- Verify that billing is enabled for your Google Cloud project.

- Enable the Dataproc Metastore API.

  Roles required to enable APIs

  To enable APIs, you need the Service Usage Admin IAM role (roles/serviceusage.serviceUsageAdmin), which contains the serviceusage.services.enable permission. Learn how to grant roles.
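If you work from a terminal, the project, billing, and API steps above can be approximated with the gcloud CLI. The following is a minimal sketch, assuming gcloud is installed and authenticated and that the project already exists. The helpers run and prepare_project are hypothetical names used only for illustration; with DRY_RUN=1, run prints each command instead of executing it.

```shell
#!/bin/sh
# Sketch: select a project, check billing, and enable the Dataproc Metastore API.
# Assumption: run() and prepare_project() are hypothetical helpers, not part of gcloud.
set -eu

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1: ID of an existing Google Cloud project (placeholder PROJECT_ID).
prepare_project() {
  project_id="$1"
  # Make the project the default for later gcloud commands.
  run gcloud config set project "$project_id"
  # Show whether billing is enabled for the project.
  run gcloud billing projects describe "$project_id" --format="value(billingEnabled)"
  # Enable the Dataproc Metastore API (needs serviceusage.services.enable).
  run gcloud services enable metastore.googleapis.com --project="$project_id"
}
```

For example, DRY_RUN=1 prepare_project PROJECT_ID lists the three commands so that you can review them before you run prepare_project PROJECT_ID for real.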

Required roles

To get the permissions that you need to create a Dataproc Metastore service and import a MySQL metastore, ask your administrator to grant you the following IAM roles:

- To create a service and import metadata:
  - Dataproc Metastore Editor (roles/metastore.editor) on the project
  - Dataproc Metastore Admin (roles/metastore.admin) on the project
- To use the Cloud Storage object (SQL dump file) for import:
  - Storage Object Viewer (roles/storage.objectViewer) for the Dataproc Metastore service agent, for example service-CUSTOMER_PROJECT_NUMBER@gcp-sa-metastore.iam.gserviceaccount.com
  - Storage Object Viewer (roles/storage.objectViewer) for the user account

For more information about granting roles, see Manage access to projects, folders, and organizations.
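An administrator could grant these roles with gcloud along the following lines. This sketch makes assumptions that this page does not state: it grants Dataproc Metastore Editor at the project level and Storage Object Viewer on the bucket that holds the dump file (a narrower scope than the whole project). PROJECT_ID, PROJECT_NUMBER, USER_EMAIL, and BUCKET are placeholders, and run and grant_migration_roles are hypothetical helper names; DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: grant the predefined roles needed for the migration.
# Assumptions: bucket-level grants for Storage Object Viewer; helper names are hypothetical.
set -eu

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1 PROJECT_ID, $2 PROJECT_NUMBER, $3 USER_EMAIL, $4 BUCKET (bucket holding the dump file)
grant_migration_roles() {
  project_id="$1"; project_number="$2"; user_email="$3"; bucket="$4"
  # Project-level role for creating the service and importing metadata.
  run gcloud projects add-iam-policy-binding "$project_id" \
    --member="user:$user_email" --role=roles/metastore.editor
  # Read access to the dump file for the Dataproc Metastore service agent.
  run gcloud storage buckets add-iam-policy-binding "gs://$bucket" \
    --member="serviceAccount:service-$project_number@gcp-sa-metastore.iam.gserviceaccount.com" \
    --role=roles/storage.objectViewer
  # Read access to the dump file for the user account.
  run gcloud storage buckets add-iam-policy-binding "gs://$bucket" \
    --member="user:$user_email" --role=roles/storage.objectViewer
}
```

You can look up the project number with gcloud projects describe PROJECT_ID --format="value(projectNumber)".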

These predefined roles contain the permissions required to create a Dataproc Metastore and import a MySQL metastore. To see the exact permissions that are required, expand the Required permissions section:

Required permissions

The following permissions are required to create a Dataproc Metastore service and import a MySQL metastore:

- To create a service: metastore.services.create on the project
- To import metadata: metastore.imports.create on the project
- To use the Cloud Storage object (SQL dump file) for import:
  - storage.objects.get for the Dataproc Metastore service agent, for example service-CUSTOMER_PROJECT_NUMBER@gcp-sa-metastore.iam.gserviceaccount.com
  - storage.objects.get for the user account

You might also be able to get these permissions with custom roles or other predefined roles.
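As one possible alternative to the predefined roles, a project-level custom role could bundle just the permissions listed above. This is a sketch under assumptions: the role ID, the title, and the helper names run and create_custom_role are hypothetical, and depending on how you create the service you might need permissions beyond these three. DRY_RUN=1 prints the command instead of running it.

```shell
#!/bin/sh
# Sketch: a custom role holding only the permissions listed on this page.
# Assumptions: role ID and title are hypothetical; other permissions may also be required.
set -eu

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1 PROJECT_ID, $2 ROLE_ID (letters, digits, underscores, or periods)
create_custom_role() {
  project_id="$1"; role_id="$2"
  run gcloud iam roles create "$role_id" --project="$project_id" \
    --title="Metastore MySQL migration" \
    --permissions=metastore.services.create,metastore.imports.create,storage.objects.get
}
```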

For more information about specific Dataproc Metastore roles and permissions, see Manage Dataproc access with IAM.

Create a Dataproc Metastore service

The following instructions demonstrate how to create a Dataproc Metastore service that you can migrate your SQL dump file to.

Console

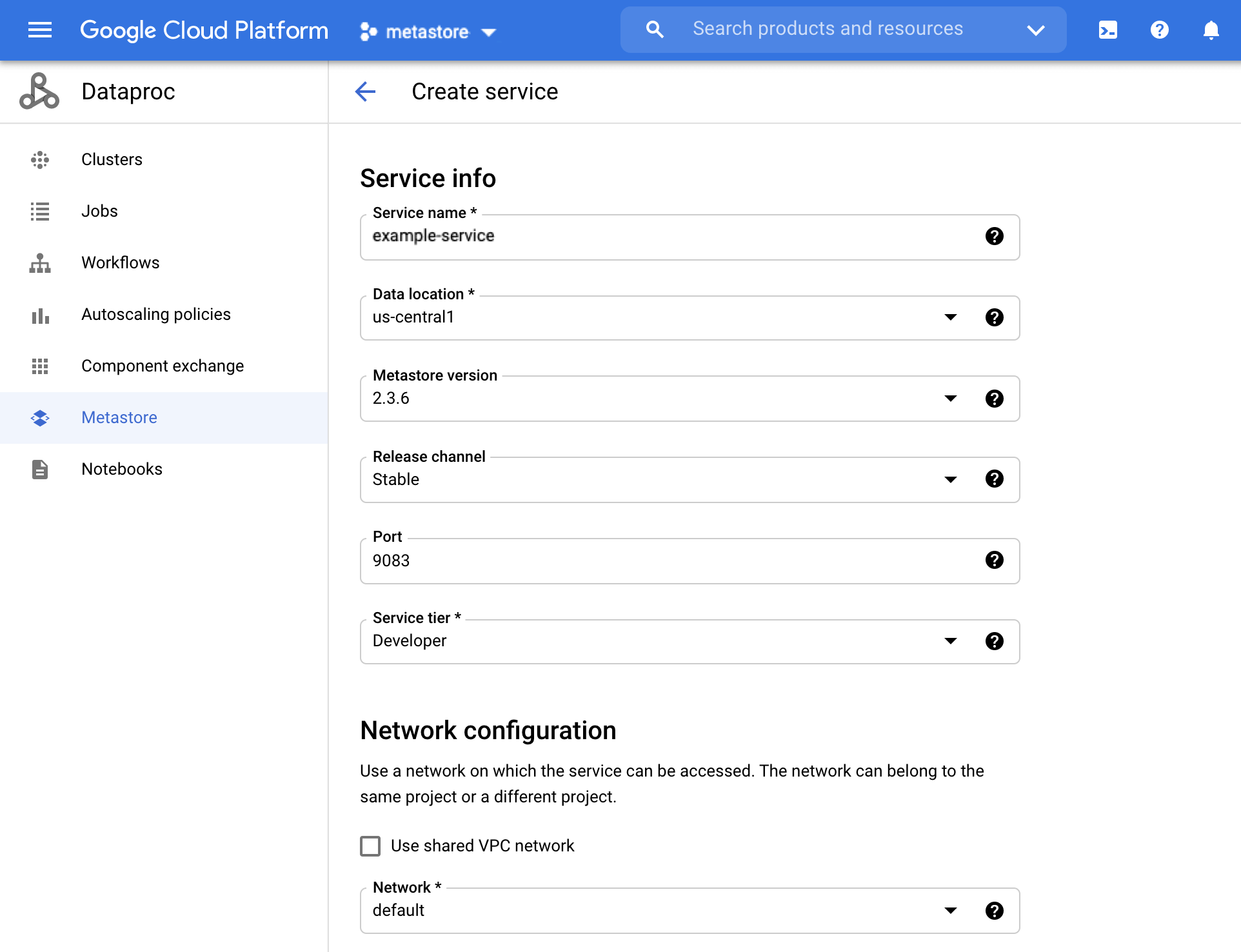

In the Google Cloud console, open the Create service page:

The Dataproc Metastore Create service page In the Service name field, enter

example-service.Select the Data location. For information on selecting a region, see Cloud locations.

For other service configuration options, use the provided defaults.

To create and start the service, click the Submit button.

Your new service appears in the Service list.

gcloud

Run the following gcloud metastore services create command to create a service:

gcloud metastore services create example-service \
    --location=LOCATION

Replace LOCATION with the Compute Engine region where you plan to create the service. Make sure Dataproc Metastore is available in the region.
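To script the creation and confirm the result, you could follow the create command with a describe call that prints only the service state. This is a minimal sketch, assuming LOCATION is a region where Dataproc Metastore is available; run and create_service are hypothetical helpers, and DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: create example-service, then print its state.
# Assumption: run() and create_service() are hypothetical helpers.
set -eu

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1: LOCATION, the region for the service.
create_service() {
  location="$1"
  run gcloud metastore services create example-service --location="$location"
  # Print only the lifecycle state of the service.
  run gcloud metastore services describe example-service \
    --location="$location" --format="value(state)"
}
```

When the service is ready, the describe command should print ACTIVE.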

REST

Follow the API instructions to create a service by using the API Explorer.
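If you prefer to call the REST API directly instead of using the API Explorer, a request could look like the following. This is a sketch, not a verified request: it assumes the v1 endpoint https://metastore.googleapis.com/v1, passes the service ID as the serviceId query parameter, and sends an empty JSON body on the assumption that the server applies default settings; check the services.create reference before relying on it. The third argument is an OAuth access token, and run and create_service_rest are hypothetical helpers (DRY_RUN=1 prints the command, without shell quoting).

```shell
#!/bin/sh
# Sketch: create example-service through the Dataproc Metastore v1 REST API.
# Assumptions: empty request body accepted with defaults; helper names are hypothetical.
set -eu

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1 PROJECT_ID, $2 LOCATION, $3 OAuth access token
create_service_rest() {
  project_id="$1"; location="$2"; token="$3"
  run curl -sS -X POST \
    -H "Authorization: Bearer $token" \
    -H "Content-Type: application/json" \
    -d '{}' \
    "https://metastore.googleapis.com/v1/projects/$project_id/locations/$location/services?serviceId=example-service"
}
```

For example: create_service_rest PROJECT_ID LOCATION "$(gcloud auth print-access-token)". The response describes a long-running operation rather than the finished service.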

Prepare for migration

You must now prepare the metadata stored in your Hive metastore database for import by making a MySQL dump file and placing it into a Cloud Storage bucket.

See Prepare the import files before import for steps to prepare for migration.
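The linked page is the authoritative guide, but the overall flow is to dump the Hive metastore database with mysqldump and then copy the file to a Cloud Storage bucket. The sketch below assumes that HIVE_DB names the Hive metastore database, that the bucket already exists, and that the mysqldump flags shown suit your database; if the linked page lists different flags, use those instead. run and dump_and_upload are hypothetical helpers, and DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: dump the Hive metastore database and upload the dump to Cloud Storage.
# Assumptions: the mysqldump flags are illustrative, not the documented set;
# DB_USER, HIVE_DB, and BUCKET are placeholders; helper names are hypothetical.
set -eu

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1 DB_USER, $2 HIVE_DB, $3 BUCKET
dump_and_upload() {
  db_user="$1"; hive_db="$2"; bucket="$3"
  dump_file="$hive_db.sql"
  # --password with no value makes mysqldump prompt for it.
  # --single-transaction takes a consistent snapshot of InnoDB tables without locking them.
  # --hex-blob writes binary columns in hexadecimal notation.
  # --result-file writes the dump to a file instead of standard output.
  run mysqldump --user="$db_user" --password --single-transaction --hex-blob \
    --result-file="$dump_file" "$hive_db"
  # Copy the dump into the bucket that the import reads from.
  run gcloud storage cp "$dump_file" "gs://$bucket/$dump_file"
}
```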

Import the metadata

Now that you've prepared the dump file, import it into your Dataproc Metastore service.

See Import the files into Dataproc Metastore for steps to import your metadata into your example-service service.
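The linked page covers the import options in detail. As a sketch, importing a MySQL dump with the gcloud CLI could look like the following, assuming the dump file you prepared is at gs://BUCKET/DUMP_FILE and that IMPORT_ID is any ID you choose for this import. run and import_dump are hypothetical helpers, and DRY_RUN=1 prints the command instead of running it.

```shell
#!/bin/sh
# Sketch: import a MySQL dump from Cloud Storage into example-service.
# Assumptions: LOCATION, BUCKET, DUMP_FILE, and IMPORT_ID are placeholders; helpers are hypothetical.
set -eu

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1 LOCATION, $2 BUCKET, $3 DUMP_FILE, $4 IMPORT_ID
import_dump() {
  location="$1"; bucket="$2"; dump_file="$3"; import_id="$4"
  run gcloud metastore services import gcs example-service \
    --location="$location" \
    --import-id="$import_id" \
    --dump-type=mysql \
    --database-dump="gs://$bucket/$dump_file"
}
```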

Create and attach a Dataproc cluster

After you import your metadata into your Dataproc Metastore example-service service, create and attach a Dataproc cluster that uses the service as its Hive metastore.
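As a sketch, you could create the cluster with the gcloud CLI by passing the full resource name of example-service to --dataproc-metastore, and then run a Hive query to check that the imported databases are visible. This assumes the cluster is created in the same region as the service and that CLUSTER_NAME is a name you choose; run and create_attached_cluster are hypothetical helpers, and DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: create a Dataproc cluster attached to example-service and list Hive databases.
# Assumptions: cluster and service share a region; helper names are hypothetical.
set -eu

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1 PROJECT_ID, $2 LOCATION, $3 CLUSTER_NAME
create_attached_cluster() {
  project_id="$1"; location="$2"; cluster="$3"
  # --dataproc-metastore takes the full resource name of the service.
  run gcloud dataproc clusters create "$cluster" \
    --region="$location" \
    --dataproc-metastore="projects/$project_id/locations/$location/services/example-service"
  # Query the attached metastore; the imported databases should be listed.
  run gcloud dataproc jobs submit hive --cluster="$cluster" --region="$location" \
    --execute="SHOW DATABASES;"
}
```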

Clean up

To avoid incurring charges to your Google Cloud account for the resources used on this page, follow these steps.

- In the Google Cloud console, go to the Manage resources page.

- If the project that you plan to delete is attached to an organization, expand the Organization list in the Name column.

- In the project list, select the project that you want to delete, and then click Delete.

- In the dialog, type the project ID, and then click Shut down to delete the project.
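From the command line, the same project deletion can be done with gcloud projects delete, which asks for confirmation before it shuts the project down. A minimal sketch; PROJECT_ID is a placeholder, and run and delete_project are hypothetical helpers (DRY_RUN=1 prints the command instead of running it).

```shell
#!/bin/sh
# Sketch: delete the project used for this page.
# Assumption: run() and delete_project() are hypothetical helpers.
set -eu

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1: PROJECT_ID of the project to delete.
delete_project() {
  project_id="$1"
  # gcloud prompts for confirmation before deleting.
  run gcloud projects delete "$project_id"
}
```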

Alternatively, you can delete the resources used in this tutorial:

Delete the Dataproc Metastore service.

Console

In the Google Cloud console, open the Dataproc Metastore page.

To the left of the service name, select the checkbox for example-service.

At the top of the Dataproc Metastore page, click Delete.

In the dialog, click Delete to confirm the deletion.

Your service no longer appears in the Service list.

gcloud

Run the following gcloud metastore services delete command to delete a service:

gcloud metastore services delete example-service \
    --location=LOCATION

Replace LOCATION with the Compute Engine region where you created the service.

REST

Follow the API instructions to delete a service by using the API Explorer.
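The REST equivalent is a DELETE request on the service resource. This is a sketch, assuming the v1 endpoint https://metastore.googleapis.com/v1 and an OAuth access token passed as the third argument (for example from gcloud auth print-access-token); run and delete_service_rest are hypothetical helpers, and DRY_RUN=1 prints the command, without shell quoting, instead of running it.

```shell
#!/bin/sh
# Sketch: delete example-service through the Dataproc Metastore v1 REST API.
# Assumption: run() and delete_service_rest() are hypothetical helpers.
set -eu

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1 PROJECT_ID, $2 LOCATION, $3 OAuth access token
delete_service_rest() {
  project_id="$1"; location="$2"; token="$3"
  run curl -sS -X DELETE \
    -H "Authorization: Bearer $token" \
    "https://metastore.googleapis.com/v1/projects/$project_id/locations/$location/services/example-service"
}
```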

All deletions succeed immediately.

Delete the Cloud Storage bucket for the Dataproc Metastore service.
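To delete a bucket and everything in it from the command line, you could use gcloud storage rm with --recursive. A minimal sketch; BUCKET is a placeholder for the bucket's name, and run and delete_bucket are hypothetical helpers (DRY_RUN=1 prints the command instead of running it). This permanently removes every object in the bucket, so double-check the name before you run it.

```shell
#!/bin/sh
# Sketch: delete a Cloud Storage bucket and all of its objects.
# Assumption: run() and delete_bucket() are hypothetical helpers.
set -eu

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# $1: BUCKET, the bucket name without the gs:// prefix.
delete_bucket() {
  bucket="$1"
  # Removes every object in the bucket, then the bucket itself.
  run gcloud storage rm --recursive "gs://$bucket"
}
```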