Dataproc Metastore is a fully managed, highly available, autohealing, serverless Apache Hive Metastore (HMS) that runs on Google Cloud.

To fully manage your metadata, Dataproc Metastore maps your data to Apache Hive tables.

Supported Apache Hive versions

Dataproc Metastore supports only specific versions of Apache Hive. For more information, see the Hive version policy.

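How Hive handles metadata

Because Dataproc Metastore is a Hive metastore, it's important to understand how it manages your metadata.

By default, every Hive application can have managed internal tables or unmanaged external tables. As a result, the metadata that you store in a Dataproc Metastore service can describe both internal and external tables.

When data is modified, the Dataproc Metastore service (Hive) treats internal and external tables differently.

- Internal tables. Hive manages both the metadata and the table data.

- External tables. Hive manages only the metadata.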

For example, suppose you delete a table definition by using the DROP TABLE Hive SQL statement:

drop table foo

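- Internal tables. Dataproc Metastore deletes all metadata. It also deletes the files associated with the table.

- External tables. Dataproc Metastore deletes only the metadata. It keeps the data associated with the table.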
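The difference in DROP TABLE behavior can be illustrated with a small toy model. This is only a sketch: `ToyMetastore`, the table names, and the `gs://example-bucket/...` paths are hypothetical, and the class is an in-memory stand-in rather than a real Hive or Dataproc Metastore client.

```python
from dataclasses import dataclass, field


@dataclass
class ToyMetastore:
    """In-memory stand-in for a metastore and its storage (illustration only)."""
    # table name -> {"external": bool, "location": str}
    metadata: dict = field(default_factory=dict)
    # storage location -> list of file names
    files: dict = field(default_factory=dict)

    def create_table(self, name, location, external=False):
        self.metadata[name] = {"external": external, "location": location}
        self.files.setdefault(location, []).append("part-00000")

    def drop_table(self, name):
        # Metadata is removed for both kinds of table.
        entry = self.metadata.pop(name)
        if not entry["external"]:
            # Internal (managed) table: the data files are removed as well.
            self.files.pop(entry["location"], None)


store = ToyMetastore()
store.create_table("foo_internal", "gs://example-bucket/warehouse/foo_internal")
store.create_table("foo_external", "gs://example-bucket/raw/foo_external", external=True)

store.drop_table("foo_internal")
store.drop_table("foo_external")

remaining_locations = sorted(store.files)
print(store.metadata)
print(remaining_locations)
```

After both drops, no metadata remains, and only the external table's location still holds files.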

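Hive warehouse directory

Dataproc Metastore uses the Hive warehouse directory to manage your internal tables. The Hive warehouse directory is where the actual data is stored.

When you use a Dataproc Metastore service, the default Hive warehouse directory is a Cloud Storage bucket. Dataproc Metastore supports only Cloud Storage buckets for the warehouse directory. By contrast, the Hive warehouse directory of an on-premises HMS typically points to a local directory.

This bucket is created for you automatically each time you create a Dataproc Metastore service. You can change the value by setting a Hive Metastore configuration override on the hive.metastore.warehouse.dir property.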

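Artifacts Cloud Storage bucket

The artifacts bucket stores your Dataproc Metastore artifacts, such as exported metadata and managed internal table data.

When you create a Dataproc Metastore service, a Cloud Storage bucket is automatically created for you in your project. By default, the artifacts bucket and the warehouse directory point to the same bucket. You can't change the location of the artifacts bucket, but you can change the location of the Hive warehouse directory.

The artifacts bucket is located at the following location:

gs://your-artifacts-bucket/hive-warehouse

For example, gs://gcs-your-project-name-0825d7b3-0627-4637-8fd0-cc6271d00eb4.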

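Access the Hive warehouse directory

After the bucket is automatically created for you, make sure that your Dataproc service account has permission to access the Hive warehouse directory.

To access the warehouse directory at the object level (for example, gs://mybucket/object), use the roles/storage.objectAdmin role to grant the Dataproc service account read and write access to the bucket's storage objects. This role must be set at the bucket level or higher.

To access the warehouse directory when you use a top-level folder (for example, gs://mybucket), use the roles/storage.storageAdmin role to grant the Dataproc service account read and write access to the bucket's storage objects.

If the Hive warehouse directory isn't in the same project as Dataproc Metastore, make sure that the Dataproc Metastore service agent has permission to access the Hive warehouse directory. The service agent for a Dataproc Metastore project is service-PROJECT_NUMBER@gcp-sa-metastore.iam.gserviceaccount.com. Use the roles/storage.objectAdmin role to grant the service agent read and write access to the bucket.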
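As a rough sketch of the cross-project case, the helper below fills in the service agent address pattern given above and builds an IAM-style binding for it. The function names and the project number `123456789012` are hypothetical, and the code only constructs data; it doesn't call any Google Cloud API.

```python
def metastore_service_agent(project_number: int) -> str:
    """Fill PROJECT_NUMBER into the service agent address pattern."""
    return f"service-{project_number}@gcp-sa-metastore.iam.gserviceaccount.com"


def warehouse_binding(project_number: int,
                      role: str = "roles/storage.objectAdmin") -> dict:
    """Build an IAM-policy-style binding that grants `role` to the service agent."""
    member = f"serviceAccount:{metastore_service_agent(project_number)}"
    return {"role": role, "members": [member]}


# Hypothetical project number, for illustration only.
binding = warehouse_binding(123456789012)
print(binding)
```

The resulting dictionary follows the role and members layout of an IAM policy binding, so it can serve as a checklist entry when you review the bucket's policy.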

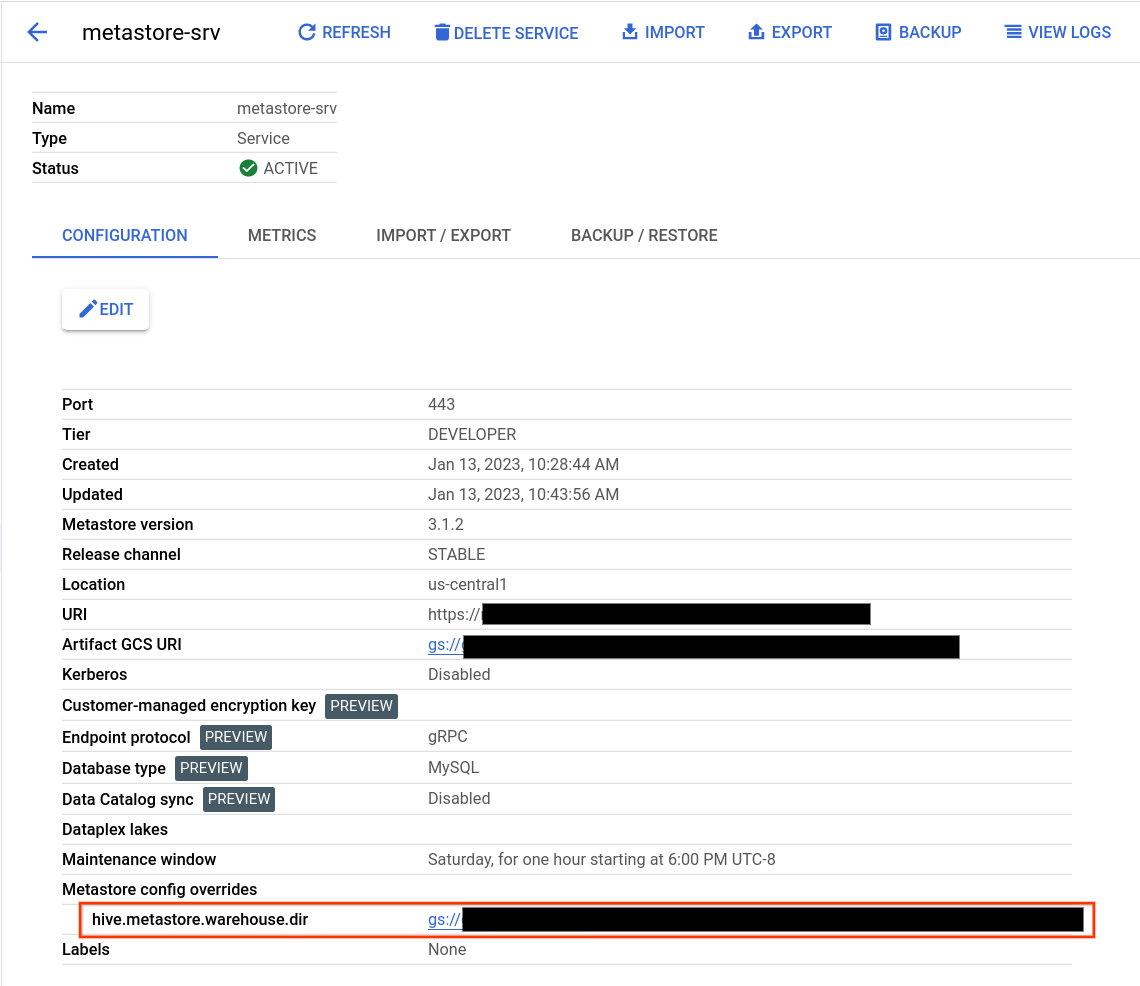

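Find the Hive warehouse directory

- Open the Dataproc Metastore page.

- Click the name of your service. The Service detail page opens.

- In the configuration table, find Metastore config overrides > hive.metastore.warehouse.dir.

- Find the value that starts with gs://. This value is the location of your Hive warehouse directory.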

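Change the Hive warehouse directory

To use your own Cloud Storage bucket with Dataproc Metastore, set a Hive Metastore configuration override that points to the new bucket location.

If you change the default warehouse directory, follow these recommendations.

- Don't use the Cloud Storage bucket root (gs://mybucket) to store Hive tables.

- Make sure that your Dataproc Metastore VM service account has permission to access the Hive warehouse directory.

- For best results, use a Cloud Storage bucket located in the same region as your Dataproc Metastore service. Although Dataproc Metastore allows cross-region buckets, colocated resources perform better. For example, an EU multi-region bucket is a poor fit for a us-central1 service. Cross-region access has higher latency, lacks regional failure isolation, and incurs cross-region network bandwidth charges.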
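The first recommendation can be checked before you apply a new value. The following is a minimal sketch, assuming a hypothetical helper named `check_warehouse_dir`; it only inspects the string and doesn't verify that the bucket exists or which region it is in.

```python
from urllib.parse import urlparse


def check_warehouse_dir(uri: str) -> str:
    """Return a normalized gs:// URI, rejecting non-gs URIs and bucket roots."""
    parsed = urlparse(uri)
    if parsed.scheme != "gs" or not parsed.netloc:
        raise ValueError(f"not a Cloud Storage URI: {uri!r}")
    if not parsed.path.strip("/"):
        # gs://mybucket or gs://mybucket/ is the bucket root.
        raise ValueError(f"bucket root is not recommended for Hive tables: {uri!r}")
    return uri.rstrip("/")


accepted = check_warehouse_dir("gs://my-bucket/path/to/location/")

try:
    check_warehouse_dir("gs://mybucket")
    root_rejected = False
except ValueError:
    root_rejected = True

print(accepted, root_rejected)
```

Rejecting the bucket root early keeps the mistake out of the service configuration instead of surfacing later when tables are created.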

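To change your Hive warehouse directory

- Open the Dataproc Metastore page.

- Click the name of your service. The Service detail page opens.

- In the configuration table, find the Metastore config overrides > hive.metastore.warehouse.dir section.

- Change the hive.metastore.warehouse.dir value to the location of your new bucket, for example gs://my-bucket/path/to/location.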
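The same change can probably be scripted instead of made in the console. The sketch below only assembles a command line and doesn't run it. It assumes that the gcloud CLI accepts `--update-hive-metastore-configs` on `gcloud metastore services update`; confirm this with `gcloud metastore services update --help` before relying on it. The service name `example-service` and the helper `build_update_command` are hypothetical.

```python
import shlex


def build_update_command(service: str, location: str, warehouse_dir: str) -> list:
    """Assemble (but do not execute) a gcloud command that overrides the warehouse dir."""
    override = f"hive.metastore.warehouse.dir={warehouse_dir}"
    return [
        "gcloud", "metastore", "services", "update", service,
        f"--location={location}",
        # Assumed flag name; verify against the gcloud reference before use.
        f"--update-hive-metastore-configs={override}",
    ]


cmd = build_update_command(
    "example-service", "us-central1", "gs://my-bucket/path/to/location"
)
print(shlex.join(cmd))
```

Building the argument list separately from running it lets you review the exact override string before anything changes on the service.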

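Delete your bucket

Deleting a Dataproc Metastore service doesn't automatically delete your Cloud Storage artifacts bucket, because the bucket might contain useful post-service data. To delete the bucket, run a Cloud Storage delete operation.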