Step 3: Determine integration mechanism

This page describes the third step to deploy Cortex Framework Data Foundation, the core of Cortex Framework. In this step, you configure the integration with your chosen data source. If you are using sample data, skip this step.

Integration overview

Cortex Framework helps you centralize data from various sources and platforms, creating a single source of truth for your data. Cortex Data Foundation integrates with each data source differently, but most integrations follow a similar procedure:

- Source to Raw layer: Ingest data from the data source into the raw dataset using APIs. This is achieved by using Dataflow pipelines triggered through Cloud Composer DAGs.

- Raw layer to CDC layer: Apply CDC processing to the raw dataset and store the output in the CDC dataset. This is accomplished by Cloud Composer DAGs that run BigQuery SQL statements.

- CDC layer to Reporting layer: Create the final reporting tables in the Reporting dataset from the CDC tables. Depending on the configuration, this is accomplished either by creating runtime views on top of the CDC tables or by running Cloud Composer DAGs that materialize the data in BigQuery tables. For more information about configuration, see Customizing reporting settings file.
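The three layers above can be illustrated with a small in-memory sketch. This is not how Cortex Framework implements CDC (that work is done by BigQuery SQL run from Cloud Composer DAGs); it only shows the idea of collapsing a raw change log into current-state rows and then reporting on them. The table shape and the field names `order_id`, `amount`, `operation_flag`, and `recordstamp` are assumptions made for this example.

```python
# Hypothetical raw layer: every change from the source lands as a new row.
# operation_flag: "I" = insert, "U" = update, "D" = delete (assumed convention).
RAW_ROWS = [
    {"order_id": 1, "amount": 100, "operation_flag": "I", "recordstamp": 1},
    {"order_id": 1, "amount": 120, "operation_flag": "U", "recordstamp": 2},
    {"order_id": 2, "amount": 50, "operation_flag": "I", "recordstamp": 1},
    {"order_id": 2, "amount": 50, "operation_flag": "D", "recordstamp": 3},
    {"order_id": 3, "amount": 75, "operation_flag": "I", "recordstamp": 2},
]


def apply_cdc(raw_rows, key="order_id"):
    """Raw layer to CDC layer: keep the latest change per key, drop deleted keys."""
    latest = {}
    for row in raw_rows:
        current = latest.get(row[key])
        if current is None or row["recordstamp"] > current["recordstamp"]:
            latest[row[key]] = row
    survivors = [r for r in latest.values() if r["operation_flag"] != "D"]
    return sorted(survivors, key=lambda r: r[key])


def build_report(cdc_rows):
    """CDC layer to Reporting layer: a simple aggregate, standing in for a reporting view."""
    return {
        "order_count": len(cdc_rows),
        "total_amount": sum(r["amount"] for r in cdc_rows),
    }


cdc_rows = apply_cdc(RAW_ROWS)
report = build_report(cdc_rows)
print(cdc_rows)
print(report)
```

In this toy data, order 1 keeps only its updated amount, order 2 disappears because its latest change is a delete, and the report is computed from the remaining rows.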

The config.json file configures the settings required to connect to data sources for transferring data from various workloads. See the integration options for each data source in the following resources.

- Operational:

- Marketing:

- Sustainability:
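As a rough illustration of how workload settings in config.json might be inspected before choosing an integration option, the following sketch parses a small excerpt and lists the enabled workloads. The key names shown (`testData`, `deploySAP`, `deploySFDC`, `deployMarketing`) are assumptions for this example; check the config.json in your copy of the repository for the actual keys and structure.

```python
import json

# Hypothetical excerpt of a config.json file. Key names are assumptions
# made for illustration and may not match your repository version.
SAMPLE_CONFIG = """
{
  "testData": false,
  "deploySAP": true,
  "deploySFDC": false,
  "deployMarketing": true
}
"""


def enabled_workloads(config, prefix="deploy"):
    """Return the names of workloads whose deploy flag is set to true."""
    return sorted(
        key[len(prefix):]
        for key, value in config.items()
        if key.startswith(prefix) and value is True
    )


config = json.loads(SAMPLE_CONFIG)
workloads = enabled_workloads(config)

# When sample (test) data is used, this integration step can be skipped.
needs_integration = not config["testData"]

print(workloads)
print(needs_integration)
```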

For more information about the Entity-Relationship Diagrams that each data source supports, see the docs folder in the Cortex Framework Data Foundation repository.

K9 deployment

The K9 deployer simplifies the integration of diverse data sources. It is a predefined dataset within the BigQuery environment that is responsible for ingesting, processing, and modeling components that are reusable across different data sources.

For example, the time dimension is reusable across all data sources where tables might need analytical results based on a Gregorian calendar. The K9 deployer combines external data, such as weather or Google Trends, with other data sources (for example, SAP, Salesforce, and Marketing). This enriched dataset enables deeper insights and more comprehensive analysis.

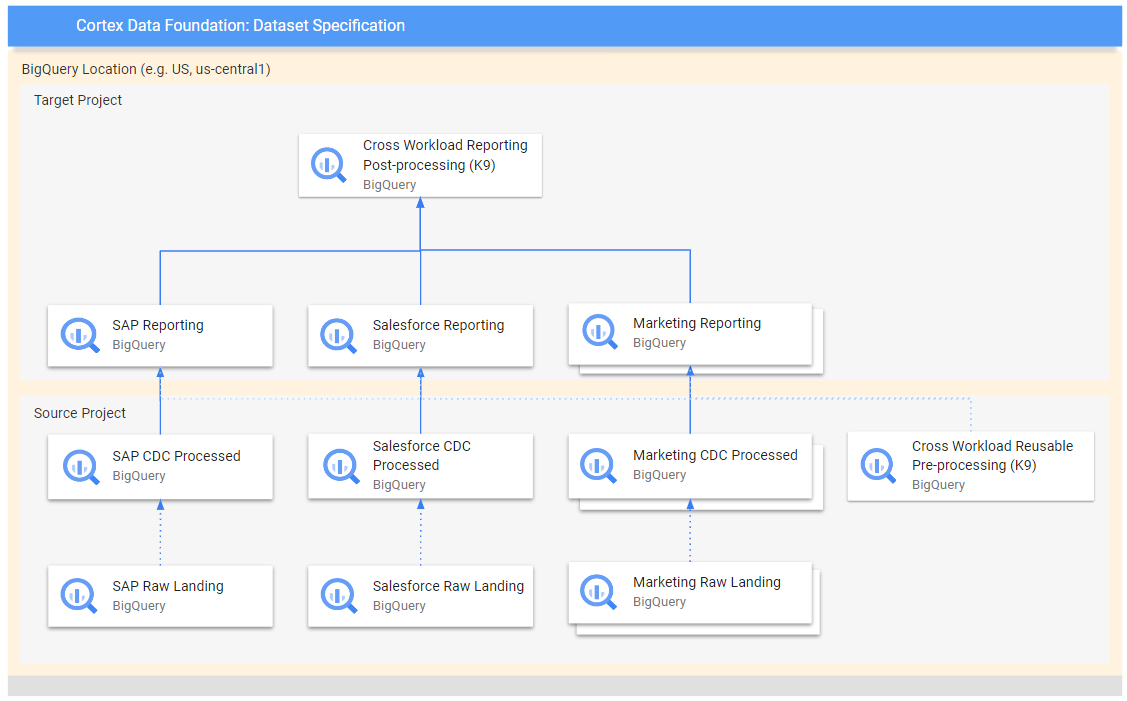

The following diagram shows the flow of data from different raw sources to various reporting layers:

In the diagram, the source project contains the raw data from the chosen data sources (SAP, Salesforce, and Marketing), while the target project contains processed data derived from the Change Data Capture (CDC) process.

The pre-processing K9 step runs before all workloads start their deployment, so the reusable models are available during their deployment. This step transforms data from various sources to create a consistent and reusable dataset.

The post-processing K9 step runs after all workloads have deployed their reporting models. This allows cross-workload reporting models, or models that augment existing ones, to find their necessary dependencies within each individual reporting dataset.
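The ordering described above can be summarized in a short sketch. The step names and the `deployment_order` helper are hypothetical and exist only to show the sequence: K9 pre-processing first, then the workloads, then K9 post-processing. The sketch lists workloads one after another for simplicity and says nothing about how the actual deployer schedules them.

```python
def deployment_order(workloads):
    """Return the deployment steps in order, with K9 steps wrapping the workloads."""
    steps = ["k9_pre_processing"]  # reusable models must exist before any workload deploys
    steps.extend(f"workload:{name}" for name in workloads)
    steps.append("k9_post_processing")  # cross-workload models need all reporting datasets
    return steps


order = deployment_order(["SAP", "Salesforce", "Marketing"])
for step in order:
    print(step)
```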

Configure the K9 deployment

Configure the Directed Acyclic Graphs (DAGs) and models to be generated in the K9 manifest file.

The K9 pre-processing step is important because it ensures that all workloads within the data pipeline have access to consistently prepared data. This reduces redundancy and ensures data consistency.

For more information about how to configure external datasets for K9, see Configure external datasets for K9.

Next steps

After you complete this step, move on to the following deployment steps:

- Establish workloads.

- Clone repository.

- Determine integration mechanism (this page).

- Set up components.

- Configure deployment.

- Execute deployment.