Chirp 3 is the latest generation of Google's multilingual Automatic Speech Recognition (ASR)-specific generative models, designed to meet user needs based on feedback and experience. Chirp 3 provides enhanced accuracy and speed beyond previous Chirp models and provides diarization and automatic language detection.

Model details

Chirp 3: Transcription is available exclusively in the Speech-to-Text API V2.

Model identifiers

You can use Chirp 3: Transcription just like any other model by specifying the appropriate model identifier in your recognition request when using the API, or the model name when using the Google Cloud console.

| Model | Model identifier |

|---|---|

| Chirp 3 | chirp_3 |

API methods

Because Chirp 3 is available only in the Speech-to-Text API V2, it supports the following recognition methods. Not all recognition methods support the same set of languages.

| API version | API method | Support |

|---|---|---|

| V2 | Speech.StreamingRecognize (good for streaming and real-time audio) | Supported |

| V2 | Speech.Recognize (good for audio shorter than one minute) | Supported |

| V2 | Speech.BatchRecognize (good for long audio 1 minute to 1 hour) | Supported |
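The guidance in the table above can be sketched as a small selection helper. This is an illustrative sketch only: the function name `choose_recognition_method` and its parameters are hypothetical and not part of the Speech-to-Text client library, and the duration boundaries are taken directly from the table.

```python
def choose_recognition_method(duration_seconds: float, realtime: bool = False) -> str:
    """Return the V2 method name suggested by the table for a given audio length.

    Hypothetical helper: the thresholds mirror the table (under one minute,
    one minute to one hour) and are not enforced by this code against the API.
    """
    if duration_seconds < 0:
        raise ValueError("duration_seconds must be non-negative")
    if realtime:
        # Streaming and real-time audio
        return "StreamingRecognize"
    if duration_seconds < 60:
        # Audio shorter than one minute
        return "Recognize"
    if duration_seconds <= 3600:
        # Long audio, one minute to one hour
        return "BatchRecognize"
    raise ValueError("audio longer than one hour is outside the ranges in the table")


print(choose_recognition_method(30))
print(choose_recognition_method(600))
print(choose_recognition_method(5, realtime=True))
```

Audio longer than one hour raises an error here because the table doesn't list a method for it.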

Regional availability

Chirp 3 is available in the following Google Cloud regions, with more planned:

| Google Cloud region | Launch Readiness |
|---|---|
| us (multi-region) | GA |
| eu (multi-region) | GA |
| asia-northeast1 | GA |
| asia-southeast1 | GA |
| asia-south1 | Preview |
| europe-west2 | Preview |
| europe-west3 | Preview |
| northamerica-northeast1 | Preview |

To find the latest list of supported Google Cloud regions, languages and locales, and features for each transcription model, use the locations API.
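As a rough sketch, the region list above can be turned into a lookup that builds the regional endpoint and recognizer path in the same form the code samples on this page use. The dictionary is a static snapshot of the table, not a replacement for the locations API, and the names `CHIRP3_REGIONS`, `speech_endpoint`, and `recognizer_path` are hypothetical.

```python
# Snapshot of the regional availability table; it can go stale.
CHIRP3_REGIONS = {
    "us": "GA",
    "eu": "GA",
    "asia-northeast1": "GA",
    "asia-southeast1": "GA",
    "asia-south1": "Preview",
    "europe-west2": "Preview",
    "europe-west3": "Preview",
    "northamerica-northeast1": "Preview",
}


def speech_endpoint(region: str) -> str:
    """Build the regional API endpoint, rejecting regions missing from the snapshot."""
    if region not in CHIRP3_REGIONS:
        raise ValueError(f"{region!r} is not in the Chirp 3 region snapshot")
    return f"{region}-speech.googleapis.com"


def recognizer_path(project_id: str, region: str) -> str:
    """Build the recognizer resource path used in the recognition requests."""
    if region not in CHIRP3_REGIONS:
        raise ValueError(f"{region!r} is not in the Chirp 3 region snapshot")
    return f"projects/{project_id}/locations/{region}/recognizers/_"


print(speech_endpoint("us"))
print(recognizer_path("my-project", "eu"))
print(CHIRP3_REGIONS["asia-south1"])
```

Here `my-project` is a placeholder project ID.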

Language availability for transcription

Chirp 3 supports transcription in StreamingRecognize, Recognize, and BatchRecognize in the following languages:

| Language | BCP-47 Code | Launch Readiness |

|---|---|---|

| Catalan (Spain) | ca-ES | GA |

| Chinese (Simplified, China) | cmn-Hans-CN | GA |

| Croatian (Croatia) | hr-HR | GA |

| Danish (Denmark) | da-DK | GA |

| Dutch (Netherlands) | nl-NL | GA |

| English (Australia) | en-AU | GA |

| English (United Kingdom) | en-GB | GA |

| English (India) | en-IN | GA |

| English (United States) | en-US | GA |

| Finnish (Finland) | fi-FI | GA |

| French (Canada) | fr-CA | GA |

| French (France) | fr-FR | GA |

| German (Germany) | de-DE | GA |

| Greek (Greece) | el-GR | GA |

| Hindi (India) | hi-IN | GA |

| Italian (Italy) | it-IT | GA |

| Japanese (Japan) | ja-JP | GA |

| Korean (Korea) | ko-KR | GA |

| Polish (Poland) | pl-PL | GA |

| Portuguese (Brazil) | pt-BR | GA |

| Portuguese (Portugal) | pt-PT | GA |

| Romanian (Romania) | ro-RO | GA |

| Russian (Russia) | ru-RU | GA |

| Spanish (Spain) | es-ES | GA |

| Spanish (United States) | es-US | GA |

| Swedish (Sweden) | sv-SE | GA |

| Turkish (Turkey) | tr-TR | GA |

| Ukrainian (Ukraine) | uk-UA | GA |

| Vietnamese (Vietnam) | vi-VN | GA |

| Arabic | ar-XA | Preview |

| Arabic (Algeria) | ar-DZ | Preview |

| Arabic (Bahrain) | ar-BH | Preview |

| Arabic (Egypt) | ar-EG | Preview |

| Arabic (Israel) | ar-IL | Preview |

| Arabic (Jordan) | ar-JO | Preview |

| Arabic (Kuwait) | ar-KW | Preview |

| Arabic (Lebanon) | ar-LB | Preview |

| Arabic (Mauritania) | ar-MR | Preview |

| Arabic (Morocco) | ar-MA | Preview |

| Arabic (Oman) | ar-OM | Preview |

| Arabic (Qatar) | ar-QA | Preview |

| Arabic (Saudi Arabia) | ar-SA | Preview |

| Arabic (State of Palestine) | ar-PS | Preview |

| Arabic (Syria) | ar-SY | Preview |

| Arabic (Tunisia) | ar-TN | Preview |

| Arabic (United Arab Emirates) | ar-AE | Preview |

| Arabic (Yemen) | ar-YE | Preview |

| Armenian (Armenia) | hy-AM | Preview |

| Bengali (Bangladesh) | bn-BD | Preview |

| Bengali (India) | bn-IN | Preview |

| Bulgarian (Bulgaria) | bg-BG | Preview |

| Burmese (Myanmar) | my-MM | Preview |

| Central Kurdish (Iraq) | ar-IQ | Preview |

| Chinese, Cantonese (Traditional, Hong Kong) | yue-Hant-HK | Preview |

| Chinese, Mandarin (Traditional, Taiwan) | cmn-Hant-TW | Preview |

| Czech (Czech Republic) | cs-CZ | Preview |

| English (Philippines) | en-PH | Preview |

| Estonian (Estonia) | et-EE | Preview |

| Filipino (Philippines) | fil-PH | Preview |

| Gujarati (India) | gu-IN | Preview |

| Hebrew (Israel) | iw-IL | Preview |

| Hungarian (Hungary) | hu-HU | Preview |

| Indonesian (Indonesia) | id-ID | Preview |

| Kannada (India) | kn-IN | Preview |

| Khmer (Cambodia) | km-KH | Preview |

| Lao (Laos) | lo-LA | Preview |

| Latvian (Latvia) | lv-LV | Preview |

| Lithuanian (Lithuania) | lt-LT | Preview |

| Malay (Malaysia) | ms-MY | Preview |

| Malayalam (India) | ml-IN | Preview |

| Marathi (India) | mr-IN | Preview |

| Nepali (Nepal) | ne-NP | Preview |

| Norwegian (Norway) | no-NO | Preview |

| Persian (Iran) | fa-IR | Preview |

| Serbian (Serbia) | sr-RS | Preview |

| Slovak (Slovakia) | sk-SK | Preview |

| Slovenian (Slovenia) | sl-SI | Preview |

| Spanish (Mexico) | es-MX | Preview |

| Swahili | sw | Preview |

| Tamil (India) | ta-IN | Preview |

| Telugu (India) | te-IN | Preview |

| Thai (Thailand) | th-TH | Preview |

| Uzbek (Uzbekistan) | uz-UZ | Preview |

Language availability for diarization

Chirp 3 supports transcription and diarization only in BatchRecognize and Recognize in the following languages:

| Language | BCP-47 Code |

|---|---|

| Chinese (Simplified, China) | cmn-Hans-CN |

| German (Germany) | de-DE |

| English (United Kingdom) | en-GB |

| English (India) | en-IN |

| English (United States) | en-US |

| Spanish (Spain) | es-ES |

| Spanish (United States) | es-US |

| French (Canada) | fr-CA |

| French (France) | fr-FR |

| Hindi (India) | hi-IN |

| Italian (Italy) | it-IT |

| Japanese (Japan) | ja-JP |

| Korean (Korea) | ko-KR |

| Portuguese (Brazil) | pt-BR |
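Before enabling diarization, a request's language codes can be checked against the table above. The following is a minimal sketch: the names `DIARIZATION_LANGUAGES` and `unsupported_for_diarization` are hypothetical, the set is a snapshot of the table, and the value `"auto"` is reported as unlisted simply because the detected language isn't known ahead of time.

```python
# Snapshot of the diarization language table (BCP-47 codes).
DIARIZATION_LANGUAGES = frozenset({
    "cmn-Hans-CN", "de-DE", "en-GB", "en-IN", "en-US", "es-ES", "es-US",
    "fr-CA", "fr-FR", "hi-IN", "it-IT", "ja-JP", "ko-KR", "pt-BR",
})


def unsupported_for_diarization(language_codes: list[str]) -> list[str]:
    """Return the codes that do not appear in the diarization table, in input order."""
    return [code for code in language_codes if code not in DIARIZATION_LANGUAGES]


print(unsupported_for_diarization(["en-US", "fr-FR"]))
print(unsupported_for_diarization(["en-US", "sv-SE"]))
```

An empty list means every requested code appears in the table.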

Feature support and limitations

Chirp 3 supports the following features:

| Feature | Description | Launch stage |

|---|---|---|

| Automatic punctuation | Automatically generated by the model and can be optionally disabled. | GA |

| Automatic capitalization | Automatically generated by the model and can be optionally disabled. | GA |

| Utterance-level Timestamps | Automatically generated by the model. | GA |

| Speaker Diarization | Automatically identifies the different speakers in a single-channel audio sample. Available only in BatchRecognize. | GA |

| Speech adaptation (Biasing) | Provides hints to the model in the form of phrases or words to improve recognition accuracy for specific terms or proper nouns. | GA |

| Language-agnostic audio transcription | Automatically infers and transcribes in the most prevalent language. | GA |

Chirp 3 doesn't support the following features:

| Feature | Description |

|---|---|

| Word-level Timestamps | Automatically generated by the model and can be optionally enabled, with some transcription degradation expected. |

| Word-level confidence scores | The API returns a value, but it isn't truly a confidence score. |

Transcribe using Chirp 3

Discover how to use Chirp 3 for transcription tasks.

Perform streaming speech recognition

Python

```python
import os
from typing import Iterable, Iterator, List

from google.cloud.speech_v2 import SpeechClient
from google.cloud.speech_v2.types import cloud_speech
from google.api_core.client_options import ClientOptions

PROJECT_ID = os.getenv("GOOGLE_CLOUD_PROJECT")
REGION = "us"


def transcribe_streaming_chirp3(
    audio_file: str,
) -> List[cloud_speech.StreamingRecognizeResponse]:
    """Transcribes audio from an audio file stream using the Chirp 3 model of the
    Google Cloud Speech-to-Text V2 API.

    Args:
        audio_file (str): Path to the local audio file to be transcribed.
            Example: "resources/audio.wav"

    Returns:
        List[cloud_speech.StreamingRecognizeResponse]: The responses from the
        Speech-to-Text API V2 containing the transcription results.
    """
    # Instantiates a client
    client = SpeechClient(
        client_options=ClientOptions(
            api_endpoint=f"{REGION}-speech.googleapis.com",
        )
    )

    # Reads a file as bytes
    with open(audio_file, "rb") as f:
        content = f.read()

    # In practice, stream should be a generator yielding chunks of audio data
    chunk_length = max(1, len(content) // 5)  # Avoids a zero step for tiny files
    stream = [
        content[start : start + chunk_length]
        for start in range(0, len(content), chunk_length)
    ]
    audio_requests = (
        cloud_speech.StreamingRecognizeRequest(audio=audio) for audio in stream
    )

    recognition_config = cloud_speech.RecognitionConfig(
        auto_decoding_config=cloud_speech.AutoDetectDecodingConfig(),
        language_codes=["en-US"],
        model="chirp_3",
    )
    streaming_config = cloud_speech.StreamingRecognitionConfig(
        config=recognition_config
    )
    config_request = cloud_speech.StreamingRecognizeRequest(
        recognizer=f"projects/{PROJECT_ID}/locations/{REGION}/recognizers/_",
        streaming_config=streaming_config,
    )

    def requests(
        config: cloud_speech.StreamingRecognizeRequest,
        audio: Iterable[cloud_speech.StreamingRecognizeRequest],
    ) -> Iterator[cloud_speech.StreamingRecognizeRequest]:
        # The first request carries the configuration; the rest carry audio
        yield config
        yield from audio

    # Transcribes the audio into text
    responses_iterator = client.streaming_recognize(
        requests=requests(config_request, audio_requests)
    )
    responses = []
    for response in responses_iterator:
        responses.append(response)
        for result in response.results:
            print(f"Transcript: {result.alternatives[0].transcript}")

    return responses
```
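The streaming sample notes that, in practice, the stream should be a generator yielding chunks of audio data. A minimal sketch of such a generator follows. The name `iter_audio_chunks` and the default chunk size are illustrative assumptions, so check the API's documented request-size limits before choosing a value. Each yielded chunk would be wrapped in a `StreamingRecognizeRequest(audio=...)` as in the sample.

```python
import io
from typing import BinaryIO, Iterator


def iter_audio_chunks(stream: BinaryIO, chunk_size: int = 8192) -> Iterator[bytes]:
    """Lazily yield fixed-size chunks from a binary stream until it is exhausted.

    chunk_size is an arbitrary illustrative default, not an API requirement.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        yield chunk


# Usage with an in-memory stand-in for an audio file or microphone buffer.
fake_audio = io.BytesIO(b"abcdefghij")
print(list(iter_audio_chunks(fake_audio, chunk_size=4)))
```

Unlike the list comprehension in the sample, this reads only one chunk at a time, so the whole file is never held in memory.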

Perform synchronous speech recognition

Python

```python
import os

from google.cloud.speech_v2 import SpeechClient
from google.cloud.speech_v2.types import cloud_speech
from google.api_core.client_options import ClientOptions

PROJECT_ID = os.getenv("GOOGLE_CLOUD_PROJECT")
REGION = "us"


def transcribe_sync_chirp3(
    audio_file: str,
) -> cloud_speech.RecognizeResponse:
    """Transcribes an audio file using the Chirp 3 model of the Google Cloud
    Speech-to-Text V2 API.

    Args:
        audio_file (str): Path to the local audio file to be transcribed.
            Example: "resources/audio.wav"

    Returns:
        cloud_speech.RecognizeResponse: The response from the Speech-to-Text API
        containing the transcription results.
    """
    # Instantiates a client
    client = SpeechClient(
        client_options=ClientOptions(
            api_endpoint=f"{REGION}-speech.googleapis.com",
        )
    )

    # Reads a file as bytes
    with open(audio_file, "rb") as f:
        audio_content = f.read()

    config = cloud_speech.RecognitionConfig(
        auto_decoding_config=cloud_speech.AutoDetectDecodingConfig(),
        language_codes=["en-US"],
        model="chirp_3",
    )

    request = cloud_speech.RecognizeRequest(
        recognizer=f"projects/{PROJECT_ID}/locations/{REGION}/recognizers/_",
        config=config,
        content=audio_content,
    )

    # Transcribes the audio into text
    response = client.recognize(request=request)

    for result in response.results:
        print(f"Transcript: {result.alternatives[0].transcript}")

    return response
```

Perform batch speech recognition

Python

```python
import os

from google.cloud.speech_v2 import SpeechClient
from google.cloud.speech_v2.types import cloud_speech
from google.api_core.client_options import ClientOptions

PROJECT_ID = os.getenv("GOOGLE_CLOUD_PROJECT")
REGION = "us"


def transcribe_batch_3(
    audio_uri: str,
) -> cloud_speech.BatchRecognizeResults:
    """Transcribes an audio file from a Google Cloud Storage URI using the Chirp 3
    model of the Google Cloud Speech-to-Text V2 API.

    Args:
        audio_uri (str): The Google Cloud Storage URI of the input audio file.
            E.g., gs://[BUCKET]/[FILE]

    Returns:
        cloud_speech.BatchRecognizeResults: The transcription results for the
        audio file from the Speech-to-Text API.
    """
    # Instantiates a client
    client = SpeechClient(
        client_options=ClientOptions(
            api_endpoint=f"{REGION}-speech.googleapis.com",
        )
    )

    config = cloud_speech.RecognitionConfig(
        auto_decoding_config=cloud_speech.AutoDetectDecodingConfig(),
        language_codes=["en-US"],
        model="chirp_3",
    )

    file_metadata = cloud_speech.BatchRecognizeFileMetadata(uri=audio_uri)

    request = cloud_speech.BatchRecognizeRequest(
        recognizer=f"projects/{PROJECT_ID}/locations/{REGION}/recognizers/_",
        config=config,
        files=[file_metadata],
        # Returns the results inline instead of writing them to Cloud Storage
        recognition_output_config=cloud_speech.RecognitionOutputConfig(
            inline_response_config=cloud_speech.InlineOutputConfig(),
        ),
    )

    # Transcribes the audio into text
    operation = client.batch_recognize(request=request)

    print("Waiting for operation to complete...")
    response = operation.result(timeout=120)

    for result in response.results[audio_uri].transcript.results:
        print(f"Transcript: {result.alternatives[0].transcript}")

    return response.results[audio_uri].transcript
```

Use Chirp 3 Features

Explore how you can use the latest features, with code examples:

Perform a language-agnostic transcription

Chirp 3 can automatically identify and transcribe the dominant language spoken in the audio, which is essential for multilingual applications. To achieve this, set language_codes=["auto"] as indicated in the code example:

Python

```python
import os

from google.cloud.speech_v2 import SpeechClient
from google.cloud.speech_v2.types import cloud_speech
from google.api_core.client_options import ClientOptions

PROJECT_ID = os.getenv("GOOGLE_CLOUD_PROJECT")
REGION = "us"


def transcribe_sync_chirp3_auto_detect_language(
    audio_file: str,
) -> cloud_speech.RecognizeResponse:
    """Transcribes an audio file and auto-detects the spoken language using Chirp 3.

    Please see https://cloud.google.com/speech-to-text/docs/encoding for more
    information on which audio encodings are supported.

    Args:
        audio_file (str): Path to the local audio file to be transcribed.
            Example: "resources/audio.wav"

    Returns:
        cloud_speech.RecognizeResponse: The response from the Speech-to-Text API
        containing the transcription results.
    """
    # Instantiates a client
    client = SpeechClient(
        client_options=ClientOptions(
            api_endpoint=f"{REGION}-speech.googleapis.com",
        )
    )

    # Reads a file as bytes
    with open(audio_file, "rb") as f:
        audio_content = f.read()

    config = cloud_speech.RecognitionConfig(
        auto_decoding_config=cloud_speech.AutoDetectDecodingConfig(),
        language_codes=["auto"],  # Set language code to auto to detect language.
        model="chirp_3",
    )

    request = cloud_speech.RecognizeRequest(
        recognizer=f"projects/{PROJECT_ID}/locations/{REGION}/recognizers/_",
        config=config,
        content=audio_content,
    )

    # Transcribes the audio into text
    response = client.recognize(request=request)

    for result in response.results:
        print(f"Transcript: {result.alternatives[0].transcript}")
        print(f"Detected Language: {result.language_code}")

    return response
```
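Because the sample prints a detected language for each result, it can be handy to tally them across a response. The sketch below assumes only that each result exposes a `language_code` attribute, as the sample above reads. The helper name `count_detected_languages` is hypothetical, and `SimpleNamespace` objects stand in for real API results.

```python
from collections import Counter
from types import SimpleNamespace


def count_detected_languages(results) -> Counter:
    """Count how many results were attributed to each detected language code."""
    return Counter(r.language_code for r in results if r.language_code)


# Stand-in results; a real call would pass response.results instead.
fake_results = [
    SimpleNamespace(language_code="en-US"),
    SimpleNamespace(language_code="fr-FR"),
    SimpleNamespace(language_code="en-US"),
    SimpleNamespace(language_code=""),  # Results without a language are skipped
]
counts = count_detected_languages(fake_results)
print(counts.most_common(1))
```

With the stand-in data, the most common entry is `("en-US", 2)`.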

Perform a language-restricted transcription

Chirp 3 can automatically identify and transcribe the dominant language in an audio file. You can also condition it on the specific locales you expect, for example ["en-US", "fr-FR"], which focuses the model's resources on the most probable languages for more reliable results, as demonstrated in the code example:

Python

```python
import os

from google.cloud.speech_v2 import SpeechClient
from google.cloud.speech_v2.types import cloud_speech
from google.api_core.client_options import ClientOptions

PROJECT_ID = os.getenv("GOOGLE_CLOUD_PROJECT")
REGION = "us"


def transcribe_sync_3_auto_detect_language(
    audio_file: str,
) -> cloud_speech.RecognizeResponse:
    """Transcribes an audio file using Chirp 3, restricting language detection
    to the expected locales.

    Please see https://cloud.google.com/speech-to-text/docs/encoding for more
    information on which audio encodings are supported.

    Args:
        audio_file (str): Path to the local audio file to be transcribed.
            Example: "resources/audio.wav"

    Returns:
        cloud_speech.RecognizeResponse: The response from the Speech-to-Text API
        containing the transcription results.
    """
    # Instantiates a client
    client = SpeechClient(
        client_options=ClientOptions(
            api_endpoint=f"{REGION}-speech.googleapis.com",
        )
    )

    # Reads a file as bytes
    with open(audio_file, "rb") as f:
        audio_content = f.read()

    config = cloud_speech.RecognitionConfig(
        auto_decoding_config=cloud_speech.AutoDetectDecodingConfig(),
        # Set language codes of the expected spoken locales
        language_codes=["en-US", "fr-FR"],
        model="chirp_3",
    )

    request = cloud_speech.RecognizeRequest(
        recognizer=f"projects/{PROJECT_ID}/locations/{REGION}/recognizers/_",
        config=config,
        content=audio_content,
    )

    # Transcribes the audio into text
    response = client.recognize(request=request)

    for result in response.results:
        print(f"Transcript: {result.alternatives[0].transcript}")
        print(f"Detected Language: {result.language_code}")

    return response
```

Perform transcription and speaker diarization

Use Chirp 3 for transcription and diarization tasks.

Python

```python
import os

from google.cloud.speech_v2 import SpeechClient
from google.cloud.speech_v2.types import cloud_speech
from google.api_core.client_options import ClientOptions

PROJECT_ID = os.getenv("GOOGLE_CLOUD_PROJECT")
REGION = "us"


def transcribe_batch_chirp3(
    audio_uri: str,
) -> cloud_speech.BatchRecognizeResults:
    """Transcribes and diarizes an audio file from a Google Cloud Storage URI
    using the Chirp 3 model of the Google Cloud Speech-to-Text V2 API.

    Args:
        audio_uri (str): The Google Cloud Storage URI of the input
            audio file. E.g., gs://[BUCKET]/[FILE]

    Returns:
        cloud_speech.BatchRecognizeResults: The transcription results for the
        audio file from the Speech-to-Text API.
    """
    # Instantiates a client.
    client = SpeechClient(
        client_options=ClientOptions(
            api_endpoint=f"{REGION}-speech.googleapis.com",
        )
    )

    config = cloud_speech.RecognitionConfig(
        auto_decoding_config=cloud_speech.AutoDetectDecodingConfig(),
        language_codes=["en-US"],  # Use "auto" to detect language.
        model="chirp_3",
        features=cloud_speech.RecognitionFeatures(
            # Enable diarization by setting empty diarization configuration.
            diarization_config=cloud_speech.SpeakerDiarizationConfig(),
        ),
    )

    file_metadata = cloud_speech.BatchRecognizeFileMetadata(uri=audio_uri)

    request = cloud_speech.BatchRecognizeRequest(
        recognizer=f"projects/{PROJECT_ID}/locations/{REGION}/recognizers/_",
        config=config,
        files=[file_metadata],
        recognition_output_config=cloud_speech.RecognitionOutputConfig(
            inline_response_config=cloud_speech.InlineOutputConfig(),
        ),
    )

    # Creates audio transcription job.
    operation = client.batch_recognize(request=request)

    print("Waiting for transcription job to complete...")
    response = operation.result(timeout=120)

    for result in response.results[audio_uri].transcript.results:
        print(f"Transcript: {result.alternatives[0].transcript}")
        print(f"Detected Language: {result.language_code}")
        print(f"Speakers per word: {result.alternatives[0].words}")

    return response.results[audio_uri].transcript
```
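The sample prints the raw per-word list, which is hard to read for longer audio. One way to make it readable is to merge consecutive words from the same speaker into turns. This is a sketch under the assumption that each word object carries `word` and `speaker_label` attributes, as the V2 `WordInfo` message defines them. The helper name `speaker_turns` is hypothetical, and the stand-in objects below replace real API output.

```python
from itertools import groupby
from types import SimpleNamespace


def speaker_turns(words) -> list[tuple[str, str]]:
    """Merge consecutive words that share a speaker label into (speaker, text) turns."""
    turns = []
    for label, group in groupby(words, key=lambda w: w.speaker_label):
        turns.append((label, " ".join(w.word for w in group)))
    return turns


# Stand-in words; a real call would pass result.alternatives[0].words instead.
fake_words = [
    SimpleNamespace(word="hello", speaker_label="1"),
    SimpleNamespace(word="there", speaker_label="1"),
    SimpleNamespace(word="hi", speaker_label="2"),
    SimpleNamespace(word="how", speaker_label="1"),
    SimpleNamespace(word="are", speaker_label="1"),
    SimpleNamespace(word="you", speaker_label="1"),
]
for speaker, text in speaker_turns(fake_words):
    print(f"Speaker {speaker}: {text}")
```

Grouping only consecutive words keeps the conversation order intact, so a speaker who talks twice appears as two separate turns.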

Improve accuracy with model adaptation

Chirp 3 can improve transcription accuracy for your specific audio using model adaptation. This lets you provide a list of specific words and phrases, increasing the likelihood that the model recognizes them. It's especially useful for domain-specific terms, proper nouns, or unique vocabulary.

Python

```python
import os

from google.cloud.speech_v2 import SpeechClient
from google.cloud.speech_v2.types import cloud_speech
from google.api_core.client_options import ClientOptions

PROJECT_ID = os.getenv("GOOGLE_CLOUD_PROJECT")
REGION = "us"


def transcribe_sync_chirp3_model_adaptation(
    audio_file: str,
) -> cloud_speech.RecognizeResponse:
    """Transcribes an audio file using the Chirp 3 model with adaptation,
    improving accuracy for specific audio characteristics or vocabulary.

    Args:
        audio_file (str): Path to the local audio file to be transcribed.
            Example: "resources/audio.wav"

    Returns:
        cloud_speech.RecognizeResponse: The response from the Speech-to-Text API
        containing the transcription results.
    """
    # Instantiates a client
    client = SpeechClient(
        client_options=ClientOptions(
            api_endpoint=f"{REGION}-speech.googleapis.com",
        )
    )

    # Reads a file as bytes
    with open(audio_file, "rb") as f:
        audio_content = f.read()

    config = cloud_speech.RecognitionConfig(
        auto_decoding_config=cloud_speech.AutoDetectDecodingConfig(),
        language_codes=["en-US"],
        model="chirp_3",
        # Use model adaptation
        adaptation=cloud_speech.SpeechAdaptation(
            phrase_sets=[
                cloud_speech.SpeechAdaptation.AdaptationPhraseSet(
                    inline_phrase_set=cloud_speech.PhraseSet(
                        phrases=[
                            {"value": "alphabet"},
                            {"value": "cell phone service"},
                        ]
                    )
                )
            ]
        ),
    )

    request = cloud_speech.RecognizeRequest(
        recognizer=f"projects/{PROJECT_ID}/locations/{REGION}/recognizers/_",
        config=config,
        content=audio_content,
    )

    # Transcribes the audio into text
    response = client.recognize(request=request)

    for result in response.results:
        print(f"Transcript: {result.alternatives[0].transcript}")

    return response
```
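When the vocabulary comes from a glossary or product catalog rather than being hard-coded, the inline phrase list can be generated. The sketch below builds the same `{"value": ...}` dictionaries that the sample passes to `PhraseSet(phrases=...)`. The helper name `build_inline_phrases` is hypothetical, and the whitespace trimming and case-insensitive deduplication are illustrative choices rather than API requirements.

```python
def build_inline_phrases(terms: list[str]) -> list[dict[str, str]]:
    """Turn raw terms into phrase dictionaries, dropping blanks and duplicates."""
    seen = set()
    phrases = []
    for term in terms:
        cleaned = term.strip()
        key = cleaned.lower()
        if not cleaned or key in seen:
            continue  # Skip empty entries and case-insensitive repeats
        seen.add(key)
        phrases.append({"value": cleaned})
    return phrases


print(build_inline_phrases(["alphabet", " cell phone service ", "Alphabet", ""]))
```

The returned list can be passed as the `phrases` argument in the sample above.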

Enable denoiser and SNR-filtering

Chirp 3 can enhance audio quality by reducing background noise and filtering out unwanted sounds before transcription. You can improve results from noisy environments by enabling the built-in denoiser and Signal-to-Noise Ratio (SNR) filtering.

Setting denoise_audio=true can help reduce background music or noises like rain and street traffic.

You can set snr_threshold=X to control the minimum loudness of speech, relative to the background noise, that is required for transcription. This helps filter out non-speech audio or background noise, preventing unwanted text in your results. A higher snr_threshold means the user needs to speak louder for the model to transcribe the utterances.

SNR-filtering can be used in real-time streaming use cases to avoid sending unnecessary sounds to a model for transcription.

The snr_threshold setting interacts with the denoise_audio setting. When denoise_audio=true, background noise is removed and speech becomes relatively clearer, so the overall SNR of the audio goes up.

If your use case involves only the user's voice without others speaking, set denoise_audio=true to increase the sensitivity of SNR-filtering, which can filter out non-speech noise. If your use case involves people speaking in the background and you want to avoid transcribing background speech, consider setting denoise_audio=false and lowering the SNR threshold.

The following are recommended SNR threshold values. A reasonable snr_threshold value can be set from 0 to 1000. A value of 0 means don't filter anything, and 1000 means filter everything. Fine-tune the value if the recommended setting doesn't work for you.

| Denoise audio | SNR threshold | Speech sensitivity |

|---|---|---|

| true | 10.0 | high |

| true | 20.0 | medium |

| true | 40.0 | low |

| true | 100.0 | very low |

| false | 0.5 | high |

| false | 1.0 | medium |

| false | 2.0 | low |

| false | 5.0 | very low |

Python

```python
import os

from google.cloud.speech_v2 import SpeechClient
from google.cloud.speech_v2.types import cloud_speech
from google.api_core.client_options import ClientOptions

PROJECT_ID = os.getenv("GOOGLE_CLOUD_PROJECT")
REGION = "us"


def transcribe_sync_chirp3_with_denoiser(
    audio_file: str,
) -> cloud_speech.RecognizeResponse:
    """Transcribes an audio file using the Chirp 3 model of the Google Cloud
    Speech-to-Text V2 API, with the denoiser and SNR-filtering enabled.

    Args:
        audio_file (str): Path to the local audio file to be transcribed.
            Example: "resources/audio.wav"

    Returns:
        cloud_speech.RecognizeResponse: The response from the Speech-to-Text API
        containing the transcription results.
    """
    # Instantiates a client
    client = SpeechClient(
        client_options=ClientOptions(
            api_endpoint=f"{REGION}-speech.googleapis.com",
        )
    )

    # Reads a file as bytes
    with open(audio_file, "rb") as f:
        audio_content = f.read()

    config = cloud_speech.RecognitionConfig(
        auto_decoding_config=cloud_speech.AutoDetectDecodingConfig(),
        language_codes=["en-US"],
        model="chirp_3",
        # Dictionary keys must be quoted strings
        denoiser_config={
            "denoise_audio": True,
            # Medium snr threshold
            "snr_threshold": 20.0,
        },
    )

    request = cloud_speech.RecognizeRequest(
        recognizer=f"projects/{PROJECT_ID}/locations/{REGION}/recognizers/_",
        config=config,
        content=audio_content,
    )

    # Transcribes the audio into text
    response = client.recognize(request=request)

    for result in response.results:
        print(f"Transcript: {result.alternatives[0].transcript}")

    return response
```
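The recommended values from the table earlier in this section can be captured as a lookup, so that callers choose a sensitivity level instead of a raw number. This is a minimal sketch: the names `RECOMMENDED_SNR` and `denoiser_settings` are hypothetical, and the returned dictionary simply mirrors the `denoise_audio` and `snr_threshold` keys used in the sample.

```python
# (denoise_audio, speech sensitivity) -> recommended snr_threshold, from the table.
RECOMMENDED_SNR = {
    (True, "high"): 10.0,
    (True, "medium"): 20.0,
    (True, "low"): 40.0,
    (True, "very low"): 100.0,
    (False, "high"): 0.5,
    (False, "medium"): 1.0,
    (False, "low"): 2.0,
    (False, "very low"): 5.0,
}


def denoiser_settings(denoise_audio: bool, sensitivity: str) -> dict:
    """Return a denoiser settings dictionary for the given sensitivity level."""
    try:
        threshold = RECOMMENDED_SNR[(denoise_audio, sensitivity)]
    except KeyError:
        raise ValueError(f"unknown sensitivity: {sensitivity!r}") from None
    return {"denoise_audio": denoise_audio, "snr_threshold": threshold}


print(denoiser_settings(True, "medium"))
print(denoiser_settings(False, "high"))
```

The lookup makes the interaction described above visible: the same sensitivity level maps to a much higher threshold when the denoiser is on.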

Use Chirp 3 in the Google Cloud console

- Sign up for a Google Cloud account, and create a project.

- Go to Speech in the Google Cloud console.

- If the API isn't enabled, enable the API.

Make sure that you have an STT console Workspace. If you don't have a workspace, you must create a workspace.

Go to the transcriptions page, and click New Transcription.

Open the Workspace drop-down and click New Workspace to create a workspace for transcription.

From the Create a new workspace navigation sidebar, click Browse.

Click to create a new bucket.

Enter a name for your bucket and click Continue.

Click Create to create your Cloud Storage bucket.

After the bucket is created, click Select to select your bucket for use.

Click Create to finish creating your workspace for the Speech-to-Text API V2 console.

Perform a transcription on your actual audio.

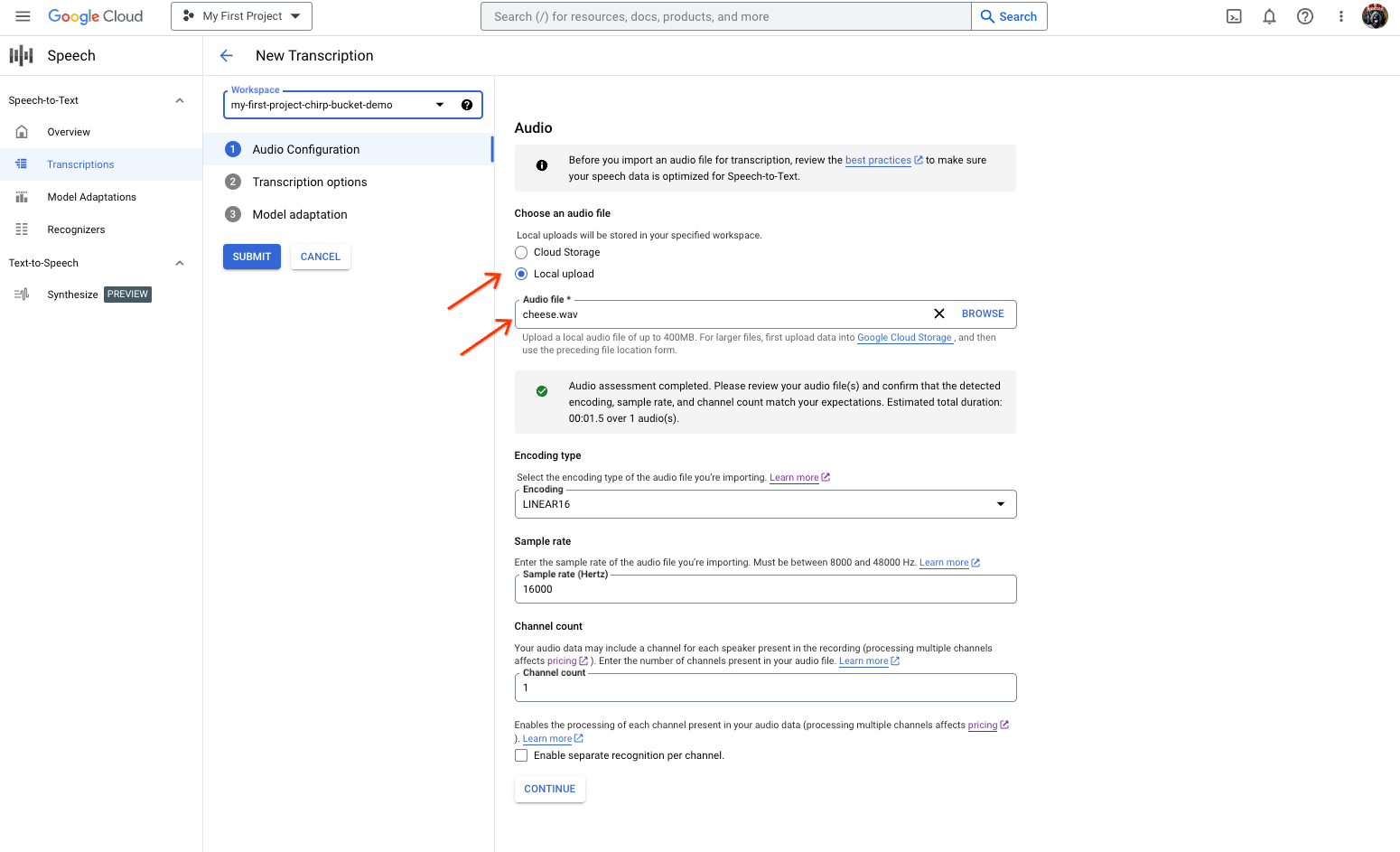

The Speech-to-Text transcription creation page, showing file selection or upload. From the New Transcription page, select your audio file through either upload (Local upload) or specifying an existing Cloud Storage file (Cloud storage).

Click Continue to move to the Transcription options.

Select the Spoken language that you plan to use for recognition with Chirp from your previously created recognizer.

In the model drop-down, select chirp_3.

In the Recognizer drop-down, select your newly created recognizer.

Click Submit to run your first recognition request using

chirp_3.

View your Chirp 3 transcription result.

From the Transcriptions page, click the name of the transcription to view its result.

In the Transcription details page, view your transcription result, and optionally playback the audio in the browser.