This tutorial shows how to use Azure Pipelines, Google Kubernetes Engine (GKE), and Google Container Registry to create a continuous integration/continuous deployment (CI/CD) pipeline for an ASP.NET MVC web application. For the purpose of this tutorial, you can select between two example applications:

- An ASP.NET Core web application that uses .NET 6.0 and runs on Linux

- An ASP.NET MVC web application that uses .NET Framework 4 and runs on Windows

The CI/CD pipeline uses two separate GKE clusters, one for development and one for production, as the following diagram shows.

At the beginning of the pipeline, developers commit changes to the example codebase. This action triggers the pipeline to create a release and to deploy it to the development cluster. A release manager can then promote the release so that it's deployed into the production cluster.

This tutorial is intended for developers and DevOps engineers. It assumes that you have basic knowledge of Microsoft .NET, Azure Pipelines, and GKE. The tutorial also requires you to have administrative access to an Azure DevOps account.

Connecting Azure Pipelines to Google Container Registry

Before you can set up continuous integration for the CloudDemo app, you must connect Azure Pipelines to Container Registry. This connection allows Azure Pipelines to publish container images to Container Registry.

Set up a service account for publishing images

Create a Google Cloud service account in your project:

Open the Google Cloud console.


In the Google Cloud console, activate Cloud Shell.

At the bottom of the Google Cloud console, a Cloud Shell session starts and displays a command-line prompt. Cloud Shell is a shell environment with the Google Cloud CLI already installed and with values already set for your current project. It can take a few seconds for the session to initialize.

To save time typing your project ID and Compute Engine zone options, set default configuration values by running the following commands:

gcloud config set project PROJECT_ID
gcloud config set compute/zone us-central1-a

Replace PROJECT_ID with the project ID of your project.

Enable the Container Registry API in the project:

gcloud services enable containerregistry.googleapis.com

Create a service account that Azure Pipelines uses to publish Docker images:

gcloud iam service-accounts create azure-pipelines-publisher \
    --display-name="Azure Pipelines Publisher"

Grant the Storage Admin IAM role (roles/storage.admin) to the service account to allow Azure Pipelines to push to Container Registry:

AZURE_PIPELINES_PUBLISHER=azure-pipelines-publisher@$GOOGLE_CLOUD_PROJECT.iam.gserviceaccount.com

gcloud projects add-iam-policy-binding $GOOGLE_CLOUD_PROJECT \
    --member serviceAccount:$AZURE_PIPELINES_PUBLISHER \
    --role roles/storage.admin

Generate a service account key:

gcloud iam service-accounts keys create azure-pipelines-publisher.json \
    --iam-account $AZURE_PIPELINES_PUBLISHER

tr -d '\n' < azure-pipelines-publisher.json > azure-pipelines-publisher-oneline.json

View the content of the service account key file:

echo $(<azure-pipelines-publisher-oneline.json)

You need the service account key in one of the following steps.
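The key is flattened to a single line because it is later pasted into a single-line password field. The following is a minimal sketch of that flattening step on a made-up stand-in file. The function name flatten_json and the sample content are assumptions for illustration only and are not part of the tutorial.

```shell
# Sketch only: flatten_json and the sample file are illustrative, not part of the tutorial.

# Remove every newline from a file, as the tutorial's tr command does.
flatten_json() {
  tr -d '\n' < "$1"
}

# A stand-in for a multi-line key file (NOT a real service account key).
sample="$(mktemp)"
printf '{\n  "type": "service_account",\n  "project_id": "example"\n}\n' > "$sample"

flat="$(flatten_json "$sample")"
echo "$flat"

# Count the lines of the flattened text; a single line can be pasted into a one-line field.
line_count="$(printf '%s\n' "$flat" | wc -l | tr -d ' ')"
echo "lines: $line_count"

rm -f "$sample"
```

Removing newlines does not change the meaning of the JSON, because whitespace between JSON tokens is insignificant.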

Create a service connection for Google Container Registry

In Azure Pipelines, create a new service connection for Container Registry:

- In the Azure DevOps menu, select Project settings, and then select Pipelines > Service connections.

- Click Create service connection.

- From the list, select Docker Registry, and then click Next.

- In the dialog, enter values for the following fields:

- Registry type: Others

- Docker Registry: https://gcr.io/PROJECT_ID, replacing PROJECT_ID with the name of your project (for example, https://gcr.io/azure-pipelines-test-project-12345).

- Docker ID: _json_key

- Password: Paste the content of azure-pipelines-publisher-oneline.json.

- Service connection name: gcr-tutorial

- Click Save to create the connection.

Building continuously

You can now use Azure Pipelines to set up continuous integration. For each commit that's pushed to the Git repository, Azure Pipelines builds the code and packages the build artifacts into a Docker container. The container is then published to Container Registry.

The repository already contains the following Dockerfile:

.NET/Linux

.NET Framework/Windows

You now create a new pipeline that uses YAML syntax:

- Using Visual Studio or a command-line git client, clone your new Git repository and check out the main branch.

- In the root of the repository, create a file named azure-pipelines.yml. Copy the following code into the file:

.NET/Linux

resources:
- repo: self
  fetchDepth: 1
pool:
  vmImage: ubuntu-20.04
trigger:
- main
variables:
  TargetFramework: 'net6.0'
  BuildConfiguration: 'Release'
  DockerImageName: 'PROJECT_ID/clouddemo'
steps:
- task: DotNetCoreCLI@2
  displayName: Publish
  inputs:
    projects: 'applications/clouddemo/netcore/CloudDemo.MvcCore.sln'
    publishWebProjects: false
    command: publish
    arguments: '--configuration $(BuildConfiguration) --framework=$(TargetFramework)'
    zipAfterPublish: false
    modifyOutputPath: false
- task: CmdLine@1
  displayName: 'Lock image version in deployment.yaml'
  inputs:
    filename: /bin/bash
    arguments: '-c "awk ''{gsub(\"CLOUDDEMO_IMAGE\", \"gcr.io/$(DockerImageName):$(Build.BuildId)\", $0); print}'' applications/clouddemo/netcore/deployment.yaml > $(build.artifactstagingdirectory)/deployment.yaml"'
- task: PublishBuildArtifacts@1
  displayName: 'Publish Artifact'
  inputs:
    PathtoPublish: '$(build.artifactstagingdirectory)'
- task: Docker@2
  displayName: 'Login to Container Registry'
  inputs:
    command: login
    containerRegistry: 'gcr-tutorial'
- task: Docker@2
  displayName: 'Build and push image'
  inputs:
    Dockerfile: 'applications/clouddemo/netcore/Dockerfile'
    command: buildAndPush
    repository: '$(DockerImageName)'

.NET Framework/Windows

resources:
- repo: self
  fetchDepth: 1
pool:
  vmImage: windows-2019     # Matches WINDOWS_LTSC in GKE
  demands:
  - msbuild
  - visualstudio
trigger:
- main
variables:
  Solution: 'applications/clouddemo/net4/CloudDemo.Mvc.sln'
  BuildPlatform: 'Any CPU'
  BuildConfiguration: 'Release'
  DockerImageName: 'PROJECT_ID/clouddemo'
steps:
- task: NuGetCommand@2
  displayName: 'NuGet restore'
  inputs:
    restoreSolution: '$(Solution)'
- task: VSBuild@1
  displayName: 'Build solution'
  inputs:
    solution: '$(Solution)'
    msbuildArgs: '/p:DeployOnBuild=true /p:PublishProfile=FolderProfile'
    platform: '$(BuildPlatform)'
    configuration: '$(BuildConfiguration)'
- task: PowerShell@2
  displayName: 'Lock image version in deployment.yaml'
  inputs:
    targetType: 'inline'
    script: '(Get-Content applications\clouddemo\net4\deployment.yaml) -replace "CLOUDDEMO_IMAGE","gcr.io/$(DockerImageName):$(Build.BuildId)" | Out-File -Encoding ASCII $(build.artifactstagingdirectory)\deployment.yaml'
- task: PublishBuildArtifacts@1
  displayName: 'Publish Artifact'
  inputs:
    PathtoPublish: '$(build.artifactstagingdirectory)'
- task: Docker@2
  displayName: 'Login to Container Registry'
  inputs:
    command: login
    containerRegistry: 'gcr-tutorial'
- task: Docker@2
  displayName: 'Build and push image'
  inputs:
    Dockerfile: 'applications/clouddemo/net4/Dockerfile'
    command: buildAndPush
    repository: '$(DockerImageName)'

Replace PROJECT_ID with the name of your project, and then save the file.

Commit your changes and push them to Azure Pipelines.

Visual Studio

- Open Team Explorer and click the Home icon.

- Click Changes.

- Enter a commit message like Add pipeline definition.

- Click Commit All and Push.

Command line

Stage all modified files:

git add -A

Commit the changes to the local repository:

git commit -m "Add pipeline definition"

Push the changes to Azure DevOps:

git push

In the Azure DevOps menu, select Pipelines and then click Create Pipeline.

Select Azure Repos Git.

Select your repository.

On the Review your pipeline YAML page, click Run.

A new build is triggered. It might take about 6 minutes for the build to complete.

To verify that the image has been published to Container Registry, switch to your project in the Google Cloud console, select Container Registry > Images, and then click clouddemo.

A single image and the tag of this image are displayed. The tag corresponds to the numeric ID of the build that was run in Azure Pipelines.
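The same check can be done from Cloud Shell. The sketch below shows how the image reference is composed from the project ID and the build ID, and wraps the gcloud command that lists the stored tags. The helper names image_ref and list_build_tags are hypothetical, and 42 stands in for an Azure Pipelines build ID.

```shell
# Sketch only: image_ref and list_build_tags are hypothetical helper names.

# Compose the image reference that the pipeline pushes: gcr.io/PROJECT_ID/clouddemo:BUILD_ID.
image_ref() {
  project_id="$1"
  build_id="$2"
  printf 'gcr.io/%s/clouddemo:%s\n' "$project_id" "$build_id"
}

# List the tags stored for the clouddemo image (requires an authenticated gcloud CLI).
list_build_tags() {
  gcloud container images list-tags "gcr.io/$1/clouddemo" --format='value(tags)'
}

# Example with placeholder values.
image_ref azure-pipelines-test-project-12345 42
```

In Cloud Shell, running list_build_tags "$GOOGLE_CLOUD_PROJECT" should print one tag per published build.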

Deploying continuously

With Azure Pipelines automatically building your code and publishing Docker images for each commit, you can now turn your attention to deployment.

Unlike some other continuous integration systems, Azure Pipelines distinguishes between building and deploying, and it provides a specialized set of tools labeled Release Management for all deployment-related tasks.

Azure Pipelines Release Management is built around these concepts:

- A release refers to a set of artifacts that make up a specific version of your app and that are usually the result of a build process.

- Deployment refers to the process of taking a release and deploying it into a specific environment.

- A deployment performs a set of tasks, which can be grouped in jobs.

- Stages let you segment your pipeline and can be used to orchestrate deployments to multiple environments—for example, development and testing environments.

The primary artifact that the CloudDemo build process produces is the Docker image. However, because the Docker image is published to Container Registry, the image is outside the scope of Azure Pipelines. The image therefore doesn't serve well as the definition of a release.

To deploy to Kubernetes, you also need a manifest, which resembles a bill of materials. The manifest not only defines the resources that Kubernetes is supposed to create and manage, but also specifies the exact version of the Docker image to use. The Kubernetes manifest is well suited to serve as the artifact that defines the release in Azure Pipelines Release Management.
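The build pipeline pins the image version by replacing a CLOUDDEMO_IMAGE placeholder in the manifest with a fully qualified image reference. The following sketch reproduces that substitution on a made-up manifest fragment. The function name lock_image and the fragment are assumptions for illustration; the repository's actual deployment.yaml is not reproduced here.

```shell
# Sketch only: lock_image and the manifest fragment are illustrative.

# Replace the CLOUDDEMO_IMAGE placeholder with a versioned image reference.
# The reference must not contain "&" or backslashes, which awk treats specially.
lock_image() {
  awk -v image="$1" '{ gsub("CLOUDDEMO_IMAGE", image, $0); print }'
}

# A made-up fragment standing in for the repository's deployment.yaml.
printf 'containers:\n- name: clouddemo\n  image: CLOUDDEMO_IMAGE\n' \
  | lock_image "gcr.io/PROJECT_ID/clouddemo:42"
```

Because the substituted manifest names one specific image tag, deploying the same manifest again always deploys the same build.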

Configure the Kubernetes deployment

To run CloudDemo in Kubernetes, you need the following resources:

- A Deployment that defines a single pod that runs the Docker image produced by the build.

- A NodePort service that makes the pod accessible to a load balancer.

- An Ingress that exposes the application to the public internet by using a Cloud HTTP(S) load balancer.

The repository already contains the following Kubernetes manifest that defines these resources:

.NET/Linux

.NET Framework/Windows

Set up the development and production environments

Before returning to Azure Pipelines Release Management, you need to create the GKE clusters.

Create GKE clusters

Return to your Cloud Shell instance.

Enable the GKE API for your project:

gcloud services enable container.googleapis.com

Create the development cluster by using the following command. Note that it might take a few minutes to complete:

.NET/Linux

gcloud container clusters create azure-pipelines-cicd-dev --enable-ip-alias

.NET Framework/Windows

gcloud container clusters create azure-pipelines-cicd-dev --enable-ip-alias

gcloud container node-pools create azure-pipelines-cicd-dev-win \
    --cluster=azure-pipelines-cicd-dev \
    --image-type=WINDOWS_LTSC \
    --no-enable-autoupgrade \
    --machine-type=n1-standard-2

Create the production cluster by using the following command. Note that it might take a few minutes to complete:

.NET/Linux

gcloud container clusters create azure-pipelines-cicd-prod --enable-ip-alias

.NET Framework/Windows

gcloud container clusters create azure-pipelines-cicd-prod --enable-ip-alias

gcloud container node-pools create azure-pipelines-cicd-prod-win \
    --cluster=azure-pipelines-cicd-prod \
    --image-type=WINDOWS_LTSC \
    --no-enable-autoupgrade \
    --machine-type=n1-standard-2

Connect Azure Pipelines to the development cluster

Just as you can use Azure Pipelines to connect to an external Docker registry like Container Registry, Azure Pipelines supports integrating external Kubernetes clusters.

You can authenticate to Container Registry with a Google Cloud service account, but Azure Pipelines doesn't support Google Cloud service accounts for authenticating with GKE. Instead, you have to use a Kubernetes service account.

To connect Azure Pipelines to your development cluster, you therefore have to create a Kubernetes service account first.

In Cloud Shell, connect to the development cluster:

gcloud container clusters get-credentials azure-pipelines-cicd-dev

Create a Kubernetes service account for Azure Pipelines:

kubectl create serviceaccount azure-pipelines-deploy

Create a Kubernetes secret containing a token credential for Azure Pipelines:

kubectl create secret generic azure-pipelines-deploy-token --type=kubernetes.io/service-account-token --dry-run=client -o yaml \
  | kubectl annotate --local -o yaml -f - kubernetes.io/service-account.name=azure-pipelines-deploy \
  | kubectl apply -f -

Assign the cluster-admin role to the service account by creating a cluster role binding:

kubectl create clusterrolebinding azure-pipelines-deploy --clusterrole=cluster-admin --serviceaccount=default:azure-pipelines-deploy
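To confirm that the role binding took effect, you can ask the API server whether the service account is allowed to create Deployments. This is a minimal sketch with hypothetical helper names (sa_subject, check_deploy_permission). It assumes that your own user is permitted to impersonate service accounts, which is typically the case for the account that created the cluster.

```shell
# Sketch only: sa_subject and check_deploy_permission are hypothetical helper names.

# Kubernetes identifies a service account as system:serviceaccount:NAMESPACE:NAME.
sa_subject() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Ask the API server whether the service account may create Deployments
# (requires kubectl to be connected to the cluster).
check_deploy_permission() {
  kubectl auth can-i create deployments \
      --as="$(sa_subject default azure-pipelines-deploy)"
}

# Print the subject that the check impersonates.
sa_subject default azure-pipelines-deploy
```

Calling check_deploy_permission while connected to the cluster should print yes once the cluster role binding exists.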

Determine the IP address of the cluster:

gcloud container clusters describe azure-pipelines-cicd-dev --format=value\(endpoint\)

You will need this address in a moment.
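The service connection expects the endpoint as an https URL rather than a bare IP address. The sketch below composes that value; the helper names server_url and cluster_server_url are hypothetical, and 203.0.113.10 is a documentation-only example address.

```shell
# Sketch only: server_url and cluster_server_url are hypothetical helper names.

# Turn a cluster endpoint (an IP address) into the Server URL for the service connection.
server_url() {
  printf 'https://%s\n' "$1"
}

# Look up the endpoint of a cluster and print its Server URL
# (requires gcloud and the default zone that was configured earlier).
cluster_server_url() {
  server_url "$(gcloud container clusters describe "$1" --format='value(endpoint)')"
}

# Example with a placeholder address.
server_url 203.0.113.10
```

In Cloud Shell, cluster_server_url azure-pipelines-cicd-dev prints the value to paste into the Server URL field.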

In the Azure DevOps menu, select Project settings and then select Pipelines > Service connections.

Click New service connection.

Select Kubernetes and click Next.

Configure the following settings.

- Authentication method: Service account.

- Server URL: https://PRIMARY_IP. Replace PRIMARY_IP with the IP address that you determined earlier.

- Secret: The Kubernetes Secret that you created earlier. To get the Secret, run the following command, copy the output, and then paste it into the Azure DevOps page.

kubectl get secret azure-pipelines-deploy-token -o yaml

- Service connection name: azure-pipelines-cicd-dev.

Click Save.

Connect Azure Pipelines to the production cluster

To connect Azure Pipelines to your production cluster, you can follow the same approach.

In Cloud Shell, connect to the production cluster:

gcloud container clusters get-credentials azure-pipelines-cicd-prod

Create a Kubernetes service account for Azure Pipelines:

kubectl create serviceaccount azure-pipelines-deploy

Assign the cluster-admin role to the service account by creating a cluster role binding:

kubectl create clusterrolebinding azure-pipelines-deploy --clusterrole=cluster-admin --serviceaccount=default:azure-pipelines-deploy

Determine the IP address of the cluster:

gcloud container clusters describe azure-pipelines-cicd-prod --format=value\(endpoint\)

You will need this address in a moment.

In the Azure DevOps menu, select Project settings and then select Pipelines > Service connections.

Click New service connection.

Select Kubernetes and click Next.

Configure the following settings:

- Authentication method: Service account.

- Server URL: https://PRIMARY_IP. Replace PRIMARY_IP with the IP address that you determined earlier.

- Secret: Run the following command in Cloud Shell and copy the output:

kubectl get secret $(kubectl get serviceaccounts azure-pipelines-deploy -o custom-columns=":secrets[0].name") -o yaml

- Service connection name: azure-pipelines-cicd-prod.

Click Save.
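The Secret lookup in this section relies on the service account listing an automatically generated token Secret. Kubernetes 1.24 and later no longer generate that Secret by default, so on a recent cluster the lookup might return nothing. The following is a minimal sketch of one way to handle that case by reusing the Secret-creation command from the development cluster section. The helper names token_secret_name and ensure_token_secret are hypothetical.

```shell
# Sketch only: token_secret_name and ensure_token_secret are hypothetical helper names.

# Name of the token Secret, following the naming used for the development cluster.
token_secret_name() {
  printf '%s-token\n' "$1"
}

# Create the token Secret for a service account if it does not exist yet,
# then print it (requires kubectl to be connected to the production cluster).
ensure_token_secret() {
  sa="$1"
  secret="$(token_secret_name "$sa")"
  if ! kubectl get secret "$secret" >/dev/null 2>&1; then
    kubectl create secret generic "$secret" \
        --type=kubernetes.io/service-account-token --dry-run=client -o yaml \
      | kubectl annotate --local -o yaml -f - "kubernetes.io/service-account.name=$sa" \
      | kubectl apply -f -
  fi
  kubectl get secret "$secret" -o yaml
}

# Print the Secret name that the helper would use.
token_secret_name azure-pipelines-deploy
```

If the lookup command returns no Secret, running ensure_token_secret azure-pipelines-deploy against the production cluster should print a Secret that can be pasted into the service connection instead.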

Configure the release pipeline

After you set up the GKE infrastructure, you return to Azure Pipelines to automate the deployment, which includes the following:

- Deploying to the development environment.

- Requesting manual approval before initiating a deployment to the production environment.

- Deploying to the production environment.

Create a release definition

As a first step, create a new release definition.

- In the Azure DevOps menu, select Pipelines > Releases.

- Click New pipeline.

- From the list of templates, select Empty job.

- When you're prompted for a name for the stage, enter Development.

- At the top of the screen, name the release CloudDemo-KubernetesEngine.

- In the pipeline diagram, next to Artifacts, click Add.

Select Build and add the following settings:

- Source type: Build

- Source (build pipeline): Select the build definition (there should be only one option)

- Default version: Latest

- Source Alias: manifest

Click Add.

On the Artifact box, click Continuous deployment trigger (the lightning bolt icon) to add a deployment trigger.

Under Continuous deployment trigger, set the switch to Enabled.

Click Save.

Enter a comment if you want, and then confirm by clicking OK.

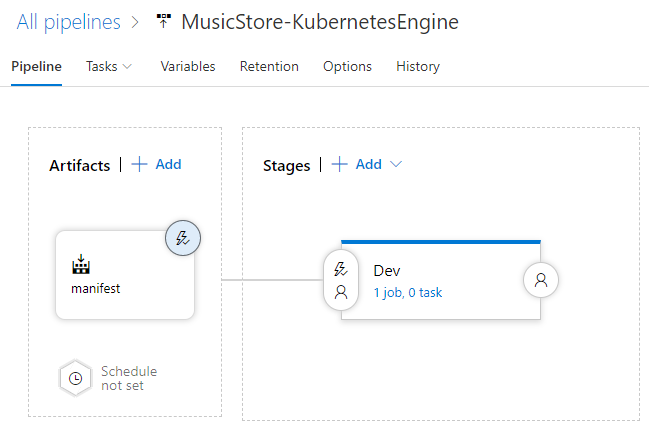

The pipeline displays similar to the following.

Deploy to the development cluster

With the release definition created, you can now configure the deployment to the GKE development cluster.

- In the menu, switch to the Tasks tab.

Click Agent job and configure the following settings:

- Agent pool: Azure Pipelines

- Agent specification: ubuntu-18.04

Next to Agent job, click Add a task to agent job to add a step to the phase.

Select the Deploy to Kubernetes task and click Add.

Click the newly added task and configure the following settings:

- Display name: Deploy

- Action: deploy

- Kubernetes service connection: azure-pipelines-cicd-dev

- Namespace: default

- Strategy: None

- Manifests: manifest/drop/deployment.yaml

Click Save.

Enter a comment if you want, and then confirm by clicking OK.

Deploy to the production cluster

Finally, you configure the deployment to the GKE production cluster.

- In the menu, switch to the Pipeline tab.

- In the Stages box, select Add > New stage.

- From the list of templates, select Empty job.

- When you're prompted for a name for the stage, enter Production.

- Click the lightning bolt icon of the newly created stage.

Configure the following settings:

- Select trigger: After stage

- Stages: Development

- Pre-deployment approvals: (enabled)

- Approvers: Select your own username.

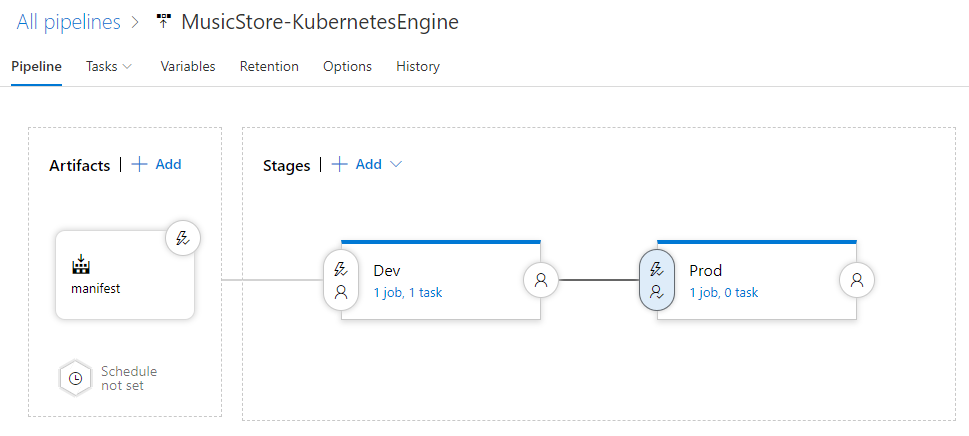

The pipeline now looks like this:

Switch to the Tasks tab.

Hold the mouse over the Tasks tab and select Tasks > Production.

Click Agent job and configure the following settings:

- Agent pool: Azure Pipelines

- Agent specification: ubuntu-18.04

Click Add a task to agent job to add a step to the phase.

Select the Deploy to Kubernetes task and click Add.

Click the newly added task and configure the following settings:

- Display name: Deploy

- Action: deploy

- Kubernetes service connection: azure-pipelines-cicd-prod

- Namespace: default

- Strategy: None

- Manifests: manifest/drop/deployment.yaml

Click Save.

Enter a comment if you want, and then confirm by clicking OK.

Run the pipeline

Now that you've configured the entire pipeline, you can test it by performing a source code change:

On your local computer, open the file Index.cshtml from the Git repository you cloned earlier:

.NET/Linux

The file is located in applications\clouddemo\netcore\CloudDemo.MvcCore\Views\Home\

.NET Framework/Windows

The file is located in applications\clouddemo\net4\CloudDemo.Mvc\Views\Home\

In line 26, change the value of ViewBag.Title from Home Page to This app runs on GKE.

Commit your changes, and then push them to Azure Pipelines.

Visual Studio

- Open Team Explorer and click the Home icon.

- Click Changes.

- Enter a commit message like Change site title.

- Click Commit All and Push.

Command line

Stage all modified files:

git add -A

Commit the changes to the local repository:

git commit -m "Change site title"

Push the changes to Azure Pipelines:

git push

In the Azure DevOps menu, select Pipelines. A build is triggered.

After the build is finished, select Pipelines > Releases. A release process is initiated.

Click Release-1 to open the details page, and wait for the status of the Development stage to switch to Succeeded.

In the Google Cloud console, select Kubernetes Engine > Services & Ingress > Ingress.

Locate the Ingress service for the azure-pipelines-cicd-dev cluster, and wait for its status to switch to Ok. This might take several minutes.

Open the link in the Frontends column of the same row. You might see an error at first because the load balancer takes a few minutes to become available. When it's ready, observe that the CloudDemo has been deployed and is using the custom title.

In Azure Pipelines, click the Approve button located under the Production stage to promote the deployment to the production environment.

If you don't see the button, you might need to first approve or reject a previous release.

Enter a comment if you want, and then confirm by clicking Approve.

Wait for the status of the Production stage to switch to Succeeded. You might need to manually refresh the page in your browser.

In the Google Cloud console, refresh the Services & Ingress page.

Locate the Ingress service for the azure-pipelines-cicd-prod cluster and wait for its status to switch to Ok. This might take several minutes.

Open the link in the Frontends column of the same row. Again, you might see an error at first because the load balancer takes a few minutes to become available. When it's ready, you see the CloudDemo app with the custom title again, this time running in the production cluster.
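Because the load balancer can take several minutes to start serving traffic, polling the frontend address can be more convenient than refreshing the browser. The following is a minimal sketch of such a polling loop. The helper names retry and wait_for_site, the attempt count, and the delay are assumptions chosen for illustration.

```shell
# Sketch only: retry and wait_for_site are hypothetical helper names.

# Run a command up to ATTEMPTS times, pausing DELAY seconds between attempts.
# Returns 0 as soon as the command succeeds, or 1 if every attempt fails.
retry() {
  attempts="$1"
  delay="$2"
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Poll a URL until it answers with a successful HTTP status (requires curl).
# 30 attempts with a 20-second pause cover roughly ten minutes.
wait_for_site() {
  retry 30 20 curl --silent --fail --output /dev/null "$1"
}

# Demonstrate the helper with a command that always succeeds.
retry 3 0 true && echo "retry succeeded"
```

To use it, pass the address shown in the Frontends column to wait_for_site, for example wait_for_site http://FRONTEND_IP/, where FRONTEND_IP is a placeholder for your Ingress address.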