This page describes how to run deep-learning tasks, such as image recognition and natural language processing, and other compute-intensive tasks on node pools with NVIDIA graphics processing unit (GPU) hardware accelerators that provide compute power to your Knative serving container instances.

Adding a node pool with GPUs to your GKE cluster

Have an administrator create a node pool with GPUs:

Setting up your service to consume GPUs

You can specify a resource limit to consume GPUs for your service by using the Google Cloud console or the Google Cloud CLI when you deploy a new service, update an existing service, or deploy a revision:

Console

- Go to Knative serving

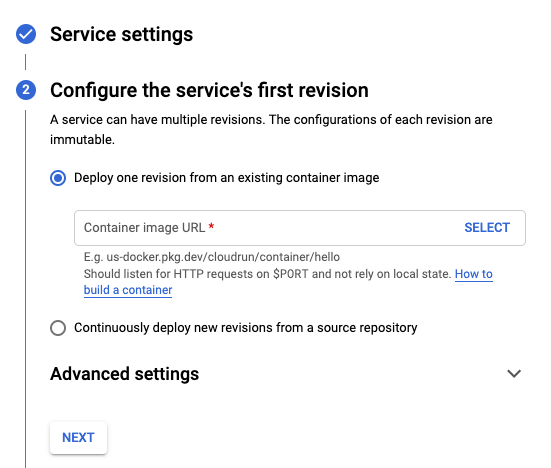

Click Create service to display the Create service form.

In the Service settings section:

- Select the GKE cluster with the GPU-enabled node pool.

- Specify the name you want to give to your service.

- Click Next to continue to the next section.

In the Configure the service's first revision section:

- Add a container image URL.

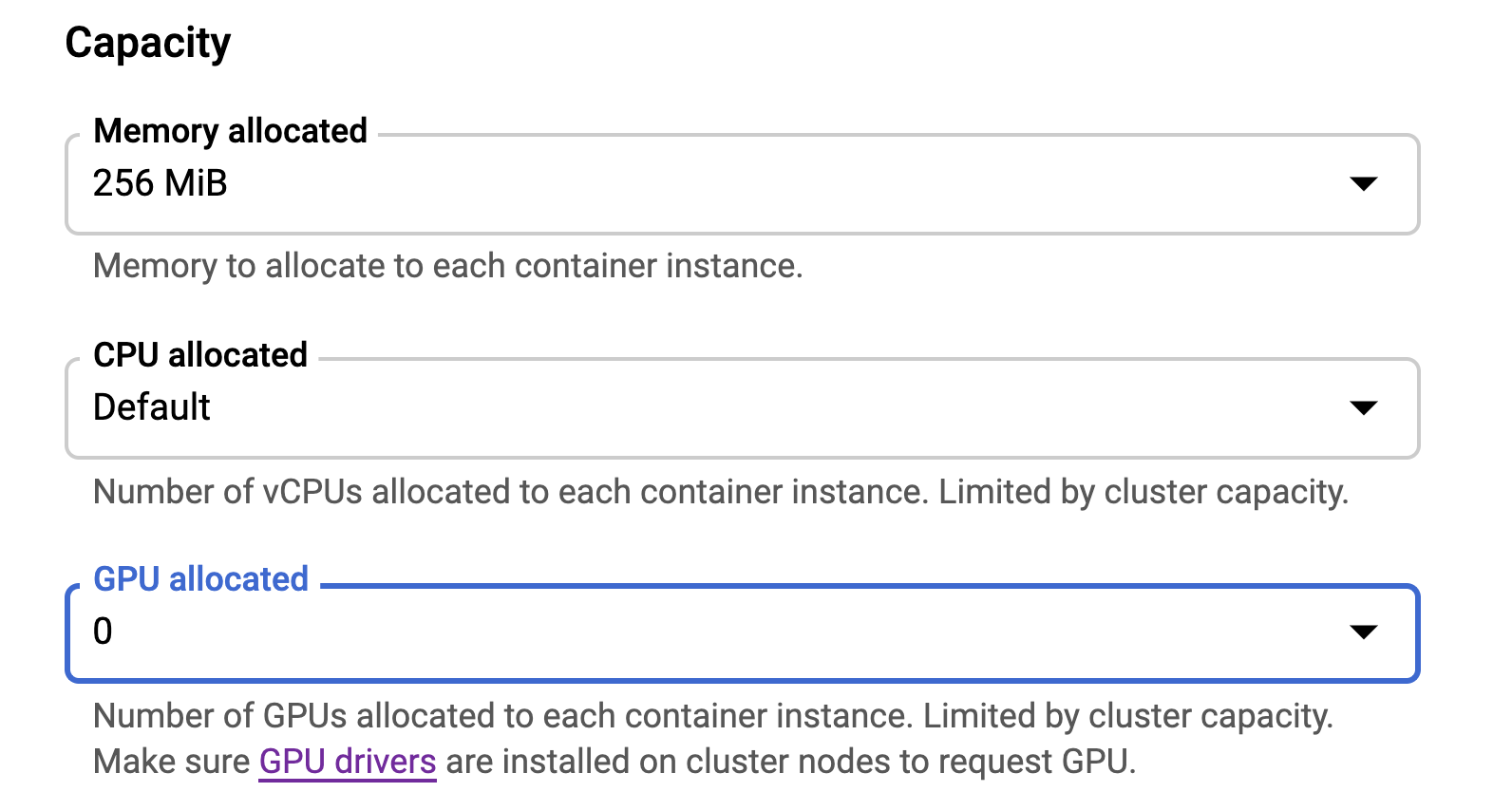

- Click Advanced settings and, in the GPU allocated menu, select the number of GPUs that you want to allocate to your service.

Click Next to continue to the next section.

In the Configure how this service is triggered section, select which connectivity you would like to use to invoke the service.

Click Create to deploy the image to Knative serving and wait for the deployment to finish.

Command line

You can download the configuration of an existing service into a YAML file with the gcloud run services describe command by using the --format=export flag. You can then modify that YAML file and deploy those changes with the gcloud beta run services replace command.

You must ensure that you modify only the specified attributes.

Download the configuration of your service into a file named service.yaml in your local workspace:

gcloud run services describe SERVICE --format export > service.yaml

Replace SERVICE with the name of your Knative serving service.

In your local file, update the nvidia.com/gpu attribute:

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: SERVICE_NAME
    spec:
      template:
        spec:
          containers:
          - image: IMAGE_URL
            resources:
              limits:
                nvidia.com/gpu: "GPU_UNITS"

Replace GPU_UNITS with the desired GPU value in Kubernetes GPU units. For example, specify 1 for 1 GPU.

Deploy the YAML file and replace your service with the new configuration by running the following command:

gcloud beta run services replace service.yaml
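Before you run the replace command, it can help to confirm that the edited file really contains a usable GPU limit. The following is a minimal sketch, not part of the official tooling: check_gpu_limit is a hypothetical helper name, and it assumes the limit sits on a single nvidia.com/gpu: line with no trailing comment. It is a plain-text check, not a YAML parser.

```shell
# check_gpu_limit FILE
# Hypothetical helper: prints the nvidia.com/gpu value found in FILE.
# Returns non-zero if the limit is missing or is not a positive integer.
check_gpu_limit() {
  local file="$1"
  local value

  # Take the first nvidia.com/gpu line, drop everything up to the key,
  # then strip quotes and spaces so that "1" becomes 1.
  value="$(grep -m1 'nvidia.com/gpu:' "$file" \
    | sed 's/.*nvidia\.com\/gpu://' \
    | tr -d "\"' ")"

  # Reject an empty value or anything that is not made of digits only.
  case "$value" in
    ''|*[!0-9]*)
      echo "No valid nvidia.com/gpu limit found in $file" >&2
      return 1
      ;;
  esac

  # A limit of 0 would not request any GPU.
  if [ "$value" -lt 1 ]; then
    echo "nvidia.com/gpu limit in $file must be at least 1" >&2
    return 1
  fi

  echo "$value"
}
```

One possible use is to chain the check with the deployment, so that the service is replaced only when the check passes: check_gpu_limit service.yaml && gcloud beta run services replace service.yaml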

For more information on GPU performance and cost, see GPUs.