This document describes how to enable Model Armor for NotebookLM Enterprise. Model Armor is a Google Cloud service that enhances the security and safety of your AI applications by proactively screening the prompts sent to NotebookLM Enterprise and the responses it returns. This helps protect against a range of risks and supports responsible AI practices. Model Armor is supported on NotebookLM Enterprise at no additional cost.

Model Armor's response to potential issues in user queries or responses from NotebookLM Enterprise is governed by the template's enforcement type.

If the enforcement type is Inspect and block, NotebookLM Enterprise blocks the request and displays an error message. This is the default enforcement type when you create a Model Armor template using the console.

If the enforcement type is Inspect only, NotebookLM Enterprise does not block the requests or responses.

For more information, see Define the enforcement type.

Before you begin

Make sure you have the required roles assigned to you:

- To enable Model Armor in NotebookLM Enterprise, you need the Discovery Engine Admin (roles/discoveryengine.admin) role.
- To create the Model Armor templates, you need the Model Armor Admin (roles/modelarmor.admin) role.
- To call the Model Armor APIs, you need the Model Armor User (roles/modelarmor.user) role.
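If you administer the project, you can grant these roles with the gcloud CLI command `gcloud projects add-iam-policy-binding`. The following dry-run sketch only prints one command per role so that you can review them before running them. The `PROJECT_ID` and `PRINCIPAL` environment variables, and their defaults `my-project` and `user:admin@example.com`, are placeholders introduced for this example.

```shell
#!/bin/sh
# Dry-run sketch: print (do not run) the gcloud commands that would grant
# the roles listed above. PROJECT_ID and PRINCIPAL are placeholders.
PROJECT_ID="${PROJECT_ID:-my-project}"
PRINCIPAL="${PRINCIPAL:-user:admin@example.com}"

print_grant_commands() {
  for role in roles/discoveryengine.admin roles/modelarmor.admin roles/modelarmor.user; do
    # One binding command per role.
    echo "gcloud projects add-iam-policy-binding $PROJECT_ID --member=$PRINCIPAL --role=$role"
  done
}

print_grant_commands
```

Printing instead of executing keeps the sketch safe to run; copy the lines you need once the placeholders are replaced.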

Create a Model Armor template

You can use a single Model Armor template for both user prompts and assistant responses, or you can create two separate Model Armor templates. For more information, see Create and manage Model Armor templates.

When creating a Model Armor template for NotebookLM Enterprise, consider these configurations:

- Select Multi-region in the Regions field. The following table shows how to map NotebookLM Enterprise regions to Model Armor template regions:

| NotebookLM Enterprise multi-region | Model Armor multi-region |
|---|---|
| Global | US (multiple regions in United States) or EU (multiple regions in the European Union) |
| US (multiple regions in United States) | US (multiple regions in United States) |
| EU (multiple regions in the European Union) | EU (multiple regions in the European Union) |

- Google does not recommend configuring Cloud Logging in the Model Armor template for NotebookLM Enterprise. This configuration can expose sensitive data to users with the Private Logs Viewer (roles/logging.privateLogViewer) IAM role. Instead, consider the following options:

  - If you need to log the data that passes through the Model Armor template, reroute the logs to secure storage such as BigQuery, which offers stricter access controls. For more information, see Route logs to supported destinations.
  - Configure Data Access audit logs to analyze and report on the request and response screening verdicts generated by Model Armor. For more information, see Configure audit logs.
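The region mapping in the table can be expressed as a small lookup, for example to validate a deployment script before you create a template. This is a sketch: the lowercase location IDs `global`, `us`, and `eu` are assumptions about how your scripts name the regions, and `model_armor_regions_for` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch of the region mapping table: given a NotebookLM Enterprise
# location (global, us, or eu), print the Model Armor multi-region(s)
# that a template for it can use.
model_armor_regions_for() {
  case "$1" in
    global) echo "us eu" ;;  # Global can use either multi-region
    us)     echo "us" ;;
    eu)     echo "eu" ;;
    *)      echo "unknown NotebookLM Enterprise location: $1" >&2
            return 1 ;;
  esac
}

model_armor_regions_for global
```

Returning a non-zero status for unknown values lets a calling script stop early instead of creating a template in the wrong region.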

Configure NotebookLM Enterprise with the Model Armor templates

The following steps describe how to add the Model Armor templates to NotebookLM Enterprise.

REST

To add the Model Armor templates to NotebookLM Enterprise, run the following command:

curl -X PATCH \
  -H "Authorization: Bearer $(gcloud auth print-access-token)" \
  -H "Content-Type: application/json" \
  -H "X-Goog-User-Project: PROJECT_ID" \
  "https://ENDPOINT_LOCATION-discoveryengine.googleapis.com/v1alpha/projects/PROJECT_NUMBER?update_mask=customerProvidedConfig" \
  -d '{
    "customerProvidedConfig": {
      "notebooklmConfig": {
        "modelArmorConfig": {
          "userPromptTemplate": "QUERY_PROMPT_TEMPLATE",
          "responseTemplate": "RESPONSE_PROMPT_TEMPLATE"
        }
      }
    }
  }'

Replace the following:

- PROJECT_ID: the ID of your Google Cloud project.
- PROJECT_NUMBER: the number of your Google Cloud project.
- ENDPOINT_LOCATION: the multi-region for your API request. Assign one of the following values:
  - us for the US multi-region
  - eu for the EU multi-region
  - global for the Global location
- QUERY_PROMPT_TEMPLATE: the resource name of the Model Armor template that screens user prompts.
- RESPONSE_PROMPT_TEMPLATE: the resource name of the Model Armor template that screens responses. This can be the same template as QUERY_PROMPT_TEMPLATE.

To get a resource name, follow the steps in the View a Model Armor template documentation, and copy the Resource name value.
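To avoid quoting mistakes when filling in the placeholders, you might assemble the URL and request body in a script. The sketch below assumes lowercase ENDPOINT_LOCATION values (`us`, `eu`, or `global`) and a template resource name of the form `projects/PROJECT_ID/locations/LOCATION/templates/TEMPLATE_ID`; confirm the exact value against the Resource name shown for your template. `build_url`, `build_body`, and all default values are hypothetical.

```shell
#!/bin/sh
# Sketch: assemble the URL and JSON body for the PATCH request that adds
# the Model Armor templates. All defaults are placeholders; the template
# resource name format is an assumption to check in the console.
ENDPOINT_LOCATION="${ENDPOINT_LOCATION:-us}"   # us, eu, or global
PROJECT_NUMBER="${PROJECT_NUMBER:-123456789012}"
PROMPT_TEMPLATE="${PROMPT_TEMPLATE:-projects/my-project/locations/us/templates/prompt-template}"
RESPONSE_TEMPLATE="${RESPONSE_TEMPLATE:-projects/my-project/locations/us/templates/response-template}"

build_url() {
  echo "https://${ENDPOINT_LOCATION}-discoveryengine.googleapis.com/v1alpha/projects/${PROJECT_NUMBER}?update_mask=customerProvidedConfig"
}

build_body() {
  # Same structure as the request body in the curl command.
  printf '{"customerProvidedConfig":{"notebooklmConfig":{"modelArmorConfig":{"userPromptTemplate":"%s","responseTemplate":"%s"}}}}\n' \
    "$PROMPT_TEMPLATE" "$RESPONSE_TEMPLATE"
}

build_url
build_body
```

You can then pass the results to curl, for example `curl -X PATCH -H "Authorization: Bearer $(gcloud auth print-access-token)" -H "Content-Type: application/json" -H "X-Goog-User-Project: PROJECT_ID" "$(build_url)" -d "$(build_body)"`.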

Test if the Model Armor template is enabled

After configuring the Model Armor template, test if NotebookLM Enterprise proactively screens and blocks user prompts and responses according to the confidence levels set in the Model Armor filters.

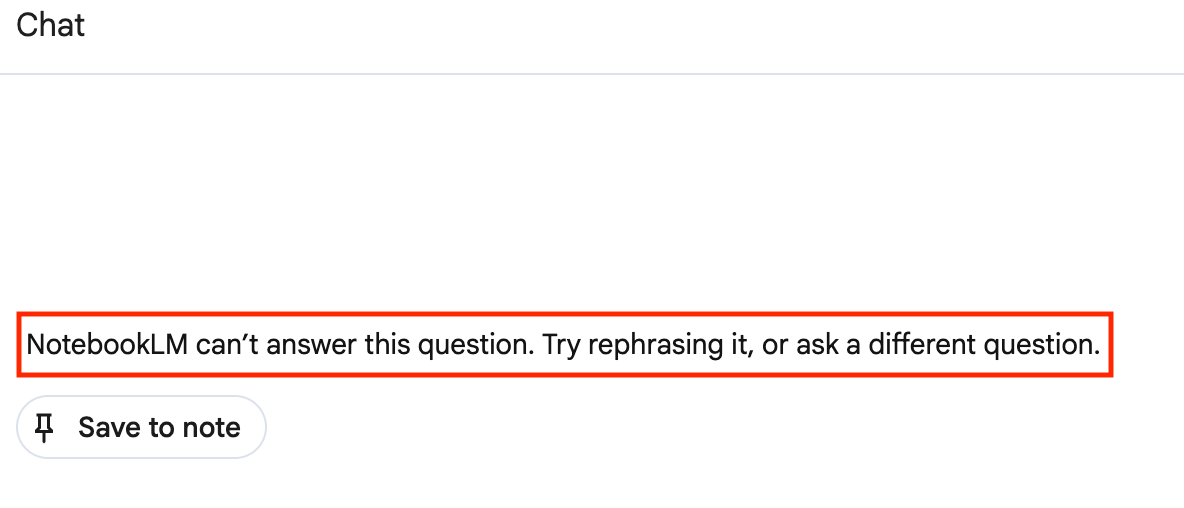

When the Model Armor template is configured to Inspect and block requests that violate the policy, the following policy violation message is shown:

Console

For example, you see the policy violation message:

REST

The JSON response includes fields like the following:

"answer.state": "SKIPPED",
"answer.assist_skipped_reasons": "CUSTOMER_POLICY_VIOLATION_REASON"
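When testing through the API, you can check a saved response for these values with a simple text match. This sketch uses `grep` rather than a JSON parser, so it only looks for the strings `"SKIPPED"` and `CUSTOMER_POLICY_VIOLATION_REASON` and makes no assumption about the full structure of the response. `was_blocked_by_model_armor` is a hypothetical helper, and the sample input simply reuses the fields shown above.

```shell
#!/bin/sh
# Sketch: report whether a saved JSON response looks like a Model Armor
# block. This is a plain text match, not JSON parsing.
was_blocked_by_model_armor() {
  # $1: path to a file that contains the JSON response
  grep -q '"SKIPPED"' "$1" && grep -q 'CUSTOMER_POLICY_VIOLATION_REASON' "$1"
}

# Demo input built from the fields shown in the example response.
sample="$(mktemp)"
printf '%s\n' '{"answer.state": "SKIPPED", "answer.assist_skipped_reasons": "CUSTOMER_POLICY_VIOLATION_REASON"}' > "$sample"
if was_blocked_by_model_armor "$sample"; then
  echo "blocked by Model Armor"
else
  echo "not blocked"
fi
rm -f "$sample"
```

A text match is enough for a quick smoke test; for anything stricter, parse the response with a JSON tool of your choice.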

Remove the Model Armor templates from NotebookLM Enterprise

REST

To remove the Model Armor templates from NotebookLM Enterprise, run the following command:

curl -X PATCH \
  -H "Authorization: Bearer $(gcloud auth print-access-token)" \
  -H "Content-Type: application/json" \
  -H "X-Goog-User-Project: PROJECT_ID" \
  "https://ENDPOINT_LOCATION-discoveryengine.googleapis.com/v1alpha/projects/PROJECT_NUMBER?update_mask=customer_provided_config" \
  -d '{
    "customer_provided_config": {
      "notebooklm_config": {}
    }
  }'

Replace the following:

- PROJECT_ID: the ID of your Google Cloud project.
- PROJECT_NUMBER: the number of your Google Cloud project.
- ENDPOINT_LOCATION: the multi-region for your API request. Assign one of the following values:
  - us for the US multi-region
  - eu for the EU multi-region
  - global for the Global location
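Because this request replaces `customer_provided_config` with an empty `notebooklm_config`, you may want to review the exact command before running it. The dry-run sketch below prints the removal command with placeholder values filled in and executes nothing; `print_remove_command` and its default values are illustrative only.

```shell
#!/bin/sh
# Dry-run sketch: print the removal request with placeholders filled in.
# Nothing is executed; the access-token substitution is printed literally.
ENDPOINT_LOCATION="${ENDPOINT_LOCATION:-us}"   # us, eu, or global
PROJECT_ID="${PROJECT_ID:-my-project}"
PROJECT_NUMBER="${PROJECT_NUMBER:-123456789012}"

print_remove_command() {
  url="https://${ENDPOINT_LOCATION}-discoveryengine.googleapis.com/v1alpha/projects/${PROJECT_NUMBER}?update_mask=customer_provided_config"
  body='{"customer_provided_config":{"notebooklm_config":{}}}'
  printf '%s\n' "curl -X PATCH -H \"Authorization: Bearer \$(gcloud auth print-access-token)\" -H \"Content-Type: application/json\" -H \"X-Goog-User-Project: ${PROJECT_ID}\" \"${url}\" -d '${body}'"
}

print_remove_command
```

Once the printed command looks right, you can paste it into a shell to send the request.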

Configure audit logs

Model Armor records Data Access audit logs that you can use to analyze the request and response screening verdicts. These logs don't contain the user queries or responses from NotebookLM Enterprise, so they are safe for reporting and analytics. For more information, see Audit logging for Model Armor.

To access these logs, you need the Private Logs Viewer (roles/logging.privateLogViewer) IAM role.

Enable Data Access audit logs

To enable the Data Access audit logs, follow these steps:

In the Google Cloud console, go to IAM & Admin > Audit Logs.

Select the Model Armor API.

In the Permission type section, select the Data read permission type.

Click Save.
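The console steps above update the project's IAM policy. As a sketch of the equivalent policy change, the `auditConfigs` entry below enables `DATA_READ` logs for the Model Armor API, assuming its service name is `modelarmor.googleapis.com`. `print_model_armor_audit_config` is a hypothetical helper that only prints the YAML fragment.

```shell
#!/bin/sh
# Sketch: print an auditConfigs entry that enables Data Access (DATA_READ)
# audit logs for the Model Armor API. One workflow: export the policy with
#   gcloud projects get-iam-policy PROJECT_ID --format=yaml > policy.yaml
# merge this entry, then apply it with
#   gcloud projects set-iam-policy PROJECT_ID policy.yaml
# The service name modelarmor.googleapis.com is an assumption to verify.
print_model_armor_audit_config() {
  cat <<'EOF'
auditConfigs:
- auditLogConfigs:
  - logType: DATA_READ
  service: modelarmor.googleapis.com
EOF
}

print_model_armor_audit_config
```

If the exported policy already contains an `auditConfigs` list, add the entry to that list instead of adding a second key. Keep the `etag` from the exported file so that `set-iam-policy` rejects the update if the policy changed in the meantime.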

Examine Data Access audit logs

To examine the Data Access audit logs, follow these steps:

In the Google Cloud console, go to Logs Explorer.

Search the logs for the following method names:

- google.cloud.modelarmor.v1.ModelArmor.SanitizeUserPrompt: to view the user requests that were screened.
- google.cloud.modelarmor.v1.ModelArmor.SanitizeModelResponse: to view the responses that were screened.
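Instead of searching by hand, you can paste a query into Logs Explorer or pass it to `gcloud logging read`. The sketch below builds such a filter. It assumes the entries are written to the project's Data Access audit log (`cloudaudit.googleapis.com%2Fdata_access`) and that the method names appear in `protoPayload.methodName`; `PROJECT_ID` and `model_armor_log_filter` are placeholders for this example.

```shell
#!/bin/sh
# Sketch: build a Logging query filter for the two Model Armor methods.
# The "/" in the audit log ID is URL-encoded as %2F in logName.
PROJECT_ID="${PROJECT_ID:-my-project}"

model_armor_log_filter() {
  printf '%s' "logName=\"projects/${PROJECT_ID}/logs/cloudaudit.googleapis.com%2Fdata_access\""
  printf '%s' " AND (protoPayload.methodName=\"google.cloud.modelarmor.v1.ModelArmor.SanitizeUserPrompt\""
  printf '%s\n' " OR protoPayload.methodName=\"google.cloud.modelarmor.v1.ModelArmor.SanitizeModelResponse\")"
}

model_armor_log_filter
```

For example, `gcloud logging read "$(model_armor_log_filter)" --project="$PROJECT_ID" --limit=20` prints the 20 most recent matching entries.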